dlibの物体検出は、簡単に使えるし、素晴らしい性能ですが、時々誤検出が発生することもあります。このブログで紹介されているdeep learningを利用した物体検出は、HOGの検出器よりも性能よさそうなので試してみました。

dnn_mmod_ex.cpp

学習データからモデルをトレーニングするサンプルです。ビルド方法はこちらを参考に。

さて、このサンプルを実行した時、私のようにGPUがしょぼい環境だと、以下のようなエラーが出ると思います。

Error while calling cudaMalloc(&data, new_size*sizeof(float)) in file

/Users/mkisono/work/dlib/dlib/dnn/gpu_data.cpp:191. code: 2,

reason: out of memory

このサンプルを動かすためには、5GB以上のRAMが必要みたいです。私が使っているiMacのGPUはメモリが1GBしかありません。

/Users/mkisono/NVIDIA_CUDA-8.0_Samples/1_Utilities/deviceQuery/deviceQuery Starting...

CUDA Device Query (Runtime API) version (CUDART static linking)

Detected 1 CUDA Capable device(s)

Device 0: "GeForce GT 755M"

CUDA Driver Version / Runtime Version 8.0 / 8.0

CUDA Capability Major/Minor version number: 3.0

Total amount of global memory: 1024 MBytes (1073283072 bytes)

( 2) Multiprocessors, (192) CUDA Cores/MP: 384 CUDA Cores

GPU Max Clock rate: 1085 MHz (1.09 GHz)

Memory Clock rate: 2500 Mhz

Memory Bus Width: 128-bit

L2 Cache Size: 262144 bytes

Maximum Texture Dimension Size (x,y,z) 1D=(65536), 2D=(65536, 65536), 3D=(4096, 4096, 4096)

Maximum Layered 1D Texture Size, (num) layers 1D=(16384), 2048 layers

Maximum Layered 2D Texture Size, (num) layers 2D=(16384, 16384), 2048 layers

Total amount of constant memory: 65536 bytes

Total amount of shared memory per block: 49152 bytes

Total number of registers available per block: 65536

Warp size: 32

Maximum number of threads per multiprocessor: 2048

Maximum number of threads per block: 1024

Max dimension size of a thread block (x,y,z): (1024, 1024, 64)

Max dimension size of a grid size (x,y,z): (2147483647, 65535, 65535)

Maximum memory pitch: 2147483647 bytes

Texture alignment: 512 bytes

Concurrent copy and kernel execution: Yes with 1 copy engine(s)

Run time limit on kernels: Yes

Integrated GPU sharing Host Memory: No

Support host page-locked memory mapping: Yes

Alignment requirement for Surfaces: Yes

Device has ECC support: Disabled

Device supports Unified Addressing (UVA): Yes

Device PCI Domain ID / Bus ID / location ID: 0 / 1 / 0

Compute Mode:

< Default (multiple host threads can use ::cudaSetDevice() with device simultaneously) >

deviceQuery, CUDA Driver = CUDART, CUDA Driver Version = 8.0, CUDA Runtime Version = 8.0, NumDevs = 1, Device0 = GeForce GT 755M

Result = PASS

バッチサイズを小さくして対応します。cropperで指定している150を5に変更しました(これ以上の値だとエラーになった・・)

while(trainer.get_learning_rate() >= 1e-4)

{

// cropper(150, images_train, face_boxes_train, mini_batch_samples, mini_batch_labels);

cropper(5, images_train, face_boxes_train, mini_batch_samples, mini_batch_labels);

// We can also randomly jitter the colors and that often helps a detector

// generalize better to new images.

for (auto&& img : mini_batch_samples)

disturb_colors(img, rnd);

trainer.train_one_step(mini_batch_samples, mini_batch_labels);

}

一つのステップで処理するバッチがぐっと小さくなったので、learning rateを下げる閾値は大きくしておきます。この値を変更しないと、直ぐにlearning rateが小さくなってしまい、モデルの学習がうまくいきません。元は 300 だった値を 3000 に変更しました(もっと大きい値でもいいかも)。

trainer.set_iterations_without_progress_threshold(3000);

これでとりあえず学習は始まりました。とはいえ、どれくらい時間がかかるのか分からないので中断しました。サンプルでは5分ごとにモデルが保存されているので、次に学習を再開した時はそこから続きが出来ます。

% ./dnn_mmod_ex ../faces

num training images: 4

num testing images: 5

detection window width,height: 40,40

overlap NMS IOU thresh: 0.0781701

overlap NMS percent covered thresh: 0.257122

step#: 0 learning rate: 0.1 average loss: 0 steps without apparent progress: 0

step#: 312 learning rate: 0.1 average loss: 3.70172 steps without apparent progress: 81

step#: 625 learning rate: 0.1 average loss: 1.93546 steps without apparent progress: 122

step#: 941 learning rate: 0.1 average loss: 1.72469 steps without apparent progress: 325

step#: 1242 learning rate: 0.1 average loss: 1.6436 steps without apparent progress: 336

step#: 1547 learning rate: 0.1 average loss: 1.55475 steps without apparent progress: 262

step#: 1859 learning rate: 0.1 average loss: 1.55434 steps without apparent progress: 594

step#: 2171 learning rate: 0.1 average loss: 1.52154 steps without apparent progress: 121

Saved state to mmod_sync

step#: 2482 learning rate: 0.1 average loss: 1.41587 steps without apparent progress: 244

step#: 2792 learning rate: 0.1 average loss: 1.30095 steps without apparent progress: 313

step#: 3105 learning rate: 0.1 average loss: 1.13682 steps without apparent progress: 259

step#: 3401 learning rate: 0.1 average loss: 0.979448 steps without apparent progress: 186

step#: 3712 learning rate: 0.1 average loss: 0.906737 steps without apparent progress: 273

step#: 4018 learning rate: 0.1 average loss: 0.809688 steps without apparent progress: 194

step#: 4322 learning rate: 0.1 average loss: 0.781587 steps without apparent progress: 224

Saved state to mmod_sync

step#: 4620 learning rate: 0.1 average loss: 0.727887 steps without apparent progress: 553

step#: 4936 learning rate: 0.1 average loss: 0.654706 steps without apparent progress: 145

step#: 5249 learning rate: 0.1 average loss: 0.588801 steps without apparent progress: 180

step#: 5560 learning rate: 0.1 average loss: 0.580081 steps without apparent progress: 574

step#: 5872 learning rate: 0.1 average loss: 0.599059 steps without apparent progress: 909

step#: 6182 learning rate: 0.1 average loss: 0.504902 steps without apparent progress: 395

step#: 6495 learning rate: 0.1 average loss: 0.537297 steps without apparent progress: 753

step#: 6808 learning rate: 0.1 average loss: 0.539641 steps without apparent progress: 1104

Saved state to mmod_sync

step#: 7118 learning rate: 0.1 average loss: 0.503599 steps without apparent progress: 1350

step#: 7428 learning rate: 0.1 average loss: 0.486274 steps without apparent progress: 578

step#: 7746 learning rate: 0.1 average loss: 0.479272 steps without apparent progress: 892

step#: 8059 learning rate: 0.1 average loss: 0.448152 steps without apparent progress: 548

step#: 8374 learning rate: 0.1 average loss: 0.462102 steps without apparent progress: 519

step#: 8684 learning rate: 0.1 average loss: 0.460537 steps without apparent progress: 1184

step#: 8996 learning rate: 0.1 average loss: 0.474958 steps without apparent progress: 1592

Saved state to mmod_sync

step#: 9312 learning rate: 0.1 average loss: 0.424878 steps without apparent progress: 1453

step#: 9627 learning rate: 0.1 average loss: 0.421029 steps without apparent progress: 86

step#: 9943 learning rate: 0.1 average loss: 0.445149 steps without apparent progress: 956

step#: 10257 learning rate: 0.1 average loss: 0.407989 steps without apparent progress: 1087

step#: 10570 learning rate: 0.1 average loss: 0.44248 steps without apparent progress: 1576

step#: 10884 learning rate: 0.1 average loss: 0.46317 steps without apparent progress: 2187

step#: 11194 learning rate: 0.1 average loss: 0.431704 steps without apparent progress: 2360

step#: 11502 learning rate: 0.1 average loss: 0.404676 steps without apparent progress: 2509

dnn_mmod_face_detection_ex.cpp

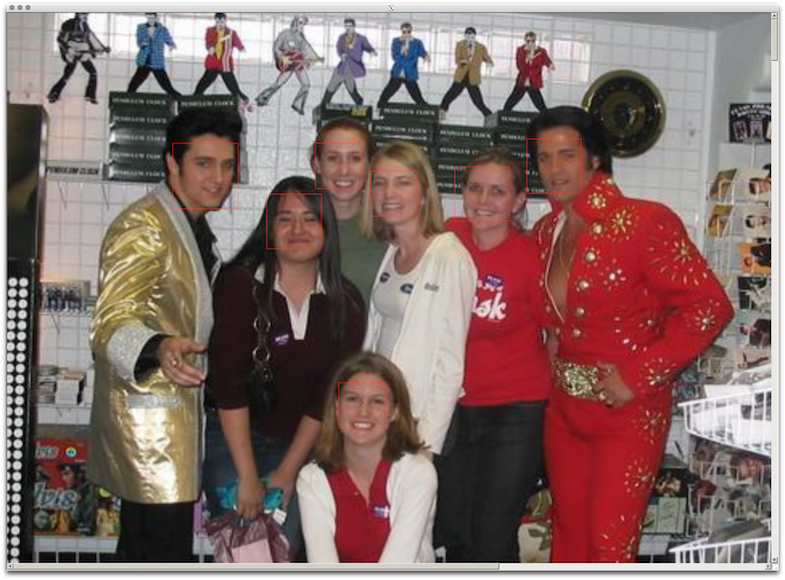

顔検出のサンプルです。学習済みのデータをダウンロードして使います。

% ./dnn_mmod_face_detection_ex

Call this program like this:

./dnn_mmod_face_detection_ex mmod_human_face_detector.dat faces/*.jpg

You can get the mmod_human_face_detector.dat file from:

http://dlib.net/files/mmod_human_face_detector.dat.bz2

実行してみると、またメモリ不足。

% ./dnn_mmod_face_detection_ex mmod_human_face_detector.dat ../faces/*.jpg

Error while calling cudaMalloc(&backward_filters_workspace, backward_filters_workspace_size_in_bytes) in file /Users/mkisono/work/dlib/dlib/dnn/cudnn_dlibapi.cpp:948. code: 2, reason: out of memory

諦めて、CUDAを外してサンプルをビルド仕直し(cmakeで -DDLIB_USE_CUDA=OFF を追加)実行しました。

その場合も、画像の拡大率をやや抑えないと実行できませんでした。

while(img.size() < 1000*1000)

pyramid_up(img);

ちなみに、dnn_mmod_ex.cppで学習させたモデルを dnn_mmod_face_detection_ex.cpp で使う場合は、モデルの定義が違うのでそのままでは動きません。dnn_mmod_face_detection_ex.cpp に記載がある通りです。

TRAINING THE MODEL

Finally, users interested in how the face detector was trained should

read the dnn_mmod_ex.cpp example program. It should be noted that the

face detector used in this example uses a bigger training dataset and

larger CNN architecture than what is shown in dnn_mmod_ex.cpp, but

otherwise training is the same. If you compare the net_type statements

in this file and dnn_mmod_ex.cpp you will see that they are very similar

except that the number of parameters has been increased.

感想

GPUがあれば非常に高速に処理できるし、性能もバッチリな感じがします。

これがPythonから使えたらどんなに便利か・・・ 対応予定は無さそうですが、モデルを使ったpredictがPythonから出来るだけでもうれしいな。

Pythonから使う (追記)

boost::python 使えばできるかなと思い、試作を始めたところでこれを見つけました。pybind11なるものを使ってPythonバインディングしている先人がおりました。これを参考にしてやってみたら出来ました。

ちなみに、dlibのPythonバインディング自体をpybind11にしようかという話題もあります。

pybind11は初めて使ってみましたが、boost:pythonより良さそうに思いました。