[前回] 自然言語処理モデルBERTの検証(3)-GLUEベンチマーク(その1)

はじめに

前回は、英語の言語理解ベンチマークであるGLUE(General Language Understanding Evaluation)について、

タスクCoLAの文法チェックを検証してみました。

今回は、他のタスクも試してみます。

GLUEタスクの種類

- MNLI: 2つの入力文が意味的に含意/矛盾/中立か判定

- QQP: 2つの質問文の意味が等価か判定

- QNLI: Q&A

- SST-2: 映画レビューの感情解析(ポジティブ、ネガティブ)

- CoLA: 入力文が英語文法として正しいか判定

- STS-B: ニュースの見出し文の類似度を5段階で評定

- MRPC: 2つの文が等しいか否かを判定

- RTE: 2つの入力文の含意を判定

- SQuAD: Q&A

- NER: 語の役割(人/組織/場所など)を特定

- SWAG: 入力文に後続する文を4つの選択肢から選ぶ

※ 自然言語処理で含意関係とは、文T(Text)とH(Hypothesis)において、Tが正しい場合にHも正しいと推定できる関係

GLUEタスクのデータセット

以下で入手できます。

https://gluebenchmark.com/tasks

SST-2: 映画レビューの感情解析(ポジティブ、ネガティブ)

前回自然言語処理モデルBERTの検証(3)-GLUEベンチマークの手順で、

下記より前の手順は変わりませんので、そのまま実行しておきます。

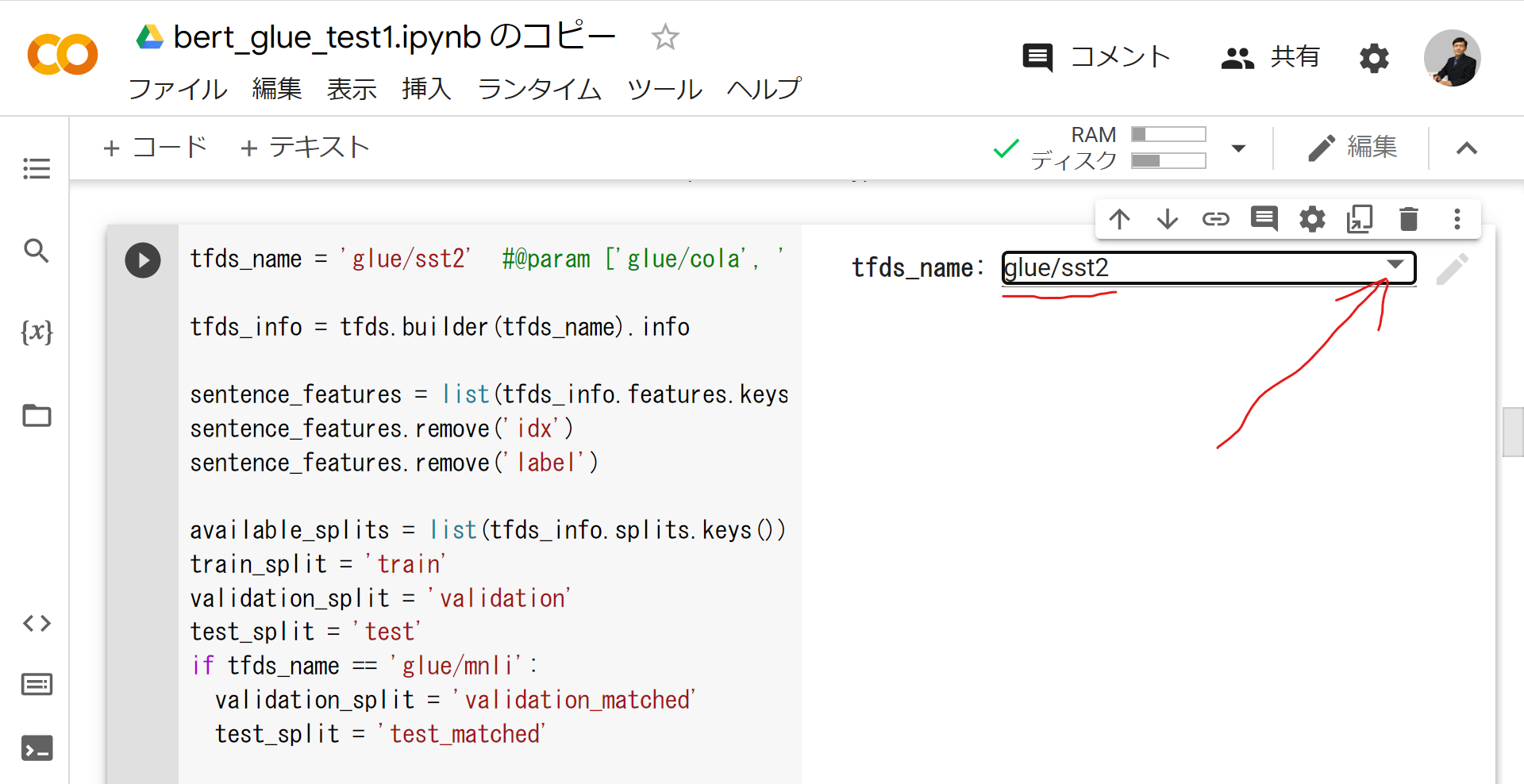

GLUEからタスクを選択

tfds_name = 'glue/sst2' #@param ['glue/cola', 'glue/sst2', 'glue/mrpc', 'glue/qqp', 'glue/mnli', 'glue/qnli', 'glue/rte', 'glue/wnli']

tfds_info = tfds.builder(tfds_name).info

sentence_features = list(tfds_info.features.keys())

sentence_features.remove('idx')

sentence_features.remove('label')

available_splits = list(tfds_info.splits.keys())

train_split = 'train'

validation_split = 'validation'

test_split = 'test'

if tfds_name == 'glue/mnli':

validation_split = 'validation_matched'

test_split = 'test_matched'

num_classes = tfds_info.features['label'].num_classes

num_examples = tfds_info.splits.total_num_examples

print(f'Using {tfds_name} from TFDS')

print(f'This dataset has {num_examples} examples')

print(f'Number of classes: {num_classes}')

print(f'Features {sentence_features}')

print(f'Splits {available_splits}')

with tf.device('/job:localhost'):

# batch_size=-1 is a way to load the dataset into memory

in_memory_ds = tfds.load(tfds_name, batch_size=-1, shuffle_files=True)

# The code below is just to show some samples from the selected dataset

print(f'Here are some sample rows from {tfds_name} dataset')

sample_dataset = tf.data.Dataset.from_tensor_slices(in_memory_ds[train_split])

labels_names = tfds_info.features['label'].names

print(labels_names)

print()

sample_i = 1

for sample_row in sample_dataset.take(5):

samples = [sample_row[feature] for feature in sentence_features]

print(f'sample row {sample_i}')

for sample in samples:

print(sample.numpy())

sample_label = sample_row['label']

print(f'label: {sample_label} ({labels_names[sample_label]})')

print()

sample_i += 1

glue/sst2タスクを選択し、実行します。

データセットのトレーニングのため、問題の種類(分類か回帰か)と損失関数を決定。

def get_configuration(glue_task):

loss = tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True)

if glue_task == 'glue/cola':

metrics = tfa.metrics.MatthewsCorrelationCoefficient(num_classes=2)

else:

metrics = tf.keras.metrics.SparseCategoricalAccuracy(

'accuracy', dtype=tf.float32)

return metrics, loss

モデルのトレーニング

epochs = 3

batch_size = 32

init_lr = 2e-5

print(f'Fine tuning {tfhub_handle_encoder} model')

bert_preprocess_model = make_bert_preprocess_model(sentence_features)

with strategy.scope():

# metric have to be created inside the strategy scope

metrics, loss = get_configuration(tfds_name)

train_dataset, train_data_size = load_dataset_from_tfds(

in_memory_ds, tfds_info, train_split, batch_size, bert_preprocess_model)

steps_per_epoch = train_data_size // batch_size

num_train_steps = steps_per_epoch * epochs

num_warmup_steps = num_train_steps // 10

validation_dataset, validation_data_size = load_dataset_from_tfds(

in_memory_ds, tfds_info, validation_split, batch_size,

bert_preprocess_model)

validation_steps = validation_data_size // batch_size

classifier_model = build_classifier_model(num_classes)

optimizer = optimization.create_optimizer(

init_lr=init_lr,

num_train_steps=num_train_steps,

num_warmup_steps=num_warmup_steps,

optimizer_type='adamw')

classifier_model.compile(optimizer=optimizer, loss=loss, metrics=[metrics])

classifier_model.fit(

x=train_dataset,

validation_data=validation_dataset,

steps_per_epoch=steps_per_epoch,

epochs=epochs,

validation_steps=validation_steps)

ファインチューニングに9分かかりました(タスクglue/colaの数倍)。

後、UserWarning表示されますが、無視します。

Fine tuning https://tfhub.dev/tensorflow/bert_en_uncased_L-12_H-768_A-12/3 model

/usr/local/lib/python3.7/dist-packages/keras/engine/functional.py:559: UserWarning: Input dict contained keys ['idx', 'label'] which did not match any model input. They will be ignored by the model.

inputs = self._flatten_to_reference_inputs(inputs)

Epoch 1/3

/usr/local/lib/python3.7/dist-packages/tensorflow/python/framework/indexed_slices.py:446: UserWarning: Converting sparse IndexedSlices(IndexedSlices(indices=Tensor("AdamWeightDecay/gradients/StatefulPartitionedCall:1", shape=(None,), dtype=int32), values=Tensor("clip_by_global_norm/clip_by_global_norm/_0:0", dtype=float32), dense_shape=Tensor("AdamWeightDecay/gradients/StatefulPartitionedCall:2", shape=(None,), dtype=int32))) to a dense Tensor of unknown shape. This may consume a large amount of memory.

"shape. This may consume a large amount of memory." % value)

推論のためエクスポート

前処理部分とファインチューニング済みBERTを含む、最終モデルを作成。

モデルをColabに保存し、後でダウンロードできるようにします。

main_save_path = './my_models'

bert_type = tfhub_handle_encoder.split('/')[-2]

saved_model_name = f'{tfds_name.replace("/", "_")}_{bert_type}'

saved_model_path = os.path.join(main_save_path, saved_model_name)

preprocess_inputs = bert_preprocess_model.inputs

bert_encoder_inputs = bert_preprocess_model(preprocess_inputs)

bert_outputs = classifier_model(bert_encoder_inputs)

model_for_export = tf.keras.Model(preprocess_inputs, bert_outputs)

print('Saving', saved_model_path)

# Save everything on the Colab host (even the variables from TPU memory)

save_options = tf.saved_model.SaveOptions(experimental_io_device='/job:localhost')

model_for_export.save(saved_model_path, include_optimizer=False,

options=save_options)

モデルをテスト

最後のステップとして、エクスポートされたモデル結果をテスト。

※ テストは、接続先のTPUワーカーではなく、Colabホストで実行される

with tf.device('/job:localhost'):

reloaded_model = tf.saved_model.load(saved_model_path)

#@title Utility methods

def prepare(record):

model_inputs = [[record[ft]] for ft in sentence_features]

return model_inputs

def prepare_serving(record):

model_inputs = {ft: record[ft] for ft in sentence_features}

return model_inputs

def print_bert_results(test, bert_result, dataset_name):

bert_result_class = tf.argmax(bert_result, axis=1)[0]

if dataset_name == 'glue/cola':

print('sentence:', test[0].numpy())

if bert_result_class == 1:

print('This sentence is acceptable')

else:

print('This sentence is unacceptable')

elif dataset_name == 'glue/sst2':

print('sentence:', test[0])

if bert_result_class == 1:

print('This sentence has POSITIVE sentiment')

else:

print('This sentence has NEGATIVE sentiment')

elif dataset_name == 'glue/mrpc':

print('sentence1:', test[0])

print('sentence2:', test[1])

if bert_result_class == 1:

print('Are a paraphrase')

else:

print('Are NOT a paraphrase')

elif dataset_name == 'glue/qqp':

print('question1:', test[0])

print('question2:', test[1])

if bert_result_class == 1:

print('Questions are similar')

else:

print('Questions are NOT similar')

elif dataset_name == 'glue/mnli':

print('premise :', test[0])

print('hypothesis:', test[1])

if bert_result_class == 1:

print('This premise is NEUTRAL to the hypothesis')

elif bert_result_class == 2:

print('This premise CONTRADICTS the hypothesis')

else:

print('This premise ENTAILS the hypothesis')

elif dataset_name == 'glue/qnli':

print('question:', test[0])

print('sentence:', test[1])

if bert_result_class == 1:

print('The question is NOT answerable by the sentence')

else:

print('The question is answerable by the sentence')

elif dataset_name == 'glue/rte':

print('sentence1:', test[0])

print('sentence2:', test[1])

if bert_result_class == 1:

print('Sentence1 DOES NOT entails sentence2')

else:

print('Sentence1 entails sentence2')

elif dataset_name == 'glue/wnli':

print('sentence1:', test[0])

print('sentence2:', test[1])

if bert_result_class == 1:

print('Sentence1 DOES NOT entails sentence2')

else:

print('Sentence1 entails sentence2')

print('BERT raw results:', bert_result[0])

print()

テスト

with tf.device('/job:localhost'):

test_dataset = tf.data.Dataset.from_tensor_slices(in_memory_ds[test_split])

for test_row in test_dataset.shuffle(1000).map(prepare).take(5):

if len(sentence_features) == 1:

result = reloaded_model(test_row[0])

else:

result = reloaded_model(list(test_row))

print_bert_results(test_row, result, tfds_name)

テスト結果です。

sentence: tf.Tensor([b"for anyone who grew up on disney 's 1950 treasure island , or remembers the 1934 victor fleming classic , this one feels like an impostor ."], shape=(1,), dtype=string)

This sentence has NEGATIVE sentiment

BERT raw results: tf.Tensor([ 3.5094056 -3.440254 ], shape=(2,), dtype=float32)

sentence: tf.Tensor([b'the only thing worse than your substandard , run-of-the-mill hollywood picture is an angst-ridden attempt to be profound .'], shape=(1,), dtype=string)

This sentence has NEGATIVE sentiment

BERT raw results: tf.Tensor([ 3.8467538 -3.1074412], shape=(2,), dtype=float32)

sentence: tf.Tensor([b'like a marathon runner trying to finish a race , you need a constant influx of liquid just to get through it .'], shape=(1,), dtype=string)

This sentence has NEGATIVE sentiment

BERT raw results: tf.Tensor([ 2.9375803 -2.4308932], shape=(2,), dtype=float32)

sentence: tf.Tensor([b'far from perfect , but its heart is in the right place ... innocent and well-meaning .'], shape=(1,), dtype=string)

This sentence has POSITIVE sentiment

BERT raw results: tf.Tensor([-5.709575 3.1275086], shape=(2,), dtype=float32)

sentence: tf.Tensor([b"it 's really yet another anemic and formulaic lethal weapon-derived buddy-cop movie , trying to pass off its lack of imagination as hip knowingness ."], shape=(1,), dtype=string)

This sentence has NEGATIVE sentiment

BERT raw results: tf.Tensor([ 3.471871 -3.522515], shape=(2,), dtype=float32)

映画レビューの感情解析結果、ポジティブ/ネガティブ判定がなされています。

それにしても、ポジティブ判定は一つのみで少ないです。

「完璧にはほど遠いですが」から、これも微妙?

おわりに

GLUEのタスクSST-2を解いてみました。

他のタスクも同じ要領で実行してみようと思います。

待望の日本語版GLUEベンチマーク(JGLUE)は、早大河原研究室が作成されており、

近々JGLUEベンチマーク結果が公開されるとのことでウォッチ中。。。

お楽しみに。