[Previous article] Testing the NLP Model BERT (5) - GLUE Benchmark (Part 3)

Introduction

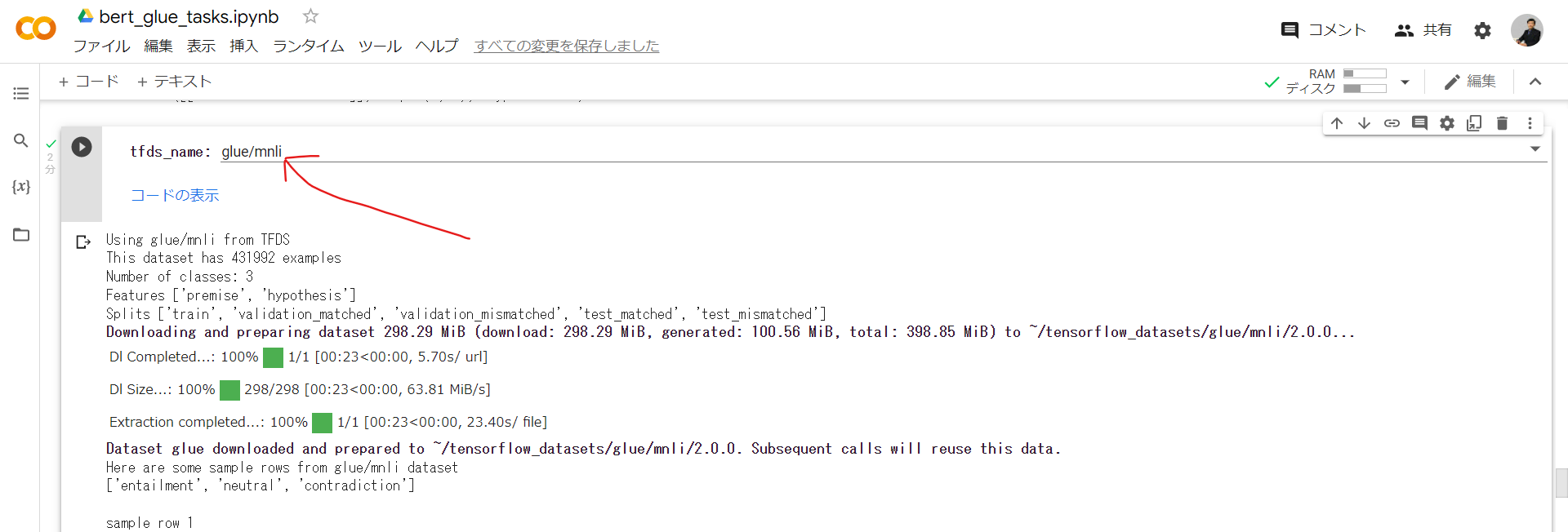

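In the previous article, I tested the MNLI task of the GLUE benchmark and confirmed that the model can judge whether two input sentences stand in an entailment, contradiction, or neutral relationship.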

This time, I compare two tasks that, like MNLI, are designed to judge entailment:

- RTE(Recognizing Textual Entailment)

- WNLI(Winograd NLI)

Test Procedure

For the test procedure, please refer to the following article:

Testing the NLP Model BERT (3) - GLUE Benchmark (Part 1)

There is only one difference: in the "Select a task from GLUE" step, you need to re-select the corresponding task from the dropdown menu.
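Switching the task in the dropdown changes more than the dataset. As a rough sketch, assuming the notebook loads data through TFDS names such as `glue/rte`, the three entailment tasks differ in their input feature names and in the number of output classes. The dict and the `describe` helper below are hypothetical illustrations, not code from the notebook, and the feature names are assumed to follow TFDS.

```python
# Hypothetical summary of the entailment-style GLUE tasks in this series.
# ASSUMPTION: feature names follow TFDS `glue/*`; check them with
# tfds.builder(name).info.features before relying on this table.
GLUE_NLI_TASKS = {
    "glue/mnli": {"text_features": ("premise", "hypothesis"), "num_classes": 3},
    "glue/rte": {"text_features": ("sentence1", "sentence2"), "num_classes": 2},
    "glue/wnli": {"text_features": ("sentence1", "sentence2"), "num_classes": 2},
}


def describe(task_name: str) -> str:
    """Summarize which text fields a task feeds into BERT and how many logits it needs."""
    task = GLUE_NLI_TASKS[task_name]
    first, second = task["text_features"]
    return f"{task_name}: inputs=({first}, {second}), classes={task['num_classes']}"


for name in GLUE_NLI_TASKS:
    print(describe(name))
```

MNLI needs a 3-way classifier head (entailment / neutral / contradiction), while RTE and WNLI are binary, which is why the model has to be fine-tuned again after switching tasks rather than reusing the MNLI head.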

Let's go straight to the test results.

RTE

※ Fine-tuning finished in about 2 minutes.

sentence1: tf.Tensor([b'Kourkoulos was married to the daughter of the Greek tycoon Yiannis Latsis, Marianna Latsis, and had 3 children.'], shape=(1,), dtype=string)

sentence2: tf.Tensor([b'Nikos Kourkoulos was a Greek actor.'], shape=(1,), dtype=string)

Sentence1 DOES NOT entails sentence2

BERT raw results: tf.Tensor([-1.6122011 0.5349688], shape=(2,), dtype=float32)

sentence1: tf.Tensor([b'The coalition drafted the principles below - originally dubbed the Valdez principles - to guide businesses on their individual path to environmental sustainability.'], shape=(1,), dtype=string)

sentence2: tf.Tensor([b'The Valdez Principles are principles which guide businesses on their individual path to environmental sustainability.'], shape=(1,), dtype=string)

Sentence1 entails sentence2

BERT raw results: tf.Tensor([ 0.7309164 -1.1774694], shape=(2,), dtype=float32)

sentence1: tf.Tensor([b'First identified in 1930, swine flu is a common and sometimes fatal respiratory disease in pigs caused by type A influenza virus. The disease does not normally spread to humans, though infections are sporadically recorded, especially among people who have been directly exposed to pigs. The recent cases in Mexico and the United States, however, appear to have spread through human contact. From December 2005 through February 2009, only 12 cases of swine influenza were reported in the United States. In 1988 a pregnant woman died after contact with sick pigs. In 1976, swine flu at an U.S. military base at Fort Dix, New Jersey killed one soldier. Four were hospitalized with pneumonia. At first, experts feared the strain was related to the Spanish Flu of 1918, which killed millions, but the strain never spread beyond the base.'], shape=(1,), dtype=string)

sentence2: tf.Tensor([b'Swine flu spreads to humans through contact with infected pigs.'], shape=(1,), dtype=string)

Sentence1 entails sentence2

BERT raw results: tf.Tensor([ 0.5360862 -1.0688126], shape=(2,), dtype=float32)

sentence1: tf.Tensor([b'The opinion poll was conducted on the sixth and seventh of October, and included a cross section of 861 adults with a margin of error estimated at 4%.'], shape=(1,), dtype=string)

sentence2: tf.Tensor([b'The poll was carried out on the 6th and 7th of October.'], shape=(1,), dtype=string)

Sentence1 entails sentence2

BERT raw results: tf.Tensor([ 0.20490673 -1.0789552 ], shape=(2,), dtype=float32)

sentence1: tf.Tensor([b'The Guardian is reporting that the University is being used by young men and women to stock up on firebombs and break up marble slabs to throw at police. From behind their makeshift barriers, they vowed the unrest would become "an uprising the likes of which Greece has never seen." "We are experiencing moments of a great social revolution," leftist activist Panagiotis Sotiris told Reuters. Sotiris is among those occupying a university building. "The protests will last as long as necessary," he added.'], shape=(1,), dtype=string)

sentence2: tf.Tensor([b'The riots in Greece started on December 6.'], shape=(1,), dtype=string)

Sentence1 DOES NOT entails sentence2

BERT raw results: tf.Tensor([-1.5859807 1.2540616], shape=(2,), dtype=float32)

For each pair, the model judged whether or not sentence1 entails sentence2.
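The `BERT raw results` are two unnormalized scores (logits). Judging from the log, the larger score at index 0 produces "entails" and the larger score at index 1 produces "DOES NOT entails". A minimal sketch, using the logits copied from the RTE log above, that reproduces these messages with argmax and adds a softmax probability for the chosen class (the helper names are my own, not the notebook's):

```python
import math

# Raw logits copied from the RTE log above, one [index 0, index 1] pair per example.
RTE_RAW_RESULTS = [
    [-1.6122011, 0.5349688],
    [0.7309164, -1.1774694],
    [0.5360862, -1.0688126],
    [0.20490673, -1.0789552],
    [-1.5859807, 1.2540616],
]

# Message per argmax index, as observed in the printed log.
MESSAGES = ("entails", "does not entail")


def softmax(logits):
    """Convert raw logits into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def interpret(logits):
    """Return (argmax index, message, probability of the chosen class)."""
    probs = softmax(logits)
    idx = max(range(len(probs)), key=probs.__getitem__)
    return idx, MESSAGES[idx], probs[idx]


for raw in RTE_RAW_RESULTS:
    idx, message, p = interpret(raw)
    print(f"{raw} -> sentence1 {message} sentence2 (p={p:.2f})")
```

For these five RTE pairs, the chosen class receives roughly 0.78 to 0.94 of the probability mass, so the model is fairly decisive here.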

WNLI

※ Fine-tuning for this task also took about 2 minutes.

sentence1: tf.Tensor([b'The Harbor folks said Grandma kept her house so clean that you could wipe her floor with a clean handkerchief without getting any dirt on it.'], shape=(1,), dtype=string)

sentence2: tf.Tensor([b'You could wipe her floor with a clean handkerchief without getting any dirt on the dirt.'], shape=(1,), dtype=string)

Sentence1 entails sentence2

BERT raw results: tf.Tensor([0.5164316 0.46179643], shape=(2,), dtype=float32)

sentence1: tf.Tensor([b'Claire could not win a single point in her squash game with Lisa this afternoon. She was just exhausted.'], shape=(1,), dtype=string)

sentence2: tf.Tensor([b'Lisa was just exhausted.'], shape=(1,), dtype=string)

Sentence1 entails sentence2

BERT raw results: tf.Tensor([0.93196255 0.6851963 ], shape=(2,), dtype=float32)

sentence1: tf.Tensor([b'Maude and Dora had seen the trains rushing across the prairie, with long, rolling puffs of black smoke streaming back from the engine. Their roars and their wild, clear whistles could be heard from far away. Horses ran away when they saw a train coming.'], shape=(1,), dtype=string)

sentence2: tf.Tensor([b'The puffs saw a train coming.'], shape=(1,), dtype=string)

Sentence1 DOES NOT entails sentence2

BERT raw results: tf.Tensor([0.37662944 0.7906835 ], shape=(2,), dtype=float32)

sentence1: tf.Tensor([b'Edward dropped adhesive tape onto his window sill, and when he pulled the tape off, some of the glue was stuck on it.'], shape=(1,), dtype=string)

sentence2: tf.Tensor([b'Some of the glue was stuck on the tape.'], shape=(1,), dtype=string)

Sentence1 DOES NOT entails sentence2

BERT raw results: tf.Tensor([0.29954222 0.7149271 ], shape=(2,), dtype=float32)

sentence1: tf.Tensor([b'Everyone, by now, felt a certain confidence in the British way of doing things. A general had the right to punish a soldier caught selling his arms, and also anyone who tempted him.'], shape=(1,), dtype=string)

sentence2: tf.Tensor([b'A general had the right to punish a soldier caught selling the general arms, and also anyone who tempted him.'], shape=(1,), dtype=string)

Sentence1 entails sentence2

BERT raw results: tf.Tensor([0.81498444 0.6301544 ], shape=(2,), dtype=float32)

Again, the model judged whether or not each pair of input sentences is an entailment.

However, I had some doubts about the second judgment.

Input sentence 1

Claire could not win a single point in her squash game with Lisa this afternoon. She was just exhausted.

Input sentence 2

Lisa was just exhausted.

From the context, isn't it Claire, not Lisa, who was exhausted? Or did the model infer that Lisa was exhausted too?
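Two things may be worth checking here. First, the WNLI logits are much closer together than the RTE ones: the chosen class gets only about 0.51 to 0.60 probability, so these are low-confidence judgments. Second, the WNLI log uses the same message mapping as RTE (larger score at index 0 prints "entails"). If, as I understand it, TFDS orders the WNLI labels as `not_entailment, entailment` (the reverse of RTE's `entailment, not_entailment`), the printed WNLI messages would be inverted, and the Claire/Lisa pair would actually be predicted as not entailment. This label order is an assumption to verify with `tfds.builder("glue/wnli").info.features["label"].names`. The sketch below decodes the logits from the WNLI log above under both orders:

```python
import math

# Raw logits copied from the WNLI log above.
WNLI_RAW_RESULTS = [
    [0.5164316, 0.46179643],
    [0.93196255, 0.6851963],
    [0.37662944, 0.7906835],
    [0.29954222, 0.7149271],
    [0.81498444, 0.6301544],
]

# ASSUMPTION: label orders as I believe TFDS defines them; verify with
# tfds.builder("glue/rte" or "glue/wnli").info.features["label"].names.
RTE_LABELS = ("entailment", "not_entailment")
WNLI_LABELS = ("not_entailment", "entailment")


def softmax(logits):
    """Convert raw logits into probabilities that sum to 1."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def decode(logits, labels):
    """Return (label name of the argmax, its softmax probability)."""
    probs = softmax(logits)
    idx = max(range(len(probs)), key=probs.__getitem__)
    return labels[idx], probs[idx]


for raw in WNLI_RAW_RESULTS:
    printed, p = decode(raw, RTE_LABELS)    # what the printed message implies
    as_wnli, _ = decode(raw, WNLI_LABELS)   # reading with the assumed WNLI order
    print(f"{raw}: printed={printed}, wnli_order={as_wnli}, p={p:.2f}")
```

Whichever label order turns out to be correct, the small margins suggest treating these five WNLI results as close to a coin flip.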

Conclusion

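A feature common to MNLI, RTE, and WNLI, all of which target entailment judgment, is that in the examples I tested, input sentence 1 was long while input sentence 2 was short.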

This might prove useful in business settings, for example for organizing or summarizing documents.
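To put rough numbers on the length observation, here is a quick word count over a few pairs copied from the RTE and WNLI logs above. It splits on whitespace only (punctuation stays attached to words), and four pairs are an illustration, not a statistic over the datasets.

```python
# A few (sentence1, sentence2) pairs copied from the RTE and WNLI logs above.
PAIRS = [
    (
        "Kourkoulos was married to the daughter of the Greek tycoon Yiannis Latsis, "
        "Marianna Latsis, and had 3 children.",
        "Nikos Kourkoulos was a Greek actor.",
    ),
    (
        "The opinion poll was conducted on the sixth and seventh of October, and included "
        "a cross section of 861 adults with a margin of error estimated at 4%.",
        "The poll was carried out on the 6th and 7th of October.",
    ),
    (
        "Claire could not win a single point in her squash game with Lisa this afternoon. "
        "She was just exhausted.",
        "Lisa was just exhausted.",
    ),
    (
        "Edward dropped adhesive tape onto his window sill, and when he pulled the tape off, "
        "some of the glue was stuck on it.",
        "Some of the glue was stuck on the tape.",
    ),
]


def word_count(text: str) -> int:
    """Count whitespace-separated tokens as a rough proxy for sentence length."""
    return len(text.split())


for s1, s2 in PAIRS:
    n1, n2 = word_count(s1), word_count(s2)
    print(f"sentence1={n1:3d} words, sentence2={n2:3d} words, ratio={n1 / n2:.1f}")
```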

I will test the remaining tasks in upcoming articles.

Stay tuned.