1. WSL setup on Windows 10

Let's install the Windows Subsystem for Linux!

As for the development environment: if you use Anaconda, I personally recommend creating environments with conda create.

2. Installing Anaconda on WSL

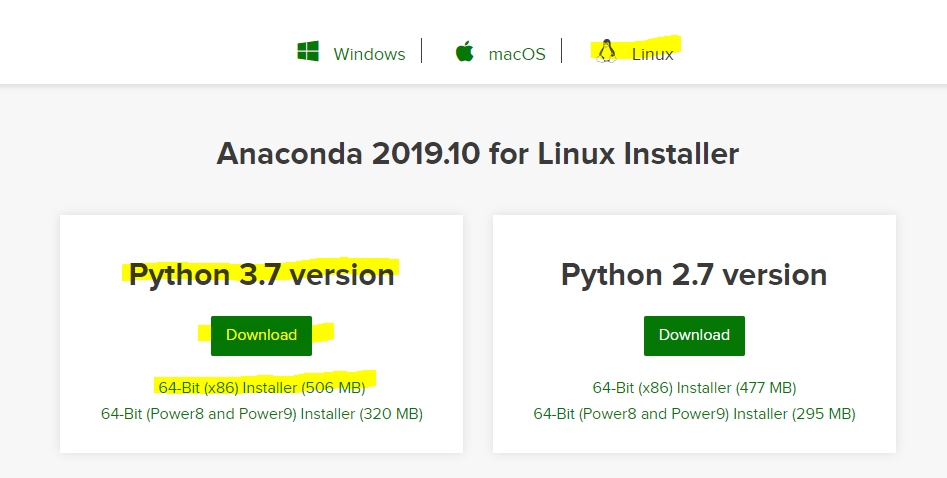

2-1. Downloading Anaconda

Download the Linux installer (Python 3.7, 64-bit x86) to the Windows side.
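Before picking the installer, you can confirm from inside WSL that the environment really is 64-bit x86 Linux. That is the platform this installer targets. The following is a minimal sketch using only the Python standard library. It assumes a python3 interpreter is already available in the WSL distribution (Ubuntu images usually ship one). The helper name `matches_linux_x86_64_installer` is hypothetical.

```python
import platform


def matches_linux_x86_64_installer(system=None, machine=None):
    """Return True if this machine matches the Linux 64-bit x86 installer.

    Hypothetical helper: the arguments default to what the running
    interpreter reports, but can be passed in explicitly for testing.
    """
    system = system if system is not None else platform.system()
    machine = machine if machine is not None else platform.machine()
    return system == "Linux" and machine == "x86_64"


if __name__ == "__main__":
    # Inside WSL this should print True; a Windows-native Python reports "Windows"
    print(matches_linux_x86_64_installer())
```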

2-2. Installing Anaconda

cd into the download folder and run the installer.

```
(base) xxxx:~$ cd /mnt/c/Users/myname/Downloads/
(base) xxxx:/mnt/c/Users/myname/Downloads$ ./Anaconda3-2019.10-Linux-x86_64.sh

Welcome to Anaconda3 2019.10

In order to continue the installation process, please review the license
agreement.
Please, press ENTER to continue
```

For the remaining installation steps, see the installation article.

Run the conda command to check the installation. If you get output like the following, you're good.

```
(base) xxxx:~$ conda
usage: conda [-h] [-V] command ...

conda is a tool for managing and deploying applications, environments and packages.

Options:

positional arguments:
  command
    clean        Remove unused packages and caches.
    config       Modify configuration values in .condarc. This is modeled
```
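The same check can also be scripted, for example when re-verifying a freshly opened WSL shell. The following is a minimal standard-library sketch that looks up conda on PATH and runs `conda --version`. The function name `conda_version` is hypothetical.

```python
import shutil
import subprocess


def conda_version():
    """Return the output of `conda --version`, or None if conda is not on PATH."""
    conda = shutil.which("conda")
    if conda is None:
        # Not installed, or the shell was not restarted after the installer edited ~/.bashrc
        return None
    result = subprocess.run(
        [conda, "--version"], capture_output=True, text=True, check=True
    )
    # Prefer stdout; fall back to stderr in case the version is written there
    return (result.stdout or result.stderr).strip()


if __name__ == "__main__":
    print(conda_version() or "conda not found on PATH")
```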

Commands such as pip are now available too. For setting up the development environment, refer to this article.

3. Installing Spark

Here is a super-easy way to set up a local test environment that coexists with Windows 10.

I had already built a Spark environment on Windows earlier, following this article.

```
C:\soft\spark>dir
ドライブ C のボリューム ラベルは Windows です
ボリューム シリアル番号は E6E4-3579 です

C:\soft\spark のディレクトリ

2019/08/28 06:30 <DIR> .
2019/08/28 06:30 <DIR> ..
2019/11/12 17:01 <DIR> bin
2019/11/12 17:03 <DIR> conf
2019/08/28 06:30 <DIR> data
2019/08/28 06:30 <DIR> examples
2019/08/28 06:30 <DIR> jars
2019/08/28 06:30 <DIR> kubernetes
2019/08/28 06:30 21,316 LICENSE
2019/08/28 06:30 <DIR> licenses
2019/08/28 06:30 42,919 NOTICE
2019/08/28 06:30 <DIR> python
2019/08/28 06:30 <DIR> R
2019/08/28 06:30 3,952 README.md
2019/08/28 06:30 164 RELEASE
2019/08/28 06:30 <DIR> sbin
2019/08/28 06:30 <DIR> yarn
4 個のファイル 68,351 バイト
13 個のディレクトリ 422,510,718,976 バイトの空き領域

C:\soft\spark>pyspark
Python 2.7.7 (default, Jun 1 2014, 14:21:57) [MSC v.1500 64 bit (AMD64)] on win32
Type "help", "copyright", "credits" or "license" for more information.
19/11/13 16:32:02 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties
Setting default log level to "WARN".
To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).
Welcome to
      ____              __
     / __/__  ___ _____/ /__
    _\ \/ _ \/ _ `/ __/  '_/
   /__ / .__/\_,_/_/ /_/\_\   version 2.4.4
      /_/

Using Python version 2.7.7 (default, Jun 1 2014 14:21:57)
SparkSession available as 'spark'.
>>>
```

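The Spark distribution I already have works on multiple operating systems (its bin directory contains both the Windows .cmd scripts and the Linux shell scripts), so I'll use it as-is.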

Create a symbolic link to it.

```
(base) xxxx:~$ sudo ln -s /mnt/c/soft/spark /opt/spark
(base) xxxx:~$ ll /opt
合計 0
drwxr-xr-x 1 root root 512 10月 30 16:54 ./
drwxr-xr-x 1 root root 512 10月 30 12:53 ../
drwxr-xr-x 1 root root 512 10月 30 13:20 anaconda3/
lrwxrwxrwx 1 root root 17 10月 30 16:54 spark -> /mnt/c/soft/spark/
```
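If you script this setup, a re-run should not fail just because the link already exists. The following is a minimal, idempotent sketch of the same `ln -s` step using pathlib. The helper name `ensure_symlink` is hypothetical. Writing under /opt still needs root.

```python
import os
from pathlib import Path


def ensure_symlink(target, link):
    """Create `link` pointing at `target` (like `ln -s target link`), idempotently.

    Returns the link path. Raises FileExistsError if `link` already exists
    but is a regular file/directory or a symlink to somewhere else.
    """
    target, link = Path(target), Path(link)
    if link.is_symlink():
        current = Path(os.readlink(link))
        if current == target:
            return link  # already set up, nothing to do
        raise FileExistsError(f"{link} already points to {current}")
    if link.exists():
        raise FileExistsError(f"{link} exists and is not a symlink")
    link.symlink_to(target, target_is_directory=True)
    return link


if __name__ == "__main__":
    # /opt is owned by root, so run this with sudo
    ensure_symlink("/mnt/c/soft/spark", "/opt/spark")
```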

Set JAVA_HOME, SPARK_HOME, HADOOP_HOME, and PATH in your .profile file (.bashrc works just as well).

```
# set JAVA_HOME
export JAVA_HOME="/usr/lib/jvm/java-11-openjdk-amd64"

# set SPARK_HOME
export SPARK_HOME="/opt/spark"

# set HADOOP_HOME
export HADOOP_HOME="/opt/spark"

PATH="$SPARK_HOME/bin:$PATH"
```
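A quick way to confirm that these settings took effect in the current shell is to check that SPARK_HOME is visible and that its bin directory is on PATH. The following is a minimal standard-library sketch. The helper `check_spark_env` is hypothetical. It only sees variables that are exported to child processes and only after the profile has been re-read.

```python
import os
from pathlib import Path


def check_spark_env(env=None):
    """Return a list of problems with the SPARK_HOME/PATH setup; empty means OK.

    Hypothetical helper: pass a dict to test it, or nothing to check os.environ.
    """
    env = os.environ if env is None else env
    spark_home = env.get("SPARK_HOME")
    if not spark_home:
        # Unset, not exported, or the profile has not been re-read yet
        return ["SPARK_HOME is not set in this environment"]
    problems = []
    spark_bin = Path(spark_home) / "bin"
    if not (spark_bin / "spark-shell").is_file():
        problems.append(f"{spark_bin / 'spark-shell'} not found")
    # Normalize entries so that e.g. '/opt/spark//bin' still matches '/opt/spark/bin'
    path_entries = {
        os.path.normpath(p) for p in env.get("PATH", "").split(os.pathsep) if p
    }
    if os.path.normpath(str(spark_bin)) not in path_entries:
        problems.append(f"{spark_bin} is not on PATH")
    return problems


if __name__ == "__main__":
    for problem in check_spark_env() or ["OK"]:
        print(problem)
```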

Reload the profile (log in again, or run source ~/.profile), then run spark-shell and check the result.

```
(base) okada@OGNikkei11:~$ spark-shell
19/11/13 16:46:53 WARN Utils: Your hostname, OGNikkei11 resolves to a loopback address: 127.0.1.1; using 192.168.100.17 instead (on interface eth0)
19/11/13 16:46:53 WARN Utils: Set SPARK_LOCAL_IP if you need to bind to another address
WARNING: An illegal reflective access operation has occurred
WARNING: Illegal reflective access by org.apache.spark.unsafe.Platform (file:/mnt/c/soft/spark/jars/spark-unsafe_2.11-2.4.4.jar) to method java.nio.Bits.unaligned()
WARNING: Please consider reporting this to the maintainers of org.apache.spark.unsafe.Platform
WARNING: Use --illegal-access=warn to enable warnings of further illegal reflective access operations
WARNING: All illegal access operations will be denied in a future release
19/11/13 16:46:53 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties
Setting default log level to "WARN".
To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).
Spark context Web UI available at http://192.168.100.17:4040
Spark context available as 'sc' (master = local[*], app id = local-1573631219567).
Spark session available as 'spark'.
Welcome to
      ____              __
     / __/__  ___ _____/ /__
    _\ \/ _ \/ _ `/ __/  '_/
   /___/ .__/\_,_/_/ /_/\_\   version 2.4.4
      /_/

Using Scala version 2.11.12 (OpenJDK 64-Bit Server VM, Java 11.0.4)
Type in expressions to have them evaluated.
Type :help for more information.
```

pyspark should work fine as well.

4. Jupyter configuration

```
$ cd /opt/spark/conf/
$ cp spark-env.sh.template spark-env.sh
$ vi spark-env.sh
```

Add the following two lines:

```
export PYSPARK_DRIVER_PYTHON=/opt/anaconda3/bin/jupyter
export PYSPARK_DRIVER_PYTHON_OPTS="notebook"
```
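The copy-and-edit step can also be done non-interactively. The following is a minimal sketch that copies spark-env.sh.template to spark-env.sh when the latter is missing, then appends the two lines only if they are not already present, so re-running it is harmless. The helper names `add_lines_once` and `setup_spark_env` are hypothetical. The default conf directory and the Jupyter path come from this article's /opt/spark and /opt/anaconda3 layout.

```python
import shutil
from pathlib import Path

# The two settings from this step; assumes Anaconda is installed in /opt/anaconda3
JUPYTER_LINES = [
    "export PYSPARK_DRIVER_PYTHON=/opt/anaconda3/bin/jupyter",
    'export PYSPARK_DRIVER_PYTHON_OPTS="notebook"',
]


def add_lines_once(path, lines=JUPYTER_LINES):
    """Append each line to `path` unless an identical line is already there.

    Returns the list of lines that were actually appended.
    """
    path = Path(path)
    text = path.read_text() if path.exists() else ""
    existing = set(text.splitlines())
    missing = [line for line in lines if line not in existing]
    if missing:
        # Start on a fresh line if the file does not end with a newline
        prefix = "\n" if text and not text.endswith("\n") else ""
        with path.open("a") as f:
            f.write(prefix + "\n".join(missing) + "\n")
    return missing


def setup_spark_env(conf_dir="/opt/spark/conf"):
    """Create spark-env.sh from the template if needed, then add the Jupyter lines."""
    conf = Path(conf_dir)
    env_sh = conf / "spark-env.sh"
    if not env_sh.exists():
        shutil.copy(conf / "spark-env.sh.template", env_sh)
    return add_lines_once(env_sh)
```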

Run the pyspark command.

```
(base) xxxx:~$ pyspark
[I 16:49:06.490 NotebookApp] JupyterLab extension loaded from /opt/anaconda3/lib/python3.7/site-packages/jupyterlab
[I 16:49:06.490 NotebookApp] JupyterLab application directory is /opt/anaconda3/share/jupyter/lab
[I 16:49:06.492 NotebookApp] Serving notebooks from local directory: /home/okada
[I 16:49:06.492 NotebookApp] The Jupyter Notebook is running at:
[I 16:49:06.493 NotebookApp] http://localhost:8888/?token=80b6d4029a0f52ba90c902df49d1156c976fdb0ebb8fc8e0
[I 16:49:06.493 NotebookApp] or http://127.0.0.1:8888/?token=80b6d4029a0f52ba90c902df49d1156c976fdb0ebb8fc8e0
[I 16:49:06.493 NotebookApp] Use Control-C to stop this server and shut down all kernels (twice to skip confirmation).
[W 16:49:06.515 NotebookApp] No web browser found: could not locate runnable browser.
[C 16:49:06.516 NotebookApp]

    To access the notebook, open this file in a browser:
        file:///home/okada/.local/share/jupyter/runtime/nbserver-258-open.html
    Or copy and paste one of these URLs:
        http://localhost:8888/?token=80b6d4029a0f52ba90c902df49d1156c976fdb0ebb8fc8e0
     or http://127.0.0.1:8888/?token=80b6d4029a0f52ba90c902df49d1156c976fdb0ebb8fc8e0
```

Copy http://localhost:8888/?token=80b6d4029a0f52ba90c902df49d1156c976fdb0ebb8fc8e0 and paste it into Chrome.
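Because no browser runs inside WSL ("No web browser found"), the token URL has to be copied out of the log by hand. If you save the startup log to a file, a short regular expression can pull the URL out for you. The following is a minimal sketch. The function name `find_notebook_url` is hypothetical, and the pattern only covers the localhost/127.0.0.1 URL format shown in the log.

```python
import re

# Matches URLs such as http://localhost:8888/?token=<hex> in Jupyter's startup log
TOKEN_URL = re.compile(r"http://(?:localhost|127\.0\.0\.1):\d+/\?token=[0-9a-f]+")


def find_notebook_url(log_text):
    """Return the first localhost token URL found in the log text, or None."""
    match = TOKEN_URL.search(log_text)
    return match.group(0) if match else None


if __name__ == "__main__":
    import sys

    # e.g. python3 find_url.py < jupyter.log
    print(find_notebook_url(sys.stdin.read()) or "no token URL found")
```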

Welcome to the world of Jupyter-Spark!

Addendum: Python is not supported for Spark Streaming + Kafka, so I also set up a Scala environment.

Installing Apache Toree (Spark kernel)

```
$ pip install --pre toree
$ jupyter toree install --spark_home=$SPARK_HOME
```

Launch jupyter notebook, and you will see that [Apache Toree - Scala] has been added.
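You can also confirm the new kernel without opening the notebook UI. `jupyter kernelspec list --json` prints every installed kernel spec as JSON, and the following minimal sketch searches that output for the display name "Apache Toree - Scala". The function names `find_kernels` and `installed_toree_kernels` are hypothetical. Matching on the display name, rather than on the kernel's directory name, is a deliberate assumption.

```python
import json
import shutil
import subprocess


def find_kernels(kernelspec_json, display_name="Apache Toree - Scala"):
    """Return kernel names whose display_name matches, given `jupyter kernelspec list --json` output."""
    specs = json.loads(kernelspec_json).get("kernelspecs", {})
    return sorted(
        name
        for name, info in specs.items()
        if info.get("spec", {}).get("display_name") == display_name
    )


def installed_toree_kernels():
    """Run `jupyter kernelspec list --json` and return matching Toree kernel names."""
    jupyter = shutil.which("jupyter")
    if jupyter is None:
        return []
    out = subprocess.run(
        [jupyter, "kernelspec", "list", "--json"],
        capture_output=True, text=True, check=True,
    ).stdout
    return find_kernels(out)


if __name__ == "__main__":
    print(installed_toree_kernels() or "Apache Toree - Scala kernel not found")
```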