Overview

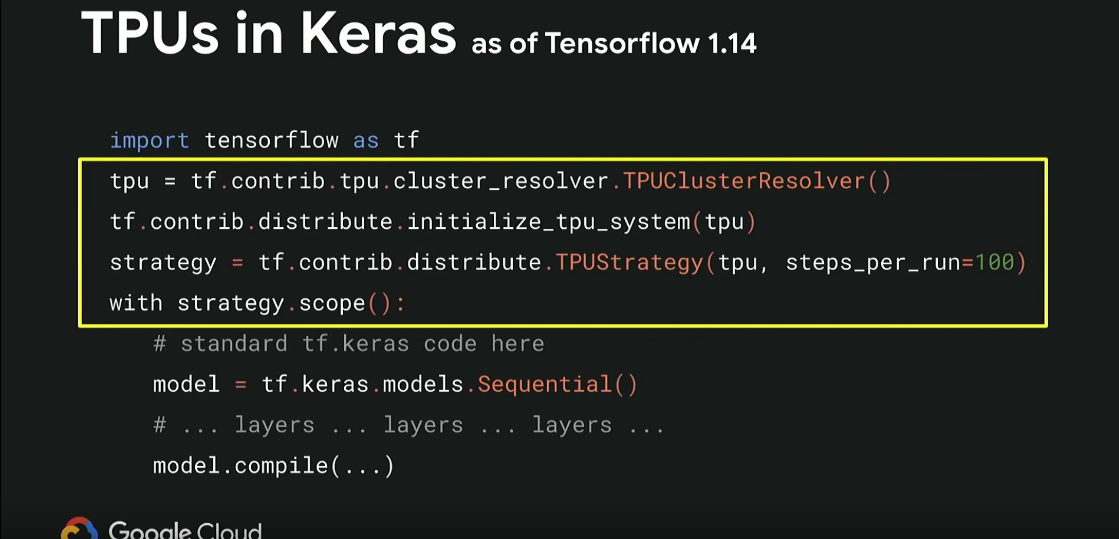

Starting with TensorFlow 1.14, the way Keras handles TPUs changes slightly.

(Image from the Google I/O '19 materials)

What changed

1.13 and earlier

On Google Colab TPUs, the code used to be written like this.

    import tensorflow as tf
    from tensorflow.contrib.tpu.python.tpu import keras_support
    import os

    # model = create_network()  # some function that builds the network
    # model.compile(...)

    tpu_grpc_url = "grpc://" + os.environ["COLAB_TPU_ADDR"]
    tpu_cluster_resolver = tf.contrib.cluster_resolver.TPUClusterResolver(tpu_grpc_url)
    strategy = keras_support.TPUDistributionStrategy(tpu_cluster_resolver)
    model = tf.contrib.tpu.keras_to_tpu_model(model, strategy=strategy)

    # model.fit(...)

1.14 and later

From 1.14 onward, it looks like this.

    import tensorflow as tf
    import os

    tpu_grpc_url = "grpc://" + os.environ["COLAB_TPU_ADDR"]
    tpu_cluster_resolver = tf.contrib.cluster_resolver.TPUClusterResolver(tpu_grpc_url)
    tf.contrib.distribute.initialize_tpu_system(tpu_cluster_resolver)
    strategy = tf.contrib.distribute.TPUStrategy(tpu_cluster_resolver, steps_per_run=100)

    with strategy.scope():
        # model = create_network()  # some function that builds the network
        # model.compile(...)
        # model.fit(...)

The key points are the `with strategy.scope():` block and moving the TPU initialization ahead of the model definition.
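For a notebook that has to run on both sides of this change, one option is to branch on the version string. The following is only a minimal sketch: the helper name `uses_legacy_tpu_api` is hypothetical (it is not part of TensorFlow), and it assumes the version string starts with `major.minor`, as in `1.13.1` or `1.14.0-rc1`.

```python
def uses_legacy_tpu_api(version):
    """Return True for versions before 1.14 (hypothetical helper, not a TensorFlow API)."""
    # Only the first two components matter; suffixes such as "0-rc1" are ignored.
    major, minor = (int(part) for part in version.split(".")[:2])
    return (major, minor) < (1, 14)


# Version strings that appear in this article.
legacy_113 = uses_legacy_tpu_api("1.13.1")
legacy_114 = uses_legacy_tpu_api("1.14.0-rc1")
```

In practice the argument would be `tf.__version__`; a `True` result would select the `keras_to_tpu_model` style and `False` the `strategy.scope()` style.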

MNIST sample

When I checked Google Colab on June 14, it had been upgraded to TF 1.14rc1.

    print(tf.__version__)
    # 1.14.0-rc1

The MNIST sample for 1.14 and later looks like this.

    import tensorflow as tf
    import tensorflow.keras as keras
    import tensorflow.keras.layers as layers
    import numpy as np
    import os

    def conv_bn_relu(input, ch):
        x = layers.Conv2D(ch, 3, padding="same")(input)
        x = layers.BatchNormalization()(x)
        return layers.Activation("relu")(x)

    def create_network():
        input = layers.Input((28, 28, 1))
        x = conv_bn_relu(input, 32)
        x = layers.AveragePooling2D(2)(x)
        x = conv_bn_relu(x, 64)
        x = layers.AveragePooling2D(2)(x)
        x = conv_bn_relu(x, 128)
        x = layers.GlobalAveragePooling2D()(x)
        x = layers.Dense(10, activation="softmax")(x)
        return keras.models.Model(input, x)

    # Train on TPU (TF 1.14 and later)
    def train_tpu():
        (X_train, y_train), (X_test, y_test) = keras.datasets.mnist.load_data()
        # Scale to [0, 1], add a channel axis, and cast to float32 explicitly
        X_train = np.expand_dims(X_train / 255.0, axis=-1).astype(np.float32)
        X_test = np.expand_dims(X_test / 255.0, axis=-1).astype(np.float32)
        y_train = keras.utils.to_categorical(y_train)
        y_test = keras.utils.to_categorical(y_test)

        # TPU initial setup
        tpu_grpc_url = "grpc://" + os.environ["COLAB_TPU_ADDR"]
        tpu_cluster_resolver = tf.contrib.cluster_resolver.TPUClusterResolver(tpu_grpc_url)
        tf.contrib.distribute.initialize_tpu_system(tpu_cluster_resolver)
        strategy = tf.contrib.distribute.TPUStrategy(tpu_cluster_resolver, steps_per_run=100)

        with strategy.scope():
            model = create_network()
            model.compile(keras.optimizers.SGD(0.1, momentum=0.9), "categorical_crossentropy", ["acc"])

            model.fit(X_train, y_train, validation_data=(X_test, y_test), batch_size=128,
                      steps_per_epoch=X_train.shape[0] // 128, validation_steps=X_test.shape[0] // 128,
                      epochs=10)

    if __name__ == "__main__":
        train_tpu()

The output looks like this.

There are a few points to note.

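- The input variables (X_train, X_test, ...) must now be float32, bfloat16, or float16. The dtype has to be set explicitly with astype.

- Passing a tf.keras optimizer to model.compile now works without any problem. Some older material passes a `tf.train.XxxOptimizer` instead, but that no longer seems necessary. This looks like a pure improvement.

- In fit, steps_per_epoch now has to be specified (fit is treated much like fit_generator). For example, passing only batch_size=128 raises the error below, and specifying steps_per_epoch fixes it. Omitting validation_steps does not raise an error, but note that the number of batches is then computed by rounding up to include the final partial batch.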

ValueError: The dataset you passed contains 4687 batches, but you passed `epochs=10` and `steps_per_epoch=469`, which is a total of 4690 steps. We cannot draw that many steps from this dataset. We suggest to set `steps_per_epoch=468`.
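The numbers in this message can be reproduced with plain arithmetic. The sketch below assumes the 60,000 MNIST training images and one particular reading of the message: the default step count rounds up, while the dataset is repeated over all epochs and then cut into full batches only. That reading matches the figures in the error, but it is an inference, not something confirmed from the TensorFlow source.

```python
import math

n_train, batch_size, epochs = 60000, 128, 10

# Full batches per epoch; this is what X_train.shape[0] // 128 evaluates to.
steps_floor = n_train // batch_size

# Rounding up also counts the final partial batch (the default in the error).
steps_ceil = math.ceil(n_train / batch_size)

# Steps requested when the rounded-up value is used for every epoch.
requested_steps = steps_ceil * epochs

# Assumption: all epochs are concatenated, then only full batches are kept.
available_batches = (n_train * epochs) // batch_size

print(steps_floor, steps_ceil, requested_steps, available_batches)
```

Using the floor value requests 4680 steps, which fits within the 4687 available batches, whereas the rounded-up value requests 4690 and overshoots.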

TPUs in 1.14 do not support fit_generator

Unfortunately, TPUs in 1.14 do not support fit_generator. For example, the MNIST sample above ought to be expressible with ImageDataGenerator, like this.

    from tensorflow.keras.preprocessing.image import ImageDataGenerator

    # Does not work in 1.14
    def train_by_generator():
        (X_train, y_train), (X_test, y_test) = keras.datasets.mnist.load_data()
        X_train, X_test = np.expand_dims(X_train, axis=-1), np.expand_dims(X_test, axis=-1)
        y_train = keras.utils.to_categorical(y_train)
        y_test = keras.utils.to_categorical(y_test)

        train_gen = ImageDataGenerator(rescale=1.0 / 255).flow(X_train, y_train, 128, shuffle=True)
        test_gen = ImageDataGenerator(rescale=1.0 / 255).flow(X_test, y_test, 128, shuffle=False)

        # TPU initial setup
        tpu_grpc_url = "grpc://" + os.environ["COLAB_TPU_ADDR"]
        tpu_cluster_resolver = tf.contrib.cluster_resolver.TPUClusterResolver(tpu_grpc_url)
        tf.contrib.distribute.initialize_tpu_system(tpu_cluster_resolver)
        strategy = tf.contrib.distribute.TPUStrategy(tpu_cluster_resolver, steps_per_run=100)

        with strategy.scope():
            model = create_network()
            model.compile(keras.optimizers.SGD(0.1, momentum=0.9), "categorical_crossentropy", ["acc"])

            model.fit_generator(train_gen, steps_per_epoch=X_train.shape[0] // 128,
                                validation_data=test_gen, validation_steps=X_test.shape[0] // 128,
                                epochs=10)

This raises an error saying it is not supported.

    /usr/local/lib/python3.6/dist-packages/tensorflow/python/keras/engine/training.py in fit_generator(self, generator, steps_per_epoch, epochs, verbose, callbacks, validation_data, validation_steps, validation_freq, class_weight, max_queue_size, workers, use_multiprocessing, shuffle, initial_epoch)
       1412     """
       1413     if self._distribution_strategy:
    -> 1414       raise NotImplementedError('`fit_generator` is not supported for '
       1415                                 'models compiled with tf.distribute.Strategy.')
       1416     _keras_api_gauge.get_cell('train').set(True)

    NotImplementedError: `fit_generator` is not supported for models compiled with tf.distribute.Strategy.

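Training on TPU with fit_generator did work in 1.13, so if you want to train with fit_generator, the only option seems to be staying on 1.13 until support arrives.

Downgrading to 1.13 to use fit_generator

This is a stopgap. Leaving fit_generator unsupported seems implausible from a practical standpoint, so I expect this workaround to become unnecessary soon.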

    pip install tensorflow==1.13.1

The runtime must be restarted afterwards.

    from tensorflow.contrib.tpu.python.tpu import keras_support

    def lr_scheduler(epoch):
        if epoch <= 5: return 0.1
        else: return 0.01

    def train_by_generator_113():
        (X_train, y_train), (X_test, y_test) = keras.datasets.mnist.load_data()
        X_train, X_test = np.expand_dims(X_train, axis=-1), np.expand_dims(X_test, axis=-1)
        y_train = keras.utils.to_categorical(y_train)
        y_test = keras.utils.to_categorical(y_test)

        train_gen = ImageDataGenerator(rescale=1.0 / 255).flow(X_train, y_train, 128, shuffle=True)
        test_gen = ImageDataGenerator(rescale=1.0 / 255).flow(X_test, y_test, 128, shuffle=False)

        model = create_network()
        model.compile(keras.optimizers.SGD(0.1, momentum=0.9), "categorical_crossentropy", ["acc"])

        # TPU initial setup
        tpu_grpc_url = "grpc://" + os.environ["COLAB_TPU_ADDR"]
        tpu_cluster_resolver = tf.contrib.cluster_resolver.TPUClusterResolver(tpu_grpc_url)
        strategy = keras_support.TPUDistributionStrategy(tpu_cluster_resolver)
        model = tf.contrib.tpu.keras_to_tpu_model(model, strategy=strategy)

        scheduler = keras.callbacks.LearningRateScheduler(lr_scheduler)

        model.fit_generator(train_gen, steps_per_epoch=X_train.shape[0] // 128,
                            validation_data=test_gen, validation_steps=X_test.shape[0] // 128,
                            epochs=10, callbacks=[scheduler])

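LearningRateScheduler would not normally be needed here, but in 1.13 it is required to make a tf.keras optimizer work on the TPU (the learning rate gets set through LearningRateScheduler), so I added it. Without it, you get a "not fetchable" error. It is not needed in 1.14 and later.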
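To see what this callback would feed the optimizer, the schedule can be evaluated by hand. This is a small sketch that assumes Keras passes a zero-based epoch index to the schedule function; the function body is the same rule as in the sample, rewritten as one expression.

```python
def lr_scheduler(epoch):
    # Same rule as the sample: 0.1 up to and including epoch 5, then 0.01.
    return 0.1 if epoch <= 5 else 0.01


# Learning rate for each of the 10 epochs, using zero-based epoch indices.
schedule = [lr_scheduler(epoch) for epoch in range(10)]
print(schedule)
```

Under that assumption the first six epochs run at 0.1 and the last four at 0.01, so the 1.13 run is not strictly identical to the constant-rate 1.14 sample.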

I also tried `tf.train.MomentumOptimizer`, as older guides suggested (that route does not need LearningRateScheduler), but training became extremely slow. The cause is unknown. For now, using a tf.keras optimizer seems to be the better choice.