Japanese version

I have posted an article with the same content in Japanese on note.

https://note.com/kamatari_san/n/n866915eede41

Introduction

The other day, the official TensorFlow account posted the following tweet.

https://twitter.com/TensorFlow/status/1432373876235898881

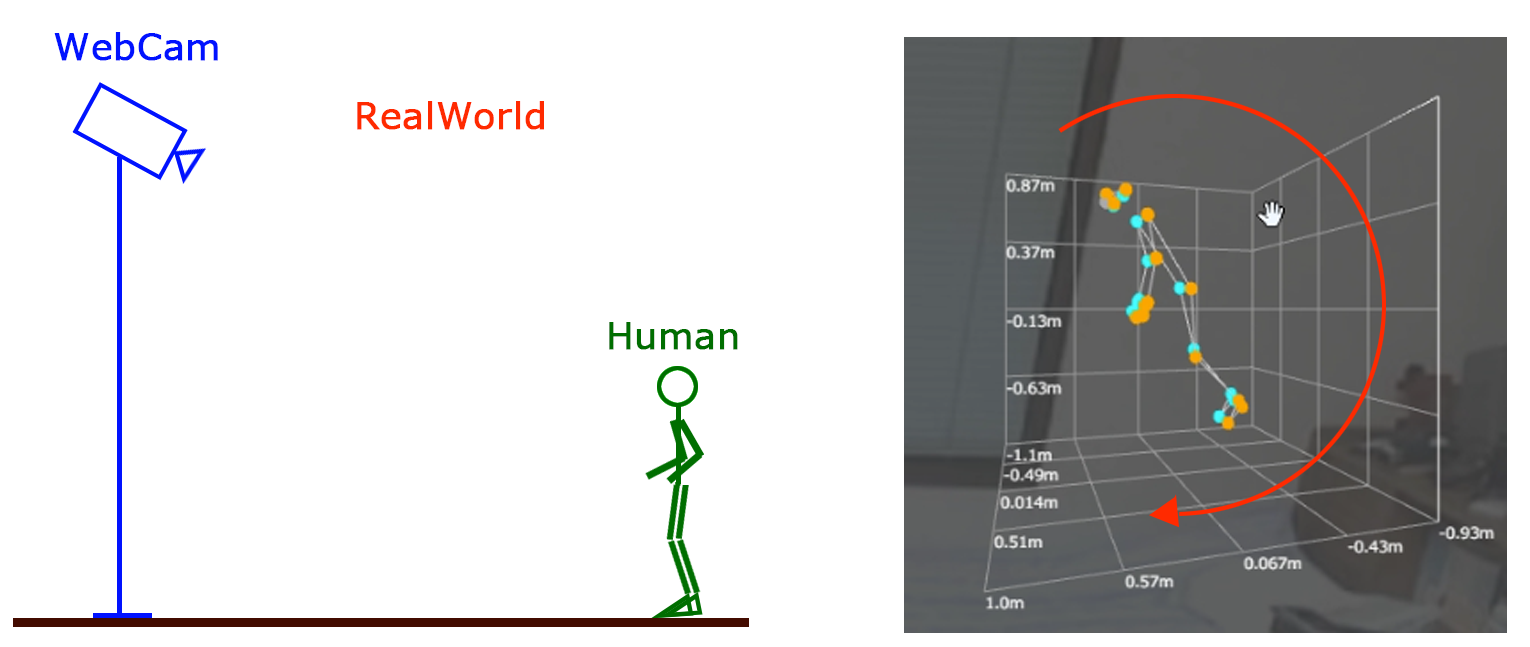

Looking at the link and demo in the tweet, I was interested to see that you can get the Z coordinate in 3D (with the Y axis pointing up).

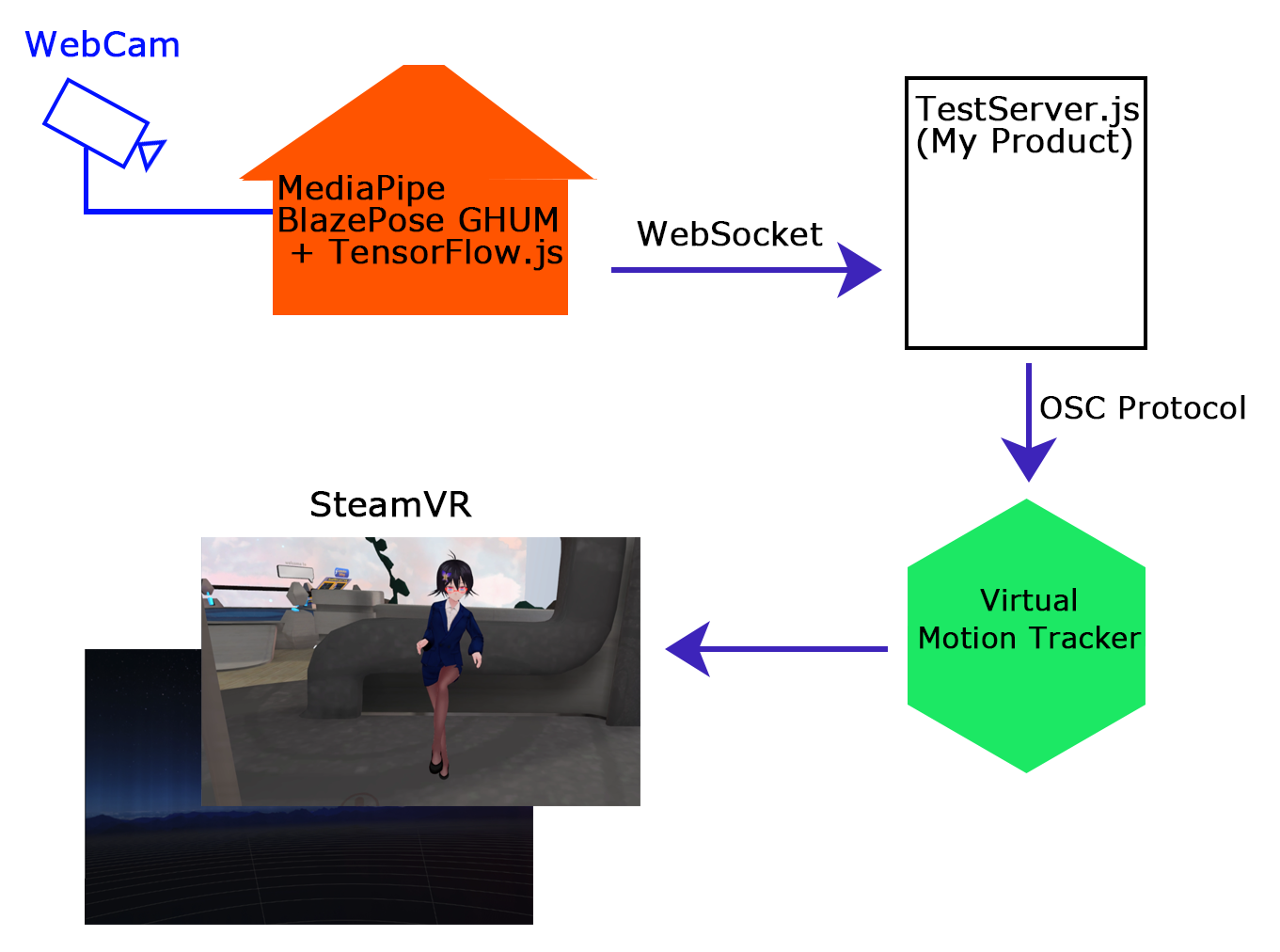

1. How do we get SteamVR to recognize it?

Valve has released OpenVR, a library and specification for creating HMDs, controllers, and trackers (link below).

https://github.com/ValveSoftware/openvr

However, writing a driver with it looks like quite a lot of work.

Fortunately, someone has created a driver called Virtual Motion Tracker (VMT). SteamVR recognizes it as a virtual tracker when you send it coordinates, posture (rotation), and so on using the OSC protocol.

https://qiita.com/gpsnmeajp/items/9c41654e6c89c6b9702f

https://gpsnmeajp.github.io/VirtualMotionTrackerDocument/

I installed and configured VMT using the pages above.

(Note: Please do not contact the author of VMT about this article.)

2. Getting the official MediaPipe BlazePose GHUM + TensorFlow.js (TFJS) demo

Get the TFJS code from the repository below.

https://github.com/tensorflow/tfjs-models

The demo code is located in the directory below.

https://github.com/tensorflow/tfjs-models/tree/master/pose-detection/demos/live_video/src

If you just want to run the official demo, build the demo code above with the yarn watch command in a development environment with Node.js and Yarn installed, and it will work.

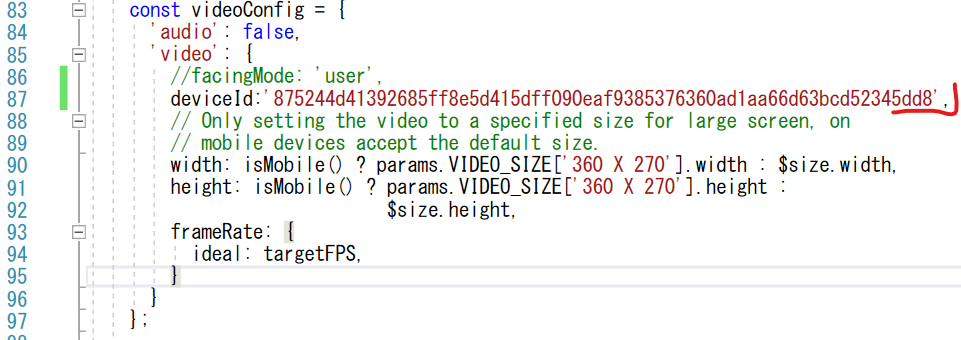

3. Editing the demo code (1)

Unfortunately, there is no webcam selector in this demo code.

First, I modified the code around line 81 of camera.js so that any webcam can be specified.

My code is still experimental and very messy, so I would rather not show too much of it.

For now, I simply hard-code the webcam's device ID. (LOL)

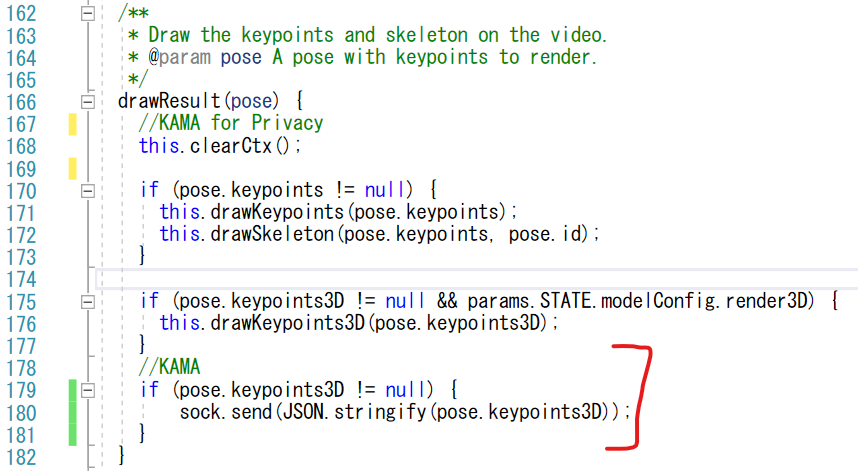
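Instead of hard-coding the device ID, one option is to look the camera up by its label. Below is a minimal sketch that assumes the standard browser `navigator.mediaDevices.enumerateDevices()` and `getUserMedia()` APIs. The helper names `pickVideoDeviceId` and `buildVideoConstraints` and the label `'USB Camera'` are hypothetical, and this is not the modified camera.js.

```javascript
// Hypothetical helpers for choosing a specific webcam.
// `devices` is the array returned by navigator.mediaDevices.enumerateDevices().

// Return the deviceId of the first video input whose label contains
// `labelPart` (case-insensitive), or null if none matches.
function pickVideoDeviceId(devices, labelPart) {
  const needle = labelPart.toLowerCase();
  const match = devices.find(
    (d) => d.kind === 'videoinput' && d.label.toLowerCase().includes(needle));
  return match ? match.deviceId : null;
}

// Build getUserMedia() constraints. Using `exact` makes the request fail
// instead of silently falling back to a different camera.
function buildVideoConstraints(deviceId, width = 640, height = 480) {
  const video = {width, height};
  if (deviceId) {
    video.deviceId = {exact: deviceId};
  } else {
    video.facingMode = 'user'; // no match: let the browser choose a camera
  }
  return {audio: false, video};
}

// Browser usage (not run here):
// const devices = await navigator.mediaDevices.enumerateDevices();
// const id = pickVideoDeviceId(devices, 'USB Camera');
// const stream = await navigator.mediaDevices.getUserMedia(buildVideoConstraints(id));
```

Note that browsers return empty device labels until the page has been granted camera permission, so the label lookup only works after the first permission prompt.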

4. Editing the demo code (2)

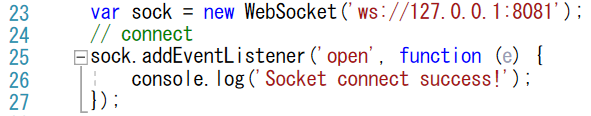

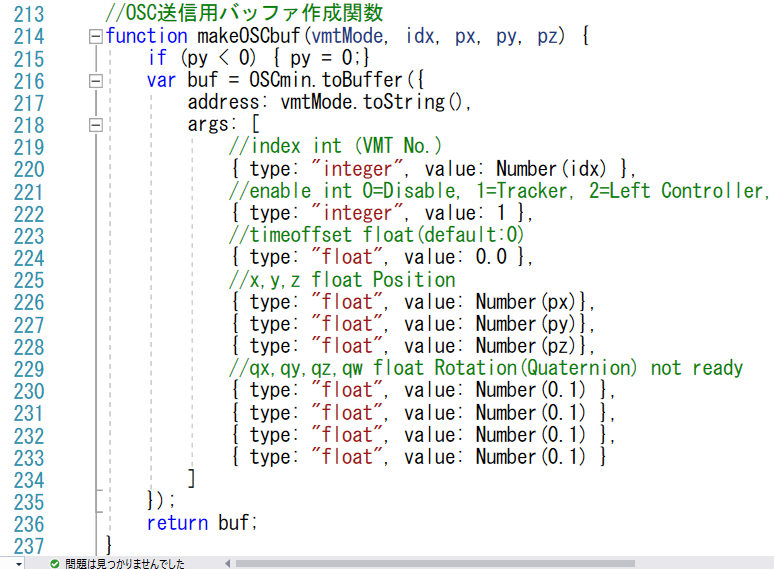

Ideally I would send OSC messages to VMT directly from the browser. That is not possible, because VMT receives OSC over UDP and browser JavaScript cannot send UDP packets. Instead, I use WebSocket to send the Keypoint3D data generated by TFJS to a separate server.

I added the WebSocket sending code to the drawKeypoints function at line 168.

Naturally, code that establishes the WebSocket connection is also needed.
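Here is a minimal sketch of what the sending side could look like. It assumes `keypoints3D` is the array of `{x, y, z, score, name}` objects that the pose-detection BlazePose model returns, and it assumes a relay server at a hypothetical `ws://localhost:8080`. The function names and message format are also hypothetical.

```javascript
// Pack BlazePose 3D keypoints into a compact JSON message.
// All points are kept so that array positions still match P0..P32;
// each entry carries its score so the receiver can filter weak points.
function serializeKeypoints3D(keypoints3D, timestampMs) {
  return JSON.stringify({
    t: timestampMs,
    k: keypoints3D.map((kp) => [kp.x, kp.y, kp.z, kp.score ?? 0]),
  });
}

// Send only while the socket is open (WebSocket.OPEN === 1); otherwise
// drop the frame instead of queueing stale poses.
function sendIfOpen(socket, message) {
  if (socket && socket.readyState === 1) {
    socket.send(message);
    return true;
  }
  return false;
}

// Browser usage (not run here), e.g. once per frame inside drawKeypoints:
// const socket = new WebSocket('ws://localhost:8080');
// sendIfOpen(socket, serializeKeypoints3D(pose.keypoints3D, performance.now()));
```

Dropping frames while disconnected keeps the tracker from replaying old positions after a reconnect.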

5. Creating a server that receives data via WebSocket and sends OSC

In this section, the server receives the Keypoint3D data over WebSocket and adjusts it slightly so that it makes sense in VR space.

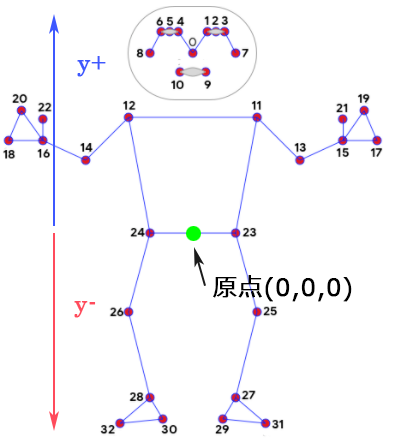

An overview of the keypoints is given at the following link.

https://google.github.io/mediapipe/solutions/pose.html
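The P-numbers used below follow the MediaPipe Pose landmark order: 0 is the nose, 23/24 the hips, 27/28 the ankles, 29/30 the heels, and 31/32 the foot index (toe). The following is a small lookup sketch. `POSE_INDEX` and `keypointsByName` are hypothetical names, and the name strings assume the `name` field that the TFJS pose-detection keypoints carry.

```javascript
// MediaPipe Pose landmark indices (P0..P32) referred to in this article.
const POSE_INDEX = Object.freeze({
  nose: 0,
  left_hip: 23, right_hip: 24,
  left_knee: 25, right_knee: 26,
  left_ankle: 27, right_ankle: 28,
  left_heel: 29, right_heel: 30,
  left_foot_index: 31, right_foot_index: 32,
});

// Look keypoints up by their `name` field when it is present,
// otherwise fall back to the MediaPipe index order.
function keypointsByName(keypoints3D) {
  const out = {};
  for (const [name, idx] of Object.entries(POSE_INDEX)) {
    const named = keypoints3D.find((kp) => kp.name === name);
    out[name] = named ?? keypoints3D[idx];
  }
  return out;
}
```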

5-1. Rotation about the X axis

First, to fit the whole body in the frame, the webcam is often placed high and angled downward.

This rotates the coordinates about the X axis, so the Z (depth) values are skewed.

The keypoints therefore need to be rotated back by the camera's tilt angle.

This is a straightforward use of trigonometric functions, so I will not go into detail.
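For reference, this is the standard rotation about the X axis. The following is a minimal sketch that assumes the camera's downward tilt angle `tiltRad` is known, either measured or tuned by hand. Whether you need `+tiltRad` or `-tiltRad` depends on the axis directions in your data, so check the sign against a standing pose. `rotateX` and `correctTilt` are hypothetical names.

```javascript
// Rotate a point about the X axis by `theta` radians (right-hand rule):
//   y' = y*cos(theta) - z*sin(theta)
//   z' = y*sin(theta) + z*cos(theta)
// x is unchanged; the score is passed through.
function rotateX(p, theta) {
  const c = Math.cos(theta);
  const s = Math.sin(theta);
  return {x: p.x, y: p.y * c - p.z * s, z: p.y * s + p.z * c, score: p.score};
}

// Apply the same tilt correction to every keypoint.
function correctTilt(keypoints3D, tiltRad) {
  return keypoints3D.map((p) => rotateX(p, tiltRad));
}
```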

5-2. Recalculating the Y position

The origin of the keypoints is the hips (the midpoint of P23 and P24).

Therefore, when standing upright, the lower body has negative y-coordinates and the upper body has positive ones.

Since this is awkward to handle in VR, I currently take the minimum y value among P0 to P32 and use it as an offset, so that heights are measured from the lowest point.
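The offset step can be sketched as follows. This assumes, as described above, that y increases upward. `groundKeypoints` is a hypothetical name.

```javascript
// Shift all keypoints so that the lowest of P0..P32 sits at y = 0,
// turning hip-relative heights into heights above the lowest point.
function groundKeypoints(keypoints3D) {
  if (keypoints3D.length === 0) return [];
  const minY = Math.min(...keypoints3D.map((p) => p.y));
  return keypoints3D.map((p) => ({...p, y: p.y - minY}));
}
```

One trade-off of using the minimum over all points is that the estimated floor moves up when the feet leave the frame.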

(Also, since I mainly wanted to use this in VRChat, I adjusted the values somewhat to make them easier for VRChat to handle.)

(As trackers, I created "waist", "left leg", and "right leg", which VRChat needs.)
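The article does not say which keypoints drive which tracker. As an assumption for illustration only, the sketch below uses the hip midpoint (P23, P24) for the waist and the ankles (P27, P28) for the legs. `toTrackers` and its `mirror` flag are hypothetical. The flag swaps left and right, which may be needed when the camera image is flipped horizontally.

```javascript
// Hypothetical mapping from BlazePose keypoints (P0..P32) to the three
// trackers used for VRChat. Indices follow the MediaPipe Pose order.
const LEFT_HIP = 23, RIGHT_HIP = 24, LEFT_ANKLE = 27, RIGHT_ANKLE = 28;

function toTrackers(keypoints3D, mirror = false) {
  const kp = keypoints3D;
  // Waist: midpoint of the two hips.
  const waist = {
    x: (kp[LEFT_HIP].x + kp[RIGHT_HIP].x) / 2,
    y: (kp[LEFT_HIP].y + kp[RIGHT_HIP].y) / 2,
    z: (kp[LEFT_HIP].z + kp[RIGHT_HIP].z) / 2,
  };
  // With a flipped image, the model's left/right labels can end up
  // swapped relative to the user, so optionally exchange them.
  const leftLeg = mirror ? kp[RIGHT_ANKLE] : kp[LEFT_ANKLE];
  const rightLeg = mirror ? kp[LEFT_ANKLE] : kp[RIGHT_ANKLE];
  return {waist, leftLeg, rightLeg};
}
```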

5-3. Sending OSC to VMT

Once this is done, all that is left is to send the necessary values to VMT via the OSC protocol.

(This time I used the osc-min package because it was easy to use.)

I am still experimenting with the quaternions that represent each tracker's orientation.

However, I believe the direction of the feet can be obtained from P28-30-32 (right foot) and P27-29-31 (left foot).
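osc-min was used for this step. To keep the sketch below self-contained, it instead encodes the OSC message by hand following the OSC 1.0 format, and it can be sent with Node's built-in `dgram` module. The sketch makes two assumptions about VMT, so check both against the VMT documentation for your version:

- The `/VMT/Room/Unity` message takes int index, int enable, float time offset, float x, y, z, and float qx, qy, qz, qw.
- VMT's default receive port is 39570.

The helper names are hypothetical.

```javascript
// Minimal OSC 1.0 encoder for int32 ('i') and float32 ('f') arguments.
// OSC strings are null-terminated and padded to a multiple of 4 bytes;
// numeric arguments are big-endian.
function oscString(s) {
  const raw = Buffer.from(s, 'ascii');
  const padded = Buffer.alloc(Math.floor(raw.length / 4) * 4 + 4); // at least one null
  raw.copy(padded);
  return padded;
}

// args: array of {type: 'i' | 'f', value: number}
function encodeOscMessage(address, args) {
  const typeTags = oscString(',' + args.map((a) => a.type).join(''));
  const body = Buffer.alloc(args.length * 4);
  args.forEach((a, i) => {
    if (a.type === 'i') body.writeInt32BE(a.value, i * 4);
    else if (a.type === 'f') body.writeFloatBE(a.value, i * 4);
    else throw new Error(`unsupported OSC type ${a.type}`);
  });
  return Buffer.concat([oscString(address), typeTags, body]);
}

// Build a /VMT/Room/Unity message for one tracker (assumed argument layout).
function vmtRoomUnity(index, pos, quat, enable = 1, timeOffset = 0) {
  const i = (v) => ({type: 'i', value: v});
  const f = (v) => ({type: 'f', value: v});
  return encodeOscMessage('/VMT/Room/Unity', [
    i(index), i(enable), f(timeOffset),
    f(pos.x), f(pos.y), f(pos.z),
    f(quat.x), f(quat.y), f(quat.z), f(quat.w),
  ]);
}

// Usage in Node (not run here); reuse one socket for every frame:
// const dgram = require('dgram');
// const sock = dgram.createSocket('udp4');
// sock.send(vmtRoomUnity(0, {x: 0, y: 0.9, z: 0}, {x: 0, y: 0, z: 0, w: 1}), 39570, '127.0.0.1');
```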
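One simple way to try this idea, sketched under assumptions rather than as a finished solution:

1. Take the heel-to-toe vector, e.g. P30 (right heel) to P32 (right foot index).
2. Compute its yaw in the horizontal X-Z plane.
3. Turn that yaw into a quaternion about the vertical Y axis.

This assumes Y is up after the corrections in 5-1 and 5-2, and it ignores foot pitch and roll. Whether the yaw needs negating depends on the handedness of the coordinate system VMT expects. `footYawQuaternion` is a hypothetical name.

```javascript
// Yaw-only foot orientation from a heel -> toe (foot index) vector.
// Returns the unit quaternion {x, y, z, w} for a rotation of `yaw`
// radians about the Y axis: q = (0, sin(yaw/2), 0, cos(yaw/2)).
// With the right-handed rotation formula, this maps +Z onto the
// horizontal foot direction.
function footYawQuaternion(heel, toe) {
  const dx = toe.x - heel.x;
  const dz = toe.z - heel.z;
  // Angle of the foot direction, measured from +Z towards +X.
  const yaw = Math.atan2(dx, dz);
  return {x: 0, y: Math.sin(yaw / 2), z: 0, w: Math.cos(yaw / 2)};
}
```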

6. Results

The following link shows the results of running the program in VRChat.

https://twitter.com/kamatari_san/status/1447092158318579721?s=20

(Note: this capture was made with an earlier version than the one in this post, so some movements are a bit awkward and the left and right feet are swapped.)

The following link is a capture from a game called Thief Simulator VR, in which I could not crouch until now because I had no waist tracker.

https://twitter.com/kamatari_san/status/1445765307142848525?s=20

7. System configuration diagram

The following is a rough diagram of the system.

Acknowledgements

MediaPipe

https://google.github.io/mediapipe/solutions/pose.html

TensorFlow.js

https://blog.tensorflow.org/2021/08/3d-pose-detection-with-mediapipe-blazepose-ghum-tfjs.html

Virtual Motion Tracker

https://gpsnmeajp.github.io/VirtualMotionTrackerDocument/