Introduction

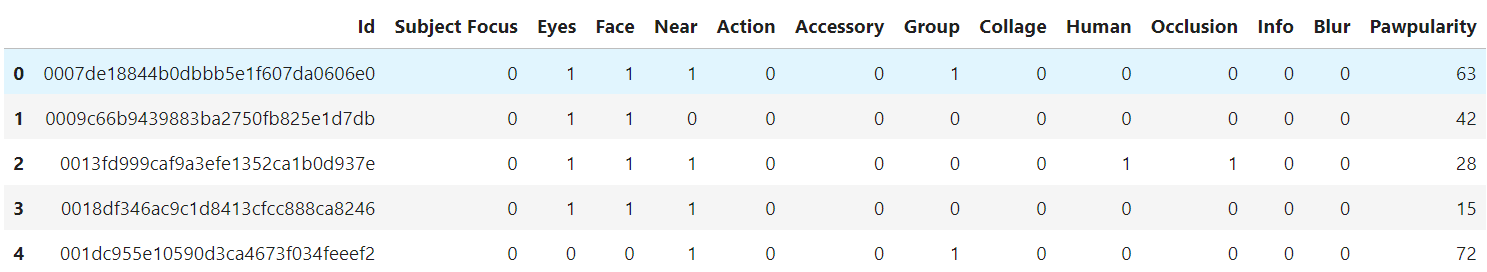

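Using the Kaggle competition that predicts the popularity of pet photos (PetFinder.my - Pawpularity Contest) as the subject, this article performs transfer learning in Keras with a pretrained Xception model. Popularity is scored from 0 to 100, and the accompanying image shows photos with a score of 30 and a score of 80.

Other pretrained models such as VGG16, Inception, and ResNet are also available, and the same approach works for them.

The goal is to implement transfer learning, so we do not run inference; we finish by saving the trained model.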

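What is transfer learning?

Transfer learning and fine-tuning are easy to confuse, but they mean different things, so let's confirm the definitions.

- Transfer learning: the weights of the pretrained model are frozen. Only the weights of the newly added final layers are trained.

- Fine-tuning: the weights of the entire model, including the newly added final layers, are retrained.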
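The difference comes down to which weights the optimizer is allowed to update. Here is a minimal sketch, assuming a tiny stand-in network in place of Xception (the names base and head and the layer sizes are made up for illustration, not taken from the article's model):

```python
import tensorflow as tf
from tensorflow.keras import layers, Model

# Hypothetical stand-in for a pretrained base model (two small Dense layers).
inputs = tf.keras.Input(shape=(8,))
base = tf.keras.Sequential(
    [layers.Dense(4, activation="relu"), layers.Dense(4, activation="relu")],
    name="base",
)
features = base(inputs)

# Newly added final layer (the "head").
outputs = layers.Dense(1, activation="linear", name="head")(features)
model = Model(inputs, outputs)

# Transfer learning: freeze the base, so only the head's kernel and bias train.
base.trainable = False
n_transfer = len(model.trainable_weights)

# Fine-tuning: unfreeze the base, so every kernel and bias trains.
base.trainable = True
n_finetune = len(model.trainable_weights)

print(n_transfer, n_finetune)
```

In Keras, a change to trainable only takes effect in training after the model is compiled again.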

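What is data augmentation?

Rotating, shifting, and flipping images increases the number of images and gives each image more variety, which improves both the quantity and the quality of the data. Training on a dataset that contains only a narrow range of patterns leads to overfitting and a model that generalizes poorly; adding variation to each image helps prevent this. However, if augmentation produces images that are nearly identical to images already in the dataset, the model may become even more prone to overfitting, so some care is needed.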
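To make the idea concrete, here is a small NumPy sketch of two of these transformations on a toy image. The helper names horizontal_flip and shift_right are hypothetical and written only for this illustration; Keras applies its own implementation internally.

```python
import numpy as np

def horizontal_flip(image):
    """Mirror an (H, W, C) image left to right."""
    return image[:, ::-1, :]

def shift_right(image, pixels):
    """Shift an (H, W, C) image right by `pixels` columns.

    The gap on the left is filled with the nearest edge column,
    similar in spirit to a "nearest" fill mode.
    """
    if pixels <= 0:
        return image.copy()
    shifted = np.empty_like(image)
    shifted[:, pixels:, :] = image[:, :-pixels, :]
    shifted[:, :pixels, :] = image[:, :1, :]
    return shifted

# Toy 2x4 single-channel "image" whose pixel values are 0..7.
image = np.arange(8).reshape(2, 4, 1)
flipped = horizontal_flip(image)
shifted = shift_right(image, 1)

print(flipped[..., 0])
print(shifted[..., 0])
```

Each call produces a new variant of the same photo, so one source image can contribute several different training samples.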

Environment

- Python: 3.7.10

- TensorFlow.Keras: 2.6.0

- Kaggle Notebook

- GPU

1. Loading the libraries and the dataset

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import warnings

warnings.filterwarnings("ignore")

from sklearn.model_selection import train_test_split

from tensorflow.keras.applications.xception import Xception

from tensorflow.keras.models import Model

from tensorflow.keras.layers import Dropout

from tensorflow.keras.layers import Dense

from tensorflow.keras.preprocessing.image import ImageDataGenerator

from tensorflow.keras.applications.xception import preprocess_input

from tensorflow.keras.callbacks import EarlyStopping

import tensorflow as tf

# Select dataset

PATH = "../input/petfinder-pawpularity-score"

# PATH = "../input/remove-duplicate-images"

# Data Load

train = pd.read_csv(f"{PATH}/train.csv")

test = pd.read_csv(f"{PATH}/test.csv")

submission = pd.read_csv(f"{PATH}/sample_submission.csv")

train.head()

2. Creating generators for the training, validation, and test data

Data preparation

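# Append ".jpg" to each Id to get the image file name
# (.copy() avoids modifying a slice of the original DataFrame)
k_df = train[["Id", "Pawpularity"]].copy()
k_df["Image"] = k_df["Id"] + ".jpg"

# Scale the target from 0-100 to 0-1
k_df["Pawpularity"] = k_df["Pawpularity"] / 100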

# Split into training and validation data
k_X_train, k_X_valid, k_y_train, k_y_valid = train_test_split(
    k_df["Image"],
    k_df["Pawpularity"],
    test_size=0.3,
    random_state=42)

print("-"*80)

print("Train and test split sizes")

print("-"*80)

print(f"X_train : {k_X_train.shape}")

print(f"X_test : {k_X_valid.shape}")

print(f"y_train : {k_y_train.shape[0]}")

print(f"y_test : {k_y_valid.shape[0]}")

print("-"*80)

k_train_df = pd.DataFrame(k_X_train, columns=["Image"])

k_train_df["Pawpularity"] = k_y_train

k_valid_df = pd.DataFrame(k_X_valid, columns=["Image"])

k_valid_df["Pawpularity"] = k_y_valid

--------------------------------------------------------------------------------

Train and test split sizes

--------------------------------------------------------------------------------

X_train : (6919,)

X_test : (2966,)

y_train : 6919

y_test : 2966

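Creating the generators

Images are much larger than text data, so instead of loading everything into memory at once, we load them one mini-batch at a time to save memory. The generator does the following:

- Loads the images for each mini-batch

- Augments the data in real time (when augmentation arguments are specified)

- Builds the mini-batch from the augmented images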
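The three steps above can be sketched in plain Python. This is an illustration of the idea only, not how Keras implements it: minibatch_generator, fake_load, and the tiny 4x4 image size are assumptions made for the example, and unlike a Keras generator it makes a single pass and does not shuffle.

```python
import numpy as np

def minibatch_generator(filenames, labels, batch_size, load_fn, augment_fn=None):
    """Yield (images, targets) one mini-batch at a time.

    Only the images of the current batch are held in memory.
    """
    for start in range(0, len(filenames), batch_size):
        batch_names = filenames[start:start + batch_size]
        # Step 1: load only the images that belong to this mini-batch.
        images = [load_fn(name) for name in batch_names]
        # Step 2: augment on the fly when an augmentation function is given.
        if augment_fn is not None:
            images = [augment_fn(img) for img in images]
        # Step 3: stack the images into a single batch array.
        yield np.stack(images), np.asarray(labels[start:start + batch_size])

def fake_load(name):
    """Stand-in for reading an image file from disk (returns a blank 4x4 RGB image)."""
    return np.zeros((4, 4, 3), dtype=np.float32)

names = [f"img_{i}.jpg" for i in range(10)]
labels = [i / 10 for i in range(10)]

batches = list(minibatch_generator(names, labels, 4, fake_load))
batch_shapes = [x.shape for x, _ in batches]
print(batch_shapes)
```

With 10 images and a batch size of 4, the last batch holds only the 2 remaining images.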

def makeGenerator(datagen, df, subset=None):
    """Create a generator that yields batches of images and targets from df."""
    return datagen.flow_from_dataframe(dataframe=df,
                                       directory=f"{PATH}/train/",
                                       x_col="Image",
                                       y_col="Pawpularity",
                                       subset=subset,
                                       target_size=(299, 299),
                                       batch_size=32,
                                       seed=42,
                                       shuffle=True,
                                       class_mode="raw")

# Without data augmentation
# datagen = ImageDataGenerator(preprocessing_function=preprocess_input,
#                              validation_split=0.2)

# With data augmentation
datagen = ImageDataGenerator(rotation_range=10,        # rotation
                             width_shift_range=0.2,    # horizontal shift
                             height_shift_range=0.2,   # vertical shift
                             zoom_range=0.2,           # zoom
                             horizontal_flip=True,     # horizontal flip
                             preprocessing_function=preprocess_input,
                             validation_split=0.2)

# Create the batch generators for the image data
train_generator = makeGenerator(datagen, k_train_df, "training")
valid_generator = makeGenerator(datagen, k_train_df, "validation")
# subset=None: every row of k_valid_df is used
test_generator = makeGenerator(datagen, k_valid_df)

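# Display augmented images
def plotImages(images_arr):
    fig, axes = plt.subplots(1, 5, figsize=(20, 20))
    axes = axes.flatten()
    for img, ax in zip(images_arr, axes):
        # preprocess_input scaled the pixels to [-1, 1]; map them back to [0, 1] for display
        ax.imshow((img + 1) / 2)
        ax.axis('off')
    plt.tight_layout()
    plt.show()

# Each access to train_generator[0] re-applies the random augmentation to the same image
augmented_images = [train_generator[0][0][2] for _ in range(5)]
plotImages(augmented_images)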

Found 5551 validated image filenames.

Found 1387 validated image filenames.

Found 2974 validated image filenames.

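flow_from_dataframe()

The strength of this function is that you can pass it a DataFrame that maps image file names to labels.

- dataframe: A DataFrame that maps image file names to the target variable (a numeric value or a class).

- directory: Path to the folder that contains the images. Use None when x_col holds absolute paths.

- x_col: The DataFrame column that holds the image file names.

- y_col: The name of the target column.

- subset: "training" or "validation" (used when validation_split is set on the ImageDataGenerator).

- save_to_dir: Path to a folder in which the generated augmented images are saved. Handy for inspecting the generated images.

- batch_size: The number of samples in each mini-batch. Training proceeds over the dataset one mini-batch at a time.

- target_size: Images are resized to this size.

- class_mode: With "raw", the values in y_col are passed through unchanged as the targets, which is useful for regression.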

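ImageDataGenerator()

Configures batch generation of image data. Here, preprocess_input scales the input pixel values to the range -1 to 1.

- rotation_range: Randomly rotates the image within ±10°.

- width_shift_range: Randomly shifts the image left or right within ±0.2 × the image width.

- height_shift_range: Randomly shifts the image up or down within ±0.2 × the image height.

- zoom_range: Zooms by a random factor in [lower, upper] = [1-zoom_range, 1+zoom_range].

- horizontal_flip: Randomly flips the image horizontally.

- preprocessing_function: A function applied to each image as preprocessing. It takes one image as a 3-D array and must return an array of the same shape.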
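The numbers behind these settings can be worked out by hand. The sketch below assumes that Xception's preprocess_input maps pixel values with x / 127.5 - 1, which is how a 0 to 255 range ends up in -1 to 1; the helper names scale_to_minus_one_one and zoom_bounds are hypothetical and exist only for this example.

```python
import numpy as np

def scale_to_minus_one_one(pixels):
    """Map pixel values from [0, 255] to [-1, 1] (assumed form of the Xception preprocessing)."""
    return np.asarray(pixels, dtype=np.float32) / 127.5 - 1.0

def zoom_bounds(zoom_range):
    """Return the [lower, upper] zoom factors for a scalar zoom_range."""
    return 1.0 - zoom_range, 1.0 + zoom_range

scaled = scale_to_minus_one_one([0, 127.5, 255])
lower, upper = zoom_bounds(0.2)

# With a 299-pixel-wide image, width_shift_range=0.2 allows shifts of up to about 60 pixels.
max_shift_px = 0.2 * 299

print(scaled, lower, upper, max_shift_px)
```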

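Training parameter settings

early_stopping = EarlyStopping(monitor="val_loss",
                               patience=15,
                               verbose=2,
                               restore_best_weights=True)

# Number of steps = number of samples / batch size (integer division)
STEP_SIZE_TRAIN = train_generator.n // train_generator.batch_size
STEP_SIZE_VALID = valid_generator.n // valid_generator.batch_size

- patience: The number of epochs without improvement after which training is stopped.

- restore_best_weights: Restores the model weights from the epoch with the best value of the monitored quantity (val_loss here).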
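As a worked example, the step counts follow directly from the image counts reported by the generators (5551 training and 1387 validation images) and the batch size of 32. The early-stopping function below is a simplified plain-Python model of the patience rule, not the Keras implementation; steps_per_epoch, early_stopping_epoch, and the loss values are made up for illustration.

```python
def steps_per_epoch(n_samples, batch_size):
    """Number of full mini-batches per epoch (integer division drops the remainder)."""
    return n_samples // batch_size

def early_stopping_epoch(val_losses, patience):
    """Return (stop_epoch, best_epoch), both 0-indexed.

    Training stops once the validation loss has failed to improve
    for `patience` consecutive epochs.
    """
    best_loss = float("inf")
    best_epoch = -1
    wait = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss, best_epoch, wait = loss, epoch, 0
        else:
            wait += 1
            if wait >= patience:
                return epoch, best_epoch
    return len(val_losses) - 1, best_epoch

train_steps = steps_per_epoch(5551, 32)
valid_steps = steps_per_epoch(1387, 32)

# Hypothetical validation losses; the best value is at epoch 1.
stop_epoch, best_epoch = early_stopping_epoch(
    [0.05, 0.04, 0.045, 0.041, 0.042], patience=3)

print(train_steps, valid_steps, stop_epoch, best_epoch)
```

With restore_best_weights=True, the weights kept after stopping are those of the best epoch rather than the last one.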

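3. Building the model

Loading Xception from Keras

Xception is an image classification model trained on ImageNet, a large-scale image dataset, and it can classify images into 1000 classes. Because we want to solve a regression problem (predicting popularity from an image), we use Xception for transfer learning. The classification layer is not needed, so we load Xception without the fully connected layer on the output side of the network (include_top=False). To freeze the weights of the existing layers, we set base_model.trainable = False.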

# Load Xception
base_model = Xception(include_top=False,
                      weights='imagenet',
                      input_tensor=None,
                      input_shape=(299, 299, 3),
                      pooling="avg")

# Freeze the pretrained layers; only the layers added later will be trained
base_model.trainable = False

base_model.summary()

Downloading data from https://storage.googleapis.com/tensorflow/keras-applications/xception/xception_weights_tf_dim_ordering_tf_kernels_notop.h5

83689472/83683744 [==============================] - 0s 0us/step

83697664/83683744 [==============================] - 0s 0us/step

Model: "xception"

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

input_1 (InputLayer) [(None, 299, 299, 3) 0

__________________________________________________________________________________________________

block1_conv1 (Conv2D) (None, 149, 149, 32) 864 input_1[0][0]

__________________________________________________________________________________________________

block1_conv1_bn (BatchNormaliza (None, 149, 149, 32) 128 block1_conv1[0][0]

__________________________________________________________________________________________________

block1_conv1_act (Activation) (None, 149, 149, 32) 0 block1_conv1_bn[0][0]

__________________________________________________________________________________________________

block1_conv2 (Conv2D) (None, 147, 147, 64) 18432 block1_conv1_act[0][0]

__________________________________________________________________________________________________

block1_conv2_bn (BatchNormaliza (None, 147, 147, 64) 256 block1_conv2[0][0]

__________________________________________________________________________________________________

block1_conv2_act (Activation) (None, 147, 147, 64) 0 block1_conv2_bn[0][0]

__________________________________________________________________________________________________

block2_sepconv1 (SeparableConv2 (None, 147, 147, 128 8768 block1_conv2_act[0][0]

__________________________________________________________________________________________________

block2_sepconv1_bn (BatchNormal (None, 147, 147, 128 512 block2_sepconv1[0][0]

__________________________________________________________________________________________________

block2_sepconv2_act (Activation (None, 147, 147, 128 0 block2_sepconv1_bn[0][0]

__________________________________________________________________________________________________

block2_sepconv2 (SeparableConv2 (None, 147, 147, 128 17536 block2_sepconv2_act[0][0]

__________________________________________________________________________________________________

block2_sepconv2_bn (BatchNormal (None, 147, 147, 128 512 block2_sepconv2[0][0]

__________________________________________________________________________________________________

conv2d (Conv2D) (None, 74, 74, 128) 8192 block1_conv2_act[0][0]

__________________________________________________________________________________________________

block2_pool (MaxPooling2D) (None, 74, 74, 128) 0 block2_sepconv2_bn[0][0]

__________________________________________________________________________________________________

batch_normalization (BatchNorma (None, 74, 74, 128) 512 conv2d[0][0]

__________________________________________________________________________________________________

add (Add) (None, 74, 74, 128) 0 block2_pool[0][0]

batch_normalization[0][0]

__________________________________________________________________________________________________

block3_sepconv1_act (Activation (None, 74, 74, 128) 0 add[0][0]

__________________________________________________________________________________________________

block3_sepconv1 (SeparableConv2 (None, 74, 74, 256) 33920 block3_sepconv1_act[0][0]

__________________________________________________________________________________________________

block3_sepconv1_bn (BatchNormal (None, 74, 74, 256) 1024 block3_sepconv1[0][0]

__________________________________________________________________________________________________

block3_sepconv2_act (Activation (None, 74, 74, 256) 0 block3_sepconv1_bn[0][0]

__________________________________________________________________________________________________

block3_sepconv2 (SeparableConv2 (None, 74, 74, 256) 67840 block3_sepconv2_act[0][0]

__________________________________________________________________________________________________

block3_sepconv2_bn (BatchNormal (None, 74, 74, 256) 1024 block3_sepconv2[0][0]

__________________________________________________________________________________________________

conv2d_1 (Conv2D) (None, 37, 37, 256) 32768 add[0][0]

__________________________________________________________________________________________________

block3_pool (MaxPooling2D) (None, 37, 37, 256) 0 block3_sepconv2_bn[0][0]

__________________________________________________________________________________________________

batch_normalization_1 (BatchNor (None, 37, 37, 256) 1024 conv2d_1[0][0]

__________________________________________________________________________________________________

add_1 (Add) (None, 37, 37, 256) 0 block3_pool[0][0]

batch_normalization_1[0][0]

__________________________________________________________________________________________________

block4_sepconv1_act (Activation (None, 37, 37, 256) 0 add_1[0][0]

__________________________________________________________________________________________________

block4_sepconv1 (SeparableConv2 (None, 37, 37, 728) 188672 block4_sepconv1_act[0][0]

__________________________________________________________________________________________________

block4_sepconv1_bn (BatchNormal (None, 37, 37, 728) 2912 block4_sepconv1[0][0]

__________________________________________________________________________________________________

block4_sepconv2_act (Activation (None, 37, 37, 728) 0 block4_sepconv1_bn[0][0]

__________________________________________________________________________________________________

block4_sepconv2 (SeparableConv2 (None, 37, 37, 728) 536536 block4_sepconv2_act[0][0]

__________________________________________________________________________________________________

block4_sepconv2_bn (BatchNormal (None, 37, 37, 728) 2912 block4_sepconv2[0][0]

__________________________________________________________________________________________________

conv2d_2 (Conv2D) (None, 19, 19, 728) 186368 add_1[0][0]

__________________________________________________________________________________________________

block4_pool (MaxPooling2D) (None, 19, 19, 728) 0 block4_sepconv2_bn[0][0]

__________________________________________________________________________________________________

batch_normalization_2 (BatchNor (None, 19, 19, 728) 2912 conv2d_2[0][0]

__________________________________________________________________________________________________

add_2 (Add) (None, 19, 19, 728) 0 block4_pool[0][0]

batch_normalization_2[0][0]

__________________________________________________________________________________________________

block5_sepconv1_act (Activation (None, 19, 19, 728) 0 add_2[0][0]

__________________________________________________________________________________________________

block5_sepconv1 (SeparableConv2 (None, 19, 19, 728) 536536 block5_sepconv1_act[0][0]

__________________________________________________________________________________________________

block5_sepconv1_bn (BatchNormal (None, 19, 19, 728) 2912 block5_sepconv1[0][0]

__________________________________________________________________________________________________

block5_sepconv2_act (Activation (None, 19, 19, 728) 0 block5_sepconv1_bn[0][0]

__________________________________________________________________________________________________

block5_sepconv2 (SeparableConv2 (None, 19, 19, 728) 536536 block5_sepconv2_act[0][0]

__________________________________________________________________________________________________

block5_sepconv2_bn (BatchNormal (None, 19, 19, 728) 2912 block5_sepconv2[0][0]

__________________________________________________________________________________________________

block5_sepconv3_act (Activation (None, 19, 19, 728) 0 block5_sepconv2_bn[0][0]

__________________________________________________________________________________________________

block5_sepconv3 (SeparableConv2 (None, 19, 19, 728) 536536 block5_sepconv3_act[0][0]

__________________________________________________________________________________________________

block5_sepconv3_bn (BatchNormal (None, 19, 19, 728) 2912 block5_sepconv3[0][0]

__________________________________________________________________________________________________

add_3 (Add) (None, 19, 19, 728) 0 block5_sepconv3_bn[0][0]

add_2[0][0]

__________________________________________________________________________________________________

block6_sepconv1_act (Activation (None, 19, 19, 728) 0 add_3[0][0]

__________________________________________________________________________________________________

block6_sepconv1 (SeparableConv2 (None, 19, 19, 728) 536536 block6_sepconv1_act[0][0]

__________________________________________________________________________________________________

block6_sepconv1_bn (BatchNormal (None, 19, 19, 728) 2912 block6_sepconv1[0][0]

__________________________________________________________________________________________________

block6_sepconv2_act (Activation (None, 19, 19, 728) 0 block6_sepconv1_bn[0][0]

__________________________________________________________________________________________________

block6_sepconv2 (SeparableConv2 (None, 19, 19, 728) 536536 block6_sepconv2_act[0][0]

__________________________________________________________________________________________________

block6_sepconv2_bn (BatchNormal (None, 19, 19, 728) 2912 block6_sepconv2[0][0]

__________________________________________________________________________________________________

block6_sepconv3_act (Activation (None, 19, 19, 728) 0 block6_sepconv2_bn[0][0]

__________________________________________________________________________________________________

block6_sepconv3 (SeparableConv2 (None, 19, 19, 728) 536536 block6_sepconv3_act[0][0]

__________________________________________________________________________________________________

block6_sepconv3_bn (BatchNormal (None, 19, 19, 728) 2912 block6_sepconv3[0][0]

__________________________________________________________________________________________________

add_4 (Add) (None, 19, 19, 728) 0 block6_sepconv3_bn[0][0]

add_3[0][0]

__________________________________________________________________________________________________

block7_sepconv1_act (Activation (None, 19, 19, 728) 0 add_4[0][0]

__________________________________________________________________________________________________

block7_sepconv1 (SeparableConv2 (None, 19, 19, 728) 536536 block7_sepconv1_act[0][0]

__________________________________________________________________________________________________

block7_sepconv1_bn (BatchNormal (None, 19, 19, 728) 2912 block7_sepconv1[0][0]

__________________________________________________________________________________________________

block7_sepconv2_act (Activation (None, 19, 19, 728) 0 block7_sepconv1_bn[0][0]

__________________________________________________________________________________________________

block7_sepconv2 (SeparableConv2 (None, 19, 19, 728) 536536 block7_sepconv2_act[0][0]

__________________________________________________________________________________________________

block7_sepconv2_bn (BatchNormal (None, 19, 19, 728) 2912 block7_sepconv2[0][0]

__________________________________________________________________________________________________

block7_sepconv3_act (Activation (None, 19, 19, 728) 0 block7_sepconv2_bn[0][0]

__________________________________________________________________________________________________

block7_sepconv3 (SeparableConv2 (None, 19, 19, 728) 536536 block7_sepconv3_act[0][0]

__________________________________________________________________________________________________

block7_sepconv3_bn (BatchNormal (None, 19, 19, 728) 2912 block7_sepconv3[0][0]

__________________________________________________________________________________________________

add_5 (Add) (None, 19, 19, 728) 0 block7_sepconv3_bn[0][0]

add_4[0][0]

__________________________________________________________________________________________________

block8_sepconv1_act (Activation (None, 19, 19, 728) 0 add_5[0][0]

__________________________________________________________________________________________________

block8_sepconv1 (SeparableConv2 (None, 19, 19, 728) 536536 block8_sepconv1_act[0][0]

__________________________________________________________________________________________________

block8_sepconv1_bn (BatchNormal (None, 19, 19, 728) 2912 block8_sepconv1[0][0]

__________________________________________________________________________________________________

block8_sepconv2_act (Activation (None, 19, 19, 728) 0 block8_sepconv1_bn[0][0]

__________________________________________________________________________________________________

block8_sepconv2 (SeparableConv2 (None, 19, 19, 728) 536536 block8_sepconv2_act[0][0]

__________________________________________________________________________________________________

block8_sepconv2_bn (BatchNormal (None, 19, 19, 728) 2912 block8_sepconv2[0][0]

__________________________________________________________________________________________________

block8_sepconv3_act (Activation (None, 19, 19, 728) 0 block8_sepconv2_bn[0][0]

__________________________________________________________________________________________________

block8_sepconv3 (SeparableConv2 (None, 19, 19, 728) 536536 block8_sepconv3_act[0][0]

__________________________________________________________________________________________________

block8_sepconv3_bn (BatchNormal (None, 19, 19, 728) 2912 block8_sepconv3[0][0]

__________________________________________________________________________________________________

add_6 (Add) (None, 19, 19, 728) 0 block8_sepconv3_bn[0][0]

add_5[0][0]

__________________________________________________________________________________________________

block9_sepconv1_act (Activation (None, 19, 19, 728) 0 add_6[0][0]

__________________________________________________________________________________________________

block9_sepconv1 (SeparableConv2 (None, 19, 19, 728) 536536 block9_sepconv1_act[0][0]

__________________________________________________________________________________________________

block9_sepconv1_bn (BatchNormal (None, 19, 19, 728) 2912 block9_sepconv1[0][0]

__________________________________________________________________________________________________

block9_sepconv2_act (Activation (None, 19, 19, 728) 0 block9_sepconv1_bn[0][0]

__________________________________________________________________________________________________

block9_sepconv2 (SeparableConv2 (None, 19, 19, 728) 536536 block9_sepconv2_act[0][0]

__________________________________________________________________________________________________

block9_sepconv2_bn (BatchNormal (None, 19, 19, 728) 2912 block9_sepconv2[0][0]

__________________________________________________________________________________________________

block9_sepconv3_act (Activation (None, 19, 19, 728) 0 block9_sepconv2_bn[0][0]

__________________________________________________________________________________________________

block9_sepconv3 (SeparableConv2 (None, 19, 19, 728) 536536 block9_sepconv3_act[0][0]

__________________________________________________________________________________________________

block9_sepconv3_bn (BatchNormal (None, 19, 19, 728) 2912 block9_sepconv3[0][0]

__________________________________________________________________________________________________

add_7 (Add) (None, 19, 19, 728) 0 block9_sepconv3_bn[0][0]

add_6[0][0]

__________________________________________________________________________________________________

block10_sepconv1_act (Activatio (None, 19, 19, 728) 0 add_7[0][0]

__________________________________________________________________________________________________

block10_sepconv1 (SeparableConv (None, 19, 19, 728) 536536 block10_sepconv1_act[0][0]

__________________________________________________________________________________________________

block10_sepconv1_bn (BatchNorma (None, 19, 19, 728) 2912 block10_sepconv1[0][0]

__________________________________________________________________________________________________

block10_sepconv2_act (Activatio (None, 19, 19, 728) 0 block10_sepconv1_bn[0][0]

__________________________________________________________________________________________________

block10_sepconv2 (SeparableConv (None, 19, 19, 728) 536536 block10_sepconv2_act[0][0]

__________________________________________________________________________________________________

block10_sepconv2_bn (BatchNorma (None, 19, 19, 728) 2912 block10_sepconv2[0][0]

__________________________________________________________________________________________________

block10_sepconv3_act (Activatio (None, 19, 19, 728) 0 block10_sepconv2_bn[0][0]

__________________________________________________________________________________________________

block10_sepconv3 (SeparableConv (None, 19, 19, 728) 536536 block10_sepconv3_act[0][0]

__________________________________________________________________________________________________

block10_sepconv3_bn (BatchNorma (None, 19, 19, 728) 2912 block10_sepconv3[0][0]

__________________________________________________________________________________________________

add_8 (Add) (None, 19, 19, 728) 0 block10_sepconv3_bn[0][0]

add_7[0][0]

__________________________________________________________________________________________________

block11_sepconv1_act (Activatio (None, 19, 19, 728) 0 add_8[0][0]

__________________________________________________________________________________________________

block11_sepconv1 (SeparableConv (None, 19, 19, 728) 536536 block11_sepconv1_act[0][0]

__________________________________________________________________________________________________

block11_sepconv1_bn (BatchNorma (None, 19, 19, 728) 2912 block11_sepconv1[0][0]

__________________________________________________________________________________________________

block11_sepconv2_act (Activatio (None, 19, 19, 728) 0 block11_sepconv1_bn[0][0]

__________________________________________________________________________________________________

block11_sepconv2 (SeparableConv (None, 19, 19, 728) 536536 block11_sepconv2_act[0][0]

__________________________________________________________________________________________________

block11_sepconv2_bn (BatchNorma (None, 19, 19, 728) 2912 block11_sepconv2[0][0]

__________________________________________________________________________________________________

block11_sepconv3_act (Activatio (None, 19, 19, 728) 0 block11_sepconv2_bn[0][0]

__________________________________________________________________________________________________

block11_sepconv3 (SeparableConv (None, 19, 19, 728) 536536 block11_sepconv3_act[0][0]

__________________________________________________________________________________________________

block11_sepconv3_bn (BatchNorma (None, 19, 19, 728) 2912 block11_sepconv3[0][0]

__________________________________________________________________________________________________

add_9 (Add) (None, 19, 19, 728) 0 block11_sepconv3_bn[0][0]

add_8[0][0]

__________________________________________________________________________________________________

block12_sepconv1_act (Activatio (None, 19, 19, 728) 0 add_9[0][0]

__________________________________________________________________________________________________

block12_sepconv1 (SeparableConv (None, 19, 19, 728) 536536 block12_sepconv1_act[0][0]

__________________________________________________________________________________________________

block12_sepconv1_bn (BatchNorma (None, 19, 19, 728) 2912 block12_sepconv1[0][0]

__________________________________________________________________________________________________

block12_sepconv2_act (Activatio (None, 19, 19, 728) 0 block12_sepconv1_bn[0][0]

__________________________________________________________________________________________________

block12_sepconv2 (SeparableConv (None, 19, 19, 728) 536536 block12_sepconv2_act[0][0]

__________________________________________________________________________________________________

block12_sepconv2_bn (BatchNorma (None, 19, 19, 728) 2912 block12_sepconv2[0][0]

__________________________________________________________________________________________________

block12_sepconv3_act (Activatio (None, 19, 19, 728) 0 block12_sepconv2_bn[0][0]

__________________________________________________________________________________________________

block12_sepconv3 (SeparableConv (None, 19, 19, 728) 536536 block12_sepconv3_act[0][0]

__________________________________________________________________________________________________

block12_sepconv3_bn (BatchNorma (None, 19, 19, 728) 2912 block12_sepconv3[0][0]

__________________________________________________________________________________________________

add_10 (Add) (None, 19, 19, 728) 0 block12_sepconv3_bn[0][0]

add_9[0][0]

__________________________________________________________________________________________________

block13_sepconv1_act (Activatio (None, 19, 19, 728) 0 add_10[0][0]

__________________________________________________________________________________________________

block13_sepconv1 (SeparableConv (None, 19, 19, 728) 536536 block13_sepconv1_act[0][0]

__________________________________________________________________________________________________

block13_sepconv1_bn (BatchNorma (None, 19, 19, 728) 2912 block13_sepconv1[0][0]

__________________________________________________________________________________________________

block13_sepconv2_act (Activatio (None, 19, 19, 728) 0 block13_sepconv1_bn[0][0]

__________________________________________________________________________________________________

block13_sepconv2 (SeparableConv (None, 19, 19, 1024) 752024 block13_sepconv2_act[0][0]

__________________________________________________________________________________________________

block13_sepconv2_bn (BatchNorma (None, 19, 19, 1024) 4096 block13_sepconv2[0][0]

__________________________________________________________________________________________________

conv2d_3 (Conv2D) (None, 10, 10, 1024) 745472 add_10[0][0]

__________________________________________________________________________________________________

block13_pool (MaxPooling2D) (None, 10, 10, 1024) 0 block13_sepconv2_bn[0][0]

__________________________________________________________________________________________________

batch_normalization_3 (BatchNor (None, 10, 10, 1024) 4096 conv2d_3[0][0]

__________________________________________________________________________________________________

add_11 (Add) (None, 10, 10, 1024) 0 block13_pool[0][0]

batch_normalization_3[0][0]

__________________________________________________________________________________________________

block14_sepconv1 (SeparableConv (None, 10, 10, 1536) 1582080 add_11[0][0]

__________________________________________________________________________________________________

block14_sepconv1_bn (BatchNorma (None, 10, 10, 1536) 6144 block14_sepconv1[0][0]

__________________________________________________________________________________________________

block14_sepconv1_act (Activatio (None, 10, 10, 1536) 0 block14_sepconv1_bn[0][0]

__________________________________________________________________________________________________

block14_sepconv2 (SeparableConv (None, 10, 10, 2048) 3159552 block14_sepconv1_act[0][0]

__________________________________________________________________________________________________

block14_sepconv2_bn (BatchNorma (None, 10, 10, 2048) 8192 block14_sepconv2[0][0]

__________________________________________________________________________________________________

block14_sepconv2_act (Activatio (None, 10, 10, 2048) 0 block14_sepconv2_bn[0][0]

__________________________________________________________________________________________________

global_average_pooling2d (Globa (None, 2048) 0 block14_sepconv2_act[0][0]

==================================================================================================

Total params: 20,861,480

Trainable params: 0

Non-trainable params: 20,861,480

__________________________________________________________________________________________________

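Trainable params: 0 confirms that the weights are frozen.

Adding fully connected layers after the last layer of Xception

To output the popularity score, we add new layers after the last layer of Xception and build the model. The output layer has a single neuron with a linear activation, which makes this a regression model.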
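It helps to estimate how many weights the new layers will add before looking at the model summary. The sketch below assumes a head made of a 128-unit Dense layer followed by a single-unit output on top of the 2048-dimensional pooled Xception features; dense_params is a hypothetical helper written for this calculation.

```python
def dense_params(n_inputs, n_units):
    """Parameter count of a fully connected layer: one weight per input-unit pair plus one bias per unit."""
    return n_inputs * n_units + n_units

# The pooled Xception output has 2048 features (see global_average_pooling2d in the summary).
hidden_params = dense_params(2048, 128)
output_params = dense_params(128, 1)

# Dropout has no weights, so it adds nothing to the count.
trainable_total = hidden_params + output_params

# Frozen Xception parameters, taken from the summary above.
frozen_total = 20_861_480

print(hidden_params, output_params, trainable_total, frozen_total + trainable_total)
```

Only these added weights are updated during training, a small fraction of the roughly 20.9 million frozen Xception parameters.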

# Add new fully connected layers
x = base_model.output
x = Dense(128, activation='relu')(x)
x = Dropout(0.2)(x)

# Output: a single linear unit for regression
predictions = Dense(1, activation='linear')(x)

# Define the new model
xcept_model = Model(inputs=base_model.input,
                    outputs=predictions,
                    name="new_xception_model")

# Mean squared error is the loss for this regression task
xcept_model.compile(optimizer="adam",
                    loss="mean_squared_error")

xcept_model.summary()

Model: "new_xception_model"

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

input_1 (InputLayer) [(None, 299, 299, 3) 0

__________________________________________________________________________________________________

block1_conv1 (Conv2D) (None, 149, 149, 32) 864 input_1[0][0]

__________________________________________________________________________________________________

block1_conv1_bn (BatchNormaliza (None, 149, 149, 32) 128 block1_conv1[0][0]

__________________________________________________________________________________________________

block1_conv1_act (Activation) (None, 149, 149, 32) 0 block1_conv1_bn[0][0]

__________________________________________________________________________________________________

block1_conv2 (Conv2D) (None, 147, 147, 64) 18432 block1_conv1_act[0][0]

__________________________________________________________________________________________________

block1_conv2_bn (BatchNormaliza (None, 147, 147, 64) 256 block1_conv2[0][0]

__________________________________________________________________________________________________

block1_conv2_act (Activation) (None, 147, 147, 64) 0 block1_conv2_bn[0][0]

__________________________________________________________________________________________________

block2_sepconv1 (SeparableConv2 (None, 147, 147, 128 8768 block1_conv2_act[0][0]

__________________________________________________________________________________________________

block2_sepconv1_bn (BatchNormal (None, 147, 147, 128 512 block2_sepconv1[0][0]

__________________________________________________________________________________________________

block2_sepconv2_act (Activation (None, 147, 147, 128 0 block2_sepconv1_bn[0][0]

__________________________________________________________________________________________________

block2_sepconv2 (SeparableConv2 (None, 147, 147, 128 17536 block2_sepconv2_act[0][0]

__________________________________________________________________________________________________

block2_sepconv2_bn (BatchNormal (None, 147, 147, 128 512 block2_sepconv2[0][0]

__________________________________________________________________________________________________

conv2d (Conv2D) (None, 74, 74, 128) 8192 block1_conv2_act[0][0]

__________________________________________________________________________________________________

block2_pool (MaxPooling2D) (None, 74, 74, 128) 0 block2_sepconv2_bn[0][0]

__________________________________________________________________________________________________

batch_normalization (BatchNorma (None, 74, 74, 128) 512 conv2d[0][0]

__________________________________________________________________________________________________

add (Add) (None, 74, 74, 128) 0 block2_pool[0][0]

batch_normalization[0][0]

__________________________________________________________________________________________________

block3_sepconv1_act (Activation (None, 74, 74, 128) 0 add[0][0]

__________________________________________________________________________________________________

block3_sepconv1 (SeparableConv2 (None, 74, 74, 256) 33920 block3_sepconv1_act[0][0]

__________________________________________________________________________________________________

block3_sepconv1_bn (BatchNormal (None, 74, 74, 256) 1024 block3_sepconv1[0][0]

__________________________________________________________________________________________________

block3_sepconv2_act (Activation (None, 74, 74, 256) 0 block3_sepconv1_bn[0][0]

__________________________________________________________________________________________________

block3_sepconv2 (SeparableConv2 (None, 74, 74, 256) 67840 block3_sepconv2_act[0][0]

__________________________________________________________________________________________________

block3_sepconv2_bn (BatchNormal (None, 74, 74, 256) 1024 block3_sepconv2[0][0]

__________________________________________________________________________________________________

conv2d_1 (Conv2D) (None, 37, 37, 256) 32768 add[0][0]

__________________________________________________________________________________________________

block3_pool (MaxPooling2D) (None, 37, 37, 256) 0 block3_sepconv2_bn[0][0]

__________________________________________________________________________________________________

batch_normalization_1 (BatchNor (None, 37, 37, 256) 1024 conv2d_1[0][0]

__________________________________________________________________________________________________

add_1 (Add) (None, 37, 37, 256) 0 block3_pool[0][0]

batch_normalization_1[0][0]

__________________________________________________________________________________________________

block4_sepconv1_act (Activation (None, 37, 37, 256) 0 add_1[0][0]

__________________________________________________________________________________________________

block4_sepconv1 (SeparableConv2 (None, 37, 37, 728) 188672 block4_sepconv1_act[0][0]

__________________________________________________________________________________________________

block4_sepconv1_bn (BatchNormal (None, 37, 37, 728) 2912 block4_sepconv1[0][0]

__________________________________________________________________________________________________

block4_sepconv2_act (Activation (None, 37, 37, 728) 0 block4_sepconv1_bn[0][0]

__________________________________________________________________________________________________

block4_sepconv2 (SeparableConv2 (None, 37, 37, 728) 536536 block4_sepconv2_act[0][0]

__________________________________________________________________________________________________

block4_sepconv2_bn (BatchNormal (None, 37, 37, 728) 2912 block4_sepconv2[0][0]

__________________________________________________________________________________________________

conv2d_2 (Conv2D) (None, 19, 19, 728) 186368 add_1[0][0]

__________________________________________________________________________________________________

block4_pool (MaxPooling2D) (None, 19, 19, 728) 0 block4_sepconv2_bn[0][0]

__________________________________________________________________________________________________

batch_normalization_2 (BatchNor (None, 19, 19, 728) 2912 conv2d_2[0][0]

__________________________________________________________________________________________________

add_2 (Add) (None, 19, 19, 728) 0 block4_pool[0][0]

batch_normalization_2[0][0]

__________________________________________________________________________________________________

block5_sepconv1_act (Activation (None, 19, 19, 728) 0 add_2[0][0]

__________________________________________________________________________________________________

block5_sepconv1 (SeparableConv2 (None, 19, 19, 728) 536536 block5_sepconv1_act[0][0]

__________________________________________________________________________________________________

block5_sepconv1_bn (BatchNormal (None, 19, 19, 728) 2912 block5_sepconv1[0][0]

__________________________________________________________________________________________________

block5_sepconv2_act (Activation (None, 19, 19, 728) 0 block5_sepconv1_bn[0][0]

__________________________________________________________________________________________________

block5_sepconv2 (SeparableConv2 (None, 19, 19, 728) 536536 block5_sepconv2_act[0][0]

__________________________________________________________________________________________________

block5_sepconv2_bn (BatchNormal (None, 19, 19, 728) 2912 block5_sepconv2[0][0]

__________________________________________________________________________________________________

block5_sepconv3_act (Activation (None, 19, 19, 728) 0 block5_sepconv2_bn[0][0]

__________________________________________________________________________________________________

block5_sepconv3 (SeparableConv2 (None, 19, 19, 728) 536536 block5_sepconv3_act[0][0]

__________________________________________________________________________________________________

block5_sepconv3_bn (BatchNormal (None, 19, 19, 728) 2912 block5_sepconv3[0][0]

__________________________________________________________________________________________________

add_3 (Add) (None, 19, 19, 728) 0 block5_sepconv3_bn[0][0]

add_2[0][0]

__________________________________________________________________________________________________

block6_sepconv1_act (Activation (None, 19, 19, 728) 0 add_3[0][0]

__________________________________________________________________________________________________

block6_sepconv1 (SeparableConv2 (None, 19, 19, 728) 536536 block6_sepconv1_act[0][0]

__________________________________________________________________________________________________

block6_sepconv1_bn (BatchNormal (None, 19, 19, 728) 2912 block6_sepconv1[0][0]

__________________________________________________________________________________________________

block6_sepconv2_act (Activation (None, 19, 19, 728) 0 block6_sepconv1_bn[0][0]

__________________________________________________________________________________________________

block6_sepconv2 (SeparableConv2 (None, 19, 19, 728) 536536 block6_sepconv2_act[0][0]

__________________________________________________________________________________________________

block6_sepconv2_bn (BatchNormal (None, 19, 19, 728) 2912 block6_sepconv2[0][0]

__________________________________________________________________________________________________

block6_sepconv3_act (Activation (None, 19, 19, 728) 0 block6_sepconv2_bn[0][0]

__________________________________________________________________________________________________

block6_sepconv3 (SeparableConv2 (None, 19, 19, 728) 536536 block6_sepconv3_act[0][0]

__________________________________________________________________________________________________

block6_sepconv3_bn (BatchNormal (None, 19, 19, 728) 2912 block6_sepconv3[0][0]

__________________________________________________________________________________________________

add_4 (Add) (None, 19, 19, 728) 0 block6_sepconv3_bn[0][0]

add_3[0][0]

__________________________________________________________________________________________________

block7_sepconv1_act (Activation (None, 19, 19, 728) 0 add_4[0][0]

__________________________________________________________________________________________________

block7_sepconv1 (SeparableConv2 (None, 19, 19, 728) 536536 block7_sepconv1_act[0][0]

__________________________________________________________________________________________________

block7_sepconv1_bn (BatchNormal (None, 19, 19, 728) 2912 block7_sepconv1[0][0]

__________________________________________________________________________________________________

block7_sepconv2_act (Activation (None, 19, 19, 728) 0 block7_sepconv1_bn[0][0]

__________________________________________________________________________________________________

block7_sepconv2 (SeparableConv2 (None, 19, 19, 728) 536536 block7_sepconv2_act[0][0]

__________________________________________________________________________________________________

block7_sepconv2_bn (BatchNormal (None, 19, 19, 728) 2912 block7_sepconv2[0][0]

__________________________________________________________________________________________________

block7_sepconv3_act (Activation (None, 19, 19, 728) 0 block7_sepconv2_bn[0][0]

__________________________________________________________________________________________________

block7_sepconv3 (SeparableConv2 (None, 19, 19, 728) 536536 block7_sepconv3_act[0][0]

__________________________________________________________________________________________________

block7_sepconv3_bn (BatchNormal (None, 19, 19, 728) 2912 block7_sepconv3[0][0]

__________________________________________________________________________________________________

add_5 (Add) (None, 19, 19, 728) 0 block7_sepconv3_bn[0][0]

add_4[0][0]

__________________________________________________________________________________________________

block8_sepconv1_act (Activation (None, 19, 19, 728) 0 add_5[0][0]

__________________________________________________________________________________________________

block8_sepconv1 (SeparableConv2 (None, 19, 19, 728) 536536 block8_sepconv1_act[0][0]

__________________________________________________________________________________________________

block8_sepconv1_bn (BatchNormal (None, 19, 19, 728) 2912 block8_sepconv1[0][0]

__________________________________________________________________________________________________

block8_sepconv2_act (Activation (None, 19, 19, 728) 0 block8_sepconv1_bn[0][0]

__________________________________________________________________________________________________

block8_sepconv2 (SeparableConv2 (None, 19, 19, 728) 536536 block8_sepconv2_act[0][0]

__________________________________________________________________________________________________

block8_sepconv2_bn (BatchNormal (None, 19, 19, 728) 2912 block8_sepconv2[0][0]

__________________________________________________________________________________________________

block8_sepconv3_act (Activation (None, 19, 19, 728) 0 block8_sepconv2_bn[0][0]

__________________________________________________________________________________________________

block8_sepconv3 (SeparableConv2 (None, 19, 19, 728) 536536 block8_sepconv3_act[0][0]

__________________________________________________________________________________________________

block8_sepconv3_bn (BatchNormal (None, 19, 19, 728) 2912 block8_sepconv3[0][0]

__________________________________________________________________________________________________

add_6 (Add) (None, 19, 19, 728) 0 block8_sepconv3_bn[0][0]

add_5[0][0]

__________________________________________________________________________________________________

block9_sepconv1_act (Activation (None, 19, 19, 728) 0 add_6[0][0]

__________________________________________________________________________________________________

block9_sepconv1 (SeparableConv2 (None, 19, 19, 728) 536536 block9_sepconv1_act[0][0]

__________________________________________________________________________________________________

block9_sepconv1_bn (BatchNormal (None, 19, 19, 728) 2912 block9_sepconv1[0][0]

__________________________________________________________________________________________________

block9_sepconv2_act (Activation (None, 19, 19, 728) 0 block9_sepconv1_bn[0][0]

__________________________________________________________________________________________________

block9_sepconv2 (SeparableConv2 (None, 19, 19, 728) 536536 block9_sepconv2_act[0][0]

__________________________________________________________________________________________________

block9_sepconv2_bn (BatchNormal (None, 19, 19, 728) 2912 block9_sepconv2[0][0]

__________________________________________________________________________________________________

block9_sepconv3_act (Activation (None, 19, 19, 728) 0 block9_sepconv2_bn[0][0]

__________________________________________________________________________________________________

block9_sepconv3 (SeparableConv2 (None, 19, 19, 728) 536536 block9_sepconv3_act[0][0]

__________________________________________________________________________________________________

block9_sepconv3_bn (BatchNormal (None, 19, 19, 728) 2912 block9_sepconv3[0][0]

__________________________________________________________________________________________________

add_7 (Add) (None, 19, 19, 728) 0 block9_sepconv3_bn[0][0]

add_6[0][0]

__________________________________________________________________________________________________

block10_sepconv1_act (Activatio (None, 19, 19, 728) 0 add_7[0][0]

__________________________________________________________________________________________________

block10_sepconv1 (SeparableConv (None, 19, 19, 728) 536536 block10_sepconv1_act[0][0]

__________________________________________________________________________________________________

block10_sepconv1_bn (BatchNorma (None, 19, 19, 728) 2912 block10_sepconv1[0][0]

__________________________________________________________________________________________________

block10_sepconv2_act (Activatio (None, 19, 19, 728) 0 block10_sepconv1_bn[0][0]

__________________________________________________________________________________________________

block10_sepconv2 (SeparableConv (None, 19, 19, 728) 536536 block10_sepconv2_act[0][0]

__________________________________________________________________________________________________

block10_sepconv2_bn (BatchNorma (None, 19, 19, 728) 2912 block10_sepconv2[0][0]

__________________________________________________________________________________________________

block10_sepconv3_act (Activatio (None, 19, 19, 728) 0 block10_sepconv2_bn[0][0]

__________________________________________________________________________________________________

block10_sepconv3 (SeparableConv (None, 19, 19, 728) 536536 block10_sepconv3_act[0][0]

__________________________________________________________________________________________________

block10_sepconv3_bn (BatchNorma (None, 19, 19, 728) 2912 block10_sepconv3[0][0]

__________________________________________________________________________________________________

add_8 (Add) (None, 19, 19, 728) 0 block10_sepconv3_bn[0][0]

add_7[0][0]

__________________________________________________________________________________________________

block11_sepconv1_act (Activatio (None, 19, 19, 728) 0 add_8[0][0]

__________________________________________________________________________________________________

block11_sepconv1 (SeparableConv (None, 19, 19, 728) 536536 block11_sepconv1_act[0][0]

__________________________________________________________________________________________________

block11_sepconv1_bn (BatchNorma (None, 19, 19, 728) 2912 block11_sepconv1[0][0]

__________________________________________________________________________________________________

block11_sepconv2_act (Activatio (None, 19, 19, 728) 0 block11_sepconv1_bn[0][0]

__________________________________________________________________________________________________

block11_sepconv2 (SeparableConv (None, 19, 19, 728) 536536 block11_sepconv2_act[0][0]

__________________________________________________________________________________________________

block11_sepconv2_bn (BatchNorma (None, 19, 19, 728) 2912 block11_sepconv2[0][0]

__________________________________________________________________________________________________

block11_sepconv3_act (Activatio (None, 19, 19, 728) 0 block11_sepconv2_bn[0][0]

__________________________________________________________________________________________________

block11_sepconv3 (SeparableConv (None, 19, 19, 728) 536536 block11_sepconv3_act[0][0]

__________________________________________________________________________________________________

block11_sepconv3_bn (BatchNorma (None, 19, 19, 728) 2912 block11_sepconv3[0][0]

__________________________________________________________________________________________________

add_9 (Add) (None, 19, 19, 728) 0 block11_sepconv3_bn[0][0]

add_8[0][0]

__________________________________________________________________________________________________

block12_sepconv1_act (Activatio (None, 19, 19, 728) 0 add_9[0][0]

__________________________________________________________________________________________________

block12_sepconv1 (SeparableConv (None, 19, 19, 728) 536536 block12_sepconv1_act[0][0]

__________________________________________________________________________________________________

block12_sepconv1_bn (BatchNorma (None, 19, 19, 728) 2912 block12_sepconv1[0][0]

__________________________________________________________________________________________________

block12_sepconv2_act (Activatio (None, 19, 19, 728) 0 block12_sepconv1_bn[0][0]

__________________________________________________________________________________________________

block12_sepconv2 (SeparableConv (None, 19, 19, 728) 536536 block12_sepconv2_act[0][0]

__________________________________________________________________________________________________

block12_sepconv2_bn (BatchNorma (None, 19, 19, 728) 2912 block12_sepconv2[0][0]

__________________________________________________________________________________________________

block12_sepconv3_act (Activatio (None, 19, 19, 728) 0 block12_sepconv2_bn[0][0]

__________________________________________________________________________________________________

block12_sepconv3 (SeparableConv (None, 19, 19, 728) 536536 block12_sepconv3_act[0][0]

__________________________________________________________________________________________________

block12_sepconv3_bn (BatchNorma (None, 19, 19, 728) 2912 block12_sepconv3[0][0]

__________________________________________________________________________________________________

add_10 (Add) (None, 19, 19, 728) 0 block12_sepconv3_bn[0][0]

add_9[0][0]

__________________________________________________________________________________________________

block13_sepconv1_act (Activatio (None, 19, 19, 728) 0 add_10[0][0]

__________________________________________________________________________________________________

block13_sepconv1 (SeparableConv (None, 19, 19, 728) 536536 block13_sepconv1_act[0][0]

__________________________________________________________________________________________________

block13_sepconv1_bn (BatchNorma (None, 19, 19, 728) 2912 block13_sepconv1[0][0]

__________________________________________________________________________________________________

block13_sepconv2_act (Activatio (None, 19, 19, 728) 0 block13_sepconv1_bn[0][0]

__________________________________________________________________________________________________

block13_sepconv2 (SeparableConv (None, 19, 19, 1024) 752024 block13_sepconv2_act[0][0]

__________________________________________________________________________________________________

block13_sepconv2_bn (BatchNorma (None, 19, 19, 1024) 4096 block13_sepconv2[0][0]

__________________________________________________________________________________________________

conv2d_3 (Conv2D) (None, 10, 10, 1024) 745472 add_10[0][0]

__________________________________________________________________________________________________

block13_pool (MaxPooling2D) (None, 10, 10, 1024) 0 block13_sepconv2_bn[0][0]

__________________________________________________________________________________________________

batch_normalization_3 (BatchNor (None, 10, 10, 1024) 4096 conv2d_3[0][0]

__________________________________________________________________________________________________

add_11 (Add) (None, 10, 10, 1024) 0 block13_pool[0][0]

batch_normalization_3[0][0]

__________________________________________________________________________________________________

block14_sepconv1 (SeparableConv (None, 10, 10, 1536) 1582080 add_11[0][0]

__________________________________________________________________________________________________

block14_sepconv1_bn (BatchNorma (None, 10, 10, 1536) 6144 block14_sepconv1[0][0]

__________________________________________________________________________________________________

block14_sepconv1_act (Activatio (None, 10, 10, 1536) 0 block14_sepconv1_bn[0][0]

__________________________________________________________________________________________________

block14_sepconv2 (SeparableConv (None, 10, 10, 2048) 3159552 block14_sepconv1_act[0][0]

__________________________________________________________________________________________________

block14_sepconv2_bn (BatchNorma (None, 10, 10, 2048) 8192 block14_sepconv2[0][0]

__________________________________________________________________________________________________

block14_sepconv2_act (Activatio (None, 10, 10, 2048) 0 block14_sepconv2_bn[0][0]

__________________________________________________________________________________________________

global_average_pooling2d (Globa (None, 2048) 0 block14_sepconv2_act[0][0]

__________________________________________________________________________________________________

dense (Dense) (None, 128) 262272 global_average_pooling2d[0][0]

__________________________________________________________________________________________________

dropout (Dropout) (None, 128) 0 dense[0][0]

__________________________________________________________________________________________________

dense_1 (Dense) (None, 1) 129 dropout[0][0]

==================================================================================================

Total params: 21,123,881

Trainable params: 262,401

Non-trainable params: 20,861,480

__________________________________________________________________________________________________

The parameters of the added layers are trainable: `Trainable params: 262,401`.
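The counts in the summary can be checked by hand. The sketch below assumes, as the summary shows, that the new head receives the 2048-dimensional output of `global_average_pooling2d`; the helper name `dense_params` is hypothetical and only used for this illustration.

```python
def dense_params(n_inputs: int, n_units: int) -> int:
    """Weights plus biases of a fully connected layer."""
    return n_inputs * n_units + n_units


hidden = dense_params(2048, 128)  # dense: 2048 pooled features -> 128 units
output = dense_params(128, 1)     # dense_1: 128 units -> 1 linear output
trainable = hidden + output

frozen = 20_861_480               # Xception base, reported as non-trainable
print(hidden, output, trainable, frozen + trainable)
```

The two layer counts match the `dense` and `dense_1` rows, and adding the frozen base gives the reported total of 21,123,881.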

4. Training the model

```python
%%time

# tf.keras.backend.clear_session()

history = xcept_model.fit(train_generator,
                          steps_per_epoch=STEP_SIZE_TRAIN,
                          epochs=50,
                          verbose=2,
                          callbacks=[early_stopping],
                          # Evaluate on the validation data after each epoch
                          validation_data=valid_generator,
                          validation_steps=STEP_SIZE_VALID)
```

```

2021-11-08 23:30:44.332554: I tensorflow/compiler/mlir/mlir_graph_optimization_pass.cc:185] None of the MLIR Optimization Passes are enabled (registered 2)

Epoch 1/50

2021-11-08 23:30:49.105402: I tensorflow/stream_executor/cuda/cuda_dnn.cc:369] Loaded cuDNN version 8005

173/173 - 273s - loss: 0.0582 - val_loss: 0.0398

Epoch 2/50

173/173 - 239s - loss: 0.0404 - val_loss: 0.0378

Epoch 3/50

173/173 - 238s - loss: 0.0365 - val_loss: 0.0353

Epoch 4/50

173/173 - 239s - loss: 0.0350 - val_loss: 0.0349

Epoch 5/50

173/173 - 238s - loss: 0.0337 - val_loss: 0.0357

Epoch 6/50

173/173 - 236s - loss: 0.0327 - val_loss: 0.0356

Epoch 7/50

173/173 - 238s - loss: 0.0316 - val_loss: 0.0350

Epoch 8/50

173/173 - 239s - loss: 0.0297 - val_loss: 0.0348

Epoch 9/50

173/173 - 234s - loss: 0.0292 - val_loss: 0.0346

Epoch 10/50

173/173 - 238s - loss: 0.0293 - val_loss: 0.0348

Epoch 11/50

173/173 - 240s - loss: 0.0289 - val_loss: 0.0350

Epoch 12/50

173/173 - 235s - loss: 0.0275 - val_loss: 0.0354

Epoch 13/50

173/173 - 238s - loss: 0.0264 - val_loss: 0.0355

Epoch 14/50

173/173 - 234s - loss: 0.0268 - val_loss: 0.0353

Epoch 15/50

173/173 - 235s - loss: 0.0269 - val_loss: 0.0374

Epoch 16/50

173/173 - 234s - loss: 0.0253 - val_loss: 0.0357

Epoch 17/50

173/173 - 233s - loss: 0.0259 - val_loss: 0.0358

Epoch 18/50

173/173 - 230s - loss: 0.0260 - val_loss: 0.0375

Epoch 19/50

173/173 - 231s - loss: 0.0247 - val_loss: 0.0359

Epoch 20/50

173/173 - 232s - loss: 0.0250 - val_loss: 0.0368

Epoch 21/50

173/173 - 233s - loss: 0.0251 - val_loss: 0.0377

Epoch 22/50

173/173 - 231s - loss: 0.0248 - val_loss: 0.0363

Epoch 23/50

173/173 - 238s - loss: 0.0237 - val_loss: 0.0389

Epoch 24/50

173/173 - 234s - loss: 0.0235 - val_loss: 0.0384

Restoring model weights from the end of the best epoch.

Epoch 00024: early stopping

CPU times: user 1h 30min 22s, sys: 4min, total: 1h 34min 23s

Wall time: 1h 34min 52s

```

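- `epoch`: one epoch is complete once every subset (mini-batch) of the training data has been used for training. For example, with 320 samples and a batch size of 32 there are 10 subsets, and training on all 10 of them makes one epoch.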
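The arithmetic in this example can be sketched as follows. The helper name `batches_per_epoch` is hypothetical, and rounding up is an assumption made here so that a final, smaller batch is also counted; it is not necessarily how `STEP_SIZE_TRAIN` is computed in this article.

```python
import math


def batches_per_epoch(n_samples: int, batch_size: int) -> int:
    """Number of mini-batches needed to see every sample once."""
    return math.ceil(n_samples / batch_size)


print(batches_per_epoch(320, 32))  # 10 subsets, as in the example above
print(batches_per_epoch(330, 32))  # 11: the last batch holds only 10 samples
```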

## Learning curve

```python

fig = plt.figure(figsize=(12, 7))

plt.plot(history.history["loss"],color="#186fb4", linestyle="-.",label="Train")

plt.plot(history.history["val_loss"],color="#186fb4",label="Validation")

plt.legend()

plt.title("MSE loss of Xception augmented model for Pawpularity", fontsize=20, fontweight='bold')

plt.show()

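# Evaluate the model on the validation data.
# evaluate() accepts generators directly; evaluate_generator is deprecated in TF 2.x.

score = xcept_model.evaluate(valid_generator)

# print(score)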

```

<img width="650" alt="image.png" src="https://qiita-image-store.s3.ap-northeast-1.amazonaws.com/0/1835792/782ee16b-0599-e035-55a0-cab9e903359b.png">
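Because the targets were divided by 100 before training, the loss values are MSE on a 0 to 1 scale. As a rough sketch, the best validation loss can be converted to an RMSE on the original 0 to 100 scale as shown below. The `val_loss` list is copied by hand from the training log above; in the notebook, `history.history["val_loss"]` would hold the same values.

```python
import math

# val_loss per epoch, copied from the training log (epochs 1 to 24)
val_loss = [0.0398, 0.0378, 0.0353, 0.0349, 0.0357, 0.0356, 0.0350, 0.0348,
            0.0346, 0.0348, 0.0350, 0.0354, 0.0355, 0.0353, 0.0374, 0.0357,
            0.0358, 0.0375, 0.0359, 0.0368, 0.0377, 0.0363, 0.0389, 0.0384]

best_index = min(range(len(val_loss)), key=val_loss.__getitem__)
best_epoch = best_index + 1          # epochs are numbered from 1
best_mse = val_loss[best_index]

# Undo the division by 100 applied to Pawpularity before training
rmse_0_100 = 100 * math.sqrt(best_mse)
print(best_epoch, best_mse, round(rmse_0_100, 1))
```

The lowest validation loss is reached at epoch 9, which corresponds to an RMSE of roughly 18.6 points on the original score. These are the weights that the log reports as restored when early stopping ends training.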

# 5. Evaluating generalization performance

```python

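# The scatter plot below pairs these predictions with k_y_valid, so test_generator
# is assumed to yield the validation images in their original order (shuffle=False).
xcept_pred = xcept_model.predict(test_generator)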

```

```python

fig = plt.figure(figsize=(12,8))

# Scatter plot of predicted values against true values
plt.scatter(x=xcept_pred,
            y=k_y_valid)

plt.ylabel("Pawpularity real values (k_y_valid)")

plt.xlabel("Predicted values (xcept_pred)")

plt.title("Predicted Pawpularity VS True values with Xception",

fontsize=20, fontweight='bold')

plt.show()

```

<img width="650" alt="image.png" src="https://qiita-image-store.s3.ap-northeast-1.amazonaws.com/0/1835792/c915b316-fb06-16b6-f648-de78327fc662.png">
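Alongside the scatter plot, a single number can summarize the fit. The following is a minimal sketch, assuming the predictions and true values are both on the 0 to 1 scale used for training and are in the same order; the function name `rmse_on_original_scale` and the sample values are made up for illustration and are not results from the model.

```python
import numpy as np


def rmse_on_original_scale(y_true, y_pred, scale=100.0):
    """RMSE after mapping 0-1 targets and predictions back to the 0-100 score."""
    y_true = np.asarray(y_true, dtype=float).ravel()
    # predict() returns an array of shape (N, 1), so flatten it as well
    y_pred = np.asarray(y_pred, dtype=float).ravel()
    return float(np.sqrt(np.mean((scale * (y_true - y_pred)) ** 2)))


# Made-up values for illustration only
y_true = [0.30, 0.80, 0.50]
y_pred = [[0.40], [0.60], [0.50]]
print(rmse_on_original_scale(y_true, y_pred))
```

In the notebook this would be called as `rmse_on_original_scale(k_y_valid, xcept_pred)`, under the same ordering assumption as the scatter plot.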

# 6. Saving the model

```python

xcept_model.save('xception_model.h5')

```

# References

This article was written with reference to the following sources.

- [What is transfer learning? How to do "transfer learning", a promising technique in deep learning](https://ledge.ai/transfer-learning/)

- [A thorough introduction to data augmentation: implementing data augmentation with Python and Keras](https://www.codexa.net/data_augmentation_python_keras/)

- [Pawpularity - EDA - Feature Engineering - Baseline](https://www.kaggle.com/michaelfumery/pawpularity-eda-feature-engineering-baseline)

- [Xception](https://keras.io/ja/applications/#xception)

- [Image preprocessing](https://keras.io/ja/preprocessing/image/)

- [Tutorial on Keras ImageDataGenerator with flow_from_dataframe](https://vijayabhaskar96.medium.com/tutorial-on-keras-imagedatagenerator-with-flow-from-dataframe-8bd5776e45c1)