Overall structure

① Data preparation

② Image data augmentation (padding out the dataset)

③ Transfer learning

Environment

Python 3.6.5

tensorflow 2.3.1

[SIGNATE] Image labeling (10 classes)

The goal is to build a model that assigns exactly one of 10 labels to each image.

Number of training samples: 5000

Competition link:

https://signate.jp/competitions/133

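Transfer learning

For the steps up to this point, see the earlier articles in this series.

① Data preparation

https://qiita.com/hara_tatsu/items/a90173d33cb381648f72

② Image data augmentation (padding out the dataset)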

https://qiita.com/hara_tatsu/items/86ddf3c00a374e9ae796

Importing the libraries

import numpy as np
import matplotlib.pyplot as plt

# TensorFlow / Keras
from tensorflow import keras
from tensorflow.keras import regularizers
from tensorflow.keras.layers import (Activation, Conv2D, Dense, Dropout,
                                     Flatten, MaxPooling2D)

# Pretrained model (VGG16)
from tensorflow.keras.applications.vgg16 import VGG16

Transfer learning

This time I use VGG16, one of the representative deep learning models for image tasks.

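# Load the pretrained model (VGG16)
base_model = VGG16(weights='imagenet',       # use the weights pretrained on ImageNet
                   include_top=False,        # drop the fully connected output layers
                   input_shape=(96, 96, 3))  # input shape (height, width, channels)

base_model.summary()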

Model: "vgg16"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

input_2 (InputLayer) [(None, 96, 96, 3)] 0

_________________________________________________________________

block1_conv1 (Conv2D) (None, 96, 96, 64) 1792

_________________________________________________________________

block1_conv2 (Conv2D) (None, 96, 96, 64) 36928

_________________________________________________________________

block1_pool (MaxPooling2D) (None, 48, 48, 64) 0

_________________________________________________________________

block2_conv1 (Conv2D) (None, 48, 48, 128) 73856

_________________________________________________________________

block2_conv2 (Conv2D) (None, 48, 48, 128) 147584

_________________________________________________________________

block2_pool (MaxPooling2D) (None, 24, 24, 128) 0

_________________________________________________________________

block3_conv1 (Conv2D) (None, 24, 24, 256) 295168

_________________________________________________________________

block3_conv2 (Conv2D) (None, 24, 24, 256) 590080

_________________________________________________________________

block3_conv3 (Conv2D) (None, 24, 24, 256) 590080

_________________________________________________________________

block3_pool (MaxPooling2D) (None, 12, 12, 256) 0

_________________________________________________________________

block4_conv1 (Conv2D) (None, 12, 12, 512) 1180160

_________________________________________________________________

block4_conv2 (Conv2D) (None, 12, 12, 512) 2359808

_________________________________________________________________

block4_conv3 (Conv2D) (None, 12, 12, 512) 2359808

_________________________________________________________________

block4_pool (MaxPooling2D) (None, 6, 6, 512) 0

_________________________________________________________________

block5_conv1 (Conv2D) (None, 6, 6, 512) 2359808

_________________________________________________________________

block5_conv2 (Conv2D) (None, 6, 6, 512) 2359808

_________________________________________________________________

block5_conv3 (Conv2D) (None, 6, 6, 512) 2359808

_________________________________________________________________

block5_pool (MaxPooling2D) (None, 3, 3, 512) 0

=================================================================

Total params: 14,714,688

Trainable params: 14,714,688

Non-trainable params: 0

_________________________________________________________________
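The numbers in this summary can be checked by hand. The following is a minimal sketch in plain Python (no TensorFlow needed), assuming the standard VGG16 layout of 3x3 convolutions with a 2x2 max pooling at the end of each block. The names `VGG16_BLOCKS`, `conv_params` and `summarize` are made up for this illustration.

```python
# VGG16 convolutional base: (block name, number of conv layers, output channels)
VGG16_BLOCKS = [
    ("block1", 2, 64),
    ("block2", 2, 128),
    ("block3", 3, 256),
    ("block4", 3, 512),
    ("block5", 3, 512),
]


def conv_params(in_channels, out_channels, kernel=3):
    """Weights plus biases of one Conv2D layer with a kernel x kernel filter."""
    return kernel * kernel * in_channels * out_channels + out_channels


def summarize(input_size=96, input_channels=3):
    """Return (final feature-map size, total parameters, parameters per block)."""
    size, channels, total = input_size, input_channels, 0
    per_block = {}
    for name, n_convs, out_channels in VGG16_BLOCKS:
        block_total = 0
        for _ in range(n_convs):
            block_total += conv_params(channels, out_channels)
            channels = out_channels
        size //= 2  # each block ends with a 2x2 max pooling that halves the size
        per_block[name] = block_total
        total += block_total
    return size, total, per_block


final_size, total_params, per_block = summarize()
print(final_size, total_params, per_block)
```

The 96x96 input is halved five times, which gives the 3x3x512 output of block5_pool, and the per-layer counts add up to the 14,714,688 parameters reported by `summary()`.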

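Fine-tuning

Only the layers close to the output are retrained.

# Freeze every layer before the last convolutional block (block5)
for layer in base_model.layers[:15]:
    layer.trainable = False

# Check which layers have their weights frozen (trainable == False)
for layer in base_model.layers:
    print(layer, layer.trainable)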

<tensorflow.python.keras.engine.input_layer.InputLayer object at 0x7fd13a393860> False

<tensorflow.python.keras.layers.convolutional.Conv2D object at 0x7fd13a3d4668> False

<tensorflow.python.keras.layers.convolutional.Conv2D object at 0x7fd138ae0c88> False

<tensorflow.python.keras.layers.pooling.MaxPooling2D object at 0x7fd138a305f8> False

<tensorflow.python.keras.layers.convolutional.Conv2D object at 0x7fd138a68cf8> False

<tensorflow.python.keras.layers.convolutional.Conv2D object at 0x7fd138ae0748> False

<tensorflow.python.keras.layers.pooling.MaxPooling2D object at 0x7fd1389f3ef0> False

<tensorflow.python.keras.layers.convolutional.Conv2D object at 0x7fd138a68dd8> False

<tensorflow.python.keras.layers.convolutional.Conv2D object at 0x7fd1389f3080> False

<tensorflow.python.keras.layers.convolutional.Conv2D object at 0x7fd1389ff4e0> False

<tensorflow.python.keras.layers.pooling.MaxPooling2D object at 0x7fd138a097f0> False

<tensorflow.python.keras.layers.convolutional.Conv2D object at 0x7fd1389ffba8> False

<tensorflow.python.keras.layers.convolutional.Conv2D object at 0x7fd1389ff7f0> False

<tensorflow.python.keras.layers.convolutional.Conv2D object at 0x7fd138a11a58> False

<tensorflow.python.keras.layers.pooling.MaxPooling2D object at 0x7fd138a1cd68> False

<tensorflow.python.keras.layers.convolutional.Conv2D object at 0x7fd138a11c18> True

<tensorflow.python.keras.layers.convolutional.Conv2D object at 0x7fd138a11d68> True

<tensorflow.python.keras.layers.convolutional.Conv2D object at 0x7fd1389aa048> True

<tensorflow.python.keras.layers.pooling.MaxPooling2D object at 0x7fd1389b52e8> True

Only the layers shown as True are retrained.
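Why the index is 15 becomes clearer when the layers are listed by name. The sketch below reproduces the slicing logic in plain Python, using the layer names from the summary above. The helper `first_index_of_block` is hypothetical and only serves to illustrate the idea.

```python
# Layer names in the order they appear in base_model.summary()
LAYER_NAMES = [
    "input_2",
    "block1_conv1", "block1_conv2", "block1_pool",
    "block2_conv1", "block2_conv2", "block2_pool",
    "block3_conv1", "block3_conv2", "block3_conv3", "block3_pool",
    "block4_conv1", "block4_conv2", "block4_conv3", "block4_pool",
    "block5_conv1", "block5_conv2", "block5_conv3", "block5_pool",
]


def first_index_of_block(layer_names, block_prefix):
    """Index of the first layer whose name starts with block_prefix."""
    for index, name in enumerate(layer_names):
        if name.startswith(block_prefix):
            return index
    raise ValueError("no layer starts with " + repr(block_prefix))


# Everything before block5 is frozen; block5 itself stays trainable
freeze_until = first_index_of_block(LAYER_NAMES, "block5")
trainable_flags = [index >= freeze_until for index in range(len(LAYER_NAMES))]

for name, flag in zip(LAYER_NAMES, trainable_flags):
    print(name, flag)
```

Looking the index up by name rather than hard-coding it makes it easier to change how many blocks are retrained, for example by passing "block4" instead.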

Next, output layers are added to the pretrained model to complete the model.

# Stack a fully connected classifier on top of the VGG16 base
model = keras.Sequential()
model.add(base_model)

# Classifier head
model.add(Flatten())
model.add(Dense(256, activation='relu'))
model.add(Dropout(0.5))
model.add(Dense(10, activation='softmax'))  # one output per label

model.summary()

Model: "sequential_1"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

vgg16 (Functional) (None, 3, 3, 512) 14714688

_________________________________________________________________

flatten_1 (Flatten) (None, 4608) 0

_________________________________________________________________

dense_2 (Dense) (None, 256) 1179904

_________________________________________________________________

dropout_1 (Dropout) (None, 256) 0

_________________________________________________________________

dense_3 (Dense) (None, 10) 2570

=================================================================

Total params: 15,897,162

Trainable params: 8,261,898

Non-trainable params: 7,635,264

_________________________________________________________________
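The trainable and non-trainable counts follow directly from the layer sizes. Here is a rough check in plain Python. The two base-model totals are copied from the summaries above, and `dense_params` is a helper introduced only for this sketch.

```python
def dense_params(n_inputs, n_outputs):
    """Weights plus biases of one Dense layer."""
    return n_inputs * n_outputs + n_outputs


# Flatten turns the (3, 3, 512) feature map into one vector
flatten_units = 3 * 3 * 512

# Dense(256) followed by Dense(10); Dropout has no parameters
head_params = dense_params(flatten_units, 256) + dense_params(256, 10)

base_total = 14_714_688   # all VGG16 conv layers (from base_model.summary())
base_frozen = 7_635_264   # block1 to block4, frozen by layers[:15]

total_params = base_total + head_params
trainable_params = total_params - base_frozen

print(flatten_units, head_params, total_params, trainable_params)
```

So the roughly 8.26 million trainable parameters are block5 of VGG16 plus the new classifier head, and the frozen 7.6 million are blocks 1 to 4.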

Training the model

model.compile(loss='categorical_crossentropy',
              optimizer=keras.optimizers.SGD(learning_rate=1e-4, momentum=0.9),
              metrics=['accuracy'])
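To get a feel for what a learning rate of 1e-4 with a momentum of 0.9 does, here is a small sketch of the plain (non-Nesterov) momentum update on a single scalar weight. The constant gradient of 1.0 is a toy value chosen for illustration, and `sgd_momentum_steps` is a made-up helper, not part of Keras.

```python
def sgd_momentum_steps(gradients, learning_rate=1e-4, momentum=0.9, weight=0.0):
    """Apply SGD with momentum to one scalar weight and record each new value."""
    velocity = 0.0
    trajectory = []
    for grad in gradients:
        # The velocity accumulates a decaying sum of past gradient steps
        velocity = momentum * velocity - learning_rate * grad
        weight += velocity
        trajectory.append(weight)
    return trajectory


# Toy input: the same gradient of 1.0 for 50 steps (hypothetical values)
trajectory = sgd_momentum_steps([1.0] * 50)
last_step = trajectory[-1] - trajectory[-2]

print(trajectory[:2], last_step)
```

With a steady gradient the step size grows toward learning_rate / (1 - momentum), so the effective step here approaches 1e-3 rather than 1e-4. A small learning rate like this is a common choice for fine-tuning because it keeps the pretrained weights from changing too abruptly.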

%%time
# Run the training (train_data_gen and valid_data_gen are defined in the earlier articles)
log = model.fit(train_data_gen,
                steps_per_epoch=87,
                epochs=100,
                validation_data=valid_data_gen,
                validation_steps=33)

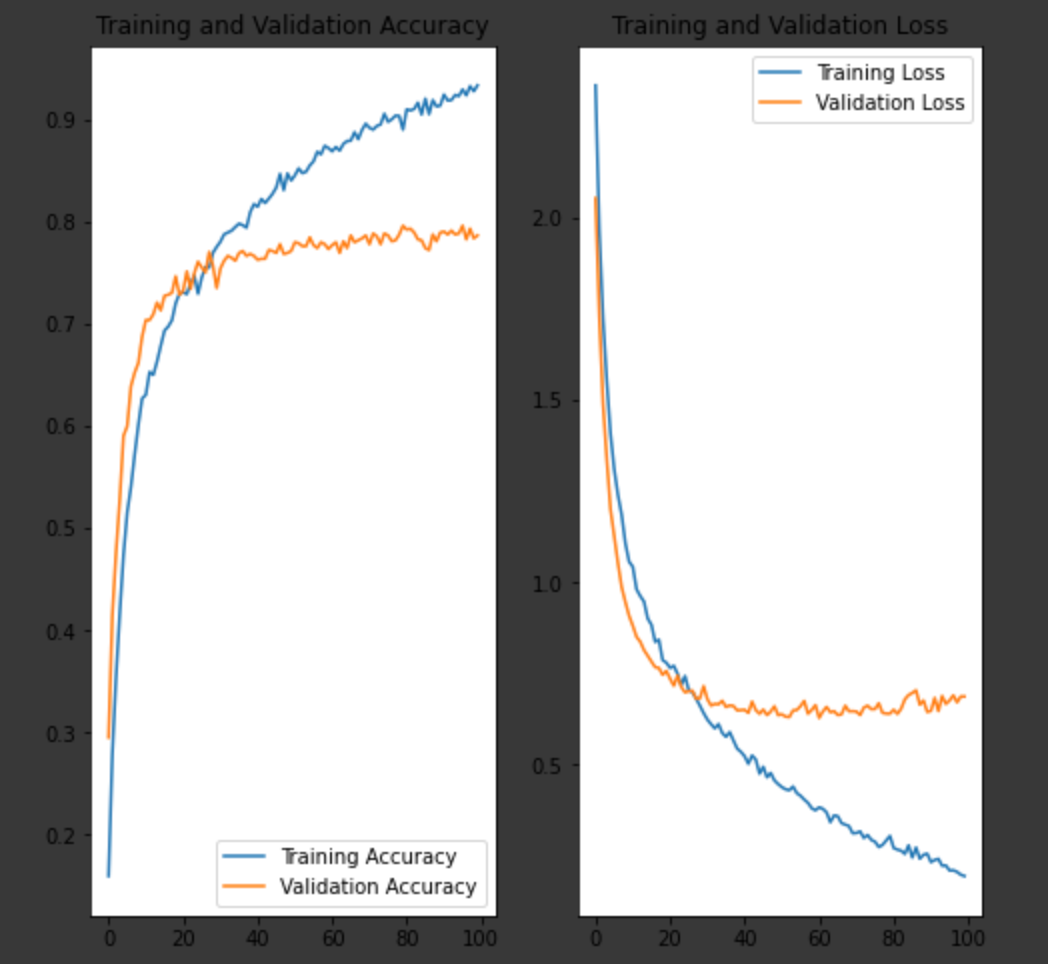
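A note on `steps_per_epoch` and `validation_steps`: with a generator, one step consumes one batch, so a common choice is the number of samples divided by the batch size, rounded up. The sketch below shows that arithmetic. The sample counts and batch size are hypothetical and are not the values used in this article.

```python
import math


def steps_for(n_samples, batch_size):
    """Number of batches needed to see every sample once."""
    return math.ceil(n_samples / batch_size)


# Hypothetical split and batch size, for illustration only
n_train, n_valid, batch_size = 4000, 1000, 32

train_steps = steps_for(n_train, batch_size)
valid_steps = steps_for(n_valid, batch_size)

print(train_steps, valid_steps)
```

With an augmenting generator that yields batches endlessly, the step count can also be set higher than this to show the model more augmented variants per epoch.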

Checking the results

acc = log.history['accuracy']
val_acc = log.history['val_accuracy']

loss = log.history['loss']
val_loss = log.history['val_loss']

# One point per completed epoch
epochs_range = range(len(acc))

plt.figure(figsize=(8, 8))

# Left panel: accuracy
plt.subplot(1, 2, 1)
plt.plot(epochs_range, acc, label='Training Accuracy')
plt.plot(epochs_range, val_acc, label='Validation Accuracy')
plt.legend(loc='lower right')
plt.title('Training and Validation Accuracy')

# Right panel: loss
plt.subplot(1, 2, 2)
plt.plot(epochs_range, loss, label='Training Loss')
plt.plot(epochs_range, val_loss, label='Validation Loss')
plt.legend(loc='upper right')
plt.title('Training and Validation Loss')

plt.show()
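Besides the plot, it can help to pull the best epoch out of the history as a number. A minimal sketch follows, assuming a dictionary shaped like `log.history`. The values in `example_history` are invented for illustration and are not the results of this run.

```python
def best_epoch(history, metric="val_accuracy"):
    """Return (1-based epoch number, value) where the metric is highest."""
    values = history[metric]
    best_index = max(range(len(values)), key=values.__getitem__)
    return best_index + 1, values[best_index]


# Invented numbers with the same structure as log.history
example_history = {
    "accuracy": [0.40, 0.65, 0.80, 0.90, 0.95],
    "val_accuracy": [0.52, 0.71, 0.78, 0.80, 0.79],
}

epoch, value = best_epoch(example_history)
gap = example_history["accuracy"][epoch - 1] - value

print(epoch, value, gap)
```

A growing gap between training and validation accuracy after the best epoch is a typical sign of overfitting, which is one reason to look at the two curves together.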

The results look like this.

After submitting the predictions:

Provisional score: 0.8005000

Rank: 54th

A reasonably decent result, I think.

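Closing thoughts

If there is room for improvement, it would be something like:

・Trying a different pretrained model

・Optimizing the hyperparameters

・Adding my own data to the training set

That is about all I can think of.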
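For the hyperparameter idea, one simple starting point is to enumerate a small grid of candidate settings and train once per combination. The sketch below only builds the grid. The candidate values are assumptions chosen around the settings used in this article (learning rate 1e-4, dropout 0.5, 256 dense units), and `grid` is a hypothetical helper.

```python
from itertools import product

# Candidate values (assumed for illustration) around the article's settings
search_space = {
    "learning_rate": [1e-3, 1e-4, 1e-5],
    "dropout": [0.3, 0.5],
    "dense_units": [128, 256],
}


def grid(space):
    """Return every combination of the candidate values as a list of dicts."""
    keys = list(space)
    combos = product(*(space[key] for key in keys))
    return [dict(zip(keys, combo)) for combo in combos]


candidates = grid(search_space)

for params in candidates:
    print(params)
```

Each dictionary would then be used to rebuild and retrain the model. Since one run here takes 100 epochs, keeping the grid small or cutting the epochs for the search is probably necessary.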

If you have any advice, I would be glad to hear it!