This article is the Day 8 entry in the IDaaS Advent Calendar 2022.

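Introduction

Auth0 retains logs for only 30 days, even on the Enterprise plan (as of December 2022).

If a requirement such as log analysis comes up later and no logs are left, you are stuck.

The logs therefore need to be moved somewhere else, but configuring that by hand every time you create a new tenant is tedious.

Terraform makes this setup quick and repeatable.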

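Setting it up

We will use the following architecture: an Auth0 log stream sends events to Amazon EventBridge, which forwards them to Kinesis Data Firehose for delivery to S3. Once the logs are in S3, they can be analyzed and visualized with Athena or QuickSight.

The goal of this article is to get the logs stored in S3.

For basic usage of the Terraform Auth0 Provider, refer to the provider's documentation.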
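
As a rough starting point, the provider setup might look like the sketch below. The variable names `auth0_client_id` and `auth0_client_secret` are assumptions made for illustration. `auth0_domain` is the variable used later in the S3 bucket name; the article does not say whether it holds the full tenant domain, so passing it to the provider is also an assumption. Add version constraints that suit your environment.

```hcl
terraform {
  required_providers {
    auth0 = {
      source = "auth0/auth0"
    }
    aws = {
      source = "hashicorp/aws"
    }
  }
}

# Tenant domain. Also referenced later when naming the S3 bucket.
variable "auth0_domain" {
  type = string
}

# Hypothetical variable names for the credentials of a
# Machine-to-Machine application that can call the Management API.
variable "auth0_client_id" {
  type = string
}

variable "auth0_client_secret" {
  type      = string
  sensitive = true
}

provider "auth0" {
  domain        = var.auth0_domain
  client_id     = var.auth0_client_id
  client_secret = var.auth0_client_secret
}

# Region and credentials are taken from the environment here.
provider "aws" {}
```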

Prerequisites

- An Auth0 plan of Essential or higher

- Note that Log Streaming is not available on the Free plan

- One stream can be configured on the Essential plan, and two on the Professional plan and above

Auth0

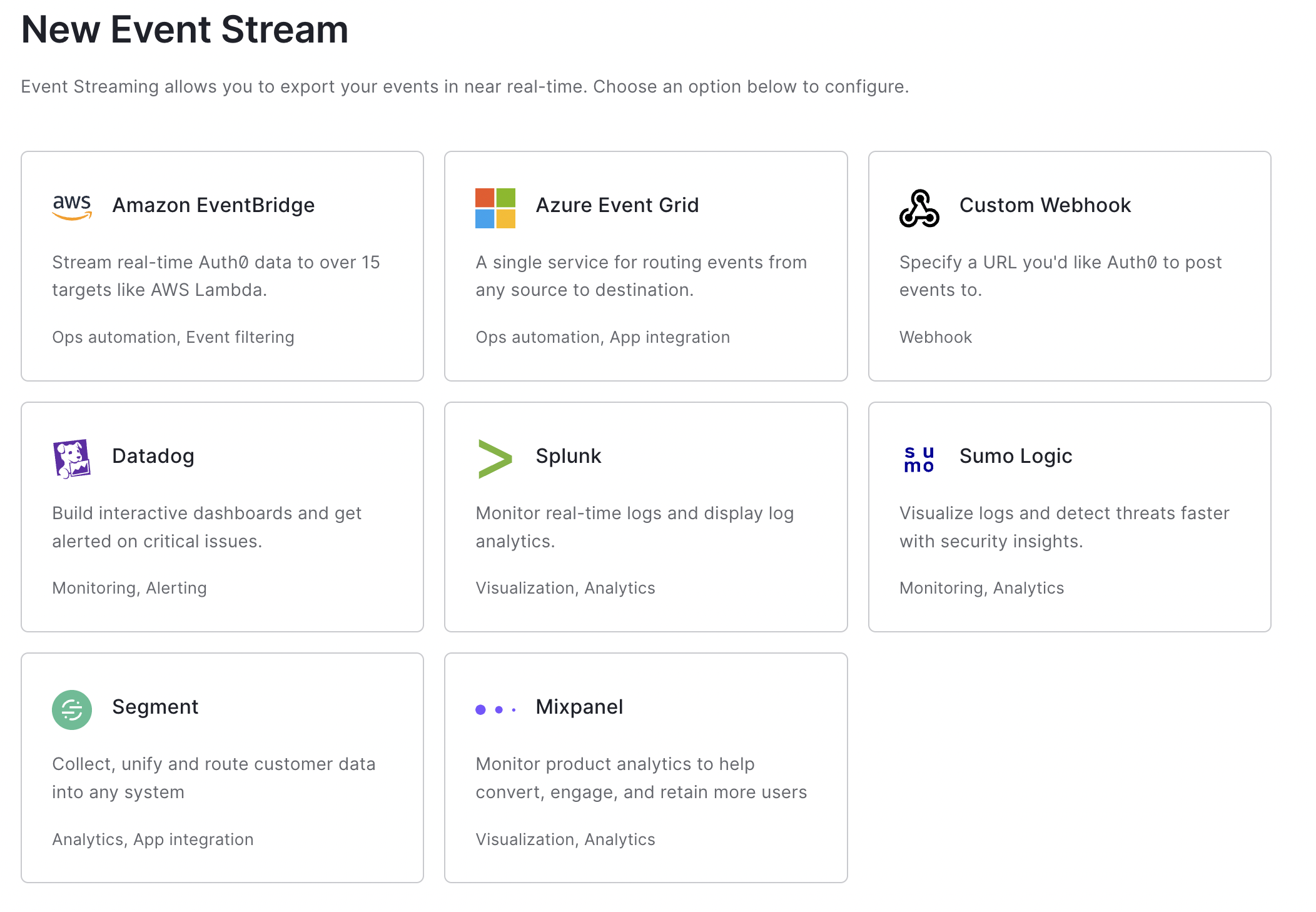

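As the Auth0 dashboard shows, log streams support services other than AWS as well.

Being able to send logs directly to Datadog or Splunk is a nice touch.

We want AWS this time, so the log stream is defined as follows: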

resource "auth0_log_stream" "eventbridge" {
  name   = "AWS Eventbridge"
  type   = "eventbridge"
  status = "active"

  sink {
    aws_account_id = data.aws_caller_identity.self.id
    aws_region     = data.aws_region.current.name
  }
}

data "aws_region" "current" {}

data "aws_caller_identity" "self" {}

EventBridge

One of Terraform's strengths is that values can be passed from one service to another.

Here, the partner event source name generated by auth0_log_stream is passed to aws_cloudwatch_event_bus.

resource "aws_cloudwatch_event_bus" "auth0" {
  name              = auth0_log_stream.eventbridge.sink[0].aws_partner_event_source
  event_source_name = auth0_log_stream.eventbridge.sink[0].aws_partner_event_source
}

resource "aws_cloudwatch_event_rule" "auth0" {
  name           = "auth0"
  description    = "auth0"
  event_bus_name = aws_cloudwatch_event_bus.auth0.name

  event_pattern = <<EOF
{
  "source": [{
    "prefix": "aws.partner/auth0.com"
  }]
}
EOF
}

resource "aws_cloudwatch_event_target" "auth0" {
  rule           = aws_cloudwatch_event_rule.auth0.name
  target_id      = "Auth0"
  arn            = aws_kinesis_firehose_delivery_stream.firehose.arn
  role_arn       = aws_iam_role.auth0_cwe_role.arn
  event_bus_name = aws_cloudwatch_event_bus.auth0.name
}

Adjust event_pattern to your requirements. For now, we simply forward every log event.

In the Auth0 dashboard, selecting Monitoring > Streams > Create Stream > Amazon EventBridge shows a sample payload like the following:

{
  "id": "623053cf-8e0f-9203-f464-e789cf18b0e2",
  "detail-type": "Auth0 log",
  "source": "aws.partner/auth0.com/example-tenant-635d694a-8a5a-4f1a-b223-1e0424edd19a/auth0.logs",
  "account": "123456789012",
  "time": "2020-01-29T17:26:50Z",
  "region": "us-west-2",
  "resources": [],
  "detail": {
    "log_id": "",
    "data": {
      "date": "2020-01-29T17:26:50.193Z",
      "type": "sapi",
      "description": "Create a log stream",
      "client_id": "",
      "client_name": "",
      "ip": "",
      "user_id": "",
      "log_id": ""
    }
  }
}
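
Because the Auth0 log entry sits under `detail.data` in this payload, an event pattern can filter on fields such as `type`. As a sketch, the rule below would forward only failed-login events. The resource name `auth0_failed_logins` is hypothetical, and the type codes `f`, `fp`, and `fu` should be checked against Auth0's list of log event type codes before relying on them.

```hcl
resource "aws_cloudwatch_event_rule" "auth0_failed_logins" {
  name           = "auth0-failed-logins"
  description    = "Auth0 failed login events only"
  event_bus_name = aws_cloudwatch_event_bus.auth0.name

  # jsonencode avoids hand-written JSON inside a heredoc.
  event_pattern = jsonencode({
    source = [{ prefix = "aws.partner/auth0.com" }]
    detail = {
      data = {
        # Assumed codes for failed logins; verify against Auth0's documentation.
        type = ["f", "fp", "fu"]
      }
    }
  })
}
```

A rule like this still needs its own aws_cloudwatch_event_target to deliver events anywhere.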

Kinesis Data Firehose & S3 & CloudWatchLogs

Next, configure Kinesis Data Firehose, S3, and CloudWatch Logs.

resource "aws_kinesis_firehose_delivery_stream" "firehose" {
  name        = "auth0-kinesis-firehose"
  destination = "extended_s3"

  extended_s3_configuration {
    role_arn        = aws_iam_role.firehose_role.arn
    bucket_arn      = aws_s3_bucket.auth0.arn
    buffer_size     = 5
    buffer_interval = 60

    cloudwatch_logging_options {
      enabled         = true
      log_group_name  = aws_cloudwatch_log_group.auth0.name
      log_stream_name = aws_cloudwatch_log_stream.auth0.name
    }
  }
}

resource "aws_s3_bucket" "auth0" {
  bucket        = "${var.auth0_domain}-auth0-log-bucket"
  force_destroy = true
}

resource "aws_s3_bucket_public_access_block" "auth0" {
  bucket                  = aws_s3_bucket.auth0.id
  block_public_acls       = true
  block_public_policy     = true
  restrict_public_buckets = true
  ignore_public_acls      = true
}

resource "aws_s3_bucket_acl" "auth0" {
  bucket = aws_s3_bucket.auth0.id
  acl    = "private"
}

resource "aws_cloudwatch_log_group" "auth0" {
  name = "auth0-cloudwatch-log-group"
}

resource "aws_cloudwatch_log_stream" "auth0" {
  name           = "auth0-cloudwatch-log-stream"
  log_group_name = aws_cloudwatch_log_group.auth0.name
}
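
If the logs will later be queried with Athena, a date-based key layout can make partitioning easier. The following is a sketch of a variant of the delivery stream above, meant to replace it rather than sit alongside it. The `logs/` and `errors/` prefixes and the `year=/month=/day=` layout are my own assumptions, not something the original setup uses.

```hcl
resource "aws_kinesis_firehose_delivery_stream" "firehose" {
  name        = "auth0-kinesis-firehose"
  destination = "extended_s3"

  extended_s3_configuration {
    role_arn   = aws_iam_role.firehose_role.arn
    bucket_arn = aws_s3_bucket.auth0.arn

    # Assumed key layout built from Firehose prefix expressions.
    # An error prefix has to be set when the main prefix uses expressions.
    prefix              = "logs/year=!{timestamp:yyyy}/month=!{timestamp:MM}/day=!{timestamp:dd}/"
    error_output_prefix = "errors/!{firehose:error-output-type}/"
  }
}
```

Buffer settings are left at their defaults in this sketch because, as far as I know, the argument names differ between AWS provider major versions (`buffer_size` and `buffer_interval` as used in this article, `buffering_size` and `buffering_interval` in newer releases). Check the documentation for the provider version you have pinned.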

IAM

Finally, define the IAM roles and policies.

resource "aws_iam_role" "firehose_role" {
  name               = "auth0-firehose-role"
  assume_role_policy = data.aws_iam_policy_document.firehose_assume.json
}

data "aws_iam_policy_document" "firehose_assume" {
  statement {
    effect  = "Allow"
    actions = ["sts:AssumeRole"]

    principals {
      type        = "Service"
      identifiers = ["firehose.amazonaws.com"]
    }
  }
}

resource "aws_iam_role_policy" "firehose_role_policy" {
  name   = "auth0-firehose-role-policy"
  role   = aws_iam_role.firehose_role.id
  policy = data.aws_iam_policy_document.firehose_role_policy_statement.json
}

data "aws_iam_policy_document" "firehose_role_policy_statement" {
  statement {
    effect = "Allow"
    actions = [
      "s3:AbortMultipartUpload",
      "s3:GetBucketLocation",
      "s3:GetObject",
      "s3:ListBucket",
      "s3:ListBucketMultipartUploads",
      "s3:PutObject"
    ]
    resources = [
      "arn:aws:s3:::${aws_s3_bucket.auth0.bucket}",
      "arn:aws:s3:::${aws_s3_bucket.auth0.bucket}/*"
    ]
  }

  statement {
    effect    = "Allow"
    actions   = ["logs:PutLogEvents"]
    resources = ["${aws_cloudwatch_log_group.auth0.arn}:*"]
  }
}

resource "aws_iam_role" "auth0_cwe_role" {
  name               = "auth0-cwe-role"
  assume_role_policy = data.aws_iam_policy_document.auth0_cwe_role_assume.json
}

data "aws_iam_policy_document" "auth0_cwe_role_assume" {
  statement {
    effect  = "Allow"
    actions = ["sts:AssumeRole"]

    principals {
      type        = "Service"
      identifiers = ["events.amazonaws.com"]
    }
  }
}

resource "aws_iam_role_policy" "auth0_cwe_role_policy" {
  name   = "auth0-cwe-role-policy"
  role   = aws_iam_role.auth0_cwe_role.id
  policy = data.aws_iam_policy_document.auth0_cwe_role_policy_statement.json
}

data "aws_iam_policy_document" "auth0_cwe_role_policy_statement" {
  statement {
    effect = "Allow"
    actions = [
      "firehose:PutRecord",
      "firehose:PutRecordBatch"
    ]
    resources = [
      aws_kinesis_firehose_delivery_stream.firehose.arn
    ]
  }
}

Verification

Now let's run terraform apply.

Log in to an Auth0 application with any user, wait a little while, and then check S3.

The logs were delivered successfully.
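
To make it easier to find what to check after applying, a couple of outputs can be added. This is a small sketch; the output names are arbitrary choices of mine.

```hcl
# Partner event source created by the Auth0 log stream.
# It should match the event bus name shown in the EventBridge console.
output "auth0_partner_event_source" {
  value = auth0_log_stream.eventbridge.sink[0].aws_partner_event_source
}

# Bucket where Firehose delivers the log objects.
output "auth0_log_bucket" {
  value = aws_s3_bucket.auth0.bucket
}
```

After `terraform apply`, running `terraform output` prints both values.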

Addendum (2023/02/22)

As a final step, I also had to run Start discovery from the event bus. (Perhaps this cannot be enabled from Terraform?)
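
If Start discovery here refers to EventBridge schema discovery on the event bus, the AWS provider has an `aws_schemas_discoverer` resource that may cover it. I have not verified that it has the same effect as the console button in this setup, so treat the following as an untested sketch; the resource name `auth0` and the description text are arbitrary.

```hcl
resource "aws_schemas_discoverer" "auth0" {
  # Attach schema discovery to the partner event bus created above.
  source_arn  = aws_cloudwatch_event_bus.auth0.arn
  description = "Schema discovery for Auth0 log events"
}
```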

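Summary

- Auth0 logs disappear quickly, so use the Log Streams feature to keep them somewhere else

- Use Terraform to cut out wasted effort on repetitive setup work

If you are struggling with application development that uses an IDaaS, please contact us at TC3. We also welcome applications from talented architects at any time.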