( Environment )

After signing up for Google Cloud Platform (GCP), I connect to GCP over SSH from my local MacBook and call the Google Video Intelligence API.

Pitfalls

I got stuck on the following point, so here is the fix.

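- To send a local file to GCP, use the __input_content__ argument instead of __input_uri__

The __input_uri__ argument is for video files that are already stored in Google Cloud Storage (GCS), not on your local machine.

In that case, __input_uri__ must be the Cloud Storage URI of the video file, in the form gs://bucket-name/object-name, not just the bucket name.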
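The rule above can be captured in a small helper that decides which field to fill in. This is a minimal sketch: `build_annotate_request` is a hypothetical name, and it only looks at whether the string starts with `gs://`. It returns a plain dict, which `annotate_video(request=...)` accepts in the same way as the dicts used later in this post.

```python
from pathlib import Path


def build_annotate_request(source, features):
    """Build a request dict for VideoIntelligenceServiceClient.annotate_video.

    Hypothetical helper: a "gs://" URI is sent as input_uri. Anything else
    is treated as a local file whose raw bytes are sent as input_content.
    Only one of the two fields should be set in a request.
    """
    if source.startswith("gs://"):
        # The video already lives in Cloud Storage, and the API reads it from there.
        return {"features": features, "input_uri": source}
    # Local file: read its bytes and send them inside the request itself.
    return {"features": features, "input_content": Path(source).read_bytes()}
```

With the session below, this would be used as `operation = video_client.annotate_video(request=build_annotate_request("./olympic.mp4", features))`.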

Up to creating the GCP Video Intelligence client instance

```python
>>> import json
>>> from google.cloud import videointelligence
>>> from google.oauth2 import service_account
>>>
>>> service_account_key_name = "electroncgpvision-8bf54f743c93.json"
>>> info = json.load(open(service_account_key_name))
>>> creds = service_account.Credentials.from_service_account_info(info)
>>>
>>> video_client = videointelligence.VideoIntelligenceServiceClient(credentials=creds)
>>>
>>> features = [videointelligence.Feature.OBJECT_TRACKING]
>>>
>>> path = './olympic.mp4'
>>> import io
>>>
>>> with io.open(path, 'rb') as file:
...     input_content = file.read()
...
>>>
```

An error occurred

The call below raised google.api_core.exceptions.PermissionDenied: 403. The Cloud Video Intelligence API had either never been used in the project or was disabled, and the message says to enable it in the console and then retry.

```
>>> operation = video_client.annotate_video(request={"features": features, "input_uri": input_content})
Traceback (most recent call last):
  File "/usr/local/lib/python3.9/site-packages/google/api_core/grpc_helpers.py", line 67, in error_remapped_callable
    return callable_(*args, **kwargs)
  File "/usr/local/lib/python3.9/site-packages/grpc/_channel.py", line 923, in __call__
    return _end_unary_response_blocking(state, call, False, None)
  File "/usr/local/lib/python3.9/site-packages/grpc/_channel.py", line 826, in _end_unary_response_blocking
    raise _InactiveRpcError(state)
grpc._channel._InactiveRpcError: <_InactiveRpcError of RPC that terminated with:
        status = StatusCode.PERMISSION_DENIED
        details = "Cloud Video Intelligence API has not been used in project XXXXXX before or it is disabled. Enable it by visiting https://console.developers.google.com/apis/api/videointelligence.googleapis.com/overview?project=XXXXXX then retry. If you enabled this API recently, wait a few minutes for the action to propagate to our systems and retry."
        debug_error_string = "{"created":"@1627184708.601310000","description":"Error received from peer ipv6:[2404:6800:4004:813::200a]:443","file":"src/core/lib/surface/call.cc","file_line":1063,"grpc_message":"Cloud Video Intelligence API has not been used in project XXXXXX before or it is disabled. Enable it by visiting https://console.developers.google.com/apis/api/videointelligence.googleapis.com/overview?project=XXXXXX then retry. If you enabled this API recently, wait a few minutes for the action to propagate to our systems and retry.","grpc_status":7}"
>

The above exception was the direct cause of the following exception:

Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
  File "/usr/local/lib/python3.9/site-packages/google/cloud/videointelligence_v1/services/video_intelligence_service/client.py", line 424, in annotate_video
    response = rpc(request, retry=retry, timeout=timeout, metadata=metadata,)
  File "/usr/local/lib/python3.9/site-packages/google/api_core/gapic_v1/method.py", line 145, in __call__
    return wrapped_func(*args, **kwargs)
  File "/usr/local/lib/python3.9/site-packages/google/api_core/retry.py", line 285, in retry_wrapped_func
    return retry_target(
  File "/usr/local/lib/python3.9/site-packages/google/api_core/retry.py", line 188, in retry_target
    return target()
  File "/usr/local/lib/python3.9/site-packages/google/api_core/grpc_helpers.py", line 69, in error_remapped_callable
    six.raise_from(exceptions.from_grpc_error(exc), exc)
  File "<string>", line 3, in raise_from
google.api_core.exceptions.PermissionDenied: 403 Cloud Video Intelligence API has not been used in project XXXXXX before or it is disabled. Enable it by visiting https://console.developers.google.com/apis/api/videointelligence.googleapis.com/overview?project=XXXXXX then retry. If you enabled this API recently, wait a few minutes for the action to propagate to our systems and retry.
>>>
```

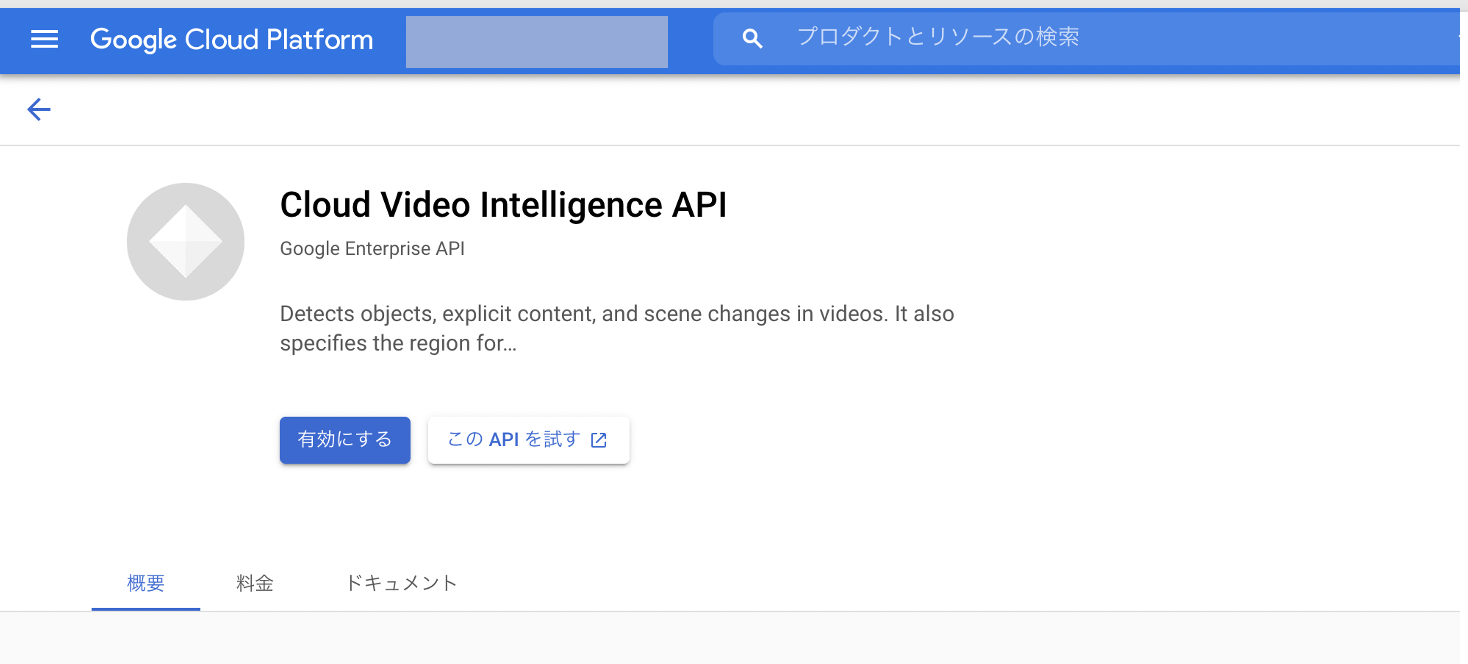

Enabling the API in the Google Cloud console

( Screen before enabling the API )

( Screen while the API is being enabled )

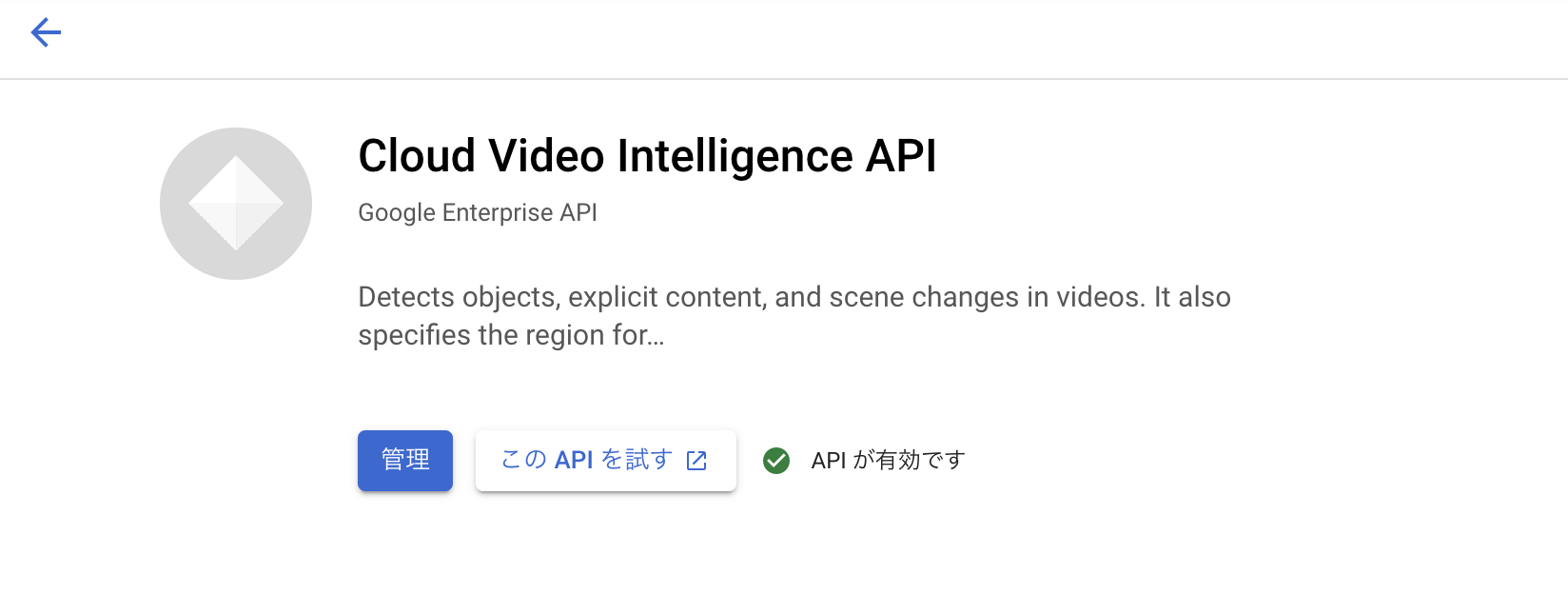

( Screen after enabling the API )
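As an alternative to the console, the same API can be enabled from the command line with `gcloud services enable videointelligence.googleapis.com`. The sketch below pulls the project out of the PermissionDenied message quoted above and builds that command. `enable_api_command` is a hypothetical helper. The regular expression assumes the exact wording of the 403 message shown in this post, so it returns None if that wording changes.

```python
import re

# Matches the project ID/number in the PermissionDenied message shown above.
_PROJECT_RE = re.compile(r"has not been used in project (\S+) before")


def enable_api_command(error_message):
    """Return a gcloud argv list that enables the Video Intelligence API.

    Hypothetical helper: returns None if the message does not contain the
    expected "has not been used in project ... before" wording.
    """
    match = _PROJECT_RE.search(error_message)
    if match is None:
        return None
    return ["gcloud", "services", "enable",
            "videointelligence.googleapis.com",
            "--project", match.group(1)]


message = ("403 Cloud Video Intelligence API has not been used in project "
           "XXXXXX before or it is disabled.")
print(" ".join(enable_api_command(message)))
# gcloud services enable videointelligence.googleapis.com --project XXXXXX
```

To actually run it, the list could be passed to `subprocess.run(cmd, check=True)` on a machine where gcloud is installed and authenticated. As the error message notes, it may still take a few minutes before the change takes effect.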

This time, the following error occurred.

```
>>> operation = video_client.annotate_video(request={"features": features, "input_uri": input_content})
Traceback (most recent call last):
  File "/usr/local/lib/python3.9/site-packages/google/api_core/grpc_helpers.py", line 67, in error_remapped_callable
    return callable_(*args, **kwargs)
  File "/usr/local/lib/python3.9/site-packages/grpc/_channel.py", line 923, in __call__
    return _end_unary_response_blocking(state, call, False, None)
  File "/usr/local/lib/python3.9/site-packages/grpc/_channel.py", line 826, in _end_unary_response_blocking
    raise _InactiveRpcError(state)
grpc._channel._InactiveRpcError: <_InactiveRpcError of RPC that terminated with:
        status = StatusCode.INVALID_ARGUMENT
        details = "Request contains an invalid argument."
        debug_error_string = "{"created":"@1627185000.461894000","description":"Error received from peer ipv6:[2404:6800:4004:80e::200a]:443","file":"src/core/lib/surface/call.cc","file_line":1063,"grpc_message":"Request contains an invalid argument.","grpc_status":3}"
>

The above exception was the direct cause of the following exception:

Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
  File "/usr/local/lib/python3.9/site-packages/google/cloud/videointelligence_v1/services/video_intelligence_service/client.py", line 424, in annotate_video
    response = rpc(request, retry=retry, timeout=timeout, metadata=metadata,)
  File "/usr/local/lib/python3.9/site-packages/google/api_core/gapic_v1/method.py", line 145, in __call__
    return wrapped_func(*args, **kwargs)
  File "/usr/local/lib/python3.9/site-packages/google/api_core/retry.py", line 285, in retry_wrapped_func
    return retry_target(
  File "/usr/local/lib/python3.9/site-packages/google/api_core/retry.py", line 188, in retry_target
    return target()
  File "/usr/local/lib/python3.9/site-packages/google/api_core/grpc_helpers.py", line 69, in error_remapped_callable
    six.raise_from(exceptions.from_grpc_error(exc), exc)
  File "<string>", line 3, in raise_from
google.api_core.exceptions.InvalidArgument: 400 Request contains an invalid argument.
>>>
```

It seems that __input_uri__ must be the URI of the video file stored in a GCP (Cloud Storage) bucket.

- https://cloud.google.com/video-intelligence/docs/shot-detection?hl=ja

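```python
from google.cloud import videointelligence

# Placeholder: input_uri must be a Cloud Storage URI, not a local path.
path = "gs://bucket-name/object-name"

video_client = videointelligence.VideoIntelligenceServiceClient()
features = [videointelligence.Feature.SHOT_CHANGE_DETECTION]
operation = video_client.annotate_video(
    request={"features": features, "input_uri": path}
)
```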

> Now that the Video Intelligence API service is ready, you can construct a request to that service. Requests to the Video Intelligence API are created as JSON objects. For the specific structure of such a request, see the Video Intelligence API reference. This code snippet does the following:
>
> - Creates the JSON for a POST request to the annotate_video() method.
> - Injects the Google Cloud Storage location of the passed video file into the request.
> - Indicates that the annotate method should perform SHOT_CHANGE_DETECTION.

The relevant part of that explanation is:

- Injects the Google Cloud Storage location of the passed video file into the request.
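Because __input_uri__ expects a Cloud Storage location rather than a local path or raw bytes, a quick check before sending the request can catch this mistake locally. This is a minimal sketch. `split_gcs_uri` is a hypothetical helper, and the bucket and object names are made up. It only checks the gs://bucket-name/object-name shape and does not verify that the object exists.

```python
def split_gcs_uri(uri):
    """Split "gs://bucket-name/object-name" into (bucket, object_name).

    Raises ValueError for anything that is not a Cloud Storage object URI,
    e.g. a local path like "./olympic.mp4" or a bare "gs://bucket-name".
    """
    prefix = "gs://"
    if not uri.startswith(prefix):
        raise ValueError(f"not a Cloud Storage URI: {uri!r}")
    bucket, sep, object_name = uri[len(prefix):].partition("/")
    if not bucket or not sep or not object_name:
        raise ValueError(f"expected gs://bucket-name/object-name, got {uri!r}")
    return bucket, object_name


print(split_gcs_uri("gs://my-bucket/videos/olympic.mp4"))
# ('my-bucket', 'videos/olympic.mp4')
```

The split uses plain string operations rather than `urllib.parse.urlparse`. Cloud Storage object names may contain characters such as `#` or `?`, which a URL parser would treat as a fragment or a query.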

Changing the argument from __input_uri__ to __input_content__

The following article used __input_content__ instead of __input_uri__.

- 「Video Intelligence APIで動画の物体検出をする」 (Object detection in videos with the Video Intelligence API)

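```python
operation = video_client.annotate_video(input_content=input_content, features=features, location_id='us-east1')
```

This keyword-argument style, including location_id, appears to come from an older (1.x) version of the client library. In the 2.x library used here (note the videointelligence_v1/services/... paths in the tracebacks above), these fields go into the request dict instead, as shown below.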

Replacing input_uri with input_content made the file load successfully.

```python
>>> operation = video_client.annotate_video(
...     request={"features": features, "input_content": input_content}
... )
>>>
```

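From here on, the API call succeeds (the response is retrieved).

Run the following and wait a while for GCP to return the processing result. operation.result() blocks until the job finishes or the timeout (300 seconds here) expires.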

```python
>>> timeout = 300
>>> result = operation.result(timeout=timeout)
```

Once the result has come back, check the return value.

```python
>>> print(type(result))
<class 'google.cloud.videointelligence_v1.types.video_intelligence.AnnotateVideoResponse'>
>>>
```

Print the contents of the response.

```
>>> print(result)
annotation_results {
  segment {
    start_time_offset {
    }
    end_time_offset {
      seconds: 420
      nanos: 253166000
    }
  }
  object_annotations {
    entity {
      entity_id: "/m/019cfy"
      description: "stadium"
      language_code: "en-US"
    }
    frames {
      normalized_bounding_box {
        left: 0.0026057958602905273
        top: 0.16879695653915405
        right: 0.7864463925361633
        bottom: 0.6468670964241028
      }
      time_offset {
      }
    }
  ( omitted )
    frames {
      normalized_bounding_box {
        left: 0.14923791587352753
        top: 0.7004411220550537
        right: 0.840565025806427
        bottom: 0.9982033967971802
      }
      time_offset {
        seconds: 420
        nanos: 219800000
      }
    }
    segment {
      start_time_offset {
        seconds: 417
        nanos: 16600000
      }
      end_time_offset {
        seconds: 420
        nanos: 219800000
      }
    }
    confidence: 0.7548047304153442
  }
}
>>>
```
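The time offsets in this output are printed as a seconds field plus a nanos field. Fields with the value 0 are left out of the printed text, which is why `start_time_offset { }` means 0 s. The sketch below turns the last segment printed above into seconds. `offset_to_seconds` is a hypothetical helper that works on the printed numbers. If the installed 2.x client exposes these fields as `datetime.timedelta` (as the proto-plus based library appears to do), `segment.start_time_offset.total_seconds()` should give the same value directly.

```python
def offset_to_seconds(seconds=0, nanos=0):
    """Convert a printed Duration (seconds + nanos) to float seconds.

    Missing fields default to 0, matching how zero-valued fields are
    omitted from the printed output.
    """
    return seconds + nanos / 1e9


# The last segment printed above: 417 s + 16600000 ns to 420 s + 219800000 ns.
start = offset_to_seconds(seconds=417, nanos=16600000)   # about 417.0166 s
end = offset_to_seconds(seconds=420, nanos=219800000)    # about 420.2198 s
print(f"tracked for {end - start:.4f} s")
# tracked for 3.2032 s
```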

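According to a site I consulted, the elements of the response can be retrieved with

result.annotation_results[0].object_annotations

annotation_results holds one entry per input video, so with a single video [0] is the only entry.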

```
>>> tmp = result.annotation_results[0].object_annotations
>>> print(tmp[0:1])
[entity {
  entity_id: "/m/019cfy"
  description: "stadium"
  language_code: "en-US"
}
frames {
  normalized_bounding_box {
    left: 0.0026057958602905273
    top: 0.16879695653915405
    right: 0.7864463925361633
    bottom: 0.6468670964241028
  }
  time_offset {
  }
}
frames {
  normalized_bounding_box {
    left: 0.0013162504183128476
    top: 0.1661386787891388
    right: 0.7859321236610413
    bottom: 0.6482422947883606
  }
  time_offset {
    nanos: 100100000
  }
}
( omitted )
frames {
  normalized_bounding_box {
    left: 0.017169591039419174
    top: 0.1032341942191124
    right: 0.9880898594856262
    bottom: 0.9047158360481262
  }
  time_offset {
    seconds: 2
    nanos: 102100000
  }
}
segment {
  start_time_offset {
  }
  end_time_offset {
    seconds: 2
    nanos: 102100000
  }
}
confidence: 0.5964018702507019
]
>>>
```
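The bounding boxes are normalized, meaning each coordinate is a fraction between 0 and 1 of the frame width or height. To draw a box, multiply by the frame size. The frame size does not appear in the output above, so the 1280x720 below is an assumption. In practice it has to come from the source video. `to_pixels` is a hypothetical helper.

```python
def to_pixels(left, top, right, bottom, width, height):
    """Scale a normalized bounding box (coordinates in [0, 1]) to pixels.

    Returns (left, top, right, bottom) as integer pixel positions.
    width/height are the frame size of the source video (assumed here).
    """
    return (round(left * width), round(top * height),
            round(right * width), round(bottom * height))


# First frame of the "stadium" annotation printed above, on an assumed 1280x720 frame.
print(to_pixels(0.0026057958602905273, 0.16879695653915405,
                0.7864463925361633, 0.6468670964241028, 1280, 720))
# (3, 122, 1007, 466)
```

With the real response, the arguments would come from `b = frame.normalized_bounding_box`, as `to_pixels(b.left, b.top, b.right, b.bottom, width, height)`.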

```python
>>> print(len(tmp))
1143
>>> object_annotations = result.annotation_results[0].object_annotations
>>>
>>> detected_object_name_list = [object_annotation.entity.description for object_annotation in object_annotations]
>>> len(detected_object_name_list)
1143
>>>
>>> uniq_detected_object_name_list = list(set(detected_object_name_list))
>>> len(uniq_detected_object_name_list)
94
>>> print(uniq_detected_object_name_list[0:20])
['car', 'tire', 'ball', 'outerwear', 'stadium', 'food', 'vacuum', 'light fixture', 'tie', 'digital clock', 'bicycle', 'backpack', 'refrigerator', 'tableware', 'plant', 'house', 'packaged goods', 'sun hat', 'footwear', 'wardrobe']
>>>
>>> print(uniq_detected_object_name_list)
['car', 'tire', 'ball', 'outerwear', 'stadium', 'food', 'vacuum', 'light fixture', 'tie', 'digital clock', 'bicycle', 'backpack', 'refrigerator', 'tableware', 'plant', 'house', 'packaged goods', 'sun hat', 'footwear', 'wardrobe', 'musical instrument', 'box', 't-shirt', 'animal', 'drink', 'treadmill', 'balloon', 'weapon', 'bag', 'bus', 'furniture', 'poster', 'kitchen appliance', 'head-mounted display', 'flower', 'shorts', 'truck', 'helmet', 'ladder', 'luggage', 'television', 'street light', 'electric razor', 'shelf', 'tripod', 'curtain', 'luggage & bags', '2d barcode', 'tent', 'glove', 'laptop', 'necklace', 'computer monitor', 'airplane', 'home appliance', 'handbag', 'clothing', 'building', 'wheel', 'table', 'motorcycle', 'shirt', 'fountain', 'table tennis racket', 'chair', 'table top', 'eyewear', 'bridge', 'pants', 'microphone', 'vest', 'punching bag', 'license plate', 'bench', 'suit', 'golf cart', 'bird', 'stop sign', 'container', 'mobile phone', 'hat', 'flag', 'person', 'glasses', 'electronic device', 'top', 'desk', 'coat', 'window', 'display device', 'shoe', 'jeans', 'window blind', 'parking meter']
>>>
```
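list(set(...)) gives the 94 distinct labels, but it drops how often each label occurred. Its order is also arbitrary: string hashing is randomized per interpreter run by default, so the list above can come out in a different order next time. `collections.Counter` keeps the counts, and `sorted()` gives a reproducible order. The sample labels below are hypothetical stand-ins for `detected_object_name_list`, and `top_labels` is a hypothetical helper.

```python
from collections import Counter


def top_labels(names, n=5):
    """Return the n most frequent labels as (label, count) pairs.

    Ties keep the order in which labels were first seen.
    """
    return Counter(names).most_common(n)


# Hypothetical sample; with the real response this would be
# detected_object_name_list from the session above.
names = ["person", "car", "person", "stadium", "person", "car"]
print(top_labels(names, n=2))   # [('person', 3), ('car', 2)]
print(sorted(set(names)))       # ['car', 'person', 'stadium']
```

To ignore low-confidence detections first, the list could be built as `[a.entity.description for a in object_annotations if a.confidence >= 0.7]`. The 0.7 threshold is an arbitrary example, not a recommended value.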