始めに

コンペ主催 cf. @solafune (https://solafune.com) にて、「夜間光データから土地価格を予測」というコンペが開催中です。

カラムが5行しかないということで、気軽に参加できます

こういった記事でのシェアリングを許可しているコンペです

(コード含め2時間くらいで書いたので、結構怪しいところ残っているかも。あまりリファクタリングできてません)

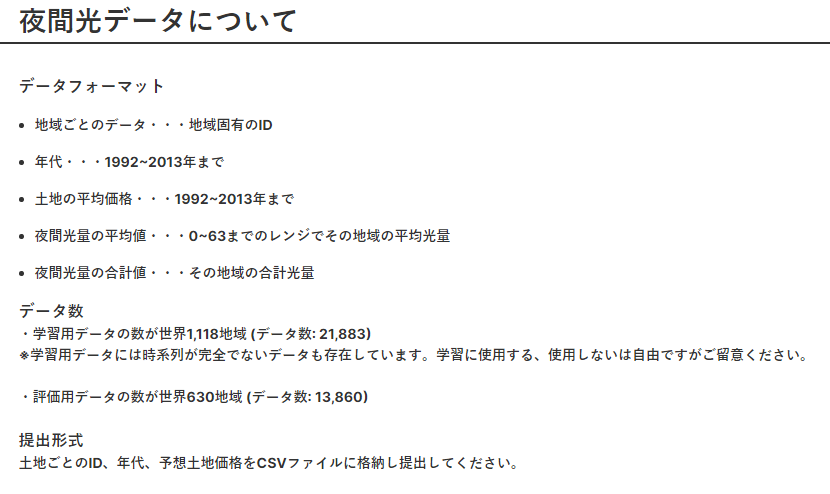

データ概要

公式より

把握しておくべきは、IDがtrainとtestで完全にわかれていることくらいです

予測対象は1992年~2013年までのある地域のすべての年の「土地の平均価格」です。

なので、地域ごとに1992年~2013年の時系列データととらえることも出来ます。

EDAはこの辺の記事みれば多分大体わかると思います。

Solafune 夜間光コンペ Baseline(xgb,lgb,cat)

[solafune] 夜間光データから土地価格を予測ベースモデル

コード

コード

import os

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

import seaborn as sns

from sklearn.metrics import mean_squared_error

from sklearn.model_selection import StratifiedKFold, GroupKFold

from copy import deepcopy

from sklearn.preprocessing import StandardScaler

sns.set_theme(style="ticks")

DATA_DIR = './data/'

OUTPUT_DIR = "output"

# データ読み込み

train = pd.read_csv(os.path.join(DATA_DIR, 'TrainDataSet.csv'))

test = pd.read_csv(os.path.join(DATA_DIR, 'EvaluationData.csv'))

submission = pd.read_csv(os.path.join(DATA_DIR, 'UploadFileTemplate.csv'))

# only full data

count_ids = train.PlaceID.value_counts().reset_index()

valid_PlaceID =count_ids[count_ids.PlaceID ==22]["index"]

n_train = len(train)

train = train[train.PlaceID.isin(valid_PlaceID)]

train = train.reset_index().drop(columns = "index")

print(f"Dropped : {round(100 - 100 * len(train)/n_train,1)}%")

from contextlib import contextmanager

from time import time

@contextmanager

def timer(logger=None, format_str='{:.3f}[s]', prefix=None, suffix=None):

if prefix: format_str = str(prefix) + format_str

if suffix: format_str = format_str + str(suffix)

start = time()

yield

d = time() - start

out_str = format_str.format(d)

if logger:

logger.info(out_str)

else:

print(out_str)

feature_cols = ['MeanLight', 'SumLight']

for f in feature_cols:

ss = StandardScaler()

train[f] = ss.fit_transform(train[[f]])

test[f] = ss.transform(test[[f]])

import torch

from torch.utils.data import Dataset

from torch import nn

import torch.optim as optim

class SeqDataset(Dataset):

def __init__(self, df, place_ids, feature_col, target_col, is_log = True, test = False):

self.df = df

self.place_ids = place_ids

self.feature_col = feature_col

self.target_col = target_col

self.is_log = is_log

if test:

self.df[target_col] = 0

def __len__(self):

return len(self.place_ids)

def __getitem__(self, idx):

place_id = self.place_ids[idx]

seq = self.df.loc[self.df.PlaceID == place_id, self.feature_col].values

label = self.df.loc[self.df.PlaceID == place_id, self.target_col].values

seq, label = torch.tensor(seq).float(), torch.tensor(label).float()

if self.is_log:

label = torch.log1p(label)

return seq, label

# dataset = SeqDataset(df = train, place_ids = train.PlaceID.unique(),

# feature_col = feature_cols, target_col = "AverageLandPrice")

testset = SeqDataset(df = test, place_ids = test.PlaceID.unique(),

feature_col = feature_cols, target_col = "AverageLandPrice", test = True)

# dataloader = torch.utils.data.DataLoader(dataset, batch_size = 5)

testloader = torch.utils.data.DataLoader(testset, batch_size = 1)

class BaselineLSTM(nn.Module):

def __init__(

self,

emb_dim=128,

rnn_dim=128,

hidden_size=128,

num_layers=2,

dropout=0.3,

rnn_dropout=0.3,

):

super().__init__()

self.emb_dim = emb_dim

self.rnn_dim = rnn_dim

self.hidden_size = hidden_size

self.num_layers = num_layers

self.dropout = dropout

self.rnn_dropout = rnn_dropout

self.lstm = nn.LSTM(

input_size=2,

hidden_size=hidden_size,

num_layers=num_layers,

dropout=rnn_dropout,

bidirectional=False,

batch_first=True,

)

self.fc = nn.Linear(hidden_size, 1)

def forward(self,x):

x, _ = self.lstm(x)

x = self.fc(x)

return x

EPOCHS = 10

LEARNING_LATE = 0.01

def fit_LSTM(X, y, cv=None,):

models = []

oof_pred = np.zeros_like(y, dtype=np.float)

for i, (idx_train, idx_valid) in enumerate(cv.split(X, y, train['PlaceID'])):

trainloader = torch.utils.data.DataLoader(

SeqDataset(

df = X.iloc[idx_train],

place_ids = X.iloc[idx_train].PlaceID.unique(),

feature_col = feature_cols,

target_col = "AverageLandPrice"

)

, batch_size = 4)

validloader = torch.utils.data.DataLoader(

SeqDataset(

df = X.iloc[idx_valid],

place_ids = X.iloc[idx_valid].PlaceID.unique(),

feature_col = feature_cols,

target_col = "AverageLandPrice"

)

, batch_size = 1)

model = BaselineLSTM()

criterion = nn.MSELoss()

#optimizer = optim.SGD(model.parameters(), lr=LEARNING_LATE)

optimizer = optim.Adam(model.parameters(), lr=LEARNING_LATE)

with timer(prefix='fit fold={} '.format(i + 1)):

for epoch in range(EPOCHS):

for data in trainloader:

seq_data,label = data

optimizer.zero_grad()

pred = model(seq_data)

loss = torch.sqrt(criterion(pred.squeeze(2), label))

loss.backward()

optimizer.step()

print(f"\repoch:{epoch} loss:{loss.item()}", end = "")

pred_i = []

for data in validloader:

seq_data, _ = data

with torch.no_grad():

model.eval()

pred_i.append(model(seq_data).squeeze(2).detach().numpy())

pred_i = np.array(pred_i).reshape(-1)

oof_pred[idx_valid] = pred_i

models.append(model)

print(f'Fold {i} RMSLE: {mean_squared_error(np.log1p(X.iloc[idx_valid].AverageLandPrice.values), pred_i):.4f}')

score = mean_squared_error(np.log1p(y), oof_pred)

print('FINISHED \ whole score: {:.4f}'.format(score))

return oof_pred, models

def create_predict(models, testloader):

pred = []

for data in testloader:

seq_data, _ = data

with torch.no_grad():

p = []

for model in models:

model.eval()

p.append(

model(seq_data).detach().numpy().reshape(-1)

)

pred.append(p)

pred = np.mean(pred, axis=1)

return pred

def fit_and_predict(train_df,

target_df, ):

target_name = "AverageLandPrice"

print('-' * 20 + ' start {} '.format(target_name) + '-' * 20)

y = target_df.values

cv = GroupKFold(n_splits=5)

# モデルの学習.

oof, models = fit_LSTM(train_df, y, cv=cv)

return oof, models

oof, models = fit_and_predict(train_df=train,

target_df=train.AverageLandPrice,)

# 予測モデルで推論実行

with timer(prefix='predict'):

pred = create_predict(

models,

testloader

)

submission.LandPrice = np.expm1(pred.reshape(-1))

submission.to_csv(os.path.join(OUTPUT_DIR, 'submission_lstm.csv'), index=False)

# LB 0.784676

本当はipynbなんですが、ipynbはれないので、中のコードだけバシバシはっておきます。

ipynbはsorafuneのディスコードに投下しておきます

To Do

モデルパラメータはすごく適当。FCやhidden sizeなど

学習のパラメータ。学習率やロスなどもわりとそのまま

入力の特徴量は2つのみ。もっといろいろ入れたら精度あがりそう

アンサンブルとかに使えるかも?