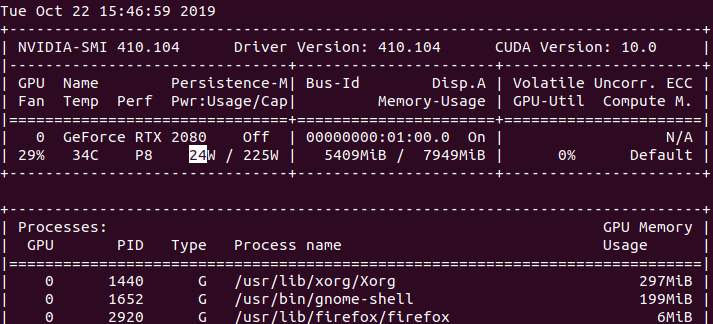

Continuously monitoring GPU memory usage with nvidia-smi

Posted at 2019-10-22
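
nvidia-smi can already poll on its own: `nvidia-smi --query-gpu=memory.used,memory.total --format=csv -l 1` prints one CSV row per GPU every second. The following is a minimal Python sketch of the same idea, assuming nvidia-smi is on `PATH`. The helper names `parse_memory_csv` and `poll_gpu_memory` are hypothetical illustrations, not part of any NVIDIA tool.

```python
import subprocess
import time

# Fields requested from nvidia-smi; valid names are listed by `nvidia-smi --help-query-gpu`.
QUERY = "index,memory.used,memory.total"


def parse_memory_csv(text):
    """Parse `--format=csv,noheader,nounits` output into (index, used_MiB, total_MiB) tuples."""
    rows = []
    for line in text.strip().splitlines():
        if not line.strip():
            continue  # skip stray blank lines
        index, used, total = (field.strip() for field in line.split(","))
        rows.append((int(index), int(used), int(total)))
    return rows


def poll_gpu_memory(interval_sec=1.0, iterations=None):
    """Hypothetical helper: run nvidia-smi repeatedly and print memory usage per GPU.

    With iterations=None it loops until interrupted (e.g. Ctrl+C).
    """
    count = 0
    while iterations is None or count < iterations:
        out = subprocess.run(
            ["nvidia-smi", f"--query-gpu={QUERY}", "--format=csv,noheader,nounits"],
            capture_output=True,
            text=True,
            check=True,  # raise if nvidia-smi exits with an error
        ).stdout
        for index, used, total in parse_memory_csv(out):
            print(f"GPU {index}: {used} / {total} MiB ({100 * used / total:.1f}%)")
        count += 1
        time.sleep(interval_sec)
```

Calling `poll_gpu_memory(interval_sec=2.0, iterations=10)` would print ten snapshots two seconds apart; leaving `iterations` as `None` keeps polling until the process is interrupted. Splitting the parsing out of the loop keeps the CSV handling testable without a GPU.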
