Introduction

I tried extracting text from images with the Azure Computer Vision API.

Development environment

- Windows 10

- Anaconda

- Python 3.6

- OpenCV 4.4.0

- Azure Computer Vision API

- Computer Vision client library (optional)

Setup

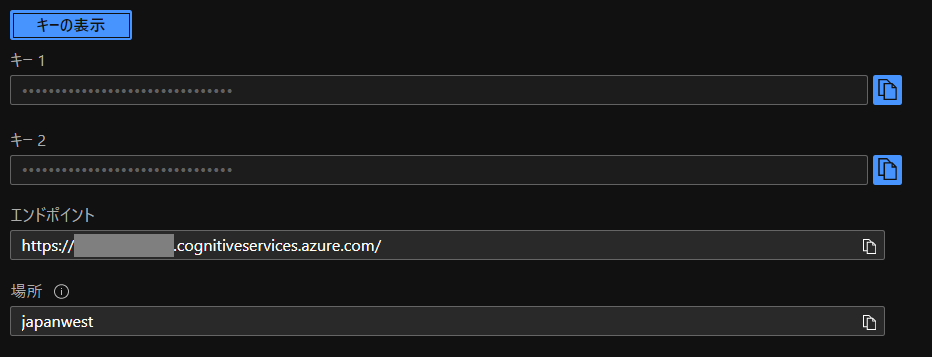

1. Log in to the Azure portal.

2. Create a Computer Vision API resource.

3. Note the key and endpoint of the resource you created.

4. Open Anaconda Prompt and create a Python 3.6 environment.

$ conda create -n py36 python=3.6

$ conda activate py36

5. Install the required libraries.

pip install matplotlib

pip install pillow

pip install opencv-python

pip install --upgrade azure-cognitiveservices-vision-computervision

6. Enter the key and endpoint you noted, then run the code in the following sections.

subscription_key = "<your subscription key>"

endpoint = "<your API endpoint>"

The endpoint also seems to work when you specify it by region (location).

endpoint = "https://<your region>.api.cognitive.microsoft.com/"
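The code in this article builds the request URL as `endpoint + "vision/v3.1/read/analyze"`, which only works when the endpoint ends with `/`. As a minimal sketch, a small helper can make the join independent of the trailing slash. The name `build_read_url` is hypothetical and not part of any Azure library.

```python
def build_read_url(endpoint, version="v3.1"):
    """Join the resource endpoint and the Read API path with exactly one slash."""
    # rstrip("/") makes "https://host" and "https://host/" behave the same
    return endpoint.rstrip("/") + "/vision/" + version + "/read/analyze"


# Both spellings of the endpoint give the same URL
print(build_read_url("https://japanwest.api.cognitive.microsoft.com"))
print(build_read_url("https://japanwest.api.cognitive.microsoft.com/"))
```

With this helper, either form of the endpoint copied from the portal can be used as is.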

Extracting text from an image URL

Quickstart: Extract printed and handwritten text using the Computer Vision REST API and Python

import json
import time
from io import BytesIO

import matplotlib.pyplot as plt
import requests
from matplotlib.patches import Polygon
from PIL import Image

subscription_key = "<your subscription key>"
endpoint = "<your API endpoint>"  # must end with "/"
# endpoint = "https://japanwest.api.cognitive.microsoft.com/"
text_recognition_url = endpoint + "vision/v3.1/read/analyze"

image_url = "https://raw.githubusercontent.com/MicrosoftDocs/azure-docs/master/articles/cognitive-services/Computer-vision/Images/readsample.jpg"
headers = {'Ocp-Apim-Subscription-Key': subscription_key}
data = {'url': image_url}

# Submit the image. The Read API is asynchronous, so the response
# only carries the URL to poll for the result.
response = requests.post(text_recognition_url, headers=headers, json=data)
response.raise_for_status()
operation_url = response.headers["Operation-Location"]

# Poll once per second until the result is ready or the operation fails
analysis = {}
poll = True
while poll:
    response_final = requests.get(operation_url, headers=headers)
    analysis = response_final.json()
    print(json.dumps(analysis, indent=4))
    time.sleep(1)
    if "analyzeResult" in analysis:
        poll = False
    if "status" in analysis and analysis['status'] == 'failed':
        poll = False

# Collect (bounding box, text) pairs for each line on the first page
polygons = []
if "analyzeResult" in analysis:
    polygons = [(line["boundingBox"], line["text"])
                for line in analysis["analyzeResult"]["readResults"][0]["lines"]]

# Draw the bounding boxes and the recognized text over the image
image = Image.open(BytesIO(requests.get(image_url).content))
ax = plt.imshow(image)
for polygon in polygons:
    vertices = [(polygon[0][i], polygon[0][i+1])
                for i in range(0, len(polygon[0]), 2)]
    text = polygon[1]
    patch = Polygon(vertices, closed=True, fill=False, linewidth=2, color='y')
    ax.axes.add_patch(patch)
    plt.text(vertices[0][0], vertices[0][1], text, fontsize=20, va="top")
plt.show()

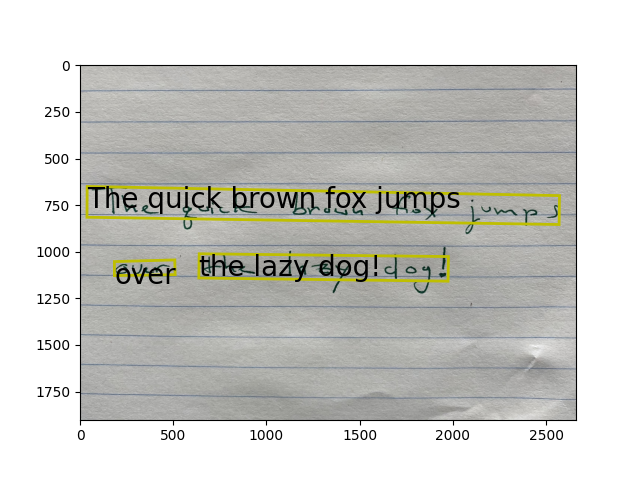
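The polling loop in the code above keeps running for as long as the service reports neither a result nor a failure. As a rough sketch of one way to bound the wait, the loop can be wrapped in a function with a timeout. The name `poll_until_done` and its parameters are my own assumptions, and the `fetch` argument stands in for `requests.get(operation_url, headers=headers).json()` so the example runs without an Azure key.

```python
import time


def poll_until_done(fetch, interval=1.0, timeout=60.0):
    """Call fetch() until status is 'succeeded' or 'failed', or raise on timeout."""
    deadline = time.monotonic() + timeout
    while True:
        analysis = fetch()
        if analysis.get("status") in ("succeeded", "failed"):
            return analysis
        if time.monotonic() >= deadline:
            raise TimeoutError("Read operation did not finish in time")
        time.sleep(interval)


# Fake responses used in place of the real HTTP call (illustration only)
_responses = iter([
    {"status": "notStarted"},
    {"status": "running"},
    {"status": "succeeded", "analyzeResult": {"readResults": []}},
])
result = poll_until_done(lambda: next(_responses), interval=0.01)
print(result["status"])
```

In the real script, `fetch` would be something like `lambda: requests.get(operation_url, headers=headers).json()`.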


Extracting text from a local image

import json
import os.path
import time

import cv2
import matplotlib.pyplot as plt
import requests
from matplotlib.patches import Polygon
from PIL import Image

subscription_key = "<your subscription key>"
endpoint = "<your API endpoint>"  # must end with "/"
# endpoint = "https://japanwest.api.cognitive.microsoft.com/"
text_recognition_url = endpoint + "vision/v3.1/read/analyze"

# The image is sent as raw bytes, so the content type is octet-stream
headers = {'Ocp-Apim-Subscription-Key': subscription_key, 'Content-Type': 'application/octet-stream'}

filename = "readsample.jpg"
root, ext = os.path.splitext(filename)

# image_data = open(filename, "rb").read()
# Load the image with OpenCV (BGR channel order) and show it in a window
color = cv2.imread(filename, cv2.IMREAD_COLOR)
cv2.namedWindow("color", cv2.WINDOW_NORMAL)
cv2.imshow("color", color)
cv2.waitKey(1)

# Re-encode the array in the original file format and convert it to bytes
image_data = cv2.imencode(ext, color)[1].tobytes()

response = requests.post(text_recognition_url, headers=headers, data=image_data)
response.raise_for_status()
operation_url = response.headers["Operation-Location"]

# Poll once per second until the result is ready or the operation fails
analysis = {}
poll = True
while poll:
    response_final = requests.get(operation_url, headers=headers)
    analysis = response_final.json()
    print(json.dumps(analysis, indent=4))
    time.sleep(1)
    if "analyzeResult" in analysis:
        poll = False
    if "status" in analysis and analysis['status'] == 'failed':
        poll = False

# Collect (bounding box, text) pairs for each line on the first page
polygons = []
if "analyzeResult" in analysis:
    polygons = [(line["boundingBox"], line["text"])
                for line in analysis["analyzeResult"]["readResults"][0]["lines"]]

# image = Image.open(BytesIO(image_data))
# OpenCV arrays are BGR, so convert to RGB before handing them to PIL
image = Image.fromarray(cv2.cvtColor(color, cv2.COLOR_BGR2RGB))
ax = plt.imshow(image)
for polygon in polygons:
    vertices = [(polygon[0][i], polygon[0][i+1])
                for i in range(0, len(polygon[0]), 2)]
    text = polygon[1]
    patch = Polygon(vertices, closed=True, fill=False, linewidth=2, color='y')
    ax.axes.add_patch(patch)
    plt.text(vertices[0][0], vertices[0][1], text, fontsize=20, va="top")
plt.show()

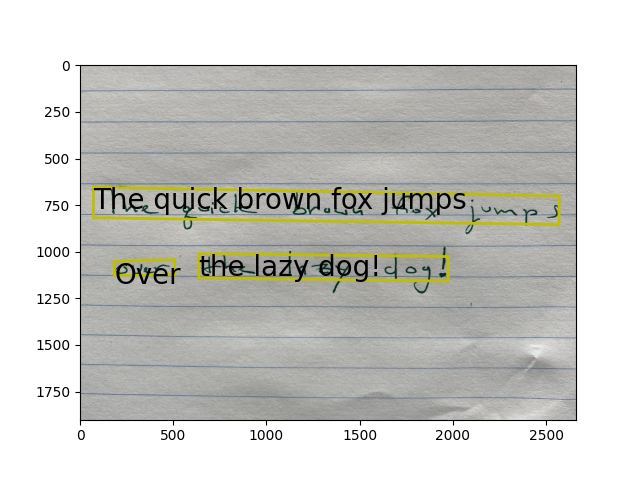
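If you do not need to display or preprocess the image with OpenCV, the commented-out `open(filename, "rb").read()` line in the code above is enough to build the request body. A minimal sketch of that alternative follows. The helper name `load_image_bytes` is hypothetical, and the file is sent exactly as stored on disk, without re-encoding.

```python
def load_image_bytes(path):
    """Return the raw bytes of an image file, ready to use as the request body."""
    # "rb" is required: the API expects the file's binary content unchanged
    with open(path, "rb") as f:
        return f.read()


# Usage in the script above (assumes readsample.jpg is in the working directory):
# image_data = load_image_bytes("readsample.jpg")
# response = requests.post(text_recognition_url, headers=headers, data=image_data)
```

Using a `with` block closes the file even if reading fails, which the one-line version does not guarantee.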


Using the Computer Vision client library

Quickstart: Use the Computer Vision client library

import time

from azure.cognitiveservices.vision.computervision import ComputerVisionClient
from azure.cognitiveservices.vision.computervision.models import OperationStatusCodes
from msrest.authentication import CognitiveServicesCredentials

subscription_key = "<your subscription key>"
endpoint = "<your API endpoint>"
# endpoint = "https://japanwest.api.cognitive.microsoft.com/"

computervision_client = ComputerVisionClient(endpoint, CognitiveServicesCredentials(subscription_key))

print("===== Batch Read File - remote =====")
remote_image_handw_text_url = "https://raw.githubusercontent.com/MicrosoftDocs/azure-docs/master/articles/cognitive-services/Computer-vision/Images/readsample.jpg"

# Start the asynchronous Read operation; raw=True exposes the response headers
recognize_handw_results = computervision_client.read(remote_image_handw_text_url, raw=True)

# The operation ID is the last segment of the Operation-Location URL
operation_location_remote = recognize_handw_results.headers["Operation-Location"]
operation_id = operation_location_remote.split("/")[-1]

# Poll once per second until the operation leaves the notStarted/running states
while True:
    get_handw_text_results = computervision_client.get_read_result(operation_id)
    if get_handw_text_results.status not in ['notStarted', 'running']:
        break
    time.sleep(1)

# Print each recognized line and its bounding box
if get_handw_text_results.status == OperationStatusCodes.succeeded:
    for text_result in get_handw_text_results.analyze_result.read_results:
        for line in text_result.lines:
            print(line.text)
            print(line.bounding_box)
print()

===== Batch Read File - remote =====
The quick brown fox jumps
[38.0, 650.0, 2572.0, 699.0, 2570.0, 854.0, 37.0, 815.0]
over
[184.0, 1053.0, 508.0, 1044.0, 510.0, 1123.0, 184.0, 1128.0]
the lazy dog!
[639.0, 1011.0, 1976.0, 1026.0, 1974.0, 1158.0, 637.0, 1141.0]
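Each bounding box in this output is a flat list of eight numbers, which reads as four (x, y) corner points in order. As a small sketch, the list can be split into vertices or reduced to an axis-aligned rectangle, for example to crop the region. The function names `to_vertices` and `to_rect` are hypothetical helpers, not part of the client library.

```python
def to_vertices(bounding_box):
    """Split [x1, y1, x2, y2, ...] into [(x1, y1), (x2, y2), ...]."""
    return list(zip(bounding_box[0::2], bounding_box[1::2]))


def to_rect(bounding_box):
    """Return (x_min, y_min, x_max, y_max) of the rectangle enclosing the box."""
    xs, ys = bounding_box[0::2], bounding_box[1::2]
    return min(xs), min(ys), max(xs), max(ys)


# Bounding box of the line "over" from the output above
box = [184.0, 1053.0, 508.0, 1044.0, 510.0, 1123.0, 184.0, 1128.0]
print(to_vertices(box))  # [(184.0, 1053.0), (508.0, 1044.0), (510.0, 1123.0), (184.0, 1128.0)]
print(to_rect(box))      # (184.0, 1044.0, 510.0, 1128.0)
```

The vertex list has the same shape as the `vertices` passed to `Polygon` in the REST examples, so the same drawing code can be reused with the client library results.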

That's all. Thanks for following along.