This is my progress report from the New Year's Eve Hackathon 2018.

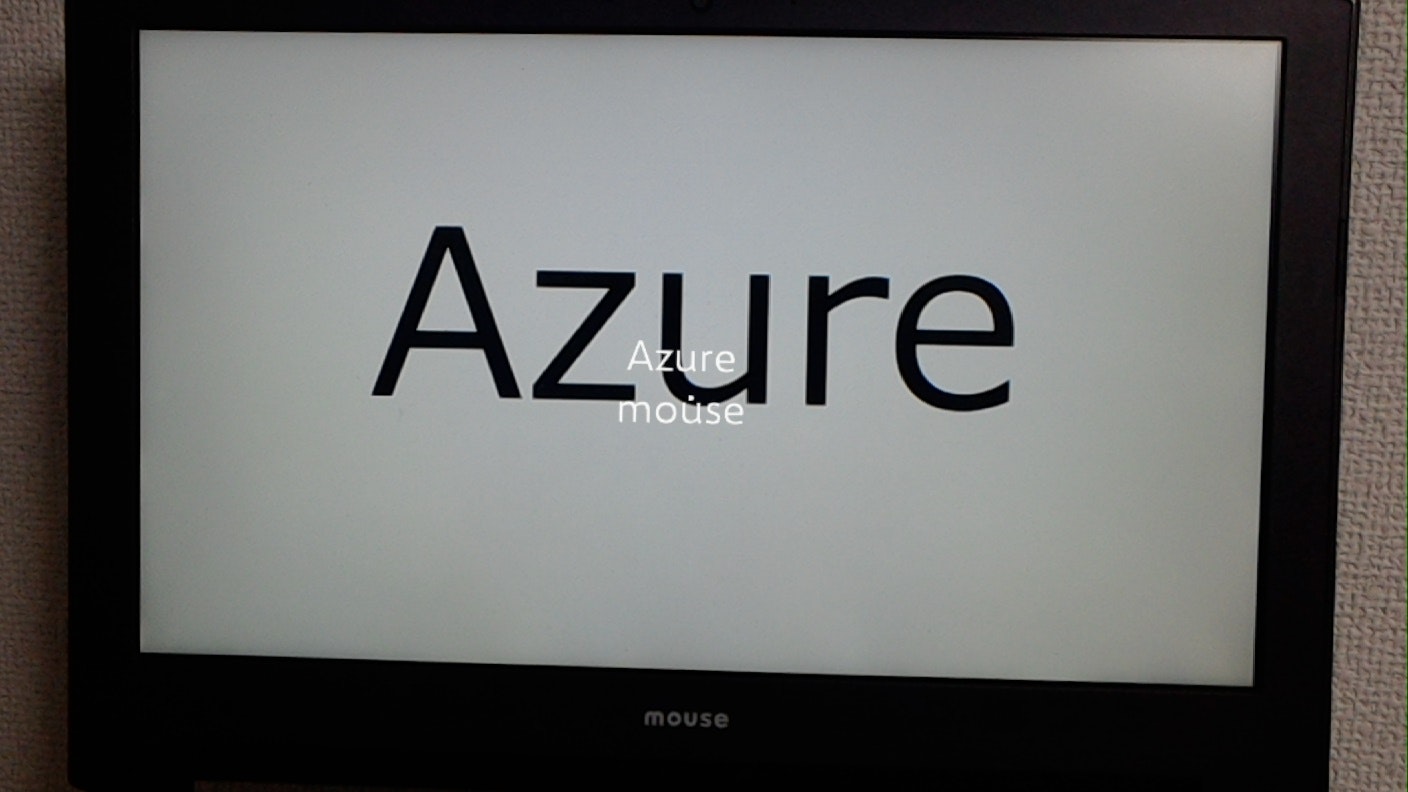

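I tried calling the RecognizeText API of the Azure Computer Vision API from HoloLens!

When you tap, the app captures an image, sends it to the RecognizeText API, and displays the text recognition result. Of course, you can also try this without a physical device!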

Development environment

- HoloLens RS5

- Visual Studio 2017 (15.9.2)

- Unity 2017.4.11.f1

- HoloToolkit-Unity-2017.4.3.0.unitypackage

- HoloToolkit-Unity-Examples-2017.4.3.0.unitypackage

- Azure Computer Vision API

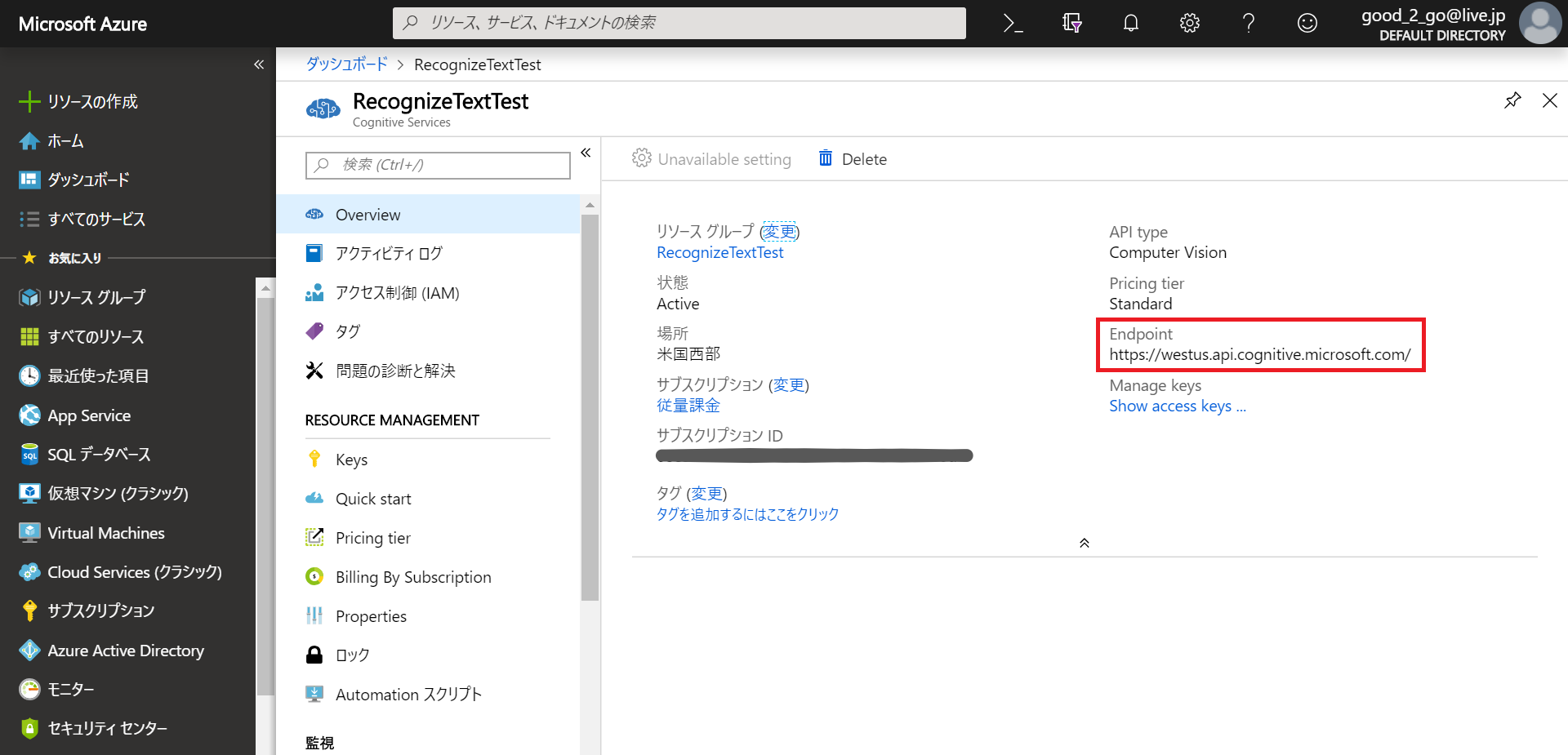

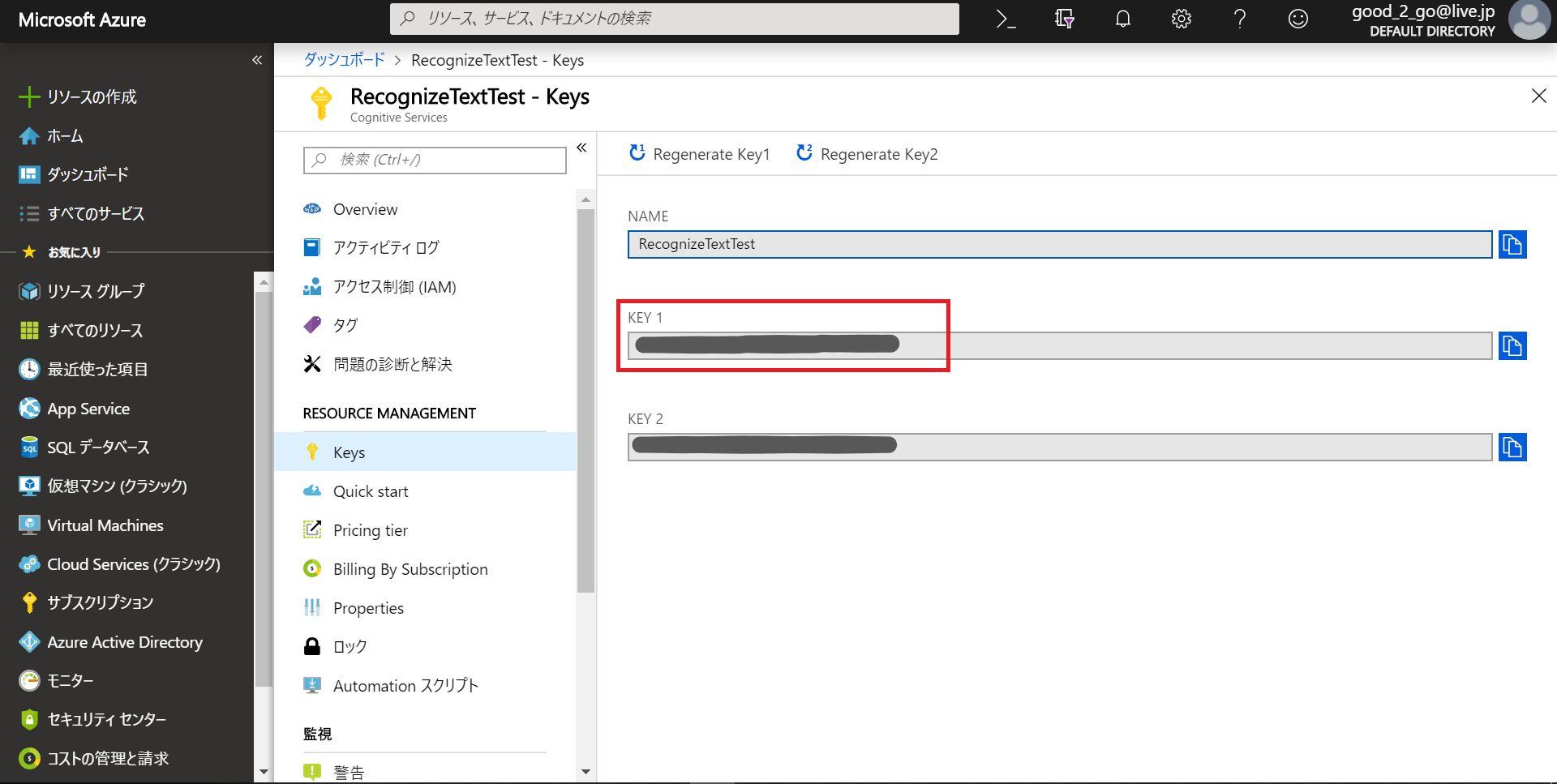

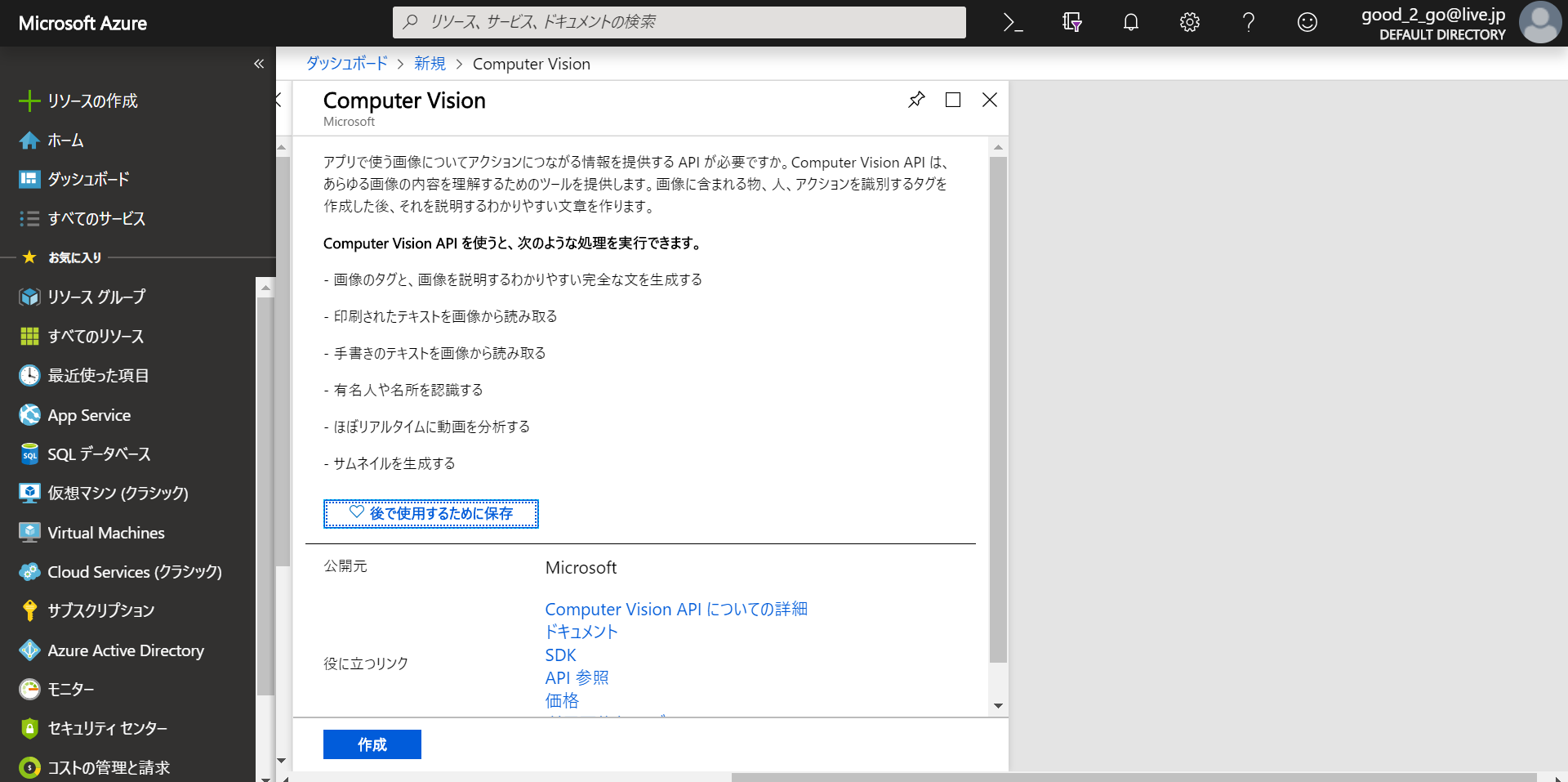

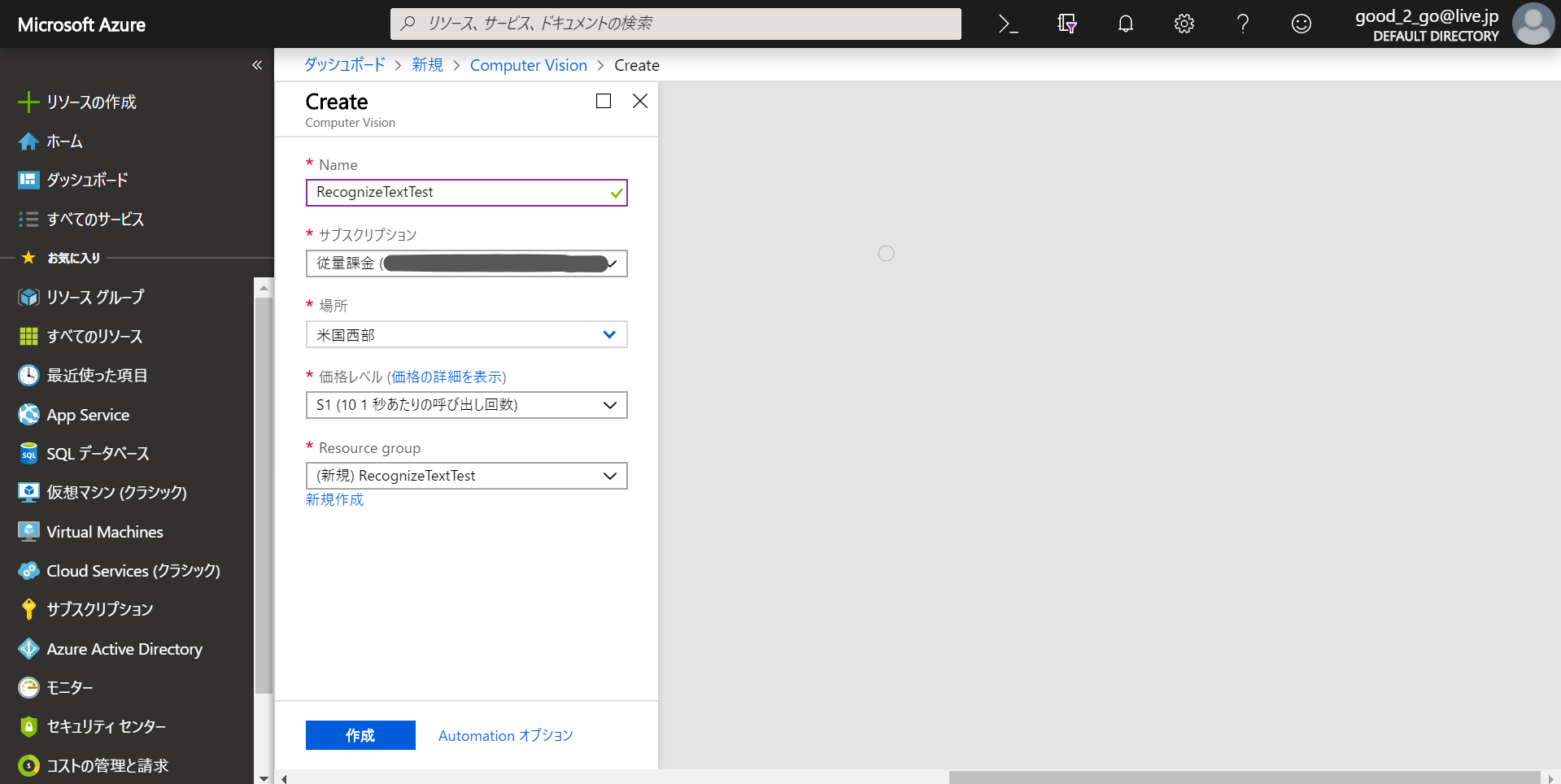

Azure setup

Open the Azure Portal and create a Computer Vision API resource. Note down its key and region, since the script below needs both.

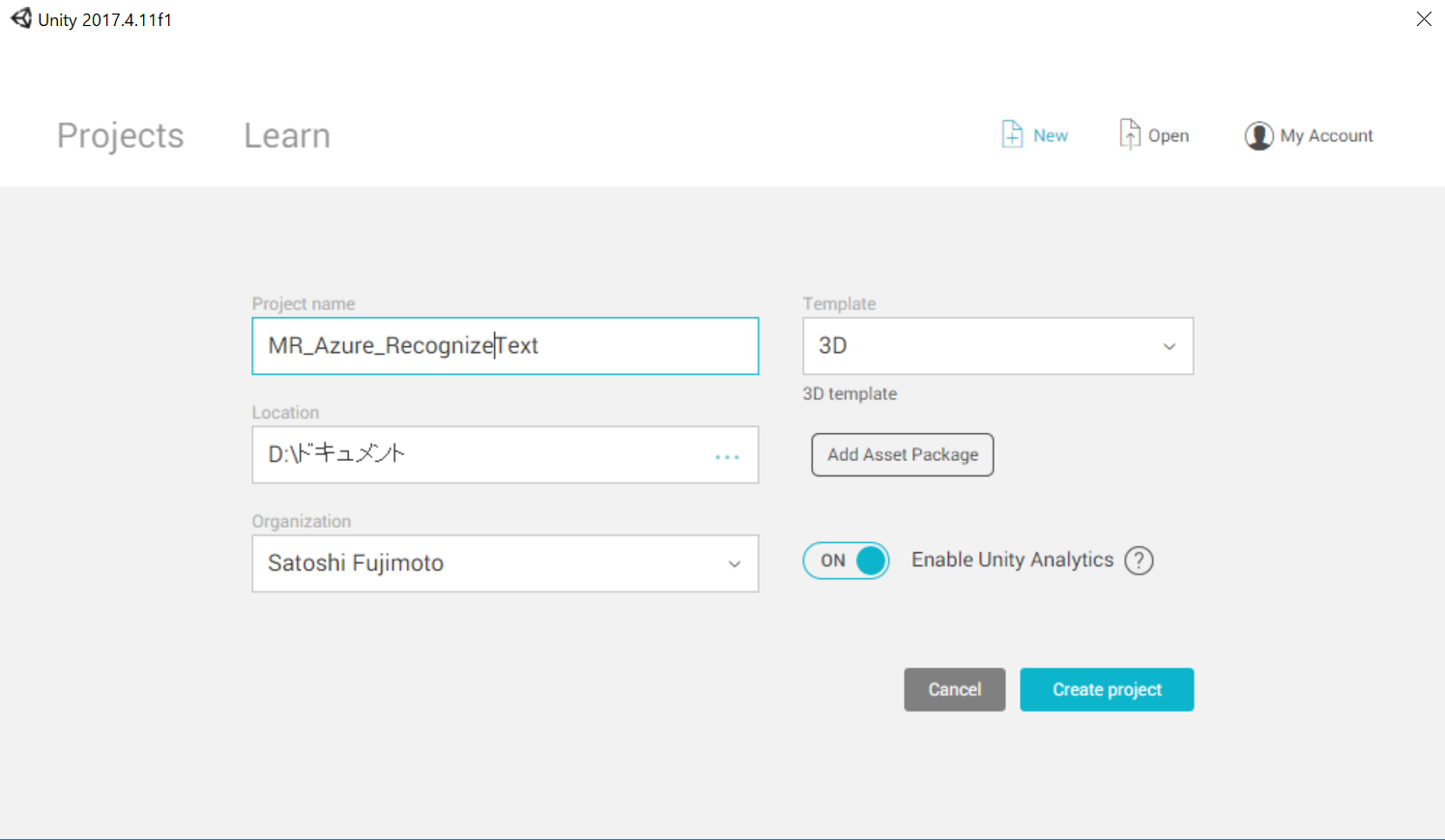

Creating the Unity project

Create a project and import HoloToolkit.

Then apply the usual settings.

Delete MainCamera, then drag and drop MixedRealityCameraParent, InputManager, and DefaultCursor from the Project view into the Hierarchy.

In the Inspector for MixedRealityCameraParent->MixedRealityCamera, set the Camera's Clear Flags to Solid Color. Then, in MixedRealityCameraManager, set ClearFlags to Color and set Near Clip under Transparent Display Settings to 0.2.

Under File->Build Settings, select Universal Windows Platform and click Switch Platform. In Player Settings, set Other Settings->Configuration->Scripting Backend to .NET. Under Publishing Settings->Capabilities, check WebCam and InternetClient. Under XR Settings, check Virtual Reality Supported and confirm that Windows Mixed Reality has been added to Virtual Reality SDKs.
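The same settings can also be applied from an editor script, which is handy when you set up projects like this often. The following is a minimal sketch, not part of the original project: the class name `HoloLensBuildSetup` and the menu path are hypothetical, and the API names are those of the Unity 2017.4 editor (some of them are deprecated in later versions). The Virtual Reality SDKs list is not touched here, so it is still worth checking in XR Settings by hand.

```csharp
#if UNITY_EDITOR
using UnityEditor;

// Hypothetical editor helper (not in the original project).
// Place it anywhere under Assets; the #if guard keeps it out of player builds.
public static class HoloLensBuildSetup
{
    [MenuItem("Tools/Apply HoloLens Build Settings")]
    public static void Apply()
    {
        // Same as Build Settings -> Universal Windows Platform -> Switch Platform.
        EditorUserBuildSettings.SwitchActiveBuildTarget(BuildTargetGroup.WSA, BuildTarget.WSAPlayer);

        // Other Settings -> Configuration -> Scripting Backend = .NET
        PlayerSettings.SetScriptingBackend(BuildTargetGroup.WSA, ScriptingImplementation.WinRTDotNET);

        // Publishing Settings -> Capabilities
        PlayerSettings.WSA.SetCapability(PlayerSettings.WSACapability.WebCam, true);
        PlayerSettings.WSA.SetCapability(PlayerSettings.WSACapability.InternetClient, true);

        // XR Settings -> Virtual Reality Supported
        PlayerSettings.virtualRealitySupported = true;
    }
}
#endif
```

After the script compiles, the settings are applied by choosing Tools->Apply HoloLens Build Settings in the editor menu.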

Save the scene with Ctrl+S. I gave it the same name as the project. In Build Settings, click Add Open Scenes to add the scene, click Build, create an App folder, and build into it.

RecognizeTextManager.cs

Create an empty GameObject and name it RecognizeTextManager.

Create a Scripts folder in the Project view, and create a RecognizeTextManager.cs file in it.

    using System;
    using System.IO;
    using System.Collections;
    using System.Collections.Generic;
    using UnityEngine;
    using UnityEngine.Networking;

    public class RecognizeTextManager : MonoBehaviour
    {
        // The classes below mirror the JSON returned by the RecognizeText operation.
        [Serializable]
        public class Words
        {
            public int[] boundingBox;
            public string text;
        }

        [Serializable]
        public class Lines
        {
            public int[] boundingBox;
            public string text;
            public Words[] words;
        }

        [Serializable]
        public class RecognitionResultData
        {
            public Lines[] lines;
        }

        [Serializable]
        public class RecognizedTextObject
        {
            public string status;
            public RecognitionResultData recognitionResult;
        }

        private string authorizationKey = "<insert your key>";
        private const string ocpApimSubscriptionKeyHeader = "Ocp-Apim-Subscription-Key";
        private string visionAnalysisEndpoint = "https://westus.api.cognitive.microsoft.com/vision/v2.0/recognizeText";
        private string requestParameters = "mode=Printed"; // or "mode=Handwritten"
        private string operationLocation;
        private string imageFilePath;
        internal byte[] imageBytes;
        internal string imagePath;
        public TextMesh DebugText;
        public static RecognizeTextManager instance;

        private void Awake()
        {
            instance = this;
        }

        public IEnumerator RecognizeText()
        {
            WWWForm webForm = new WWWForm();
            string uri = visionAnalysisEndpoint + "?" + requestParameters;

            using (UnityWebRequest unityWebRequest = UnityWebRequest.Post(uri, webForm))
            {
                // Send the captured image file as the raw request body.
                imageBytes = GetImageAsByteArray(imagePath);
                unityWebRequest.SetRequestHeader("Content-Type", "application/octet-stream");
                unityWebRequest.SetRequestHeader(ocpApimSubscriptionKeyHeader, authorizationKey);
                unityWebRequest.downloadHandler = new DownloadHandlerBuffer();
                unityWebRequest.uploadHandler = new UploadHandlerRaw(imageBytes);
                unityWebRequest.uploadHandler.contentType = "application/octet-stream";

                yield return unityWebRequest.SendWebRequest();

                long responseCode = unityWebRequest.responseCode;
                //Debug.Log(responseCode);

                // 202 Accepted: the result is fetched from the URL in the Operation-Location header.
                if (responseCode == 202)
                {
                    operationLocation = unityWebRequest.GetResponseHeader("Operation-Location");
                    if (string.IsNullOrEmpty(operationLocation))
                    {
                        Debug.Log("Operation-Location header is missing.");
                        yield break;
                    }

                    bool poll = true;
                    while (poll)
                    {
                        using (UnityWebRequest operationLocationRequest = UnityWebRequest.Get(operationLocation))
                        {
                            operationLocationRequest.SetRequestHeader(ocpApimSubscriptionKeyHeader, authorizationKey);
                            yield return operationLocationRequest.SendWebRequest();

                            // Stop polling if the request itself failed.
                            if (operationLocationRequest.isNetworkError || operationLocationRequest.isHttpError)
                            {
                                Debug.Log("Polling failed: " + operationLocationRequest.error);
                                yield break;
                            }

                            // Read the status of the polling request, not of the initial POST.
                            responseCode = operationLocationRequest.responseCode;
                            //Debug.Log("operationLocation : " + responseCode.ToString());

                            string jsonResponse = operationLocationRequest.downloadHandler.text;
                            //Debug.Log(jsonResponse);

                            RecognizedTextObject recognizedTextObject = JsonUtility.FromJson<RecognizedTextObject>(jsonResponse);
                            //Debug.Log(recognizedTextObject.status);

                            if (recognizedTextObject.status == "Succeeded")
                            {
                                // Show every recognized line, one per row.
                                string result = string.Empty;
                                foreach (Lines line in recognizedTextObject.recognitionResult.lines)
                                {
                                    result = result + line.text + "\n";
                                }
                                DebugText.text = result;
                                poll = false;
                            }

                            if (recognizedTextObject.status == "Failed")
                            {
                                poll = false;
                            }
                        }

                        // Wait before asking again so the service is not polled every frame.
                        if (poll)
                        {
                            yield return new WaitForSeconds(1.0f);
                        }
                    }
                }

                yield return null;
            }
        }

        private static byte[] GetImageAsByteArray(string imageFilePath)
        {
            // Dispose the stream and reader so the image file is not left open.
            using (FileStream fileStream = new FileStream(imageFilePath, FileMode.Open, FileAccess.Read))
            using (BinaryReader binaryReader = new BinaryReader(fileStream))
            {
                return binaryReader.ReadBytes((int)fileStream.Length);
            }
        }
    }
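The polling loop in RecognizeText() keeps running until the status becomes Succeeded or Failed, so a request that never finishes would poll forever. As a rough sketch of how the loop could be bounded, the helper below gives up after a fixed number of attempts. Everything here is hypothetical (`OperationPoller`, `getStatus`, `maxAttempts`, and the `"TimedOut"` value are not in the original script), and the status lookup is simplified to a synchronous delegate so the logic can be read without Unity.

```csharp
using System;
using System.Collections;

// Hypothetical helper, not part of the original script.
public static class OperationPoller
{
    // Calls getStatus up to maxAttempts times and reports the final status through onDone.
    public static IEnumerator Poll(Func<string> getStatus, int maxAttempts, Action<string> onDone)
    {
        for (int attempt = 0; attempt < maxAttempts; attempt++)
        {
            string status = getStatus();
            if (status == "Succeeded" || status == "Failed")
            {
                onDone(status);
                yield break;
            }

            // Inside a Unity coroutine this would be a timed wait such as WaitForSeconds;
            // yielding null keeps the sketch free of engine types.
            yield return null;
        }

        // Assumption: the caller treats this value as "gave up waiting".
        onDone("TimedOut");
    }
}
```

In the real coroutine the status comes from a web request that itself has to be yielded, so the attempt counter would sit around that request rather than around a delegate call.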

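Set authorizationKey to the key you noted down from the Azure Portal, and change the region in visionAnalysisEndpoint to match your own resource. Set requestParameters to mode=Printed (printed text such as logos) or mode=Handwritten (handwriting).

Create a 3DTextPrefab as a child object of MixedRealityCameraParent->MixedRealityCamera and name it DebugText.

Add RecognizeTextManager.cs to the RecognizeTextManager object with Add Component, and assign DebugText to its DebugText field.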
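To make the region and mode settings concrete, here is a small sketch that builds the request URI from the two values. The helper name `RecognizeTextEndpoint.Build` and its parameters are hypothetical; the URL pattern is simply the one from visionAnalysisEndpoint with the region swapped in.

```csharp
using System;

// Hypothetical helper, not part of the original script.
public static class RecognizeTextEndpoint
{
    // region: the Azure region of your Computer Vision resource, e.g. "westus".
    // handwritten: true for mode=Handwritten, false for mode=Printed.
    public static string Build(string region, bool handwritten)
    {
        string mode = handwritten ? "Handwritten" : "Printed";
        return string.Format(
            "https://{0}.api.cognitive.microsoft.com/vision/v2.0/recognizeText?mode={1}",
            region, mode);
    }
}
```

For example, `RecognizeTextEndpoint.Build("westus", false)` gives the same URI that the script assembles from visionAnalysisEndpoint and requestParameters by default.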

ImageCapture.cs

On a tap, this script captures an image and calls RecognizeText() in RecognizeTextManager.cs.

    using System.Collections;
    using System.Collections.Generic;
    using System.IO;
    using System.Linq;
    using UnityEngine;
    using UnityEngine.XR.WSA.Input;
    using UnityEngine.XR.WSA.WebCam;
    using HoloToolkit.Unity.InputModule;

    public class ImageCapture : MonoBehaviour, IInputClickHandler
    {
        public static ImageCapture instance;
        public int tapsCount;
        private PhotoCapture photoCaptureObject = null;
        private bool currentlyCapturing = false;

        private void Awake()
        {
            instance = this;
        }

        void Start()
        {
            // Register as a fallback handler so taps arrive even when no object has focus.
            InputManager.Instance.PushFallbackInputHandler(gameObject);
        }

        public void OnInputClicked(InputClickedEventData eventData)
        {
            // Ignore taps while a capture is still in progress.
            if (!currentlyCapturing)
            {
                currentlyCapturing = true;
                tapsCount++;
                ExecuteImageCaptureAndAnalysis();
            }
        }

        void OnCapturedPhotoToDisk(PhotoCapture.PhotoCaptureResult result)
        {
            // The photo is saved, so leave photo mode.
            photoCaptureObject.StopPhotoModeAsync(OnStoppedPhotoMode);
        }

        void OnStoppedPhotoMode(PhotoCapture.PhotoCaptureResult result)
        {
            photoCaptureObject.Dispose();
            photoCaptureObject = null;

            // Allow the next tap only after the camera has been released.
            currentlyCapturing = false;

            // Send the saved image to the RecognizeText API.
            StartCoroutine(RecognizeTextManager.instance.RecognizeText());
        }

        private void ExecuteImageCaptureAndAnalysis()
        {
            // Use the highest resolution the camera supports.
            Resolution cameraResolution = PhotoCapture.SupportedResolutions.OrderByDescending((res) => res.width * res.height).First();

            PhotoCapture.CreateAsync(false, delegate (PhotoCapture captureObject)
            {
                photoCaptureObject = captureObject;

                CameraParameters camParameters = new CameraParameters();
                camParameters.hologramOpacity = 0.0f; // for MR 0.9f
                camParameters.cameraResolutionWidth = cameraResolution.width;
                camParameters.cameraResolutionHeight = cameraResolution.height;
                camParameters.pixelFormat = CapturePixelFormat.BGRA32;

                captureObject.StartPhotoModeAsync(camParameters, delegate (PhotoCapture.PhotoCaptureResult result)
                {
                    // Save each capture under a name that includes the tap count.
                    string filename = string.Format(@"CapturedImage{0}.jpg", tapsCount);
                    string filePath = Path.Combine(Application.persistentDataPath, filename);
                    RecognizeTextManager.instance.imagePath = filePath;

                    photoCaptureObject.TakePhotoAsync(filePath, PhotoCaptureFileOutputFormat.JPG, OnCapturedPhotoToDisk);
                });
            });
        }
    }

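Running it

After building, open the MR_Azure_RecognizeText.sln generated in the App folder with Visual Studio, set the configuration to x86/Release, and deploy to the HoloLens. You can also just press the Play button in the Unity Editor.

The source code is here.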