i just learn data science from DataCamp about 2 months

it's my 1st step inside the data world

Environment

create a custom dockerfile from gw000/keras and modify it to add packages

# Dockerfile

FROM gw000/keras:2.0.5-py3-tf-cpu

# install dependencies from debian packages

RUN apt-get update -qq \

&& apt-get install --no-install-recommends -y \

python-matplotlib \

python-pillow

# install dependencies from python packages

RUN pip3 --no-cache-dir install \

pandas \

scikit-learn \

statsmodels

build it with tag name gw000/keras:2.0.5-py3-tf-cpu-datascience

$ docker build -t gw000/keras:2.0.5-py3-tf-cpu-datascience - < Dockerfile

Neural Network

download data from https://www.kaggle.com/c/digit-recognizer/data

create a sample code file called nn.py

model

- Rectified linear unit (ReLU) activation function for the first 3 layers

- Softmax activation function for the output layer

- Stochastic Optimization - Adam for optimizer

- categorical_crossentropy loss function for classification

# nn.py

import numpy as np

import pandas as pd

import keras

from keras.utils import to_categorical

from keras.models import Sequential

from keras.layers import Dense

# load train data

df = pd.read_csv('train.csv')

X = df.drop(['label'], axis=1).as_matrix()

y = to_categorical(df['label'])

# load test data

X_test = pd.read_csv('test.csv').as_matrix()

model = Sequential()

# hidden layer

model.add(Dense(25, activation='relu', input_shape=(X.shape[1],)))

model.add(Dense(25, activation='relu'))

model.add(Dense(25, activation='relu'))

# output layer

model.add(Dense(10, activation='softmax'))

model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])

model.fit(X, y, validation_split=0.3)

predict = np.argmax(model.predict(X_test), axis=1)

# write predict data to a csv file

result = pd.DataFrame({'ImageId': np.arange(1, predict.shape[0]+1), 'Label': predict})

result.to_csv('submission.csv', index=False)

run with docker

# run a keras container to execute bash inside

$ docker run -it --rm -v <volume-folder>:/srv gw000/keras:2.0.5-py3-tf-cpu-datascience bash

# execute nn.py in the volume folder of container

$ python3 nn.py

Using TensorFlow backend.

Train on 29399 samples, validate on 12601 samples

Epoch 1/10

2018-01-03 14:42:29.906464: W tensorflow/core/platform/cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use SSE4.1 instructions, but these are available on your machine and could speed up CPU computations.

2018-01-03 14:42:29.908490: W tensorflow/core/platform/cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use SSE4.2 instructions, but these are available on your machine and could speed up CPU computations.

2018-01-03 14:42:29.908599: W tensorflow/core/platform/cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use AVX instructions, but these are available on your machine and could speed up CPU computations.

2018-01-03 14:42:29.908667: W tensorflow/core/platform/cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use AVX2 instructions, but these are available on your machine and could speed up CPU computations.

2018-01-03 14:42:29.908725: W tensorflow/core/platform/cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use FMA instructions, but these are available on your machine and could speed up CPU computations.

29399/29399 [==============================] - 28s - loss: 7.4748 - acc: 0.5132 - val_loss: 5.7031 - val_acc: 0.6215

Epoch 2/10

29399/29399 [==============================] - 10s - loss: 5.1255 - acc: 0.6508 - val_loss: 3.9194 - val_acc: 0.6898

Epoch 3/10

29399/29399 [==============================] - 9s - loss: 1.4371 - acc: 0.8121 - val_loss: 0.4783 - val_acc: 0.8856

Epoch 4/10

...

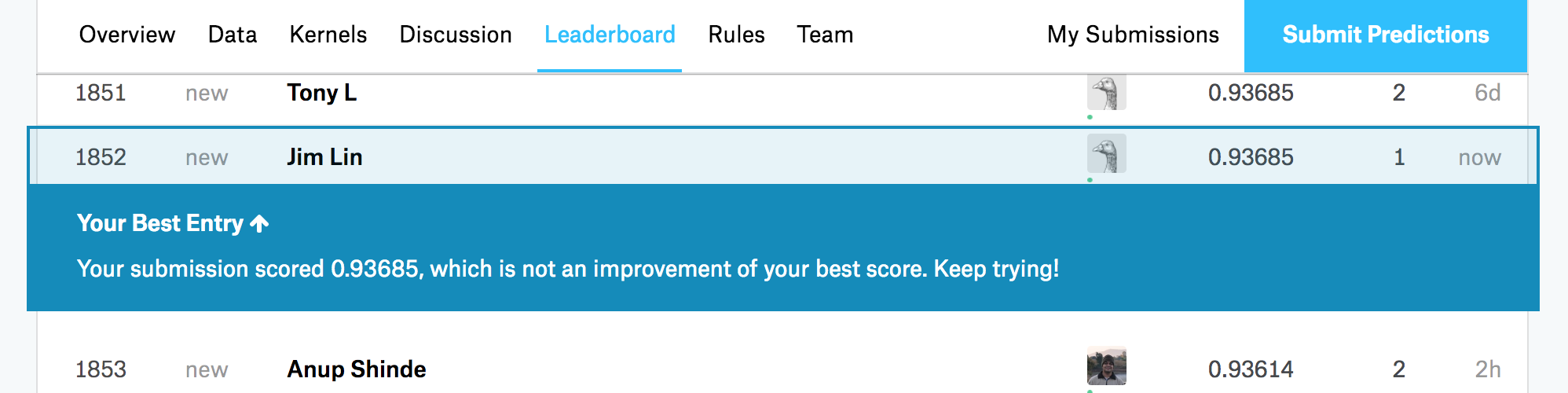

Rank

go to https://www.kaggle.com/c/digit-recognizer/submit

upload your submission csv file

it is a very simple example so the rank is not good!

you can improve it by CNN - Introduction to CNN Keras - 0.997 (top 6%)