Japanese English

- English -

1. Introduction

In this article, I'd like to share with you the quantization workflow I've been working on for six months. This is the output of know-how for converting Tensorflow checkpoints (.ckpt/.meta), FreezeGraph (.pb), saved_model (.pb), keras_model (.h5), Tensorflow.js models, and PyTorch checkpoints (.pth) into quantization models for Tensorflow Lite. Since Tensorflow was upgraded from v1.x to v2.x, it is necessary to take special steps to absorb the differences between the versions, and I often feel that there is a lack of material to start the conversion. Tensorflow, Tensorflow Lite, Keras, ONNX, PyTorch, and OpenVINO(OpenCV) are all used in combination.

I work hard on the quantization of the Neural Network every day. I'm using a lighter model to mass produce a quantized model with the goal of making fast reasoning without a GPU on edge terminals such as the RaspberryPi4. As an example, I did 8-bit integer quantization of the model, and the result of multistage inference between two models using only RaspberryPi4 CPU is shown in the following video. Two quantization models, Object Detection (MobileNetV2-SSDLite dm=0.5) and Head Pose Estimation, are run in series.

If you find the video too small to watch, **[PINTO_model_zoo](https://github.com/PINTO0309/PINTO_model_zoo#sample3---head-pose-estimation-multi-stage-inference-with-multi-model)** has an enlarged sample GIF, which can be viewed over Wi-Fi or wired.Head Pose Estimation の RaspberryPi4 CPU only + Tensorflow Lite + 4 Threads はかなりうまくいきました。 2段階推論にも関わらずサクサクの 13 FPS です。 発想力が足りないため見苦しいオッサンの顔でテストしてしまったことをお許しください。 あ〜、久々に達成感🤪https://t.co/hIwxA8eAZC

— Super PINTO (@PINTO03091) April 27, 2020

2. Table of contents

1. Introduction

2. Table of contents

3. Environment

4. Procedure

4-1. Check the model's INPUT and OUTPUT names and types, and change the batch size and type

4-1-1. In the case of a Tensorflow checkpoint

4-1-2. In the case of Tensorflow Freeze_Graph

4-1-3. In the case of Tensorflow saved_model

4-1-4. In the case of Tensorflow/Keras .h5/.json

4-2. Various quantization procedures

4-2-1. Quantization from a Tensorflow checkpoint (.ckpt)

4-2-1-1. Generating .meta from .index and .data-00000-of-00001

4-2-1-2. Generate Freeze_Graph from checkpoint (.meta)

4-2-1-3. Generate a saved_model from Freeze_Graph

4-2-1-4. Weight Quantization from saved_model (weight-only quantization)

4-2-1-5. Integer Quantization from saved_model (8-bit integer quantization)

4-2-1-6. saved_model to Full Integer Quantization (all 8-bit integer quantization)

4-2-1-7. Float16 Quantization from saved_model (Float16 quantization)

4-2-1-8. Full Integer Quantization to EdgeTPU convert

4-2-2. Quantization from a Tensorflow checkpoint (.meta)

4-2-3. Quantization from Tensorflow Freeze_Graph (.pb)

4-2-4. Quantization from Tensorflow saved_model (.pb)

4-2-5. Quantization from Tensorflow/Keras (.h5/.json)

4-2-5-1. Weight Quantization from .h5/.json (weight quantization)

4-2-5-2. Generating the calibration data set

4-2-5-3. Integer Quantization from .h5/.json (8-bit integer quantization)

4-2-5-4. Full Integer Quantization from .h5/.json (all 8-bit integer quantization)

4-2-5-5. Float16 Quantization from .h5/.json (Float16 quantization)

4-2-5-6. Full Integer Quantization to EdgeTPU convert

4-2-6. Quantization from a model for Tensorflow.js

4-2-6-1. Advance preparation

4-2-6-2. Generating a saved_model from Tensorflow.js

4-2-6-3. Import saved_model generated by Tensorflow v2.x into Tensorflow v1.x and process the input shape

4-2-6-4. Installation of Tensorflow v2.2.0

4-2-6-5. Weight Quantization from saved_model (Weight-only quantization)

4-2-6-6. Integer Quantization from saved_model (8-bit integer quantization)

4-2-6-7. Full Integer Quantization from saved_model (All 8-bit integer quantization)

4-2-6-8. Float16 Quantization from saved_model (Float16 quantization)

4-2-6-9. Full Integer Quantization to EdgeTPU convert

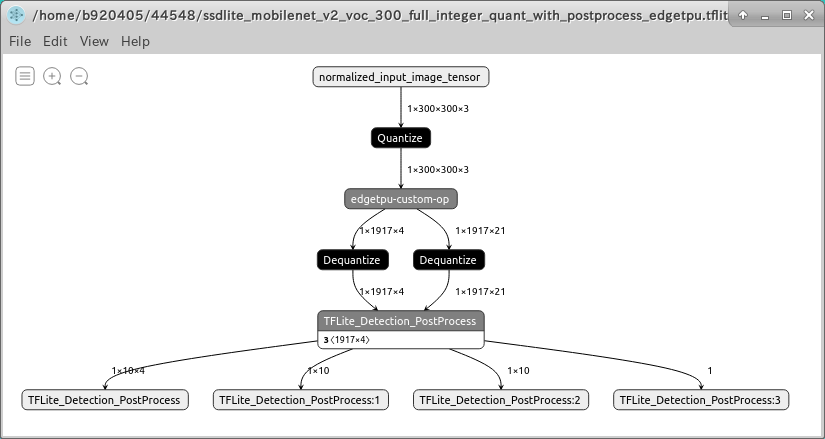

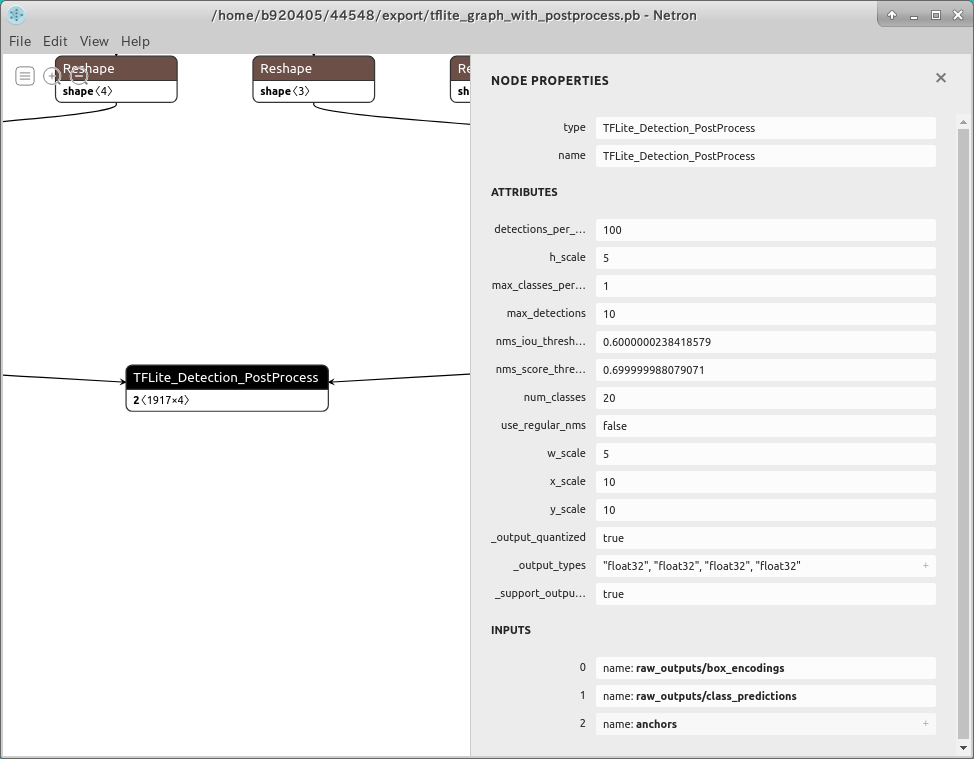

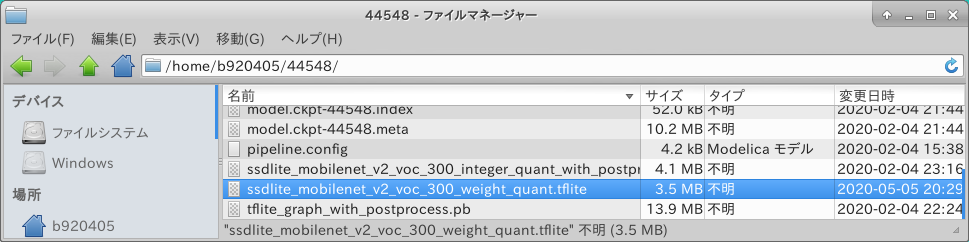

4-2-7. Quantize the model generated by the TensorFlow Object Detection API

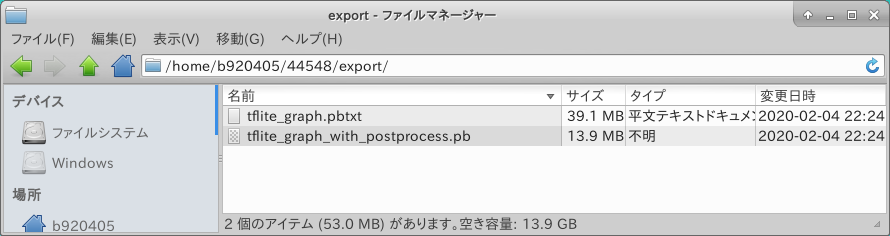

4-2-7-1. Generating a .pb file with Post-Process

4-2-7-2. Weight Quantization from Freeze_Graph (Weight-only quantization)

4-2-7-3. Integer Quantization from Freeze_Graph (8-bit integer quantization)

4-2-7-4. Full Integer Quantization from Freeze_Graph (All 8-bit integer quantization)

4-2-7-5. Float16 Quantization from Freeze_Graph (Float16 quantization)

4-2-7-6. Full Integer Quantization to EdgeTPU convert

4-2-8. Quantize models containing operations that are not supported by Tensorflow Lite but are supported by Tensorflow

4-2-8-1. Generate Mask-RCNN Inception V2 .pb file

4-2-8-2. Weight Quantization of Mask-RCNN Inception V2 (Weight-only quantization)

4-2-8-3. Float16 Quantization in Mask-RCNN Inception V2 (Float16 quantization)

4-2-8-4. Running a model with Flex Delegate (Tensorflow Select Ops) enabled

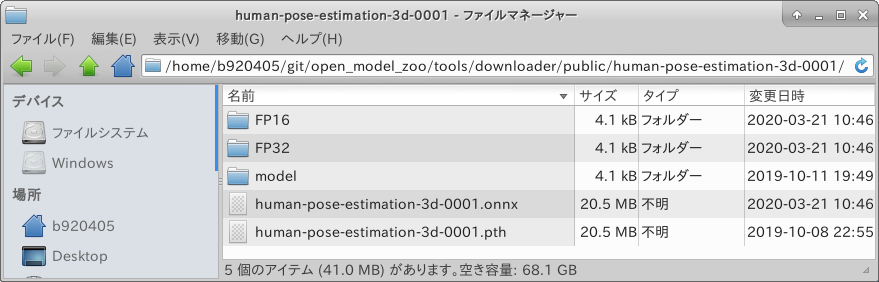

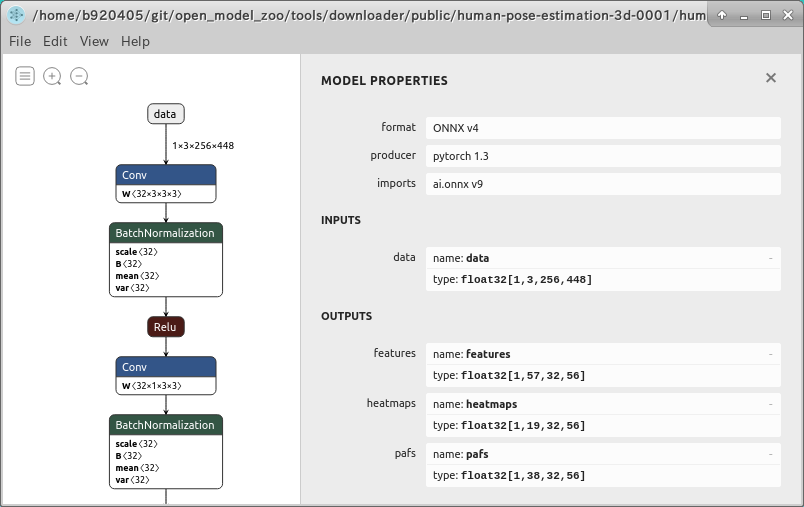

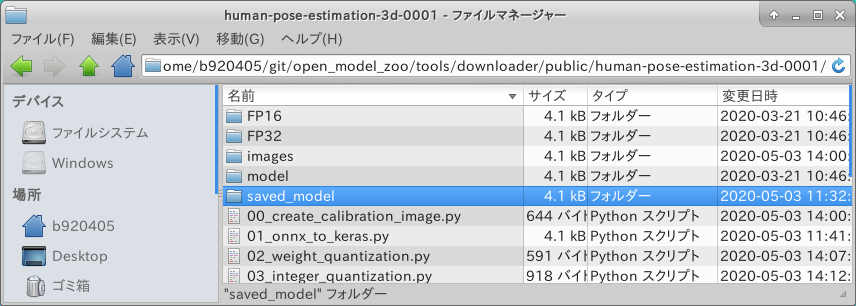

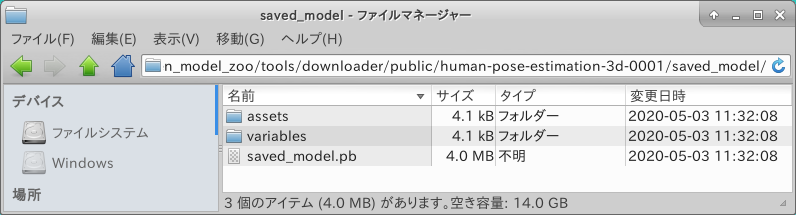

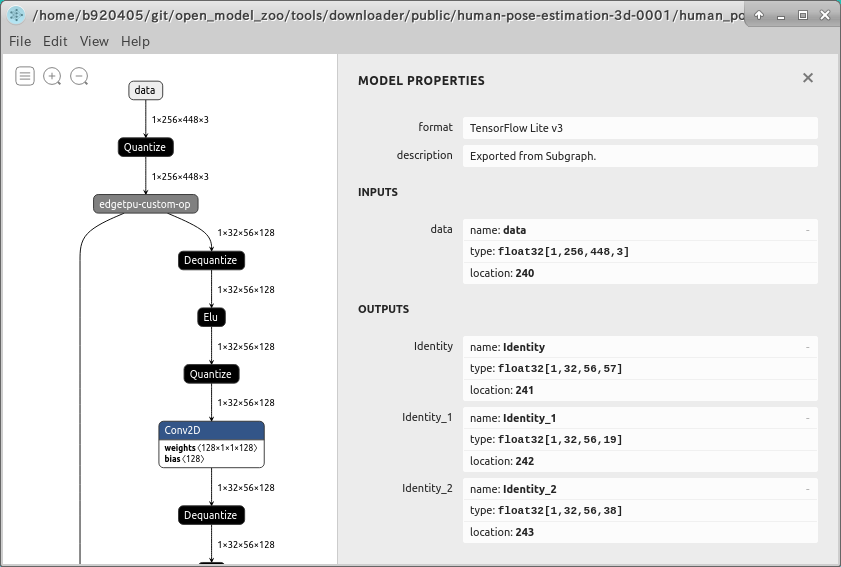

4-2-9. Quantization from a model for PyTorch

4-2-9-1. Advance preparation (PyTorch->ONNX)

4-2-9-2. ONNX->Keras conversion by onnx2keras

4-2-9-3. Weight Quantization from saved_model (Weight-only quantization)

4-2-9-4. Integer Quantization from saved_model (8-bit integer quantization)

4-2-9-5. Full Integer Quantization from saved_model (All 8-bit integer quantization)

4-2-9-6. Float16 Quantization from saved_model (Float16 quantization)

4-2-9-7. Full Integer Quantization to EdgeTPU convert

4-2-10. Quantization of MediaPipe's model BlazeFace(.tflite)

4-2-10-1. Build flatc and download schema.fbs

4-2-10-2. Download MediaPipe's BlazeFace model (.tflite)

4-2-10-3. Converting BlazeFace(.tflite) to saved_model(.pb)

4-2-10-4. Weight Quantization from saved_model (weight-only quantization)

4-2-10-5. Integer Quantization from saved_model (8-bit integer quantization)

4-2-10-6. Full Integer Quantization from saved_model (All 8-bit integer quantization)

4-2-10-7. Float16 Quantization from saved_model (Float16 quantization)

4-2-10-8. Full Integer Quantization to EdgeTPU convert

4-3. Performance benchmarks for the quantization model (.tflite)

4-3-1. Building the TFLite Model Benchmark Tool

4-3-2. Options for the TFLite Model Benchmark Tool

4-3-3. Benchmark example of a model that includes only standard Tensorflow Lite operations (No XNNPACK, 4 Threads)

4-3-4. Benchmark example of a model that includes only standard Tensorflow Lite operations (XNNPACK available, 4 Threads)

4-3-5. Benchmark examples of models with non-standard Tensorflow Lite operations (Flex enabled, no XNNPACK, 4 Threads)

4-3-6. Benchmark examples of models with non-standard Tensorflow Lite operations (Flex enabled, with XNNPACK, 4 Threads)

4-3-7. Execution log sample of Benchmark_Tool

3. Environment

- Tensorflow-GPU v1.15.2

- Tensorflow v2.1.0, v2.2.0 or tf-nightly

- Accelerated and Tuned Python API Tensorflow Lite

- PyTorch

- Caffe

- OpenVINO 2020.2

- OpenCV 4.2

- onnx2keras

- Netron

- RaspberryPi4 + Ubuntu aarch64

4. Procedure

4-1. Check the model's INPUT and OUTPUT names and types, and change the batch size and type

This is the hardest and most time-consuming first step. The amount of effort depends on the pattern of the conversion.

4-1-1. In the case of a Tensorflow checkpoint

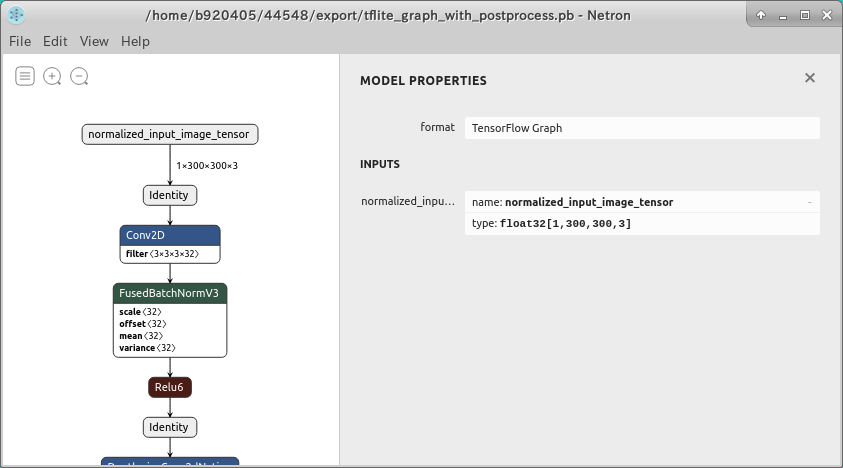

This pattern does not provide Freeze_Graph or saved_model. The quickest way to follow this pattern is to read or run the sample code for the Inference Test. Using Netron or Tensorflow's official visualization tools (Tensorboard or summarize_graph), it is not impossible to see the structure of the model, but the visualization fails because a lot of operations required for training are left in the file, or even if it is possible to visualize, the graph is too huge and it is difficult to find the INPUT and OUTPUT.

Now, let's check the INPUT/OUTPUT of the White-box-Cartoonization model that actually animates the live action as an example. In order to describe the various elements comprehensively, I have deliberately selected a high difficulty model with many moves this time. Note that you need to install Tensorflow v1.15.2 of the v1.x system to work on this model. If you have already installed Tensorflow v2.x, you need to uninstall it temporarily and install the v1.x system again. Alternatively, you can work in a Docker environment with Tensorflow already installed without polluting the environment.

Just in case, when I check the checkpoint that is offered. Yes, for some reason, kindly only the .meta file is not committed, is it? It's normal for people to lose motivation drastically at this point. That's right. If you started reading this article, you are not an ordinary person. Anyway, since it will not be a particular problem to proceed with the work, we will proceed as it is.

First of all, read cartoonize.py, the logic for the inference test. Then, let's look at the contents of the cartoonize() method, which is called right after main() at the beginning of the program. In the case of a simple, beginner-friendly test code, it only takes a minute to get to the INPUT definition part. Tensorflow's input operations are defined using placeholder. However, upon closer inspection, the "name" attribute is not defined. In this case, you can name your own name because it will make the subsequent conversion and confirmation work difficult. Also, the definition of the tensor is tainted with None. In the case of quantization, it is essential that the input resolution is fixed. Therefore, the input resolution is replaced by a fixed value. This time, I chose 720x720.

def cartoonize(load_folder, save_folder, model_path):

input_photo = tf.placeholder(tf.float32, [1, None, None, 3]) #<--- This is INPUT

network_out = network.unet_generator(input_photo)

final_out = guided_filter.guided_filter(input_photo, network_out, r=1, eps=5e-3)

all_vars = tf.trainable_variables()

gene_vars = [var for var in all_vars if 'generator' in var.name]

saver = tf.train.Saver(var_list=gene_vars)

def cartoonize(load_folder, save_folder, model_path):

input_photo = tf.placeholder(tf.float32, [1, 720, 720, 3], name='input') #<--- This is INPUT

network_out = network.unet_generator(input_photo)

final_out = guided_filter.guided_filter(input_photo, network_out, r=1, eps=5e-3)

all_vars = tf.trainable_variables()

gene_vars = [var for var in all_vars if 'generator' in var.name]

saver = tf.train.Saver(var_list=gene_vars)

The following is a note about placeholder in quantization.

1. N (batch size), H (height), W (width) and C (RGB channel) must all be fixed with integer values.

2. When defined by NCHW (channel-first), it should be defined or converted by NHWC (channel-last).

3. The type of placeholder should be tf.float32 (most models are defined in tf.uint8)

4. tf.cast operation does not support quantization operations and should be removed if possible.

By the way, the reason why it is 720x720 this time is because it is described in the logic of the preprocessing part of the image as follows, the operation to resize so that it does not go under 720 pixels in height and width. It seems that there is a limit to the size that can be specified depending on the model, so you have to read all the logic for inference tests or training logic. The White-box-Cartoonization gave an error and could not be deduced if the resolution was smaller than 720x720. If you don't want to read the logic, try repeatedly to see how small the resolution can be.

def resize_crop(image):

h, w, c = np.shape(image)

if min(h, w) > 720:

if h > w:

h, w = int(720*h/w), 720

else:

h, w = 720, int(720*w/h)

image = cv2.resize(image, (w, h),

interpolation=cv2.INTER_AREA)

h, w = (h//8)*8, (w//8)*8

image = image[:h, :w, :]

return image

Since many of the published models deal with images, a placeholder is defined for a uint8 type that takes an RGB value of 0-255, as shown below. In most cases, the next step in placeholder is to convert the type to float32 with a tf.cast operation. As mentioned above, tf.cast is an error when quantizing, so you should remove it at this time. When you actually run the inference, the image data read by OpenCV or Pillow will be of type uint8, so you need to cast it to type Float32 just before the business logic hands over the image to Tensorflow.

input_photo = tf.placeholder(tf.uint8, [1, 720, 720, 3], name='input')

casted_photo = tf.cast(input_photo, tf.float32)

input_photo = tf.placeholder(tf.float32, [1, 720, 720, 3], name='input')

Now let's look up the name of OUTPUT. The inference test logic of this model White-box-Cartoonization is so simple that the final OUTPUT was defined just below the INPUT definition line. The name of the variable is final_out, politely. There are two ways to look up the name of an OUTPUT without using Netron, summarize_graph or Tensorboard.

1. Digging deeper into the program and visually identifying the end of the model structure

2. Anyway, run a test run and find out the name of the final operation in the debug print

Since I'm a person who loves to cut corners, I took the method of 2.

def cartoonize(load_folder, save_folder, model_path):

input_photo = tf.placeholder(tf.float32, [1, 720, 720, 3], name='input')

network_out = network.unet_generator(input_photo)

final_out = guided_filter.guided_filter(input_photo, network_out, r=1, eps=5e-3) #<--- Here's an OUTPUT

print("input_photo.name =", input_photo.name) #<--- Added one line for debug printing of INPUT name.

print("input_photo.shape =", input_photo.shape) #<--- Added one more line for debug printing of INPUT shapes.

print("final_out.name =", final_out.name) #<--- Added one line for the debug print of OUTPUT name.

print("final_out.shape =", final_out.shape) #<--- Added one line for debug printing of OUTPUT shape.

all_vars = tf.trainable_variables()

gene_vars = [var for var in all_vars if 'generator' in var.name]

saver = tf.train.Saver(var_list=gene_vars)

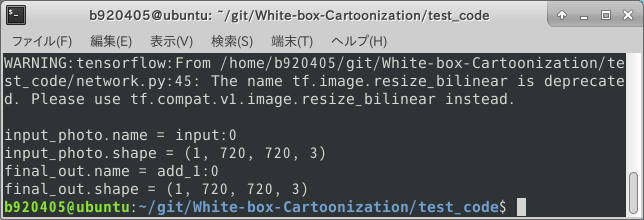

When I ran the program for the inference test, the names and shapes of the INPUT and OUTPUT are output in the debug print I added earlier. Apparently, the name of the OUTPUT is add_1:0. Also, it seems that the name and shape of placeholder, which I modified earlier, are correctly reflected.

This concludes the procedure for checking INPUT/OUTPUT names in a checkpoint model. I'll explain how to generate .meta, and I'll explain how to generate Freeze_Graph and saved_model in the steps that follow.

4-1-2. In the case of Tensorflow Freeze_Graph

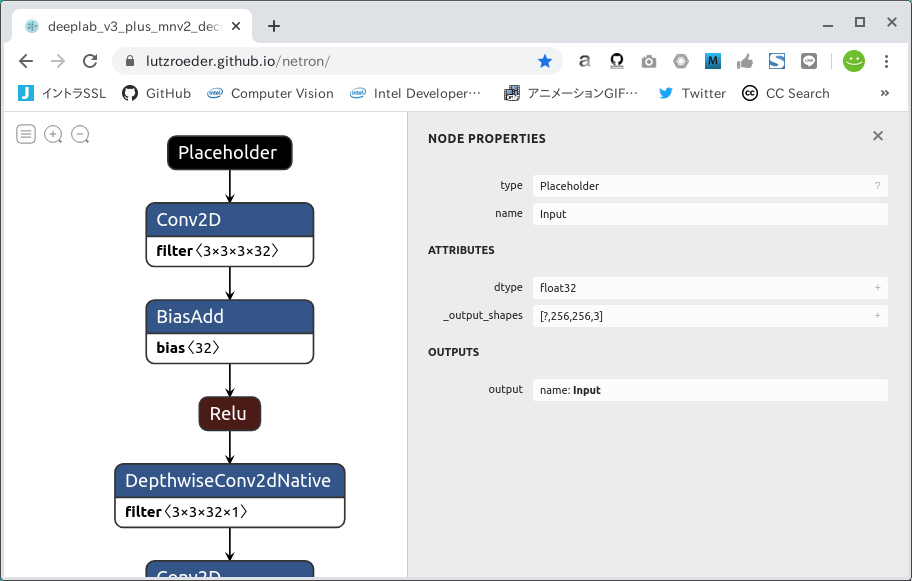

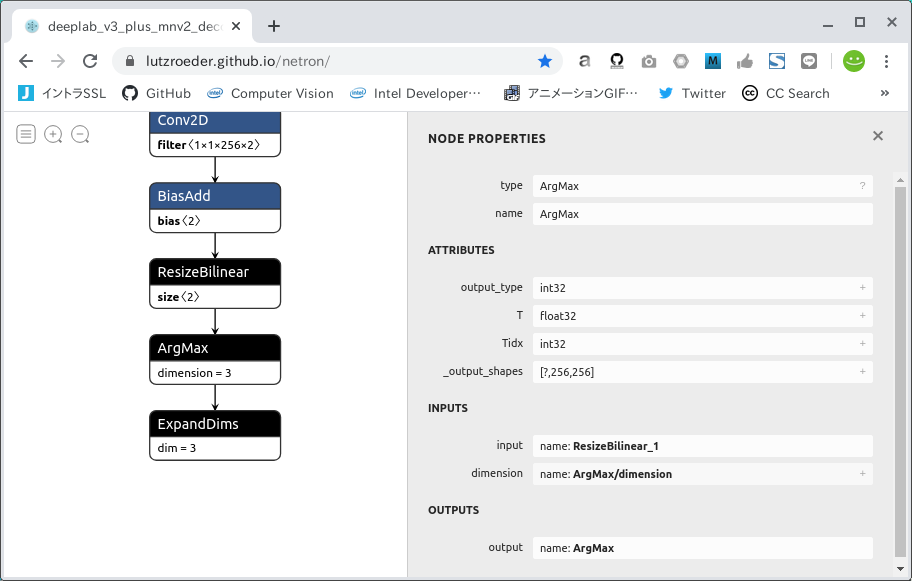

This is a working pattern when the model is provided in Freeze_Graph (.pb) format. It is very easy to identify the name of INPUT/OUTPUT in this case. Let's check the model of Semantic Segmentation Mobile-DeeplabV3-plus (MobileNetV2) for example.

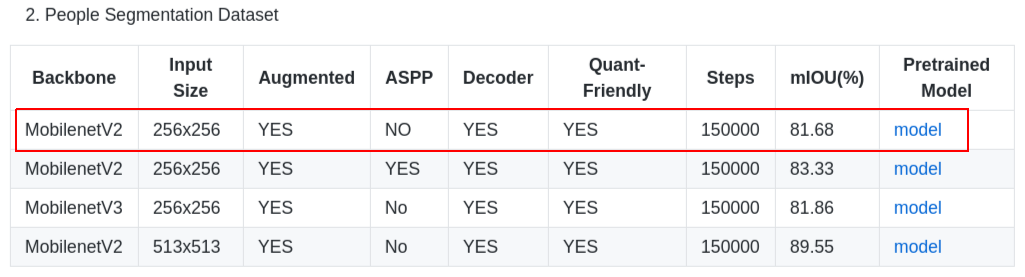

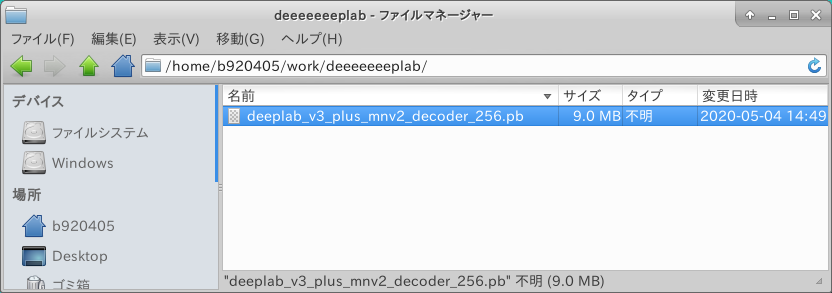

First, download the Freeze_Graph (.pb) file from the above repository. The only thing to note here is that models with special treatment such as ASPP will fail to quantize, so you should only get a model with a simple structure as possible.

When using the command line to download materials from Google Drive, it is necessary to bypass the confirmation dialog, so you can download by executing the 3-line command as shown below.

$ curl -sc /tmp/cookie "https://drive.google.com/uc?export=download&id=1VF5yMz_tIkTOVfgmIgg7tPAJJEEcZ49B" > /dev/null

$ CODE="$(awk '/_warning_/ {print $NF}' /tmp/cookie)"

$ curl -Lb /tmp/cookie "https://drive.google.com/uc?export=download&confirm=${CODE}&id=1VF5yMz_tIkTOVfgmIgg7tPAJJEEcZ49B" -o deeplab_v3_plus_mnv2_decoder_256.pb

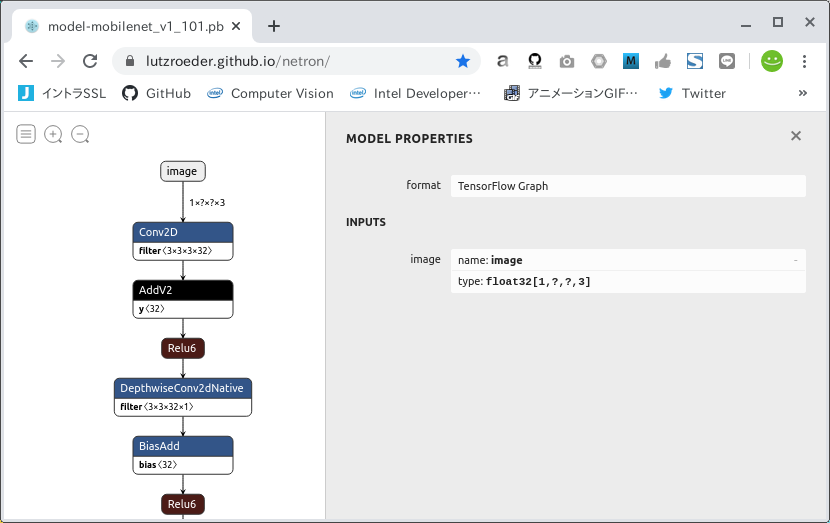

Check the structure of the model you have downloaded. There is a super useful site called Netron, which you can access first.

https://lutzroeder.github.io/netron/

"Open Model..." button and open the file deeplab_v3_plus_mnv2_decoder_256.pb which was downloaded earlier. The names of INPUT/OUTPUT are immediately known. INPUT is Input, shape and type is Float32 [?, 256, 256, 3], OUTPUT is ArgMax, shape and type is Float32 [?, 256, 256, 3], OUTPUT is ArgMax, and the shape and type is Float32 [?, 256, 256]. At first glance, you may think that ExpandDims is appropriate for the final OUTPUT, but in fact, it's not a problem if you select ArgMax, which is almost the same as Semantic Segmentation's model. Also, the ? part of the shape is synonymous with None in variable batches. However, this is not a problem for the quantization operation without immobilization. It is automatically converted to 1 when performing the quantization of the subsequent work.

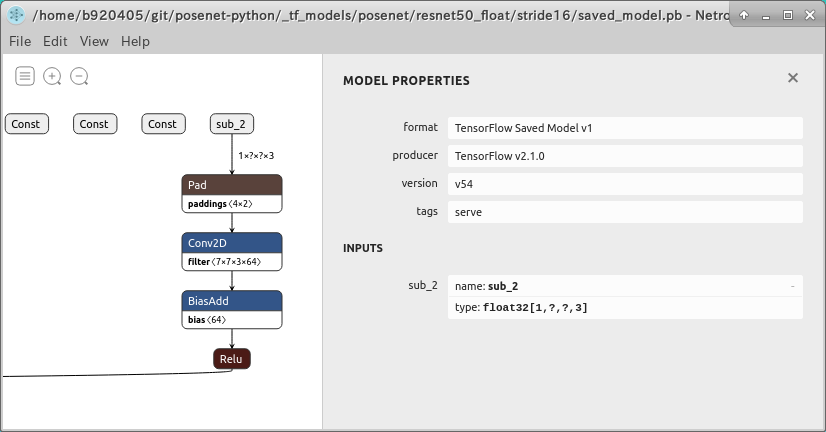

Now, the work up to this point has been too easy, hasn't it? Let's increase the level of difficulty a bit more. Next, let's try the following Python implementation of Tensorflow.js, based on Posenet v1.

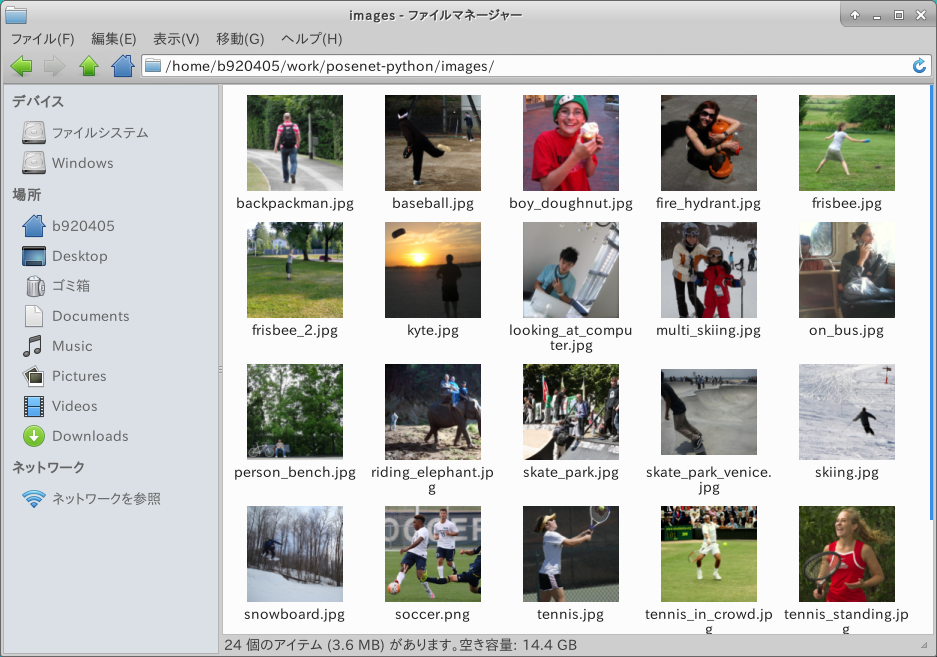

First, Clone the repository to get the Freeze_Graph and issue the following command. The argument of --image_dir can be any folder path where there is a human image file. I created a folder called images and put 24 images of people in it.

Convert a Tensorflow.js model to a Tensorflow model. The following processes require the introduction of Tensorflow v1.15.2.

$ sudo pip3 uninstall tensorboard-plugin-wit tb-nightly \

tf-estimator-nightly tensorflow-gpu \

tensorflow tf-nightly tensorflow_estimator

$ sudo pip3 install tensorflow-gpu==1.15.2

$ git clone https://github.com/rwightman/posenet-python.git

$ cd posenet-python

$ python3 image_demo.py \

--model 101 \

--image_dir ./images \

--output_dir ./output

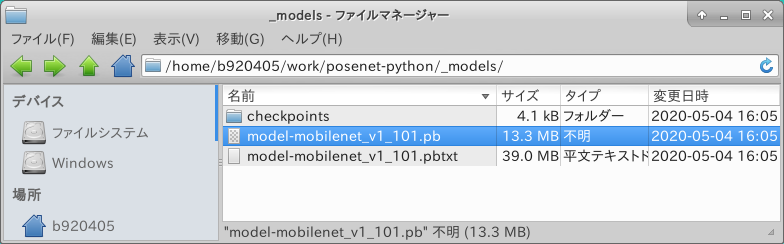

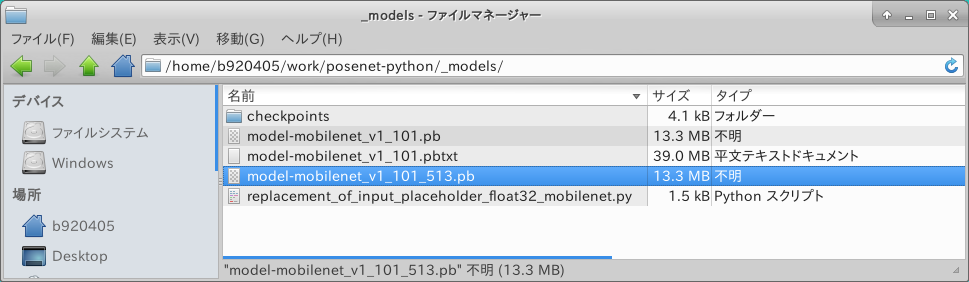

When the process is finished, three types of checkpoints, .pb and .pbtxt will be created under the folder _models.

It's based on MobileNetV1, so the accuracy is not very good. As an aside, the repository at the URL linked at the top of this article has already committed the high-precision, slow Posenet v2 quantization model, converted on a ResNet50 basis.

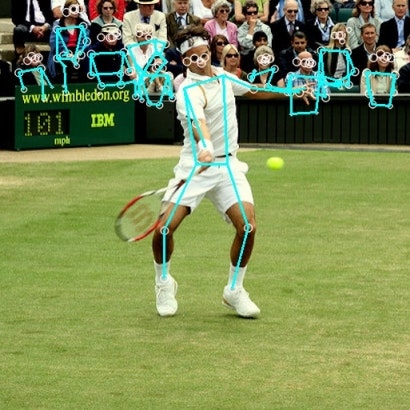

The figure below shows the results of the Posenet v2 inference to the same image from the ResNet50 backbone version. It's hard to tell, but the accuracy seems to have improved slightly.

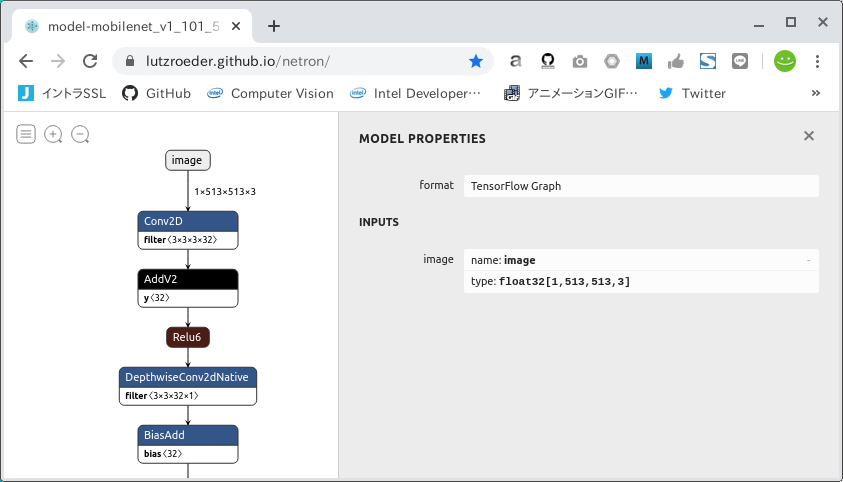

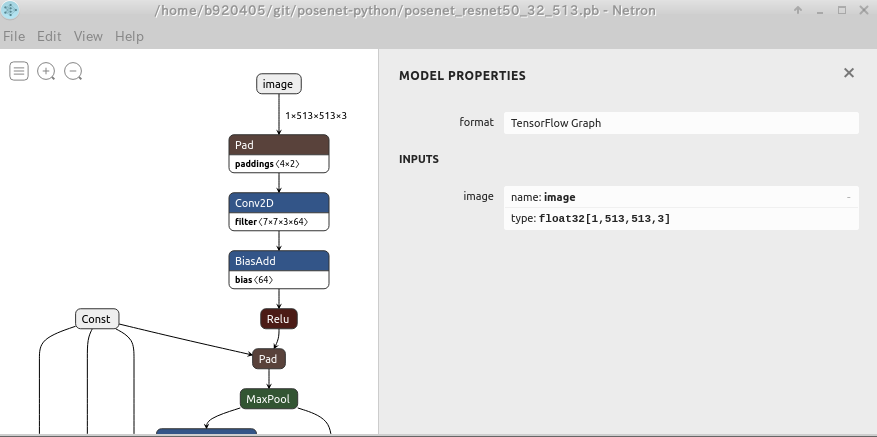

Now, let's visualize the .pb file using Netron as before. Freeze_Graph (.pb) has a name, type and shape name=image Float32 [1, ?, ?, 3] for name, type and shape. As it is, the quantization operation will fail. I will now explain how to convert the INPUT shape of a model whose H (height) and W (width) are 'None' with Freeze_Graph. Note that this procedure can only be performed with Tensorflow v1.x and does not work equally well for all models.

This section describes a program to convert the shape of the INPUT of Freeze_Graph. The general flow is as follows.

1. Defines a placeholder with a shape to be set after conversion.

2. Load a Freeze_Graph that you already have at hand.

3. Import the placeholder defined in 1.

4. ( Check the debug print to make sure placholder is captured correctly )

5. Remove all unnecessary Node with TransformGraph, a Tensorflow v1.x-based tool.

6. Output a processed Freeze_Graph to a .pb file.

### tensorflow-gpu==1.15.2

import tensorflow as tf

from tensorflow.tools.graph_transforms import TransformGraph

with tf.compat.v1.Session() as sess:

# shape=[1, ?, ?, 3] -> shape=[1, 513, 513, 3]

# name='image' specifies the placeholder name of the converted model

inputs = tf.compat.v1.placeholder(tf.float32, shape=[1, 513, 513, 3], name='image')

with tf.io.gfile.GFile('./model-mobilenet_v1_101.pb', 'rb') as f:

graph_def = tf.compat.v1.GraphDef()

graph_def.ParseFromString(f.read())

# 'image:0' specifies the placeholder name of the model before conversion

tf.graph_util.import_graph_def(graph_def, input_map={'image:0': inputs}, name='')

print([n for n in tf.compat.v1.get_default_graph().as_graph_def().node if n.name == 'image'])

# Delete Placeholder "image" before conversion

# see: https://github.com/tensorflow/tensorflow/tree/master/tensorflow/tools/graph_transforms

# TransformGraph(

# graph_def(),

# input_op_name,

# output_op_names,

# conversion options

# )

optimized_graph_def = TransformGraph(

tf.compat.v1.get_default_graph().as_graph_def(),

'image',

['heatmap','offset_2','displacement_fwd_2','displacement_bwd_2'],

['strip_unused_nodes(type=float, shape="1,513,513,3")'])

tf.io.write_graph(optimized_graph_def, './', 'model-mobilenet_v1_101_513.pb', as_text=False)

See Graph Transform Tool for specifications and usage of TransformGraph. Execute the created INPUT shape conversion program. The shape of the INPUT of the Freeze_Graph model-mobilenet_v1_101_513.pb generated after program execution is assumed to be [1, 513, 513, 3]. If you want to convert it to a slightly smaller shape, just change it like shape=[1, 257, 257, 3].

$ python3 replacement_of_input_placeholder_float32_mobilenet.py

Check the shape of the generated model-mobilenet_v1_101_513.pb with Netron.

This is the end of the procedure to find INPUT/OUTPUT names in a Freeze_Graph model and to convert a Freeze_Graph shape.

4-1-3. In the case of Tensorflow saved_model

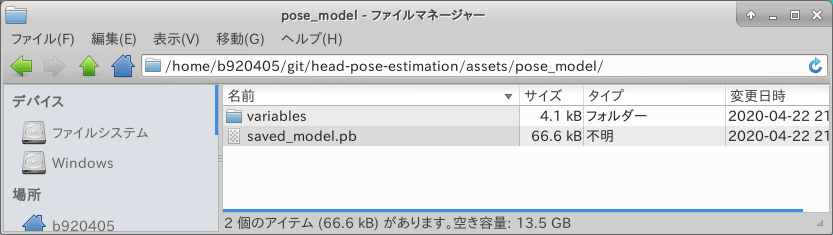

If the model is provided in the saved_model (.pb) format, this is the pattern. Again, it is very easy to identify the name of the INPUT/OUTPUT. So far, however, I don't see many examples of pre-trained models being offered in this format. This time, let's check the names of INPUT and OUTPUT based on the following Head Pose Estimation.

First, please clone the repository.

$ git clone https://github.com/yinguobing/head-pose-estimation.git

$ cd head-pose-estimation/assets

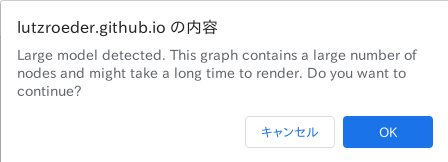

If you display a saved_model with a very large number of operators with the Web version of Netron, a warning is displayed as shown below, and it may take more than 5 minutes to render.

In the case of the proprietary version, it is displayed as shown below.

So, if you want to check saved_model format INPUT/OUTPUT easily, you can use standard command saved_model_cli. Since there is a folder called pose_model under the assets folder and saved_model.pb is deployed underneath it, run an analysis command on the pose_model folder directly underneath the assets folder.

$ saved_model_cli show --dir pose_model --all

MetaGraphDef with tag-set: 'serve' contains the following SignatureDefs:

signature_def['predict']:

The given SavedModel SignatureDef contains the following input(s):

inputs['image'] tensor_info:

dtype: DT_UINT8

shape: (-1, -1, -1, 3)

name: image_tensor:0

The given SavedModel SignatureDef contains the following output(s):

outputs['output'] tensor_info:

dtype: DT_FLOAT

shape: (-1, 136)

name: layer6/final_dense:0

Method name is: tensorflow/serving/predict

signature_def['serving_default']:

The given SavedModel SignatureDef contains the following input(s):

inputs['image'] tensor_info:

dtype: DT_UINT8

shape: (-1, -1, -1, 3)

name: image_tensor:0

The given SavedModel SignatureDef contains the following output(s):

outputs['output'] tensor_info:

dtype: DT_FLOAT

shape: (-1, 136)

name: layer6/final_dense:0

Method name is: tensorflow/serving/predict

Looking at the definition of signature_def['serving_default'], the INPUT is defined as image Uint8[-1, -1, -1, 3] and the OUTPUT as output Float32[-1, 136]. As in the previous example, N (batch size), H (height), and W (width) of the INPUT are set to -1, so the signature needs to be rewritten. (-1 is synonymous with ? and None). Rather than my half-hearted explanation, the following article Summary of SavedModel - Qiita - t_shimmura is very helpful, so please see it. In addition, if you want to talk only about Head Pose Estimation, the training script and the export script to saved_model are described in the following repository.

For saved_model, we have only described how to use the saved_model_cli command to check the structure. I'll touch on this later in the process along with the checkpoint -> saved_model or Freeze_Graph -> saved_model conversion scripts.

4-1-4. In the case of Tensorflow/Keras .h5/.json

If the model is provided in Keras (.h5/.json) format, this is the pattern.

For example, to check the names of INPUTs and OUTPUTs based on the above repository, you will need a .json file after the training as shown below.

model = Model(inputs=xxxx,outputs=yyyy)

# model save

model_json = model.to_json()

open(model_path + 'model.json', 'w').write(model_json)

model.save_weights(model_path + 'weights.h5')

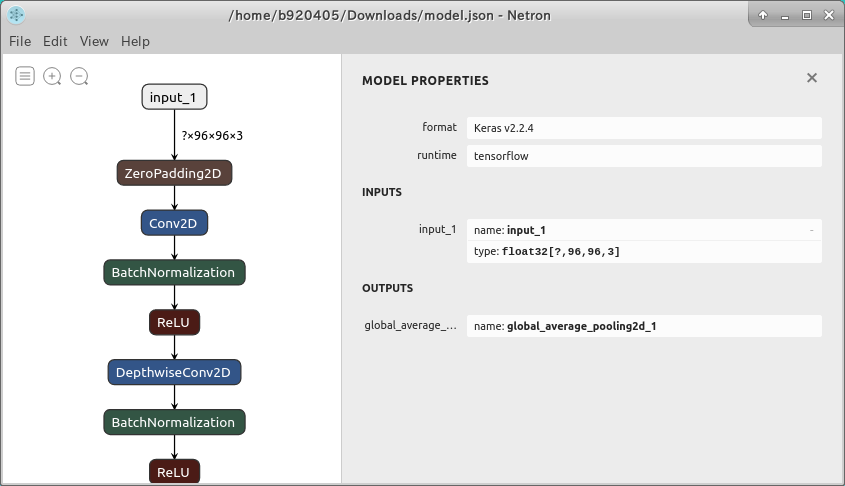

If you open the above example model.json with Netron, you can visualize it as shown below.

However, the syntax of Keras is very simple, so it would be faster to look directly at the program structure of the model.

If you want to change the shape of the input tensor, the QA of Keras -Transfer Learning - Change Input Tensor Shape is helpful. The easiest way seems to be to redefine an empty model with only a different INPUT size and re-transfer only the weights.

inputs = Input((None, None, 3))

.....

model = Model(inputs=[inputs], outputs=[outputs])

model.compile(optimizer='adam', loss='mean_squared_error')

model.load_weights('my_model_name.h5')

inputs2 = Input((512, 512, 3))

....

model2 = Model(inputs=[inputs2], outputs=[outputs])

model2.compile(optimizer='adam', loss='mean_squared_error')

model2.set_weights(model.get_weights())

4-2. Various quantization procedures

In this section I will try to quantize the various models into various patterns. In order to adapt the procedure to any situation, please be forgiven that there is some duplication of content in each section, and that some procedures are not necessary for quantization at the shortest distance.

4-2-1. Quantization from a Tensorflow checkpoint (.ckpt)

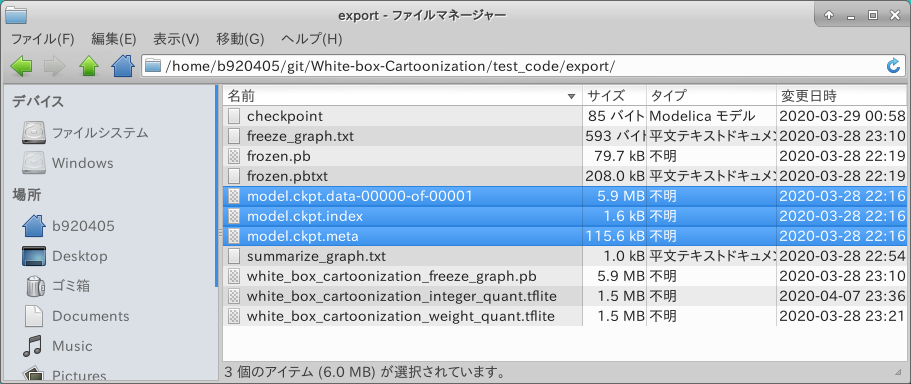

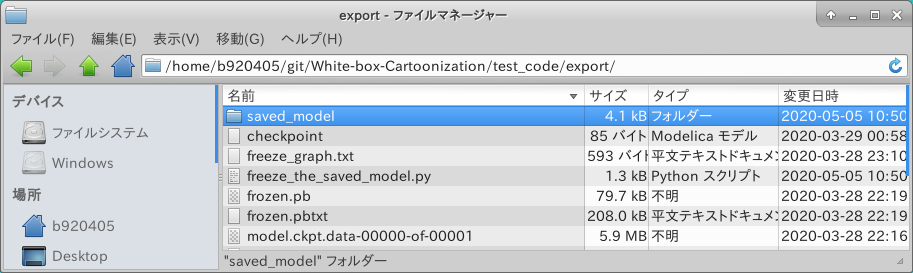

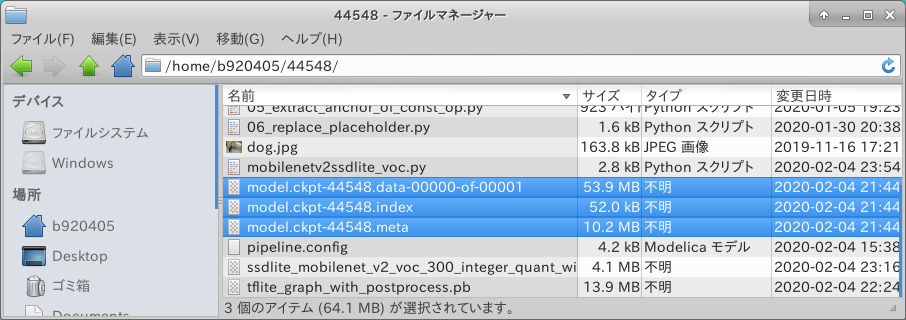

Let's quantize a model that animates a live action, White-box-Cartoonization, as an example. As described in 4-1-1. In the case of a Tensorflow checkpoint, the trained checkpoint in this model is released in a special state where only .meta does not exist. There are three normal checkpoints, .index .data-00000-of-00001 .meta, but this one is missing one file. I'm going to explain this procedure from the point of view of creating .meta, but this is only under the special circumstance that .meta doesn't exist, and it's not necessary unless you have to. Please take this as an example only. Of the steps described below, 4-2-1-2. Generate Freeze_Graph from checkpoint (.meta) is performed with Tensorflow v1.15.2 due to model constraints, and 4-2-1-3. Generate a saved_model from Freeze_Graph to 4-2-1-7. Float16 Quantization from saved_model (Float16 quantization) is performed with the latest Tensorflow v1.2.2.x or tf-nightly to support the latest operators and avoid bugs in Tensorflow itself.

4-2-1-1. Generating .meta from .index and .data-00000-of-00001

.index .data-00000-of-00001 .meta This step is not necessary if three types of checkpoints are provided: .index .data-00000-of-00001 .meta This section describes how to create .meta from .index and .data-00000-of-00001. I use the test code of White-box-Cartoonization as much as possible. Here's how the modified logic works

1. checkpoint Create a folder export for temporary output.

2. Build a model.

3. Debug printing the name and shape of INPUT/OUTPUT.

4. Restore the checkpoint.

5. Immediately save to the export folder.

def cartoonize(load_folder, save_folder, model_path):

input_photo = tf.placeholder(tf.float32, [1, None, None, 3])

network_out = network.unet_generator(input_photo)

final_out = guided_filter.guided_filter(input_photo, network_out, r=1, eps=5e-3)

all_vars = tf.trainable_variables()

gene_vars = [var for var in all_vars if 'generator' in var.name]

saver = tf.train.Saver(var_list=gene_vars)

config = tf.ConfigProto()

config.gpu_options.allow_growth = True

sess = tf.Session(config=config)

sess.run(tf.global_variables_initializer())

saver.restore(sess, tf.train.latest_checkpoint(model_path))

def cartoonize(load_folder, save_folder, model_path):

import sys

import shutil

shutil.rmtree('./export', ignore_errors=True)

input_photo = tf.placeholder(tf.float32, [1, 720, 720, 3], name='input')

network_out = network.unet_generator(input_photo)

final_out = guided_filter.guided_filter(input_photo, network_out, r=1, eps=5e-3)

print("input_photo.name =", input_photo.name)

print("input_photo.shape =", input_photo.shape)

print("final_out.name =", final_out.name)

print("final_out.shape =", final_out.shape)

all_vars = tf.trainable_variables()

gene_vars = [var for var in all_vars if 'generator' in var.name]

saver = tf.train.Saver(var_list=gene_vars)

config = tf.ConfigProto()

config.gpu_options.allow_growth = True

sess = tf.Session(config=config)

sess.run(tf.global_variables_initializer())

saver.restore(sess, tf.train.latest_checkpoint(model_path))

saver.save(sess, './export/model.ckpt')

sys.exit(0)

Let's run it.

$ python3 cartoonize.py

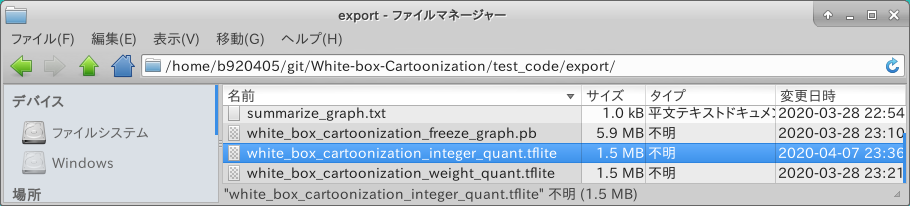

Three types of checkpoints, .index .data-00000-of-00001 .meta, have been successfully generated.

- Go to Table of contents -

4-2-1-2. Generate Freeze_Graph from checkpoint (.meta)

Now let's create a Freeze_Graph from a checkpoint. Let's take the .meta file we just created and create a Freeze_Graph. Here is a sample of the modified cartoonize method. However, it's OK to cut and run the new part of the file in a separate .py file.

def cartoonize(load_folder, save_folder, model_path):

import sys

#import shutil

#shutil.rmtree('./export', ignore_errors=True)

#input_photo = tf.placeholder(tf.float32, [1, 720, 720, 3], name='input')

#network_out = network.unet_generator(input_photo)

#final_out = guided_filter.guided_filter(input_photo, network_out, r=1, eps=5e-3)

#print("input_photo.name =", input_photo.name)

#print("input_photo.shape =", input_photo.shape)

#print("final_out.name =", final_out.name)

#print("final_out.shape =", final_out.shape)

#all_vars = tf.trainable_variables()

#gene_vars = [var for var in all_vars if 'generator' in var.name]

#saver = tf.train.Saver(var_list=gene_vars)

#config = tf.ConfigProto()

#config.gpu_options.allow_growth = True

#sess = tf.Session(config=config)

#sess.run(tf.global_variables_initializer())

#saver.restore(sess, tf.train.latest_checkpoint(model_path))

#saver.save(sess, './export/model.ckpt')

#sys.exit(0)

graph = tf.get_default_graph()

sess = tf.Session()

saver = tf.train.import_meta_graph('./export/model.ckpt.meta')

saver.restore(sess, './export/model.ckpt')

tf.train.write_graph(sess.graph_def, './export', 'white_box_cartoonization_freeze_graph.pbtxt', as_text=True)

tf.train.write_graph(sess.graph_def, './export', 'white_box_cartoonization_freeze_graph.pb', as_text=False)

sys.exit(0)

Let's run it.

$ python3 cartoonize.py

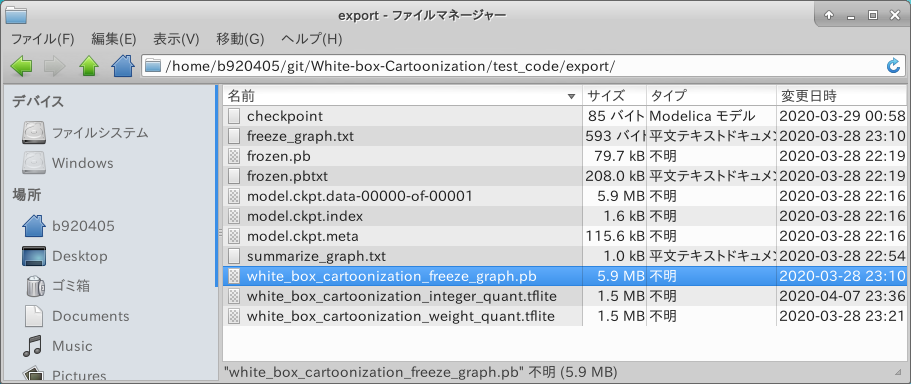

The white_box_cartoonization_freeze_graph.pb has been successfully generated.

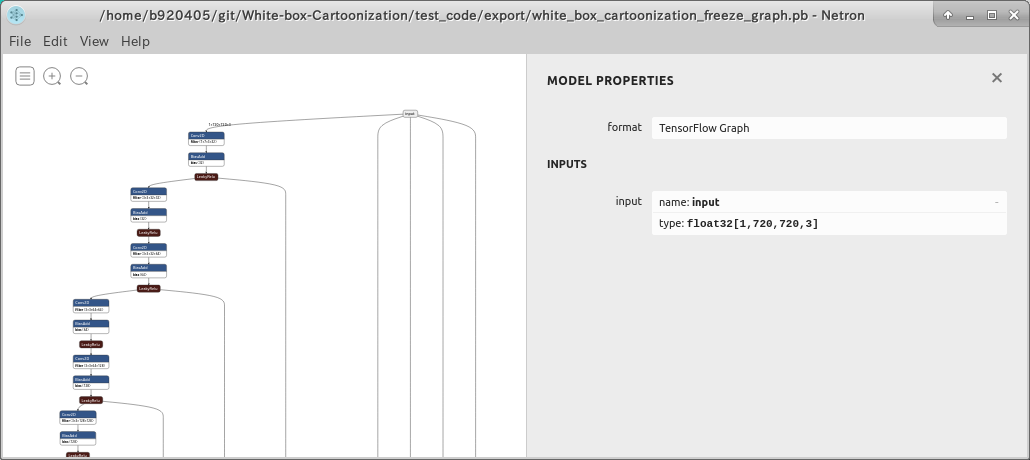

Checking the structure with Netron doesn't seem to be a problem.

4-2-1-3. Generate a saved_model from Freeze_Graph

Generate a saved_model from Freeze_Graph. It is written to work for both Tensorflow v1.x and Tensorflow v2.x. For input_name= and outputs=, specify the names of INPUT and OUTPUT specified in 4-1-1. In the case of a Tensorflow checkpoint. It can probably be used for any model, as long as you know the names of the INPUT and OUTPUT, and have Freeze_Graph at hand.

import tensorflow as tf

import os

import shutil

from tensorflow.python import ops

def get_graph_def_from_file(graph_filepath):

tf.compat.v1.reset_default_graph()

with ops.Graph().as_default():

with tf.compat.v1.gfile.GFile(graph_filepath, 'rb') as f:

graph_def = tf.compat.v1.GraphDef()

graph_def.ParseFromString(f.read())

return graph_def

def convert_graph_def_to_saved_model(export_dir, graph_filepath, input_name, outputs):

graph_def = get_graph_def_from_file(graph_filepath)

with tf.compat.v1.Session(graph=tf.Graph()) as session:

tf.import_graph_def(graph_def, name='')

tf.compat.v1.saved_model.simple_save(

session,

export_dir,# change input_image to node.name if you know the name

inputs={input_name: session.graph.get_tensor_by_name('{}:0'.format(node.name))

for node in graph_def.node if node.op=='Placeholder'},

outputs={t.rstrip(":0"):session.graph.get_tensor_by_name(t) for t in outputs}

)

print('Graph converted to SavedModel!')

tf.compat.v1.enable_eager_execution()

input_name="input"

outputs = ['add_1:0']

shutil.rmtree('./saved_model', ignore_errors=True)

convert_graph_def_to_saved_model('./saved_model', './white_box_cartoonization_freeze_graph.pb', input_name, outputs)

"""

$ saved_model_cli show --dir saved_model --all

MetaGraphDef with tag-set: 'serve' contains the following SignatureDefs:

signature_def['serving_default']:

The given SavedModel SignatureDef contains the following input(s):

inputs['input'] tensor_info:

dtype: DT_FLOAT

shape: (1, 720, 720, 3)

name: input:0

The given SavedModel SignatureDef contains the following output(s):

outputs['add_1'] tensor_info:

dtype: DT_FLOAT

shape: (1, 720, 720, 3)

name: add_1:0

Method name is: tensorflow/serving/predict

"""

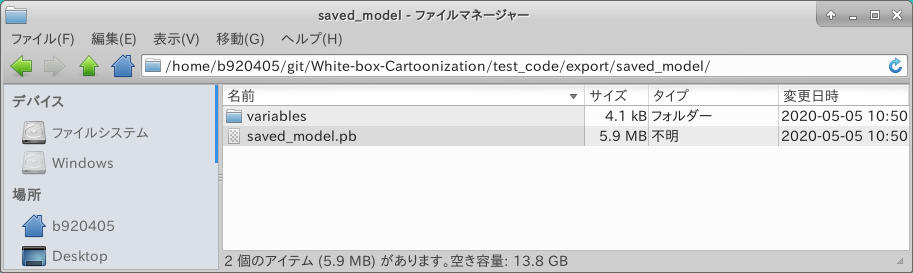

Let's run it.

$ python3 freeze_the_saved_model.py

It was successfully generated.

Let's check the structure of saved_model. It seems to have worked out well.

$ saved_model_cli show --dir saved_model --all

MetaGraphDef with tag-set: 'serve' contains the following SignatureDefs:

signature_def['serving_default']:

The given SavedModel SignatureDef contains the following input(s):

inputs['input'] tensor_info:

dtype: DT_FLOAT

shape: (1, 720, 720, 3)

name: input:0

The given SavedModel SignatureDef contains the following output(s):

outputs['add_1'] tensor_info:

dtype: DT_FLOAT

shape: (1, 720, 720, 3)

name: add_1:0

Method name is: tensorflow/serving/predict

4-2-1-4. Weight Quantization from saved_model (weight-only quantization)

Finally, the main topic is quantization. Create a program that does Weight Quantization from saved_model and generates a .tflite that can work with Tensorflow Lite.

import tensorflow as tf

tf.compat.v1.enable_eager_execution()

# Weight Quantization - Input/Output=float32

converter = tf.lite.TFLiteConverter.from_saved_model('./saved_model')

converter.experimental_new_converter = True #<--- Not necessary if you are using Tensorflow v2.2.x or later.

converter.optimizations = [tf.lite.Optimize.OPTIMIZE_FOR_SIZE]

tflite_quant_model = converter.convert()

with open('./white_box_cartoonization_weight_quant.tflite', 'wb') as w:

w.write(tflite_quant_model)

print("Weight Quantization complete! - white_box_cartoonization_weight_quant.tflite")

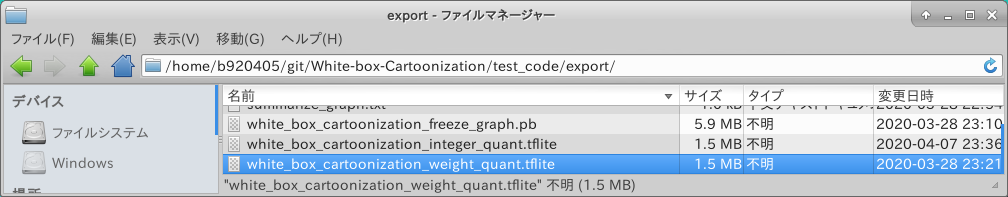

Execute it.

$ python3 weight_quantization.py

It's been generated safely. The file size has been reduced to a quarter of the original Freeze_Graph. It is important to note at this point that even if the file size is reduced by a quarter, it does not mean that the inference performance is four times faster. You need to understand that quantization of weights only means mere compression of file size. It depends on the environment in which the inference is performed, but as an example, if you want to improve performance when performing inference on the RaspberryPi4 CPU, you need to perform 4-2-1-5. Integer Quantization from saved_model (8-bit integer quantization) in the next section.

4-2-1-5. Integer Quantization from saved_model (8-bit integer quantization)

Create a program that does a Integer Quantization from a saved_model and generates a .tflite that can work with Tensorflow Lite. In case of Integer Quantization, it is necessary to give the image data for calibration in the process of converting the number of Float32 to UInt8. If possible, it would be better to use the images used during training, but this time I used a dataset that I can easily prepare on hand. If you write tfds.load(...), Google deploys datasets for training in Tensorflow Datasets on the cloud, so it will be downloaded automatically. You only need to do the download once, and I recommend that you change download=True to download=False for the second and subsequent runs. The sample logic below is set to automatically download the Pascal-VOC 2007 image dataset, but if you want to use other image datasets, you can find them in the left pane of the Tensorflow Datasets Catalog page. Most image datasets are available, but some may need to be downloaded manually due to copyright or sensitive images. (e.g., a dataset of face images). Among the various quantization operations, this Integer Quantization shows the best performance when performing inference on the RaspberryPi4 CPU alone. If you are interested, please click here 3. TFLite Model Benchmark. Here are the benchmark results for Ubuntu 19.10 aarch64 on RaspberryPi4 using the Integer Quantization model. Also, the benchmark results of Post-training quantization with TF2.0 Keras - nb.o's Diary - Nextremer_nb_o here are very helpful. Very importantly, since the number of operations that support quantization is increasing all the time, I recommend using the latest Tensorflow (Tensorflow v2.2.x / tf-nightly) for Integer Quantization and the Full Integer Quantization operations described below.

The flow of processing in representative_dataset_gen() is as follows.

1. Convert the data acquired by Tensorflow Datasets to Numpy

2. Resize the image size to INPUT size 720x720

3. Normalize image data to the range from -1 to 1

4. To match the shape of the INPUT [1, 720, 720, 3], add one dimension for batch size to the beginning of the image data in [720, 720, 3]

5. Return one image

import tensorflow as tf

import tensorflow_datasets as tfds

import numpy as np

def representative_dataset_gen():

for data in raw_test_data.take(100):

image = data['image'].numpy()

image = tf.image.resize(image, (720, 720))

image = image / 127.5 - 1

image = image[np.newaxis,:,:,:]

yield [image]

tf.compat.v1.enable_eager_execution()

raw_test_data, info = tfds.load(name="voc/2007",

with_info=True,

split="validation",

data_dir="~/TFDS",

download=True)

# Integer Quantization - Input/Output=float32

converter = tf.lite.TFLiteConverter.from_saved_model('./saved_model')

converter.experimental_new_converter = True #<--- Not necessary if you are using Tensorflow v2.2.x or later.

converter.optimizations = [tf.lite.Optimize.DEFAULT]

converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS,tf.lite.OpsSet.SELECT_TF_OPS]

converter.representative_dataset = representative_dataset_gen

tflite_quant_model = converter.convert()

with open('./white_box_cartoonization_integer_quant.tflite', 'wb') as w:

w.write(tflite_quant_model)

print("Integer Quantization complete! - white_box_cartoonization_integer_quant.tflite")

Let's run it.

$ python3 integer_quantization.py

4-2-1-6. saved_model to Full Integer Quantization (all 8-bit integer quantization)

The notes and logic structure are almost the same as Integer Quantization and are omitted. Now let's write a program to execute Full Integer Quantization.

※ Unfortunately, as of 05/05/2020, the operation Div in the model of White-box-Cartoonization does not support Full Integer Quantization, so the script below will abort. However, other models that do not include Div work fine, so I'll describe the logic on the premise that it can be diverted to other models.

The .tflite file generated by Full Integer Quantization is the file you will need to generate the model for EdgeTPU. The performance of CPU inference on RaspberryPi4 is exactly the same as the Integer Quantization model.

import tensorflow as tf

import tensorflow_datasets as tfds

import numpy as np

def representative_dataset_gen():

for data in raw_test_data.take(100):

image = data['image'].numpy()

image = tf.image.resize(image, (720, 720))

image = image / 127.5 - 1

image = image[np.newaxis,:,:,:]

yield [image]

tf.compat.v1.enable_eager_execution()

raw_test_data, info = tfds.load(name="voc/2007",

with_info=True,

split="validation",

data_dir="~/TFDS",

download=False)

# Integer Quantization - Input/Output=float32

converter = tf.lite.TFLiteConverter.from_saved_model('./saved_model')

converter.experimental_new_converter = True #<--- Not necessary if you are using Tensorflow v2.2.x or later.

converter.optimizations = [tf.lite.Optimize.DEFAULT]

converter.inference_input_type = tf.uint8

converter.inference_output_type = tf.uint8

converter.representative_dataset = representative_dataset_gen

tflite_quant_model = converter.convert()

with open('./white_box_cartoonization_full_integer_quant.tflite', 'wb') as w:

w.write(tflite_quant_model)

print("Full Integer Quantization complete! - white_box_cartoonization_full_integer_quant.tflite")

4-2-1-7. Float16 Quantization from saved_model (Float16 quantization)

Generates a GPU-optimized Float16 quantization model suitable for operations. The program is described below.

import tensorflow as tf

tf.compat.v1.enable_eager_execution()

# Float16 Quantization - Input/Output=float32

converter = tf.lite.TFLiteConverter.from_saved_model('./saved_model')

converter.experimental_new_converter = True #<--- Not necessary if you are using Tensorflow v2.2.x or later.

converter.optimizations = [tf.lite.Optimize.DEFAULT]

converter.target_spec.supported_types = [tf.float16]

tflite_quant_model = converter.convert()

with open('./white_box_cartoonization_float16_quant.tflite', 'wb') as w:

w.write(tflite_quant_model)

print("Float16 Quantization complete! - white_box_cartoonization_float16_quant.tflite")

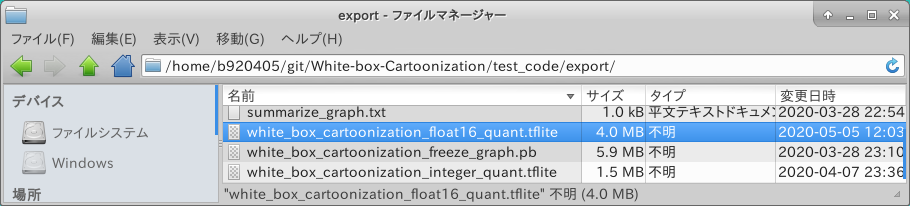

Execute it.

$ python3 float16_quantization.py

It seems to have been generated safely.

4-2-1-8. Full Integer Quantization to EdgeTPU convert

These are the steps that can be taken if Full Integer Quantization is successful. Perform model compilation for use with Google Coral EdgeTPU. It will abort if it contains unsupported or poorly implemented operations. It is my opinion, but the compiler is still unstable.

Edge TPU Compiler can be deployed according to Edge TPU Compiler here.

$ edgetpu_compiler -s white_box_cartoonization_full_integer_quant.tflite

Incidentally, the latest compiler 2.1.302470888 is said to support efficient reasoning on multi-TPU. Currently, only C++ APIs are provided, which is a burden for me as a Python user. I've owned three of them...![]()

@iwatake2222 was one of the first to implement Pipeline at this repository.

- Go to Table of contents -

4-2-2. Quantization from a Tensorflow checkpoint (.meta)

Same as 4-2-1-2. Generate Freeze_Graph from checkpoint (.meta) to 4-2-1-8. Full Integer Quantization to EdgeTPU convert. Change the image dataset used for calibration according to the characteristics of the model.

4-2-3. Quantization from Tensorflow Freeze_Graph (.pb)

4-2-1-3. Generate a saved_model from Freeze_Graph to 4-2-1-8. Full Integer Quantization to EdgeTPU convert. Change the image dataset used for calibration according to the characteristics of the model.

4-2-4. Quantization from Tensorflow saved_model (.pb)

4-2-1-4. Weight Quantization from saved_model (weight-only quantization) to 4-2-1-8. Full Integer Quantization to EdgeTPU convert Change the image dataset used for calibration according to the characteristics of the model.

4-2-5. Quantization from Tensorflow/Keras (.h5/.json)

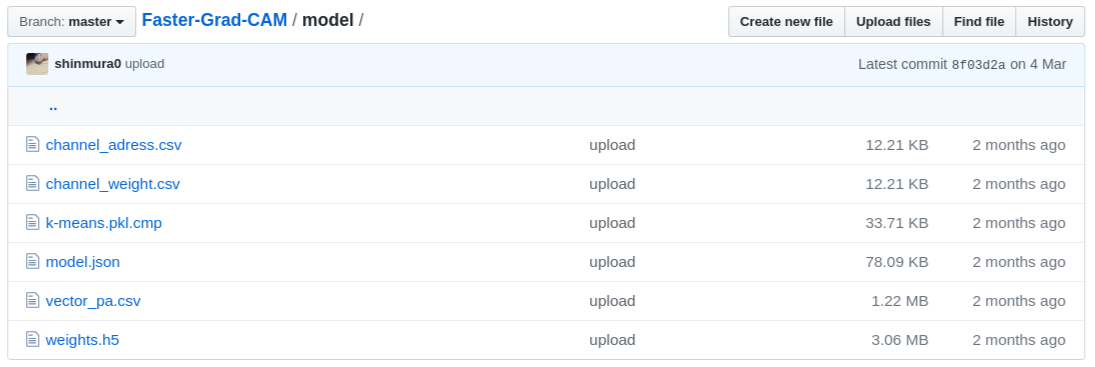

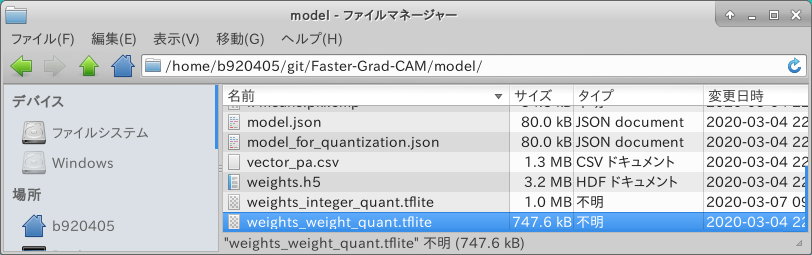

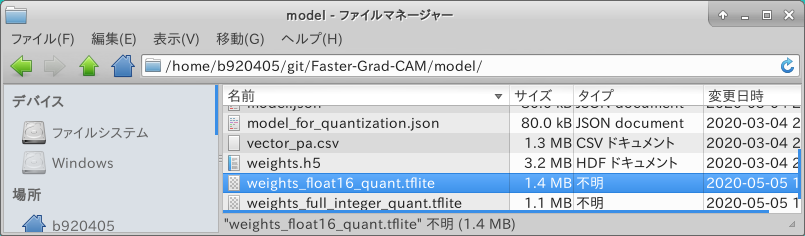

If the model is provided in Keras' .h5 and .json formats, this is the pattern. This time we'll use Faster-Grad-CAM as an example. The older Tensorflows up to Tensorflow v1.x and Tensorflow v2.1 seem to have an OOM bug (Out of Memory) in Keras quantization, so it is recommended to introduce Tensorflow v2.2.0 or tf-nightly to work with them.

Thankfully, all the material needed for quantization is committed, except for the image dataset needed for calibration.

I will use it to generate the missing calibration dataset.

4-2-5-1. Weight Quantization from .h5/.json (weight quantization)

You can do Weight Quantization immediately because the material is available. First, we clone the repository.

$ git clone https://github.com/shinmura0/Faster-Grad-CAM.git

$ cd Faster-Grad-CAM/model

The following is the program to execute Weight Quantization. The difference between the quantization pattern from the previous Tensorflow checkpoint is the difference between the loading part of the model and weights and the method for conversion. TFLiteConverter has the ability to pass a weight-loaded model object as is.

1. model = tf.keras.models.model_from_json(open('model.json').read())

2. model.load_weights('weights.h5')

3. tf.lite.TFLiteConverter.from_keras_model(model)

import tensorflow as tf

tf.compat.v1.enable_eager_execution()

# Weight Quantization - Input/Output=float32

# INPUT = input_1 (float32, 1 x 96 x 96 x 3)

# OUTPUT = block_16_expand_relu, global_average_pooling2d_1

model = tf.keras.models.model_from_json(open('model.json').read())

model.load_weights('weights.h5')

converter = tf.lite.TFLiteConverter.from_keras_model(model)

converter.experimental_new_converter = True #<--- Not necessary if you are using Tensorflow v2.2.x or later.

converter.optimizations = [tf.lite.Optimize.OPTIMIZE_FOR_SIZE]

tflite_quant_model = converter.convert()

with open('./weights_weight_quant.tflite', 'wb') as w:

w.write(tflite_quant_model)

print("Weight Quantization complete! - weights_weight_quant.tflite")

Let's run it.

$ python3 weight_quantization.py

It seems to have been generated safely.

- Go to Table of contents -

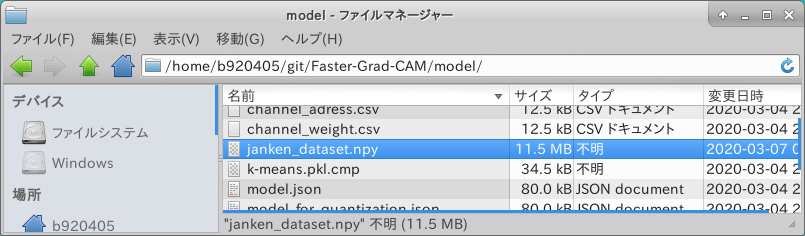

4-2-5-2. Generating the calibration data set

Clone a dataset image for calibration from @karaage0703's repository. Faster-Grad-CAM's trained models are trained with this gu dataset. It's tedious to go through all the disparate images, so I'll use the subsequent steps to pack them into one file.

$ wget https://github.com/karaage0703/janken_dataset.git

$ cd gu

Create a program to pack Numpy into binary format files.

from PIL import Image

import os, glob

import numpy as np

dataset = []

files = glob.glob("*.JPG")

for file in files:

image = Image.open(file)

image = image.convert("RGB")

data = np.asarray(image)

dataset.append(data)

dataset = np.array(dataset)

np.save("janken_dataset", dataset)

Now, let's give it a go.

$ python3 image_to_npy.py

It seems to have been generated without incident.

- Go to Table of contents -

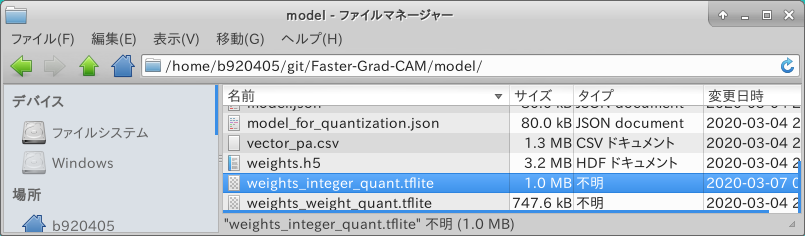

4-2-5-3. Integer Quantization from .h5/.json (8-bit integer quantization)

The method of Integer Quantization is almost the same as the previous method, except that it is handled slightly differently.

import tensorflow as tf

import numpy as np

def representative_dataset_gen():

raw_test_data = np.load('janken_dataset.npy')

for image in raw_test_data:

image = tf.image.resize(image, (96, 96))

image = image / 255

calibration_data = image[np.newaxis, :, :, :]

yield [calibration_data]

tf.compat.v1.enable_eager_execution()

# Integer Quantization - Input/Output=float32

# INPUT = input_1 (float32, 1 x 96 x 96 x 3)

# OUTPUT = block_16_expand_relu, global_average_pooling2d_1

model = tf.keras.models.model_from_json(open('model.json').read())

model.load_weights('weights.h5')

converter = tf.lite.TFLiteConverter.from_keras_model(model)

converter.experimental_new_converter = True #<--- Not necessary if you are using Tensorflow v2.2.x or later.

converter.optimizations = [tf.lite.Optimize.DEFAULT]

converter.representative_dataset = representative_dataset_gen

tflite_quant_model = converter.convert()

with open('./weights_integer_quant.tflite', 'wb') as w:

w.write(tflite_quant_model)

print("Integer Quantization complete! - weights_integer_quant.tflite")

Let's run it.

$ python3 integer_quantization.py

It seems to have been generated without incident.

- Go to Table of contents -

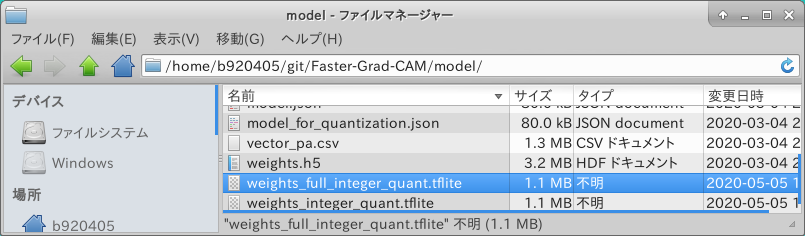

4-2-5-4. Full Integer Quantization from .h5/.json (all 8-bit integer quantization)

Write a program to do Full Integer Quantization.

import tensorflow as tf

import numpy as np

def representative_dataset_gen():

raw_test_data = np.load('janken_dataset.npy')

for image in raw_test_data:

image = tf.image.resize(image, (96, 96))

image = image / 255

calibration_data = image[np.newaxis, :, :, :]

yield [calibration_data]

tf.compat.v1.enable_eager_execution()

# Integer Quantization - Input/Output=float32

# INPUT = input_1 (float32, 1 x 96 x 96 x 3)

# OUTPUT = block_16_expand_relu, global_average_pooling2d_1

model = tf.keras.models.model_from_json(open('model.json').read())

model.load_weights('weights.h5')

converter = tf.lite.TFLiteConverter.from_keras_model(model)

converter.experimental_new_converter = True #<--- Tensorflow v2.2.x以降を使用している場合は不要

converter.optimizations = [tf.lite.Optimize.DEFAULT]

converter.inference_input_type = tf.uint8

converter.inference_output_type = tf.uint8

converter.representative_dataset = representative_dataset_gen

tflite_quant_model = converter.convert()

with open('./weights_full_integer_quant.tflite', 'wb') as w:

w.write(tflite_quant_model)

print("Full Integer Quantization complete! - weights_full_integer_quant.tflite")

Let's run it.

$ python3 full_integer_quantization.py

It seems to have been generated without incident.

- Go to Table of contents -

4-2-5-5. Float16 Quantization from .h5/.json (Float16 quantization)

Write a program to do Float16 Quantization.

import tensorflow as tf

tf.compat.v1.enable_eager_execution()

# Weight Quantization - Input/Output=float32

# INPUT = input_1 (float32, 1 x 96 x 96 x 3)

# OUTPUT = block_16_expand_relu, global_average_pooling2d_1

model = tf.keras.models.model_from_json(open('model.json').read())

model.load_weights('weights.h5')

converter = tf.lite.TFLiteConverter.from_keras_model(model)

converter.experimental_new_converter = True #<--- Not necessary if you are using Tensorflow v2.2.x or later.

converter.optimizations = [tf.lite.Optimize.DEFAULT]

converter.target_spec.supported_types = [tf.float16]

tflite_quant_model = converter.convert()

with open('./weights_float16_quant.tflite', 'wb') as w:

w.write(tflite_quant_model)

print("Float16 Quantization complete! - weights_float16_quant.tflite")

Let's run it.

$ python3 float16_quantization.py

It seems to have been generated safely.

- Go to Table of contents -

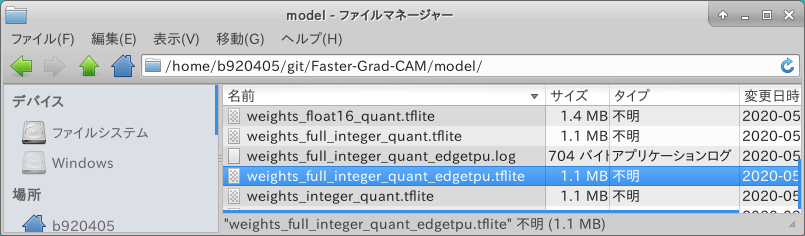

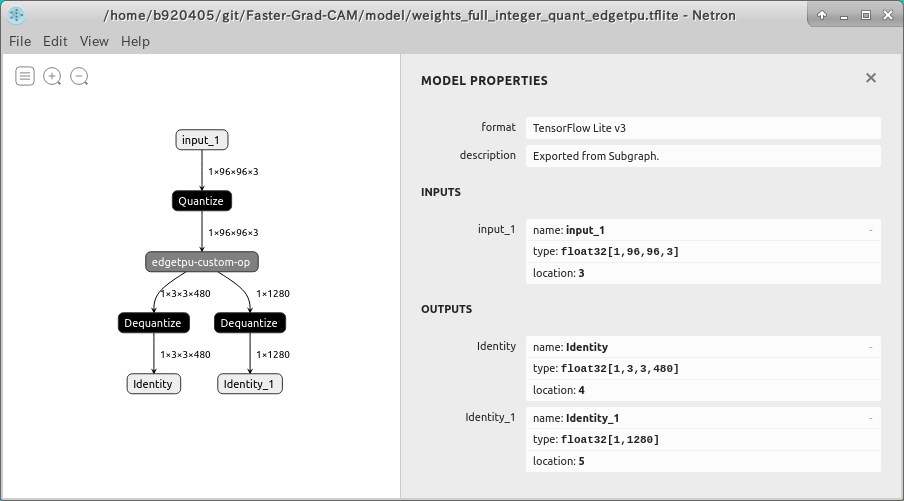

4-2-5-6. Full Integer Quantization to EdgeTPU convert

A model with Full Integer Quantization is used to generate a model with EdgeTPU support.

$ edgetpu_compiler -s weights_full_integer_quant.tflite

Edge TPU Compiler version 2.1.302470888

Model compiled successfully in 359 ms.

Input model: weights_full_integer_quant.tflite

Input size: 1.00MiB

Output model: weights_full_integer_quant_edgetpu.tflite

Output size: 1.06MiB

On-chip memory used for caching model parameters: 1014.00KiB

On-chip memory remaining for caching model parameters: 6.78MiB

Off-chip memory used for streaming uncached model parameters: 0.00B

Number of Edge TPU subgraphs: 1

Total number of operations: 71

Operation log: weights_full_integer_quant_edgetpu.log

Model successfully compiled but not all operations are supported by the Edge TPU. A percentage of the model will instead run on the CPU, which is slower. If possible, consider updating your model to use only operations supported by the Edge TPU. For details, visit g.co/coral/model-reqs.

Number of operations that will run on Edge TPU: 68

Number of operations that will run on CPU: 3

Operator Count Status

DEPTHWISE_CONV_2D 17 Mapped to Edge TPU

DEQUANTIZE 2 Operation is working on an unsupported data type

MEAN 1 Mapped to Edge TPU

ADD 10 Mapped to Edge TPU

QUANTIZE 1 Operation is otherwise supported, but not mapped due to some unspecified limitation

PAD 5 Mapped to Edge TPU

CONV_2D 35 Mapped to Edge TPU

It seems to have been generated safely.

- Go to Table of contents -

4-2-6. Quantization from a model for Tensorflow.js

Now it's time to tackle a more challenging task. Quantize Posenet V2 ResNet50 for Tensorflow.js, which was recently released by Google. To begin with, the code for checkpoint and training isn't published, so it's a tricky task. This is the repository from which to check. You will need to switch between Tensoflow v2.1.0 and Tensorflow v1.15.2. If you don't want to go through the trouble, please use PINTO_model_zoo, which is already committed with all patterns converted to the quantized model.

- Go to Table of contents -

4-2-6-1. Advance preparation

$ sudo pip3 uninstall tensorboard-plugin-wit tb-nightly \

tf-estimator-nightly tensorflow-gpu \

tensorflow tf-nightly tensorflow_estimator

$ sudo pip3 install tensorflow==2.1.0

$ git clone https://github.com/patlevin/tfjs-to-tf.git

$ cd tfjs-to-tf

$ sudo pip3 install . --no-deps

$ cd ..

$ git clone https://github.com/atomicbits/posenet-python.git

$ cd posenet-python

$ mkdir -p output

4-2-6-2. Generating a saved_model from Tensorflow.js

Create a saved_model by the following command. By specifying stride, it is possible to generate a model that balances accuracy and speed. You must save one or more sample images in the folder path specified in the argument of --image_dir in advance. Let's run it.

$ python3 image_demo.py \

--model resnet50 \

--stride 16 \

--image_dir ./images \

--output_dir ./output

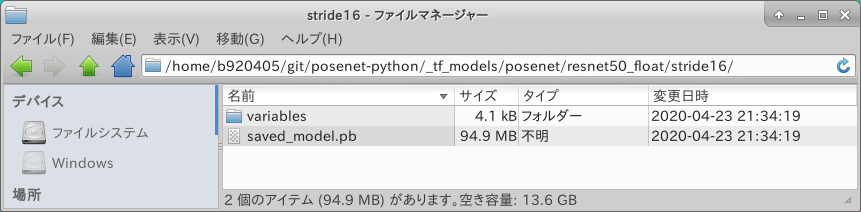

Generating saved_model output stride with 16 created saved_model under tf_models/posenet/resnet50_float/stride16/.

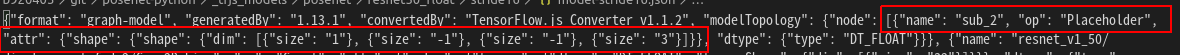

Check the structure with Netron. I know that INPUT is sub_2, but the shape is Float32 [1, ?, ?, 3]. As it is, the quantization will fail. I'm going to make another adjustment here.

※Actually, you can fix the INPUT shape of sub_2 in tfjs_models/posenet/resnet50_float/stride16/model-stride16.json just by modifying and re-converting the INPUT shape by hand. However, I'm going to go a long way just to explain the special processing for importing and processing Tensorflow v2 saved_model with Tensorflow v1.x.

- Go to Table of contents -

4-2-6-3. Import saved_model generated by Tensorflow v2.x into Tensorflow v1.x and process the input shape

Import saved_model into Tensorflow v1.15.2 and process the input shape. The only reason I use Tensorflow v1.15.2 is because I want to use the TransformGraph tool.

$ sudo pip3 uninstall tensorboard-plugin-wit tb-nightly \

tf-estimator-nightly tensorflow-gpu \

tensorflow tf-nightly tensorflow_estimator

$ sudo pip3 install tensorflow==1.15.2

The following is a program to change the input shape of saved_model. Replace the name sub_2 with the name image, while changing the input shape to [1, ?, ?, 3] to [1, 513, 513, 3], while replacing the name sub_2 with the name image. If you want to change the input form to a smaller form, you need to change 513 to 257. The part that is slightly different from the program introduced so far is that the logic to read the .pb file is slightly changed in order to import saved_model generated by Tensorflow v2.x into Tensorflow v1.x.

### tensorflow-gpu==1.15.2

import sys

import tensorflow as tf

from tensorflow.tools.graph_transforms import TransformGraph

from tensorflow.python.platform import gfile

from tensorflow.core.protobuf import saved_model_pb2

from tensorflow.python.util import compat

with tf.compat.v1.Session() as sess:

# shape=[1, ?, ?, 3] -> shape=[1, 513, 513, 3]

# name='image' specifies the placeholder name of the converted model

inputs = tf.compat.v1.placeholder(tf.float32, shape=[1, 513, 513, 3], name='image')

#inputs = tf.compat.v1.placeholder(tf.float32, shape=[1, 385, 385, 3], name='image')

#inputs = tf.compat.v1.placeholder(tf.float32, shape=[1, 321, 321, 3], name='image')

#inputs = tf.compat.v1.placeholder(tf.float32, shape=[1, 257, 257, 3], name='image')

#inputs = tf.compat.v1.placeholder(tf.float32, shape=[1, 225, 225, 3], name='image')

with gfile.FastGFile('_tf_models/posenet/resnet50_float/stride32/saved_model.pb', 'rb') as f:

data = compat.as_bytes(f.read())

sm = saved_model_pb2.SavedModel()

sm.ParseFromString(data)

if 1 != len(sm.meta_graphs):

print('More than one graph found. Not sure which to write')

sys.exit(1)

# 'image:0' specifies the placeholder name of the model before conversion

tf.graph_util.import_graph_def(sm.meta_graphs[0].graph_def, input_map={'sub_2:0': inputs}, name='')

print([n for n in tf.compat.v1.get_default_graph().as_graph_def().node if n.name == 'image'])

# Delete Placeholder "image" before conversion

# see: https://github.com/tensorflow/tensorflow/tree/master/tensorflow/tools/graph_transforms

# TransformGraph(

# graph_def(),

# input_name,

# output_names,

# conversion options

# )

optimized_graph_def = TransformGraph(

tf.compat.v1.get_default_graph().as_graph_def(),

'image',

['float_heatmaps','float_short_offsets','resnet_v1_50/displacement_fwd_2/BiasAdd','resnet_v1_50/displacement_bwd_2/BiasAdd'],

['strip_unused_nodes(type=float, shape="1,513,513,3")'])

tf.io.write_graph(optimized_graph_def, './', 'posenet_resnet50_32_513.pb', as_text=False)

with gfile.FastGFile('_tf_models/posenet/resnet50_float/stride32/saved_model.pb', 'rb') as f:

data = compat.as_bytes(f.read())

sm = saved_model_pb2.SavedModel()

sm.ParseFromString(data)

if 1 != len(sm.meta_graphs):

print('More than one graph found. Not sure which to write')

sys.exit(1)

Let's run it.

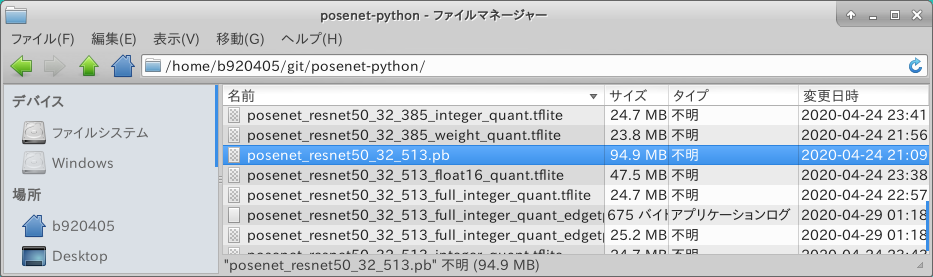

$ python3 replacement_of_input_placeholder_float32_resnet.py

It seems to have been generated safely.

- Go to Table of contents -

4-2-6-4. Installation of Tensorflow v2.2.0

Change Tensorflow v1.15.2 to Tensorflow v2.2.0 before quantization.

$ sudo pip3 uninstall tensorboard-plugin-wit tb-nightly \

tf-estimator-nightly tensorflow-gpu \

tensorflow tf-nightly tensorflow_estimator

$ sudo pip3 install tensorflow==2.2.0

4-2-6-5. Weight Quantization from saved_model (Weight-only quantization)

The quantization procedure from here on is the same as the previous one. The program for quantization is described below.

### tensorflow==2.2.0

import tensorflow as tf

import numpy as np

# Weight Quantization - Input/Output=float32

converter = tf.lite.TFLiteConverter.from_saved_model('saved_model_posenet_resnet50_16_225')

converter.optimizations = [tf.lite.Optimize.OPTIMIZE_FOR_SIZE]

tflite_quant_model = converter.convert()

with open('posenet_resnet50_16_225_weight_quant.tflite', 'wb') as w:

w.write(tflite_quant_model)

print("Weight Quantization complete! - posenet_resnet50_16_225_weight_quant.tflite")

# Weight Quantization - Input/Output=float32

converter = tf.lite.TFLiteConverter.from_saved_model('saved_model_posenet_resnet50_16_257')

converter.optimizations = [tf.lite.Optimize.OPTIMIZE_FOR_SIZE]

tflite_quant_model = converter.convert()

with open('posenet_resnet50_16_257_weight_quant.tflite', 'wb') as w:

w.write(tflite_quant_model)

print("Weight Quantization complete! - posenet_resnet50_16_257_weight_quant.tflite")

# Weight Quantization - Input/Output=float32

converter = tf.lite.TFLiteConverter.from_saved_model('saved_model_posenet_resnet50_16_321')

converter.optimizations = [tf.lite.Optimize.OPTIMIZE_FOR_SIZE]

tflite_quant_model = converter.convert()

with open('posenet_resnet50_16_321_weight_quant.tflite', 'wb') as w:

w.write(tflite_quant_model)

print("Weight Quantization complete! - posenet_resnet50_16_321_weight_quant.tflite")

# Weight Quantization - Input/Output=float32

converter = tf.lite.TFLiteConverter.from_saved_model('saved_model_posenet_resnet50_16_385')

converter.optimizations = [tf.lite.Optimize.OPTIMIZE_FOR_SIZE]

tflite_quant_model = converter.convert()

with open('posenet_resnet50_16_385_weight_quant.tflite', 'wb') as w:

w.write(tflite_quant_model)

print("Weight Quantization complete! - posenet_resnet50_16_385_weight_quant.tflite")

# Weight Quantization - Input/Output=float32

converter = tf.lite.TFLiteConverter.from_saved_model('saved_model_posenet_resnet50_16_513')

converter.optimizations = [tf.lite.Optimize.OPTIMIZE_FOR_SIZE]

tflite_quant_model = converter.convert()

with open('posenet_resnet50_16_513_weight_quant.tflite', 'wb') as w:

w.write(tflite_quant_model)

print("Weight Quantization complete! - posenet_resnet50_16_513_weight_quant.tflite")

# Weight Quantization - Input/Output=float32

converter = tf.lite.TFLiteConverter.from_saved_model('saved_model_posenet_resnet50_32_225')

converter.optimizations = [tf.lite.Optimize.OPTIMIZE_FOR_SIZE]

tflite_quant_model = converter.convert()

with open('posenet_resnet50_32_225_weight_quant.tflite', 'wb') as w:

w.write(tflite_quant_model)

print("Weight Quantization complete! - posenet_resnet50_32_225_weight_quant.tflite")

# Weight Quantization - Input/Output=float32

converter = tf.lite.TFLiteConverter.from_saved_model('saved_model_posenet_resnet50_32_257')

converter.optimizations = [tf.lite.Optimize.OPTIMIZE_FOR_SIZE]

tflite_quant_model = converter.convert()

with open('posenet_resnet50_32_257_weight_quant.tflite', 'wb') as w:

w.write(tflite_quant_model)

print("Weight Quantization complete! - posenet_resnet50_32_257_weight_quant.tflite")

# Weight Quantization - Input/Output=float32

converter = tf.lite.TFLiteConverter.from_saved_model('saved_model_posenet_resnet50_32_321')

converter.optimizations = [tf.lite.Optimize.OPTIMIZE_FOR_SIZE]

tflite_quant_model = converter.convert()

with open('posenet_resnet50_32_321_weight_quant.tflite', 'wb') as w:

w.write(tflite_quant_model)

print("Weight Quantization complete! - posenet_resnet50_32_321_weight_quant.tflite")

# Weight Quantization - Input/Output=float32

converter = tf.lite.TFLiteConverter.from_saved_model('saved_model_posenet_resnet50_32_385')

converter.optimizations = [tf.lite.Optimize.OPTIMIZE_FOR_SIZE]

tflite_quant_model = converter.convert()

with open('posenet_resnet50_32_385_weight_quant.tflite', 'wb') as w:

w.write(tflite_quant_model)

print("Weight Quantization complete! - posenet_resnet50_32_385_weight_quant.tflite")

# Weight Quantization - Input/Output=float32

converter = tf.lite.TFLiteConverter.from_saved_model('saved_model_posenet_resnet50_32_513')

converter.optimizations = [tf.lite.Optimize.OPTIMIZE_FOR_SIZE]

tflite_quant_model = converter.convert()

with open('posenet_resnet50_32_513_weight_quant.tflite', 'wb') as w:

w.write(tflite_quant_model)

print("Weight Quantization complete! - posenet_resnet50_32_513_weight_quant.tflite")

4-2-6-6. Integer Quantization from saved_model (8-bit integer quantization)

The method of Integer Quantization is the same as before. The images used for the calibration data set are 100 images with only people in them.

import tensorflow as tf

import tensorflow_datasets as tfds

import numpy as np

from PIL import Image

import os

import glob

## Generating a calibration data set

def representative_dataset_gen():

folder = ["images"]

image_size = 225

raw_test_data = []

for name in folder:

dir = "./" + name

files = glob.glob(dir + "/*.jpg")

for file in files:

image = Image.open(file)

image = image.convert("RGB")

image = image.resize((image_size, image_size))

image = np.asarray(image).astype(np.float32)

image = image[np.newaxis,:,:,:]

raw_test_data.append(image)

for data in raw_test_data:

yield [data]

# Integer Quantization - Input/Output=float32

converter = tf.lite.TFLiteConverter.from_saved_model('saved_model_posenet_resnet50_16_225')

converter.optimizations = [tf.lite.Optimize.DEFAULT]

converter.representative_dataset = representative_dataset_gen

tflite_quant_model = converter.convert()

with open('posenet_resnet50_16_225_integer_quant.tflite', 'wb') as w:

w.write(tflite_quant_model)

print("Integer Quantization complete! - posenet_resnet50_16_225_integer_quant.tflite")

# # Integer Quantization - Input/Output=float32

# converter = tf.lite.TFLiteConverter.from_saved_model('saved_model_posenet_resnet50_16_257')

# converter.optimizations = [tf.lite.Optimize.DEFAULT]

# converter.representative_dataset = representative_dataset_gen

# tflite_quant_model = converter.convert()

# with open('posenet_resnet50_16_257_integer_quant.tflite', 'wb') as w:

# w.write(tflite_quant_model)

# print("Integer Quantization complete! - posenet_resnet50_16_257_integer_quant.tflite")

# # Integer Quantization - Input/Output=float32

# converter = tf.lite.TFLiteConverter.from_saved_model('saved_model_posenet_resnet50_16_321')

# converter.optimizations = [tf.lite.Optimize.DEFAULT]

# converter.representative_dataset = representative_dataset_gen

# tflite_quant_model = converter.convert()

# with open('posenet_resnet50_16_321_integer_quant.tflite', 'wb') as w:

# w.write(tflite_quant_model)

# print("Integer Quantization complete! - posenet_resnet50_16_321_integer_quant.tflite")

# # Integer Quantization - Input/Output=float32

# converter = tf.lite.TFLiteConverter.from_saved_model('saved_model_posenet_resnet50_16_385')

# converter.optimizations = [tf.lite.Optimize.DEFAULT]

# converter.representative_dataset = representative_dataset_gen

# tflite_quant_model = converter.convert()

# with open('posenet_resnet50_16_385_integer_quant.tflite', 'wb') as w:

# w.write(tflite_quant_model)

# print("Integer Quantization complete! - posenet_resnet50_16_385_integer_quant.tflite")

# # Integer Quantization - Input/Output=float32

# converter = tf.lite.TFLiteConverter.from_saved_model('saved_model_posenet_resnet50_16_513')

# converter.optimizations = [tf.lite.Optimize.DEFAULT]

# converter.representative_dataset = representative_dataset_gen

# tflite_quant_model = converter.convert()

# with open('posenet_resnet50_16_513_integer_quant.tflite', 'wb') as w:

# w.write(tflite_quant_model)

# print("Integer Quantization complete! - posenet_resnet50_16_513_integer_quant.tflite")

# Integer Quantization - Input/Output=float32

converter = tf.lite.TFLiteConverter.from_saved_model('saved_model_posenet_resnet50_32_225')

converter.optimizations = [tf.lite.Optimize.DEFAULT]

converter.representative_dataset = representative_dataset_gen

tflite_quant_model = converter.convert()

with open('posenet_resnet50_32_225_integer_quant.tflite', 'wb') as w:

w.write(tflite_quant_model)

print("Integer Quantization complete! - posenet_resnet50_32_225_integer_quant.tflite")

# # Integer Quantization - Input/Output=float32

# converter = tf.lite.TFLiteConverter.from_saved_model('saved_model_posenet_resnet50_32_257')

# converter.optimizations = [tf.lite.Optimize.DEFAULT]

# converter.representative_dataset = representative_dataset_gen

# tflite_quant_model = converter.convert()

# with open('posenet_resnet50_32_257_integer_quant.tflite', 'wb') as w:

# w.write(tflite_quant_model)

# print("Integer Quantization complete! - posenet_resnet50_32_257_integer_quant.tflite")

# # Integer Quantization - Input/Output=float32

# converter = tf.lite.TFLiteConverter.from_saved_model('saved_model_posenet_resnet50_32_321')

# converter.optimizations = [tf.lite.Optimize.DEFAULT]

# converter.representative_dataset = representative_dataset_gen

# tflite_quant_model = converter.convert()

# with open('posenet_resnet50_32_321_integer_quant.tflite', 'wb') as w:

# w.write(tflite_quant_model)

# print("Integer Quantization complete! - posenet_resnet50_32_321_integer_quant.tflite")

# # Integer Quantization - Input/Output=float32

# converter = tf.lite.TFLiteConverter.from_saved_model('saved_model_posenet_resnet50_32_385')

# converter.optimizations = [tf.lite.Optimize.DEFAULT]

# converter.representative_dataset = representative_dataset_gen

# tflite_quant_model = converter.convert()

# with open('posenet_resnet50_32_385_integer_quant.tflite', 'wb') as w:

# w.write(tflite_quant_model)

# print("Integer Quantization complete! - posenet_resnet50_32_385_integer_quant.tflite")

# # Integer Quantization - Input/Output=float32

# converter = tf.lite.TFLiteConverter.from_saved_model('saved_model_posenet_resnet50_32_513')

# converter.optimizations = [tf.lite.Optimize.DEFAULT]

# converter.representative_dataset = representative_dataset_gen

# tflite_quant_model = converter.convert()

# with open('posenet_resnet50_32_513_integer_quant.tflite', 'wb') as w:

# w.write(tflite_quant_model)

# print("Integer Quantization complete! - posenet_resnet50_32_513_integer_quant.tflite")

4-2-6-7. Full Integer Quantization from saved_model (All 8-bit integer quantization)

The method of Full Integer Quantization is the same as before. The images used for the calibration data set are 100 images with only people in them.

import tensorflow as tf

import tensorflow_datasets as tfds

import numpy as np

from PIL import Image

import os

import glob

## Generating a calibration data set

def representative_dataset_gen():

folder = ["images"]

image_size = 225

raw_test_data = []

for name in folder:

dir = "./" + name

files = glob.glob(dir + "/*.jpg")

for file in files:

image = Image.open(file)

image = image.convert("RGB")

image = image.resize((image_size, image_size))

image = np.asarray(image).astype(np.float32)

image = image[np.newaxis,:,:,:]

raw_test_data.append(image)

for data in raw_test_data:

yield [data]

# Integer Quantization - Input/Output=uint8

converter = tf.lite.TFLiteConverter.from_saved_model('saved_model_posenet_resnet50_16_225')

converter.optimizations = [tf.lite.Optimize.DEFAULT]

converter.representative_dataset = representative_dataset_gen

converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS_INT8]

converter.inference_input_type = tf.uint8

converter.inference_output_type = tf.uint8

tflite_quant_model = converter.convert()

with open('posenet_resnet50_16_225_full_integer_quant.tflite', 'wb') as w:

w.write(tflite_quant_model)

print("Integer Quantization complete! - posenet_resnet50_16_225_full_integer_quant.tflite")

# # Integer Quantization - Input/Output=uint8

# converter = tf.lite.TFLiteConverter.from_saved_model('saved_model_posenet_resnet50_16_257')

# converter.optimizations = [tf.lite.Optimize.DEFAULT]

# converter.representative_dataset = representative_dataset_gen

# converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS_INT8]

# converter.inference_input_type = tf.uint8

# converter.inference_output_type = tf.uint8

# tflite_quant_model = converter.convert()

# with open('posenet_resnet50_16_257_full_integer_quant.tflite', 'wb') as w:

# w.write(tflite_quant_model)

# print("Integer Quantization complete! - posenet_resnet50_16_257_full_integer_quant.tflite")

# # Integer Quantization - Input/Output=uint8

# converter = tf.lite.TFLiteConverter.from_saved_model('saved_model_posenet_resnet50_16_321')

# converter.optimizations = [tf.lite.Optimize.DEFAULT]

# converter.representative_dataset = representative_dataset_gen

# converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS_INT8]

# converter.inference_input_type = tf.uint8

# converter.inference_output_type = tf.uint8

# tflite_quant_model = converter.convert()

# with open('posenet_resnet50_16_321_full_integer_quant.tflite', 'wb') as w:

# w.write(tflite_quant_model)

# print("Integer Quantization complete! - posenet_resnet50_16_321_full_integer_quant.tflite")

# # Integer Quantization - Input/Output=uint8

# converter = tf.lite.TFLiteConverter.from_saved_model('saved_model_posenet_resnet50_16_385')

# converter.optimizations = [tf.lite.Optimize.DEFAULT]

# converter.representative_dataset = representative_dataset_gen

# converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS_INT8]

# converter.inference_input_type = tf.uint8

# converter.inference_output_type = tf.uint8

# tflite_quant_model = converter.convert()

# with open('posenet_resnet50_16_385_full_integer_quant.tflite', 'wb') as w:

# w.write(tflite_quant_model)

# print("Integer Quantization complete! - posenet_resnet50_16_385_full_integer_quant.tflite")

# # Integer Quantization - Input/Output=uint8

# converter = tf.lite.TFLiteConverter.from_saved_model('saved_model_posenet_resnet50_16_513')

# converter.optimizations = [tf.lite.Optimize.DEFAULT]

# converter.representative_dataset = representative_dataset_gen

# converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS_INT8]

# converter.inference_input_type = tf.uint8

# converter.inference_output_type = tf.uint8

# tflite_quant_model = converter.convert()

# with open('posenet_resnet50_16_513_full_integer_quant.tflite', 'wb') as w:

# w.write(tflite_quant_model)

# print("Integer Quantization complete! - posenet_resnet50_16_513_full_integer_quant.tflite")

# Integer Quantization - Input/Output=uint8

converter = tf.lite.TFLiteConverter.from_saved_model('saved_model_posenet_resnet50_32_225')

converter.experimental_new_converter = True

converter.optimizations = [tf.lite.Optimize.DEFAULT]

converter.representative_dataset = representative_dataset_gen

converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS_INT8]

converter.inference_input_type = tf.uint8

converter.inference_output_type = tf.uint8

tflite_quant_model = converter.convert()

with open('posenet_resnet50_32_225_full_integer_quant.tflite', 'wb') as w:

w.write(tflite_quant_model)

print("Integer Quantization complete! - posenet_resnet50_32_225_full_integer_quant.tflite")

# # Integer Quantization - Input/Output=uint8

# converter = tf.lite.TFLiteConverter.from_saved_model('saved_model_posenet_resnet50_32_257')

# converter.optimizations = [tf.lite.Optimize.DEFAULT]

# converter.representative_dataset = representative_dataset_gen

# converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS_INT8]

# converter.inference_input_type = tf.uint8

# converter.inference_output_type = tf.uint8

# tflite_quant_model = converter.convert()

# with open('posenet_resnet50_32_257_full_integer_quant.tflite', 'wb') as w:

# w.write(tflite_quant_model)

# print("Integer Quantization complete! - posenet_resnet50_32_257_full_integer_quant.tflite")

# # Integer Quantization - Input/Output=uint8

# converter = tf.lite.TFLiteConverter.from_saved_model('saved_model_posenet_resnet50_32_321')

# converter.optimizations = [tf.lite.Optimize.DEFAULT]

# converter.representative_dataset = representative_dataset_gen

# converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS_INT8]

# converter.inference_input_type = tf.uint8

# converter.inference_output_type = tf.uint8

# tflite_quant_model = converter.convert()

# with open('posenet_resnet50_32_321_full_integer_quant.tflite', 'wb') as w:

# w.write(tflite_quant_model)

# print("Integer Quantization complete! - posenet_resnet50_32_321_full_integer_quant.tflite")

# # Integer Quantization - Input/Output=uint8

# converter = tf.lite.TFLiteConverter.from_saved_model('saved_model_posenet_resnet50_32_385')

# converter.optimizations = [tf.lite.Optimize.DEFAULT]

# converter.representative_dataset = representative_dataset_gen

# converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS_INT8]

# converter.inference_input_type = tf.uint8

# converter.inference_output_type = tf.uint8

# tflite_quant_model = converter.convert()

# with open('posenet_resnet50_32_385_full_integer_quant.tflite', 'wb') as w:

# w.write(tflite_quant_model)

# print("Integer Quantization complete! - posenet_resnet50_32_385_full_integer_quant.tflite")

# # Integer Quantization - Input/Output=uint8

# converter = tf.lite.TFLiteConverter.from_saved_model('saved_model_posenet_resnet50_32_513')

# converter.optimizations = [tf.lite.Optimize.DEFAULT]