1.Introduction

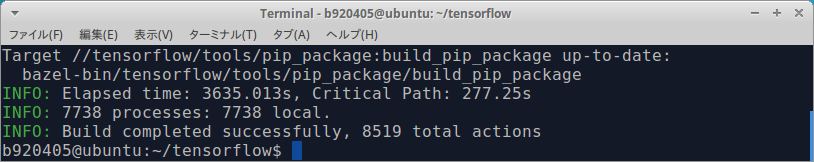

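Last time I tried remote caching with Google Cloud Storage: Ultra-fast build of Tensorflow with Bazel Remote Caching [Google Cloud Storage version]. However, that procedure requires a paid Google account, which raises the barrier to entry somewhat. This time I set up an easy, free local caching build environment by running Bazel Remote Caching on Docker. As before, the first build takes about an hour, but the second and subsequent builds, after parameter adjustments or bug fixes, finish in about 90 seconds. That is a further minute faster than with Google Cloud Storage. If you are curious how fast the build is, see the video below.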

I made a video of the Tensorflow v1.14.0 build, Bazel Remote Caching with Docker. With a local cache served from Docker, the build time was a further minute shorter than with Google Cloud Storage. A full build takes 1 minute 30 seconds. https://t.co/yNiBXVDyyI

— PINTO0309 (@PINTO03091) August 5, 2019

In short, this time I run Bazel Remote Caching locally on Docker. The first build takes about 1 hour, but the second and subsequent builds, after parameter adjustments and bug fixes, take about 90 seconds.

2.Environment

- Ubuntu 16.04 x86_64

- Tensorflow v1.14.0

- Bazel 0.24.1

- Docker

3.Procedure

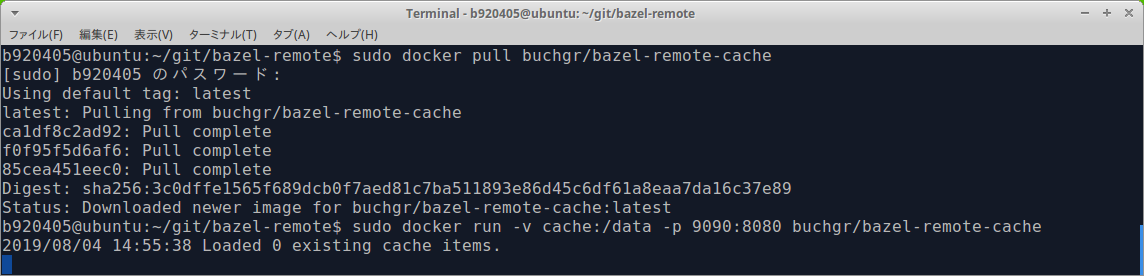

3−1.Running Docker for Bazel Remote Caching

$ cd ~

$ git clone https://github.com/buchgr/bazel-remote.git

$ cd bazel-remote

$ sudo docker pull buchgr/bazel-remote-cache

$ sudo docker run -v ${HOME}/bazel-remote/cache:/data -p 9090:8080 buchgr/bazel-remote-cache
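Before starting the build, it can help to confirm that the cache container is actually listening on the published port. The following is a minimal sketch, assuming `curl` is installed; the helper name `cache_is_up` is hypothetical and not part of bazel-remote. It treats any HTTP response, whatever the status code, as a sign that the server is reachable.

```shell
#!/bin/sh
# Hypothetical helper: succeed if an HTTP server answers at the given URL.
# Any HTTP response (even an error status) counts as reachable, because
# without --fail curl exits non-zero only on connection-level failures.
cache_is_up() {
  curl --silent --output /dev/null --max-time 5 "$1"
}

# Usage: 9090 is the host port published by the docker run command above.
if cache_is_up "http://localhost:9090"; then
  echo "cache server is reachable"
else
  echo "cache server is not reachable"
fi
```

Run it from the second terminal; if it reports that the server is not reachable, check the `-p 9090:8080` mapping and whether the container is still running.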

3−2.First build of Tensorflow with Bazel Remote Caching enabled

Work on a different terminal from the one where you started Docker.

$ sudo apt-get install -y libhdf5-dev libc-ares-dev libeigen3-dev

$ sudo pip3 install keras_applications==1.0.7 --no-deps

$ sudo pip3 install keras_preprocessing==1.0.9 --no-deps

$ sudo pip3 install h5py==2.9.0

$ sudo apt-get install -y openmpi-bin libopenmpi-dev

$ sudo -H pip3 install -U --user six numpy wheel mock

$ sudo apt update;sudo apt upgrade

$ cd ~

$ Bazel_bin/0.24.1/Ubuntu1604_x86_64/install.sh

$ cd ~

$ git clone -b v1.14.0 https://github.com/tensorflow/tensorflow.git

$ cd tensorflow

$ git checkout -b v1.14.0

$ sudo bazel clean

$ ./configure

WARNING: --batch mode is deprecated. Please instead explicitly shut down your Bazel server using the command "bazel shutdown".

You have bazel 0.24.1- (@non-git) installed.

Please specify the location of python. [Default is /usr/bin/python]: /usr/bin/python3

Found possible Python library paths:

/opt/intel/openvino_2019.2.242/python/python3

/opt/intel/openvino_2019.2.242/python/python3.5

/home/b920405/git/caffe-jacinto/python

/opt/intel/openvino_2019.2.242/deployment_tools/model_optimizer

.

/opt/movidius/caffe/python

/usr/lib/python3/dist-packages

/usr/local/lib/python3.5/dist-packages

/usr/local/lib

Please input the desired Python library path to use. Default is [/opt/intel/openvino_2019.2.242/python/python3]

/usr/local/lib/python3.5/dist-packages

Do you wish to build TensorFlow with XLA JIT support? [Y/n]: n

No XLA JIT support will be enabled for TensorFlow.

Do you wish to build TensorFlow with OpenCL SYCL support? [y/N]: n

No OpenCL SYCL support will be enabled for TensorFlow.

Do you wish to build TensorFlow with ROCm support? [y/N]: n

No ROCm support will be enabled for TensorFlow.

Do you wish to build TensorFlow with CUDA support? [y/N]: n

No CUDA support will be enabled for TensorFlow.

Do you wish to download a fresh release of clang? (Experimental) [y/N]: n

Clang will not be downloaded.

Do you wish to build TensorFlow with MPI support? [y/N]: n

No MPI support will be enabled for TensorFlow.

Please specify optimization flags to use during compilation when bazel option "--config=opt" is specified [Default is -march=native -Wno-sign-compare]:

Would you like to interactively configure ./WORKSPACE for Android builds? [y/N]: n

Not configuring the WORKSPACE for Android builds.

Preconfigured Bazel build configs. You can use any of the below by adding "--config=<>" to your build command. See .bazelrc for more details.

--config=mkl # Build with MKL support.

--config=monolithic # Config for mostly static monolithic build.

--config=gdr # Build with GDR support.

--config=verbs # Build with libverbs support.

--config=ngraph # Build with Intel nGraph support.

--config=numa # Build with NUMA support.

--config=dynamic_kernels # (Experimental) Build kernels into separate shared objects.

Preconfigured Bazel build configs to DISABLE default on features:

--config=noaws # Disable AWS S3 filesystem support.

--config=nogcp # Disable GCP support.

--config=nohdfs # Disable HDFS support.

--config=noignite # Disable Apache Ignite support.

--config=nokafka # Disable Apache Kafka support.

--config=nonccl # Disable NVIDIA NCCL support.

Configuration finished

$ sudo bazel build \
--config=opt \
--config=noaws \
--config=nogcp \
--config=nohdfs \
--config=noignite \
--config=nokafka \
--config=nonccl \
--copt=-ftree-vectorize \
--copt=-funsafe-math-optimizations \
--copt=-ftree-loop-vectorize \
--copt=-fomit-frame-pointer \
--remote_http_cache=http://localhost:9090 \
//tensorflow/tools/pip_package:build_pip_package
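The `--remote_http_cache` flag is easy to forget on a later invocation, and a build without it does not use the cache. As a sketch, the flag could instead be stored in a bazelrc file. The helper name `add_remote_cache` and its arguments are hypothetical. This assumes Bazel reads the target file, for example `$HOME/.bazelrc` of the user who runs Bazel; with `sudo`, which home directory that is depends on the sudo configuration.

```shell
#!/bin/sh
# Hypothetical helper: append the remote cache flag to a bazelrc file once.
# $1 = path of the bazelrc file, $2 = URL of the cache server
add_remote_cache() {
  rc_file=$1
  line="build --remote_http_cache=$2"
  # Skip the append when the exact line is already present.
  if [ -f "$rc_file" ] && grep -qxF -- "$line" "$rc_file"; then
    return 0
  fi
  printf '%s\n' "$line" >> "$rc_file"
}

# Usage (writes to a scratch file here; point it at a real bazelrc to apply it).
rc=$(mktemp)
add_remote_cache "$rc" "http://localhost:9090"
add_remote_cache "$rc" "http://localhost:9090"   # second call is a no-op
cat "$rc"
rm -f "$rc"
```

Keeping the flag on the command line, as in this article, has the advantage that it is obvious from the command itself whether the cache is in use.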

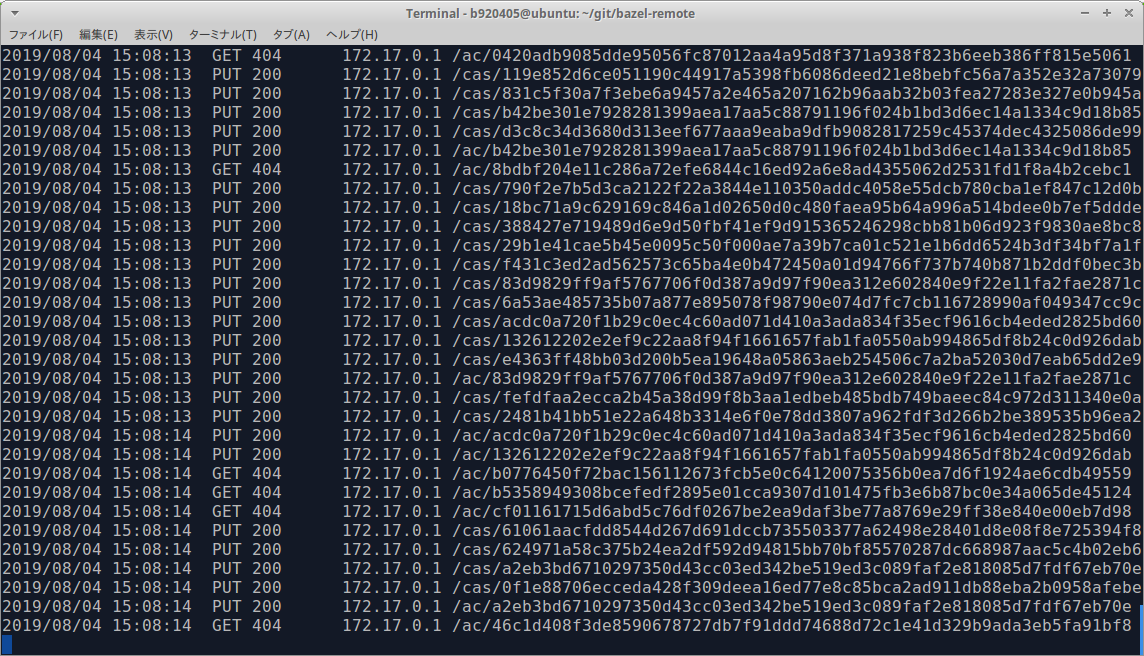

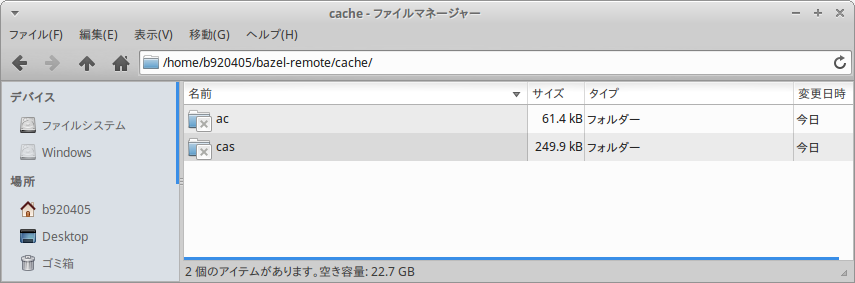

The cache data is saved under ${HOME}/bazel-remote/cache, the host directory that was mounted into the container as /data in step 3−1.
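To see how much the first build has stored, the cache directory can be inspected from the host. This is a minimal sketch; the helper name `cache_summary` is hypothetical. Because the container was started with `sudo`, the files may be owned by root, so the script may need to be run with `sudo` as well.

```shell
#!/bin/sh
# Hypothetical helper: print the number of files and the total size of a directory.
cache_summary() {
  dir=$1
  files=$(find "$dir" -type f | wc -l | tr -d ' ')
  size=$(du -sh "$dir" | cut -f1)
  echo "$files files, $size total"
}

# Usage: inspect the host directory mounted into the container, if it exists.
if [ -d "$HOME/bazel-remote/cache" ]; then
  cache_summary "$HOME/bazel-remote/cache"
fi
```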

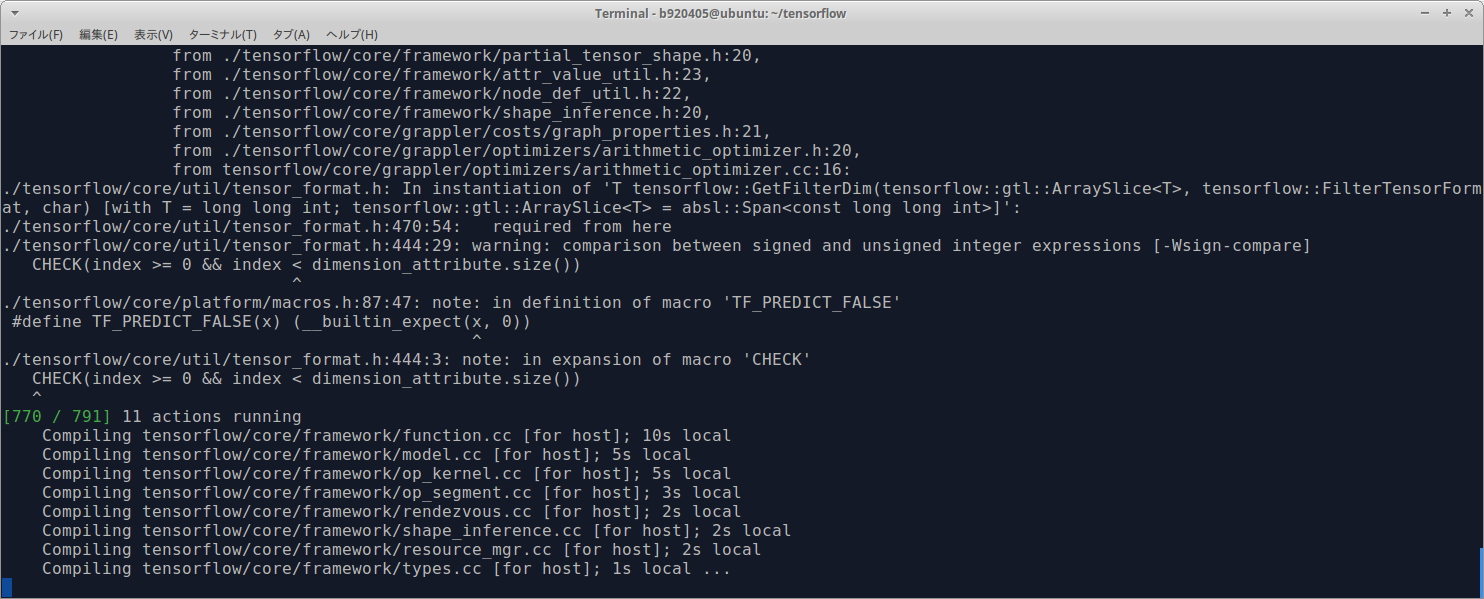

The first build takes about an hour.

3−3.Customize Tensorflow

# Add the following two lines after the last line

  def set_num_threads(self, i):
    return self._interpreter.SetNumThreads(i)

// Modify the code near the end of the file as follows

PyObject* InterpreterWrapper::ResetVariableTensors() {
  TFLITE_PY_ENSURE_VALID_INTERPRETER();
  TFLITE_PY_CHECK(interpreter_->ResetVariableTensors());
  Py_RETURN_NONE;
}

PyObject* InterpreterWrapper::SetNumThreads(int i) {
  interpreter_->SetNumThreads(i);
  Py_RETURN_NONE;
}

}  // namespace interpreter_wrapper
}  // namespace tflite

  // should be the interpreter object providing the memory.
  PyObject* tensor(PyObject* base_object, int i);

  PyObject* SetNumThreads(int i);

 private:
  // Helper function to construct an `InterpreterWrapper` object.
  // It only returns InterpreterWrapper if it can construct an `Interpreter`.

BUILD_WITH_NNAPI=false

from tensorflow.contrib import checkpoint
# if os.name != "nt" and platform.machine() != "s390x":
#   from tensorflow.contrib import cloud
from tensorflow.contrib import cluster_resolver

from tensorflow.contrib.summary import summary

if os.name != "nt" and platform.machine() != "s390x":
  try:
    from tensorflow.contrib import cloud
  except ImportError:
    pass

from tensorflow.python.util.lazy_loader import LazyLoader
ffmpeg = LazyLoader("ffmpeg", globals(),
                    "tensorflow.contrib.ffmpeg")

3−4.Second build of Tensorflow with Bazel Remote Caching enabled

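Delete all previously built binaries with bazel clean, then run a full build of Tensorflow with the bug fixes and modifications applied. When only a few files have changed, almost every build action is served from the cache and the whole build finishes in about 90 seconds.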

$ sudo bazel clean

$ ./configure

WARNING: --batch mode is deprecated. Please instead explicitly shut down your Bazel server using the command "bazel shutdown".

You have bazel 0.24.1- (@non-git) installed.

Please specify the location of python. [Default is /usr/bin/python]: /usr/bin/python3

Found possible Python library paths:

/opt/intel/openvino_2019.2.242/python/python3

/opt/intel/openvino_2019.2.242/python/python3.5

/home/b920405/git/caffe-jacinto/python

/opt/intel/openvino_2019.2.242/deployment_tools/model_optimizer

.

/opt/movidius/caffe/python

/usr/lib/python3/dist-packages

/usr/local/lib/python3.5/dist-packages

/usr/local/lib

Please input the desired Python library path to use. Default is [/opt/intel/openvino_2019.2.242/python/python3]

/usr/local/lib/python3.5/dist-packages

Do you wish to build TensorFlow with XLA JIT support? [Y/n]: n

No XLA JIT support will be enabled for TensorFlow.

Do you wish to build TensorFlow with OpenCL SYCL support? [y/N]: n

No OpenCL SYCL support will be enabled for TensorFlow.

Do you wish to build TensorFlow with ROCm support? [y/N]: n

No ROCm support will be enabled for TensorFlow.

Do you wish to build TensorFlow with CUDA support? [y/N]: n

No CUDA support will be enabled for TensorFlow.

Do you wish to download a fresh release of clang? (Experimental) [y/N]: n

Clang will not be downloaded.

Do you wish to build TensorFlow with MPI support? [y/N]: n

No MPI support will be enabled for TensorFlow.

Please specify optimization flags to use during compilation when bazel option "--config=opt" is specified [Default is -march=native -Wno-sign-compare]:

Would you like to interactively configure ./WORKSPACE for Android builds? [y/N]: n

Not configuring the WORKSPACE for Android builds.

Preconfigured Bazel build configs. You can use any of the below by adding "--config=<>" to your build command. See .bazelrc for more details.

--config=mkl # Build with MKL support.

--config=monolithic # Config for mostly static monolithic build.

--config=gdr # Build with GDR support.

--config=verbs # Build with libverbs support.

--config=ngraph # Build with Intel nGraph support.

--config=numa # Build with NUMA support.

--config=dynamic_kernels # (Experimental) Build kernels into separate shared objects.

Preconfigured Bazel build configs to DISABLE default on features:

--config=noaws # Disable AWS S3 filesystem support.

--config=nogcp # Disable GCP support.

--config=nohdfs # Disable HDFS support.

--config=noignite # Disable Apache Ignite support.

--config=nokafka # Disable Apache Kafka support.

--config=nonccl # Disable NVIDIA NCCL support.

Configuration finished

$ sudo bazel build \
--config=opt \
--config=noaws \
--config=nogcp \
--config=nohdfs \
--config=noignite \
--config=nokafka \
--config=nonccl \
--copt=-ftree-vectorize \
--copt=-funsafe-math-optimizations \
--copt=-ftree-loop-vectorize \
--copt=-fomit-frame-pointer \
--remote_http_cache=http://localhost:9090 \
//tensorflow/tools/pip_package:build_pip_package
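To compare the first and second builds on your own machine, the build command can be wrapped in a small timer. This is only a sketch; the helper name `timed` is hypothetical, and it assumes a `date` that supports `+%s`, as GNU date on Ubuntu does. The shell's own `time` keyword would serve the same purpose.

```shell
#!/bin/sh
# Hypothetical helper: run a command, then report its wall-clock time on stderr.
timed() {
  start=$(date +%s)
  status=0
  "$@" || status=$?
  end=$(date +%s)
  echo "elapsed: $((end - start))s" >&2
  # Pass the exit status of the wrapped command through unchanged.
  return "$status"
}

# Usage with a stand-in command; replace it with the bazel build invocation.
timed sleep 1
```

The elapsed time goes to stderr so that it does not mix with any output of the wrapped command that is captured from stdout.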