GPT-4o Visionに物体検出させてみる

評判のGPT-4o Visionでバウンディングボックスの物体検出ができるか試してみました。

1. GPT-4o Visionを使ってみる

公式のAPIリファレンスに沿ってAPIでGPT-4o Visionを試します。

import base64

import requests

image_path = "HERE_IS_YOUR_IMAGE_PATH"

api_key = "HERE_IS_YOUR_OPENAI_API_KEY"

# Function to encode the image

def encode_image(image_path):

with open(image_path, "rb") as image_file:

return base64.b64encode(image_file.read()).decode('utf-8')

# Getting the base64 string

base64_image = encode_image(image_path)

prompt_message = "How many people are in this picture?"

print(prompt_message)

headers = {

"Content-Type": "application/json",

"Authorization": f"Bearer {api_key}"

}

payload = {

"model": "gpt-4o",

"messages": [

{

"role": "user",

"content": [

{

"type": "text",

"text": prompt_message,

},

{

"type": "image_url",

"image_url": {

"url": f"data:image/jpeg;base64,{base64_image}"

}

}

]

}

],

"max_tokens": 300

}

response = requests.post("https://api.openai.com/v1/chat/completions", headers=headers, json=payload)

print(response.json()["choices"][0]["message"]["content"])

Question: 'How many people are in this picture?' (この写真に写っている人は何人ですか?)

Answer: 'There are 12 people in the picture.' (12人です。)

完璧です。単純な人数カウントはGPT-4turbo Visionでは難しかったですができるようになってます。

しかし、このレベルは既存の物体検出モデルでも余裕です。

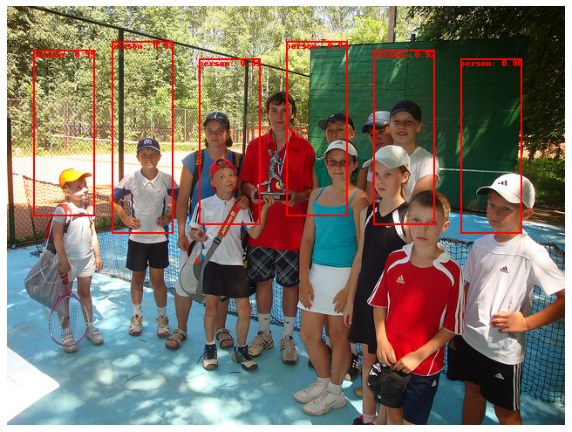

DETR Object Detectionで実行した結果、こっちもちゃんと12人数えられています。

2. どこまで見えているのか

同じ画像で帽子かぶっている人を数えてみます。結果は以下の通り。

Question: 'How many people in this picture are wearing hats and how many are not?' (何人が帽子をかぶっていて、何人はそうではないですか?)

Answer: 'In the picture, there are 12 people in total. Out of these, 9 people are wearing hats and 3 people are not wearing hats.' (12人中、9人が帽子をかぶっていて、3人はかぶってないです。)

目視だと「10人帽子かぶっていて、2人被ってない」ですが、向かって右奥の女性はかぶっているか微妙なとこなので正解で扱いたいと思います。かなり細かい箇所は微妙ですが、大きくは人などの物体が判断できていそうです。

3. 物体検出させてみる

まずは物体検出として振る舞うように画像とプロンプトを与えてみます。

import base64

import requests

import json

image_path = "HERE_IS_YOUR_IMAGE_PATH"

api_key = "HERE_IS_YOUR_OPENAI_API_KEY"

# Function to encode the image

def encode_image(image_path):

with open(image_path, "rb") as image_file:

return base64.b64encode(image_file.read()).decode('utf-8')

image = Image.open(input_path)

# Getting the base64 string

base64_image = encode_image(image_path)

template_text = """

You are an AI model that performs object detection. You are trained by MS COCO dataset.

Please perform object detection and return the coordinates of the bounding box in json format.

Only json is required for the answer, please act as an API. Please use the following format.

{

"detections": [

{

"category": "person",

"bbox": [50, 30, 100, 200],

"score": 0.98

},

{

"category": "dog",

"bbox": [200, 150, 120, 180],

"score": 0.92

},

{

"category": "cat",

"bbox": [300, 100, 80, 160],

"score": 0.85

}

]

}

note: bbox format is [x left coordinate, y top coordinates, x right coordinates, y bottom coordinates]. score is confidence.

"""

additional_info = f"This image size is ({image.size[0]}, {image.size[1]})."

prompt_message = template_text + additional_info

print(prompt_message)

headers = {

"Content-Type": "application/json",

"Authorization": f"Bearer {api_key}"

}

payload = {

"model": "gpt-4o",

"messages": [

{

"role": "user",

"content": [

{

"type": "text",

"text": prompt_message,

},

{

"type": "image_url",

"image_url": {

"url": f"data:image/jpeg;base64,{base64_image}"

}

}

]

}

],

"max_tokens": 300

}

response = requests.post("https://api.openai.com/v1/chat/completions", headers=headers, json=payload)

data = json.loads(response.json()["choices"][0]["message"]["content"])

data

結果、座標データが返ってきました。

{

"detections": [

{

"category": "person",

"bbox": [30, 50, 100, 240],

"score": 0.95

},

{

"category": "person",

"bbox": [120, 40, 190, 260],

"score": 0.93

},

{

"category": "person",

"bbox": [220, 60, 290, 250],

"score": 0.91

},

{

"category": "person",

"bbox": [320, 40, 390, 240],

"score": 0.94

},

{

"category": "person",

"bbox": [420, 50, 490, 250],

"score": 0.92

},

{

"category": "person",

"bbox": [520, 60, 590, 260],

"score": 0.9

}

]

}

描画してみます。

from PIL import Image, ImageDraw

from io import BytesIO

import matplotlib.pyplot as plt

image = Image.open(image_path)

draw = ImageDraw.Draw(image)

for detection in data['detections']:

bbox = detection['bbox']

category = detection['category']

score = detection['score']

left, top, right, bottom = bbox

box = [(left, top), (right, bottom)]

draw.rectangle(box, outline="red", width=2)

text = f"{category}: {score:.2f}"

draw.text((left, top), text, fill="red")

plt.figure(figsize=(10, 10))

plt.imshow(image)

plt.axis('off')

plt.show()

正しく出力されていないようです。別の画像で試してみます。

正しく出力されないので他の方法を試してみます。

4. GPT-4o Vision + Few-shotプロンプトで物体検出に挑戦

3枚画像データを入力し、内2枚は正解の座標を与え、最後の1枚の結果を求めるようにしてみます。いわゆるFew-shotプロンプトです。

from openai import OpenAI

template_text = """

You are an AI model that performs object detection. You are trained by MS COCO dataset.

Please perform object detection and return the coordinates of the bounding box in json format.

Only json is required for the answer, please act as an API. Please use the following format.

{

"detections": [

{

"category": "person",

"bbox": [50, 30, 100, 200],

"score": 0.98

},

{

"category": "dog",

"bbox": [200, 150, 120, 180],

"score": 0.92

},

{

"category": "cat",

"bbox": [300, 100, 80, 160],

"score": 0.85

}

]

}

note: bbox format is [x left coordinate, y top coordinates, x right coordinates, y bottom coordinates]. score is confidence.

For example, first image's result is below.

{

"detections": [

{

"category": "person",

"bbox": [

280,

44,

218,

346

],

"score": 0.9

},

{

"category": "skis",

"bbox": [

205,

362,

409,

38

],

"score": 0.8

}

]

}

Second image's result is below.

{

"detections": [

{

"category": "sports ball",

"bbox": [

408,

172,

19,

16

],

"score": 0.9

},

{

"category": "person",

"bbox": [

145,

100,

291,

457

],

"score": 0.9

},

{

"category": "person",

"bbox": [

163,

126,

265,

480

],

"score": 0.9

},

{

"category": "baseball glove",

"bbox": [

368,

157,

57,

45

],

"score": 0.8

}

]

}

Third image's result is below.

"""

print(template_text)

client = OpenAI(

api_key=api_key,

)

response = client.chat.completions.create(

model="gpt-4o",

messages=[

{

"role": "user",

"content": [

{

"type": "text",

"text": template_text,

},

{

"type": "image_url",

"image_url": {

"url": "http://images.cocodataset.org/val2017/000000000785.jpg",

},

},

{

"type": "image_url",

"image_url": {

"url": "http://images.cocodataset.org/val2017/000000000872.jpg",

},

},

{

"type": "image_url",

"image_url": {

"url": "http://images.cocodataset.org/val2017/000000001000.jpg",

},

},

],

}

],

max_tokens=300,

)

print(response.choices[0])

座標データが返ってきました。

{

"detections": [

{ "category": "person", "bbox": [112, 10, 435, 482], "score": 0.98 },

{ "category": "skis", "bbox": [114, 255, 329, 471], "score": 0.95 },

{ "category": "person", "bbox": [150, 108, 366, 478], "score": 0.97 },

{ "category": "sports ball", "bbox": [241, 134, 374, 186], "score": 0.92 },

{ "category": "person", "bbox": [0, 84, 358, 482], "score": 0.99 }

]

}

描画してみます。

人数はしっかりわかっているようですが、どこに人がいつか座標データとして出力することは難しいようです。

プロンプトやファインチューニングによっては結果が正しく出るようになるのでしょうか。

引き続き調査を続けてみます。