I tried creating a knowledge base from Azure Blob Storage with Foundry IQ.

This is a record of what I did.

Steps

1. Create the knowledge base

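Select Configure a knowledge base -> Configure a knowledge base, then click Connect.

The skillset that gets generated depends on the Context extraction mode chosen here (the index is the same either way).

Note that Standard is only available in a limited set of regions.

For now, I entered the values shown below and clicked "Save knowledge base".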

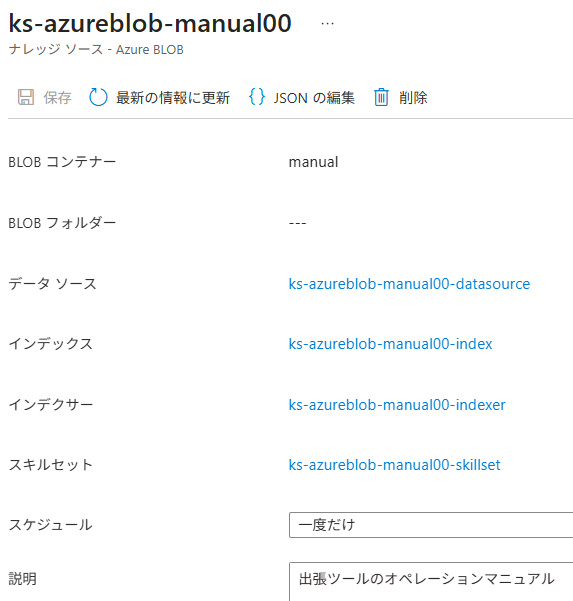

2. What was created

Knowledge base and knowledge source

In AI Search, a knowledge base and a knowledge source have been created.

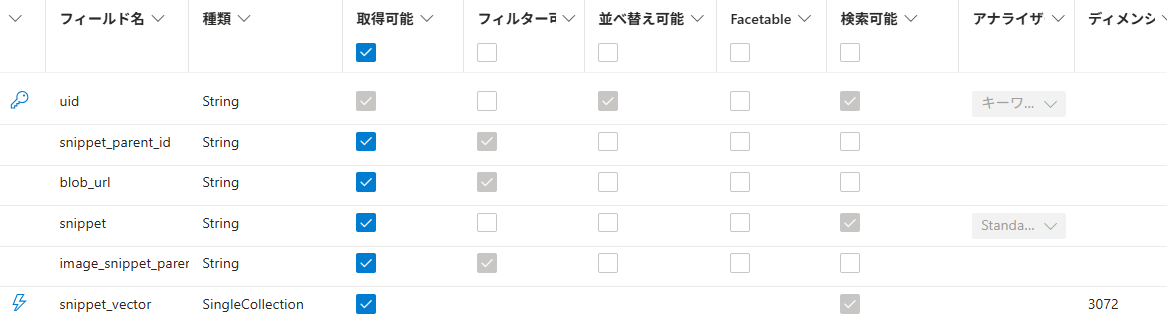

Index definition

index

{
  "@odata.etag": "\"0x8DE2CADB658B6AD\"",
  "name": "ks-azureblob-manual00-index",
  "description": "Search index for knowledge source 'ks-azureblob-manual00'",
  "fields": [
    {
      "name": "uid",
      "type": "Edm.String",
      "searchable": true,
      "filterable": false,
      "retrievable": true,
      "stored": true,
      "sortable": true,
      "facetable": false,
      "key": true,
      "analyzer": "keyword",
      "synonymMaps": []
    },
    {
      "name": "snippet_parent_id",
      "type": "Edm.String",
      "searchable": false,
      "filterable": true,
      "retrievable": true,
      "stored": true,
      "sortable": false,
      "facetable": false,
      "key": false,
      "synonymMaps": []
    },
    {
      "name": "blob_url",
      "type": "Edm.String",
      "searchable": false,
      "filterable": true,
      "retrievable": true,
      "stored": true,
      "sortable": false,
      "facetable": false,
      "key": false,
      "synonymMaps": []
    },
    {
      "name": "snippet",
      "type": "Edm.String",
      "searchable": true,
      "filterable": false,
      "retrievable": true,
      "stored": true,
      "sortable": false,
      "facetable": false,
      "key": false,
      "synonymMaps": []
    },
    {
      "name": "image_snippet_parent_id",
      "type": "Edm.String",
      "searchable": false,
      "filterable": true,
      "retrievable": true,
      "stored": true,
      "sortable": false,
      "facetable": false,
      "key": false,
      "synonymMaps": []
    },
    {
      "name": "snippet_vector",
      "type": "Collection(Edm.Single)",
      "searchable": true,
      "filterable": false,
      "retrievable": true,
      "stored": true,
      "sortable": false,
      "facetable": false,
      "key": false,
      "dimensions": 3072,
      "vectorSearchProfile": "ks-azureblob-manual00-vector-search-profile",
      "synonymMaps": []
    }
  ],
  "scoringProfiles": [],
  "suggesters": [],
  "analyzers": [],
  "normalizers": [],
  "tokenizers": [],
  "tokenFilters": [],
  "charFilters": [],
  "similarity": {
    "@odata.type": "#Microsoft.Azure.Search.BM25Similarity"
  },
  "semantic": {
    "defaultConfiguration": "ks-azureblob-manual00-semantic-configuration",
    "configurations": [
      {
        "name": "ks-azureblob-manual00-semantic-configuration",
        "flightingOptIn": false,
        "rankingOrder": "BoostedRerankerScore",
        "prioritizedFields": {
          "prioritizedContentFields": [
            {
              "fieldName": "snippet"
            }
          ],
          "prioritizedKeywordsFields": []
        }
      }
    ]
  },
  "vectorSearch": {
    "algorithms": [
      {
        "name": "ks-azureblob-manual00-vector-search-algorithm",
        "kind": "hnsw",
        "hnswParameters": {
          "metric": "cosine",
          "m": 4,
          "efConstruction": 400,
          "efSearch": 500
        }
      }
    ],
    "profiles": [
      {
        "name": "ks-azureblob-manual00-vector-search-profile",
        "algorithm": "ks-azureblob-manual00-vector-search-algorithm",
        "vectorizer": "ks-azureblob-manual00-vectorizer",
        "compression": "ks-azureblob-manual00-vector-search-scalar-quantization"
      }
    ],
    "vectorizers": [
      {
        "name": "ks-azureblob-manual00-vectorizer",
        "kind": "azureOpenAI",
        "azureOpenAIParameters": {
          "resourceUri": "https://ai-foundry-agent-eus2.openai.azure.com",
          "deploymentId": "text-embedding-3-large",
          "apiKey": "<redacted>",
          "modelName": "text-embedding-3-large"
        }
      }
    ],
    "compressions": [
      {
        "name": "ks-azureblob-manual00-vector-search-scalar-quantization",
        "kind": "scalarQuantization",
        "scalarQuantizationParameters": {
          "quantizedDataType": "int8"
        },
        "rescoringOptions": {
          "enableRescoring": true,
          "defaultOversampling": 4,
          "rescoreStorageMethod": "preserveOriginals"
        }
      }
    ]
  }
}
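To get a feel for what the vector settings in this index imply, here is a rough back-of-the-envelope sketch. It only does arithmetic on values taken from the definition (3072 dimensions, int8 scalar quantization, an oversampling factor of 4). The function names and the choice of k=10 are illustrative assumptions, and it assumes that rescoring fetches roughly k times the oversampling factor candidates; real index size includes overhead that is not modeled here.

```python
# Values copied from the index definition above.
DIMENSIONS = 3072            # snippet_vector "dimensions"
DEFAULT_OVERSAMPLING = 4     # rescoringOptions "defaultOversampling"

BYTES_PER_FLOAT32 = 4        # Collection(Edm.Single) holds 32-bit floats
BYTES_PER_INT8 = 1           # quantizedDataType "int8"


def vector_bytes(dimensions: int, bytes_per_component: int) -> int:
    """Raw size of one vector, ignoring any index overhead."""
    return dimensions * bytes_per_component


def rescoring_candidates(k: int, oversampling: float) -> int:
    """Assumed number of candidates pulled from the quantized vectors
    before they are rescored against the preserved originals."""
    return int(k * oversampling)


original = vector_bytes(DIMENSIONS, BYTES_PER_FLOAT32)
quantized = vector_bytes(DIMENSIONS, BYTES_PER_INT8)

print(f"float32 vector per snippet: {original} bytes")
print(f"int8 vector per snippet:    {quantized} bytes")
print(f"candidates for k=10:        {rescoring_candidates(10, DEFAULT_OVERSAMPLING)}")
```

Because "rescoreStorageMethod" is "preserveOriginals", the full-precision vectors are kept alongside the quantized ones, so quantization here is mainly about faster search rather than smaller storage.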

Skillset definition

skillsets

{
  "@odata.etag": "\"0x8DE2CADB6728A48\"",
  "name": "ks-azureblob-manual00-skillset",
  "description": "Skillset for knowledge source 'ks-azureblob-manual00'",
  "skills": [
    {
      "@odata.type": "#Microsoft.Skills.Text.SplitSkill",
      "name": "SplitSkill",
      "description": "Split document content into chunks",
      "context": "/document",
      "defaultLanguageCode": "en",
      "textSplitMode": "pages",
      "maximumPageLength": 2000,
      "pageOverlapLength": 200,
      "maximumPagesToTake": 0,
      "unit": "characters",
      "inputs": [
        {
          "name": "text",
          "source": "/document/content",
          "inputs": []
        }
      ],
      "outputs": [
        {
          "name": "textItems",
          "targetName": "pages"
        }
      ]
    },
    {
      "@odata.type": "#Microsoft.Skills.Text.AzureOpenAIEmbeddingSkill",
      "name": "AzureOpenAIEmbeddingSkill",
      "description": "Generate embeddings",
      "context": "/document/pages/*",
      "resourceUri": "https://<resource name>.openai.azure.com",
      "apiKey": "<redacted>",
      "deploymentId": "text-embedding-3-large",
      "dimensions": 3072,
      "modelName": "text-embedding-3-large",
      "inputs": [
        {
          "name": "text",
          "source": "/document/pages/*",
          "inputs": []
        }
      ],
      "outputs": [
        {
          "name": "embedding",
          "targetName": "text_vector"
        }
      ]
    },
    {
      "@odata.type": "#Microsoft.Skills.Custom.ChatCompletionSkill",
      "name": "GenAISkill",
      "description": "Generate chat responses for image verbalization",
      "context": "/document/normalized_images/*",
      "uri": "https://<resource name>.openai.azure.com/openai/deployments/gpt-4.1/chat/completions?api-version=2024-10-21",
      "httpMethod": "POST",
      "timeout": "PT1M",
      "batchSize": 1,
      "apiKey": "<redacted>",
      "inputs": [
        {
          "name": "systemMessage",
          "source": "='You are tasked with generating concise, accurate descriptions of images, figures, diagrams, or charts in documents.'",
          "inputs": []
        },
        {
          "name": "userMessage",
          "source": "='Please describe this image.'",
          "inputs": []
        },
        {
          "name": "image",
          "source": "/document/normalized_images/*/data",
          "inputs": []
        }
      ],
      "outputs": [
        {
          "name": "response",
          "targetName": "verbalizedImage"
        }
      ],
      "httpHeaders": {}
    },
    {
      "@odata.type": "#Microsoft.Skills.Text.AzureOpenAIEmbeddingSkill",
      "name": "VerbalizedImageAzureOpenAIEmbeddingSkill",
      "description": "Generate embeddings",
      "context": "/document/normalized_images/*",
      "resourceUri": "https://<resource name>.openai.azure.com",
      "apiKey": "<redacted>",
      "deploymentId": "text-embedding-3-large",
      "dimensions": 3072,
      "modelName": "text-embedding-3-large",
      "inputs": [
        {
          "name": "text",
          "source": "/document/normalized_images/*/verbalizedImage",
          "inputs": []
        }
      ],
      "outputs": [
        {
          "name": "embedding",
          "targetName": "verbalizedImage_vector"
        }
      ]
    }
  ],
  "indexProjections": {
    "selectors": [
      {
        "targetIndexName": "ks-azureblob-manual00-index",
        "parentKeyFieldName": "snippet_parent_id",
        "sourceContext": "/document/pages/*",
        "mappings": [
          {
            "name": "snippet_vector",
            "source": "/document/pages/*/text_vector",
            "inputs": []
          },
          {
            "name": "snippet",
            "source": "/document/pages/*",
            "inputs": []
          },
          {
            "name": "blob_url",
            "source": "/document/blob_url",
            "inputs": []
          }
        ]
      },
      {
        "targetIndexName": "ks-azureblob-manual00-index",
        "parentKeyFieldName": "image_snippet_parent_id",
        "sourceContext": "/document/normalized_images/*",
        "mappings": [
          {
            "name": "snippet_vector",
            "source": "/document/normalized_images/*/verbalizedImage_vector",
            "inputs": []
          },
          {
            "name": "snippet",
            "source": "/document/normalized_images/*/verbalizedImage",
            "inputs": []
          },
          {
            "name": "blob_url",
            "source": "/document/blob_url",
            "inputs": []
          }
        ]
      }
    ],
    "parameters": {
      "projectionMode": "skipIndexingParentDocuments"
    }
  }
}
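To make the text path of this skillset more concrete, here is a minimal Python sketch of what SplitSkill plus the first index projection selector roughly amount to: cut the content into 2000-character pages with a 200-character overlap, then emit one child document per page. This is a simplification under stated assumptions, not the service's implementation. The function names, the parent ID, and the blob URL are hypothetical; the real SplitSkill also tries to respect sentence boundaries; and the `uid` and `snippet_vector` fields are left out because they are produced by the service and the embedding skill.

```python
from typing import Dict, List

MAX_PAGE_LENGTH = 2000   # SplitSkill "maximumPageLength"
PAGE_OVERLAP = 200       # SplitSkill "pageOverlapLength"


def split_pages(text: str, max_len: int = MAX_PAGE_LENGTH,
                overlap: int = PAGE_OVERLAP) -> List[str]:
    """Naive fixed-size chunking with overlap, measured in characters.

    Assumption: no sentence-boundary handling, unlike the real skill.
    """
    if overlap >= max_len:
        raise ValueError("overlap must be smaller than max_len")
    pages: List[str] = []
    start = 0
    step = max_len - overlap
    while start < len(text):
        pages.append(text[start:start + max_len])
        if start + max_len >= len(text):
            break  # the last page already reaches the end of the text
        start += step
    return pages


def project_pages(parent_id: str, blob_url: str,
                  pages: List[str]) -> List[Dict[str, str]]:
    """Mimic the first selector: one child document per page.

    Only the parent document's chunks are indexed, matching
    "projectionMode": "skipIndexingParentDocuments".
    """
    return [
        {"snippet_parent_id": parent_id, "blob_url": blob_url, "snippet": page}
        for page in pages
    ]


# Hypothetical 4500-character document.
sample_text = "".join(str(i % 10) for i in range(4500))
pages = split_pages(sample_text)
docs = project_pages("parent-1", "https://example.invalid/container/manual.pdf", pages)

print([len(p) for p in pages])
print(len(docs), "child documents")
```

The second selector works the same way for images, except that the parent key goes into `image_snippet_parent_id` and the snippet is the verbalized image text rather than a page of the original content.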

Checking how the skills connect in a debug session

Because the indexer defines "imageAction": "generateNormalizedImages", images appear to be extracted up front, and GenAISkill is then used to convert each extracted image into text.
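The same connections can be derived from the skillset definition itself by matching each skill's input sources against the outputs of other skills. The sketch below does this for a hand-trimmed copy of the four skills (names, contexts, input sources, and output target names only; the constant prompt inputs of GenAISkill are omitted). The `SKILLS` structure and the helper functions are illustrative assumptions, and any input that no skill produces is attributed to the indexer.

```python
from typing import Dict, List, Tuple

# Trimmed copy of the skills in the skillset definition.
SKILLS = [
    {"name": "SplitSkill", "context": "/document",
     "inputs": ["/document/content"], "outputs": ["pages"]},
    {"name": "AzureOpenAIEmbeddingSkill", "context": "/document/pages/*",
     "inputs": ["/document/pages/*"], "outputs": ["text_vector"]},
    {"name": "GenAISkill", "context": "/document/normalized_images/*",
     "inputs": ["/document/normalized_images/*/data"],
     "outputs": ["verbalizedImage"]},
    {"name": "VerbalizedImageAzureOpenAIEmbeddingSkill",
     "context": "/document/normalized_images/*",
     "inputs": ["/document/normalized_images/*/verbalizedImage"],
     "outputs": ["verbalizedImage_vector"]},
]


def normalize(path: str) -> str:
    """Drop the '/*' enumeration markers so paths can be compared directly."""
    return path.replace("/*", "")


def build_edges(skills: List[dict]) -> List[Tuple[str, str, str]]:
    """Return (producer, consumer, path) for every skill input.

    Inputs that no skill produces are attributed to the indexer
    (document cracking or image extraction).
    """
    produced: Dict[str, str] = {}
    for skill in skills:
        for target in skill["outputs"]:
            produced[normalize(f'{skill["context"]}/{target}')] = skill["name"]

    edges = []
    for skill in skills:
        for source in skill["inputs"]:
            producer = produced.get(normalize(source), "indexer")
            edges.append((producer, skill["name"], source))
    return edges


edges = build_edges(SKILLS)
for producer, consumer, path in edges:
    print(f"{producer} -> {consumer}  ({path})")
```

The output shows two independent chains: the indexer feeds `/document/content` to SplitSkill, whose pages are embedded, and the indexer feeds the normalized images to GenAISkill, whose descriptions are embedded by the second embedding skill.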

Incidentally, if you set Context extraction mode to Standard, Content Understanding is used, as shown below.

The skillset definition in that case

SkillSet

{
  "@odata.etag": "\"0x8DE4B60097E4A30\"",
  "name": "ks-azureblob-standard-skillset",
  "description": "Skillset for knowledge source 'ks-azureblob-standard'",
  "skills": [
    {
      "@odata.type": "#Microsoft.Skills.Util.ContentUnderstandingSkill",
      "name": "contentUnderstandingSkill",
      "context": "/document",
      "extractionOptions": [
        "images",
        "locationMetadata"
      ],
      "inputs": [
        {
          "name": "file_data",
          "source": "/document/file_data",
          "inputs": []
        }
      ],
      "outputs": [
        {
          "name": "text_sections",
          "targetName": "text_sections"
        },
        {
          "name": "normalized_images",
          "targetName": "normalized_images"
        }
      ],
      "chunkingProperties": {
        "unit": "characters",
        "maximumLength": 2000,
        "overlapLength": 200
      }
    },
    {
      "@odata.type": "#Microsoft.Skills.Text.AzureOpenAIEmbeddingSkill",
      "name": "AzureOpenAIEmbeddingSkill",
      "description": "Generate embeddings",
      "context": "/document/text_sections/*",
      "resourceUri": "https://<resource>.openai.azure.com",
      "apiKey": "<redacted>",
      "deploymentId": "text-embedding-3-small",
      "dimensions": 1536,
      "modelName": "text-embedding-3-small",
      "inputs": [
        {
          "name": "text",
          "source": "/document/text_sections/*/content",
          "inputs": []
        }
      ],
      "outputs": [
        {
          "name": "embedding",
          "targetName": "text_vector"
        }
      ]
    },
    {
      "@odata.type": "#Microsoft.Skills.Custom.ChatCompletionSkill",
      "name": "GenAISkill",
      "description": "Generate chat responses for image verbalization",
      "context": "/document/normalized_images/*",
      "uri": "https://<resource>.openai.azure.com/openai/deployments/gpt-4.1-mini/chat/completions?api-version=2024-10-21",
      "httpMethod": "POST",
      "timeout": "PT1M",
      "batchSize": 1,
      "apiKey": "<redacted>",
      "inputs": [
        {
          "name": "systemMessage",
          "source": "='You are tasked with generating concise, accurate descriptions of images, figures, diagrams, or charts in documents.'",
          "inputs": []
        },
        {
          "name": "userMessage",
          "source": "='Please describe this image.'",
          "inputs": []
        },
        {
          "name": "image",
          "source": "/document/normalized_images/*/data",
          "inputs": []
        }
      ],
      "outputs": [
        {
          "name": "response",
          "targetName": "verbalizedImage"
        }
      ],
      "httpHeaders": {}
    },
    {
      "@odata.type": "#Microsoft.Skills.Text.AzureOpenAIEmbeddingSkill",
      "name": "VerbalizedImageAzureOpenAIEmbeddingSkill",
      "description": "Generate embeddings",
      "context": "/document/normalized_images/*",
      "resourceUri": "https://<resource>.openai.azure.com",
      "apiKey": "<redacted>",
      "deploymentId": "text-embedding-3-small",
      "dimensions": 1536,
      "modelName": "text-embedding-3-small",
      "inputs": [
        {
          "name": "text",
          "source": "/document/normalized_images/*/verbalizedImage",
          "inputs": []
        }
      ],
      "outputs": [
        {
          "name": "embedding",
          "targetName": "verbalizedImage_vector"
        }
      ]
    }
  ],
  "cognitiveServices": {
    "@odata.type": "#Microsoft.Azure.Search.AIServicesByKey",
    "description": "AI Services for knowledge source",
    "key": "<redacted>",
    "subdomainUrl": "https://<resource>.services.ai.azure.com"
  },
  "indexProjections": {
    "selectors": [
      {
        "targetIndexName": "ks-azureblob-standard-index",
        "parentKeyFieldName": "snippet_parent_id",
        "sourceContext": "/document/text_sections/*",
        "mappings": [
          {
            "name": "snippet_vector",
            "source": "/document/text_sections/*/text_vector",
            "inputs": []
          },
          {
            "name": "snippet",
            "source": "/document/text_sections/*/content",
            "inputs": []
          },
          {
            "name": "blob_url",
            "source": "/document/blob_url",
            "inputs": []
          }
        ]
      },
      {
        "targetIndexName": "ks-azureblob-standard-index",
        "parentKeyFieldName": "image_snippet_parent_id",
        "sourceContext": "/document/normalized_images/*",
        "mappings": [
          {
            "name": "snippet_vector",
            "source": "/document/normalized_images/*/verbalizedImage_vector",
            "inputs": []
          },
          {
            "name": "snippet",
            "source": "/document/normalized_images/*/verbalizedImage",
            "inputs": []
          },
          {
            "name": "blob_url",
            "source": "/document/blob_url",
            "inputs": []
          }
        ]
      }
    ],
    "parameters": {
      "projectionMode": "skipIndexingParentDocuments"
    }
  }
}
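To see at a glance what actually changes between the two skillsets, the skill types can be compared as sets. The sketch below uses the "@odata.type" values copied by hand from the two definitions in this post; the variable names are illustrative, and "first" simply refers to the skillset shown earlier, created without the Standard mode.

```python
# "@odata.type" values copied from the two skillset definitions in this post.
FIRST_SKILL_TYPES = [
    "#Microsoft.Skills.Text.SplitSkill",
    "#Microsoft.Skills.Text.AzureOpenAIEmbeddingSkill",
    "#Microsoft.Skills.Custom.ChatCompletionSkill",
    "#Microsoft.Skills.Text.AzureOpenAIEmbeddingSkill",
]
STANDARD_SKILL_TYPES = [
    "#Microsoft.Skills.Util.ContentUnderstandingSkill",
    "#Microsoft.Skills.Text.AzureOpenAIEmbeddingSkill",
    "#Microsoft.Skills.Custom.ChatCompletionSkill",
    "#Microsoft.Skills.Text.AzureOpenAIEmbeddingSkill",
]

first = set(FIRST_SKILL_TYPES)
standard = set(STANDARD_SKILL_TYPES)

only_in_first = first - standard
only_in_standard = standard - first
common = first & standard

print("Only in the first skillset:   ", sorted(only_in_first))
print("Only in the Standard skillset:", sorted(only_in_standard))
print("Shared by both:               ", sorted(common))
```

In other words, the image verbalization and embedding skills are shared, while SplitSkill is replaced by ContentUnderstandingSkill, which does its own chunking (same 2000/200 character settings) and emits `text_sections` and `normalized_images` instead of relying on `/document/content` and the indexer's image extraction. The Standard skillset also gains a "cognitiveServices" section for the AI Services resource.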