What to prepare

- Google Colaboratory (a Jupyter Notebook also works)

- The distributed source code (not needed if you write everything from scratch)

What is TensorBoard?

TensorBoard provides the visualization and tooling needed for machine learning experimentation.

Its features include:

- Tracking and visualization of metrics (loss, accuracy, etc.)

- Model graph visualization (operations and layers)

- Display histograms of weights, biases, and other tensors as they change over time

- Projection of embeddings into lower dimensional space

- Display of image, text, and audio data

- Profiling of TensorFlow programs

- And more

How to start using it quickly

Load the TensorBoard notebook extension.

```python
%load_ext tensorboard
```

Import the required packages.

```python
import tensorflow as tf
import datetime
```

Using the MNIST dataset as an example, normalize the data and write a function that creates a simple Keras model that classifies the images into 10 classes.

```python
mnist = tf.keras.datasets.mnist

(x_train, y_train), (x_test, y_test) = mnist.load_data()
# Scale pixel values from 0-255 to 0-1
x_train, x_test = x_train / 255.0, x_test / 255.0

def create_model():
    return tf.keras.models.Sequential([
        tf.keras.layers.Flatten(input_shape=(28, 28)),
        tf.keras.layers.Dense(512, activation='relu'),
        tf.keras.layers.Dropout(0.2),
        tf.keras.layers.Dense(10, activation='softmax')
    ])
```

```
Downloading data from https://storage.googleapis.com/tensorflow/tf-keras-datasets/mnist.npz
11493376/11490434 [==============================] - 0s 0us/step
11501568/11490434 [==============================] - 0s 0us/step
```
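As a quick sanity check on the model defined above, its trainable parameter count can be worked out by hand: a Dense layer with n inputs and m units has n·m weights plus m biases, while Flatten and Dropout have no parameters. The sketch below is plain Python arithmetic (the helper `dense_params` is hypothetical, not a Keras function); the total should match what `model.summary()` reports for this architecture.

```python
def dense_params(n_inputs: int, n_units: int) -> int:
    """Hypothetical helper: weights (n_inputs * n_units) plus one bias per unit."""
    return n_inputs * n_units + n_units

flatten_out = 28 * 28                     # Flatten turns a 28x28 image into 784 values
hidden = dense_params(flatten_out, 512)   # Dense(512): 784*512 + 512
output = dense_params(512, 10)            # Dense(10): 512*10 + 10
total = hidden + output                   # Flatten and Dropout add no parameters

print(f"hidden={hidden}, output={output}, total={total}")
# → hidden=401920, output=5130, total=407050
```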

To use TensorBoard with Keras `Model.fit()`, pass a `tf.keras.callbacks.TensorBoard` callback.

```python
model = create_model()
model.compile(optimizer='adam',
              loss='sparse_categorical_crossentropy',
              metrics=['accuracy'])

# Write each run's logs to its own timestamped directory
log_dir = "logs/fit/" + datetime.datetime.now().strftime("%Y%m%d-%H%M%S")
# histogram_freq=1 computes weight histograms every epoch
tensorboard_callback = tf.keras.callbacks.TensorBoard(log_dir=log_dir, histogram_freq=1)

model.fit(x=x_train,
          y=y_train,
          epochs=5,
          validation_data=(x_test, y_test),
          callbacks=[tensorboard_callback])
```

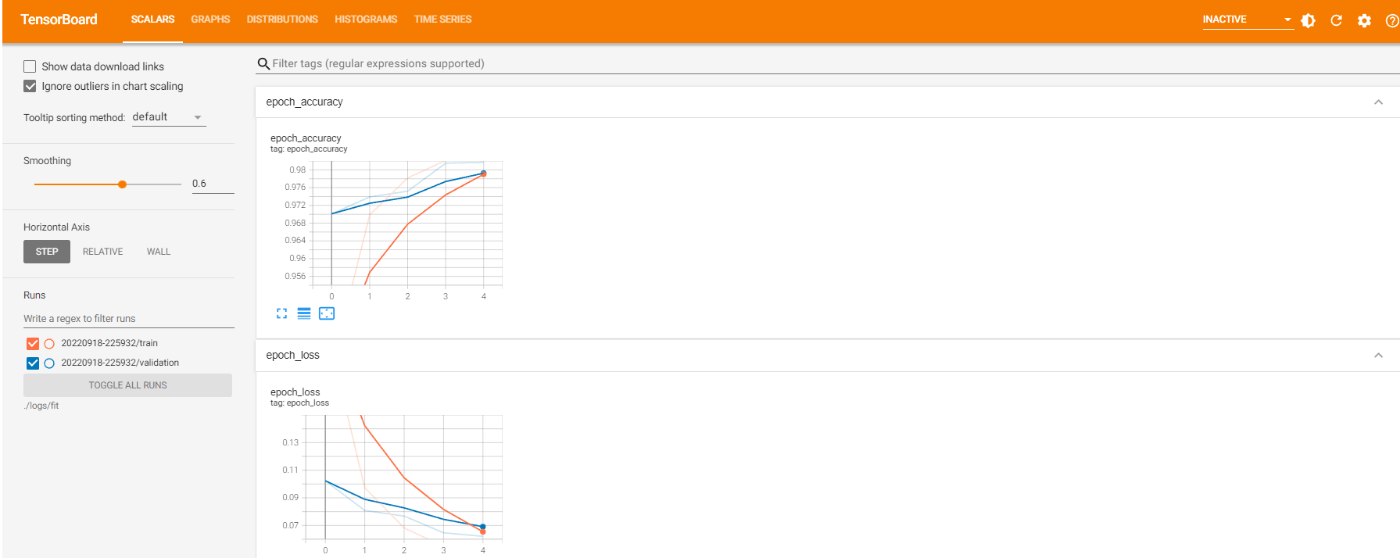

```
Epoch 1/5
1875/1875 [==============================] - 12s 6ms/step - loss: 0.2179 - accuracy: 0.9365 - val_loss: 0.1300 - val_accuracy: 0.9606
Epoch 2/5
1875/1875 [==============================] - 12s 6ms/step - loss: 0.0967 - accuracy: 0.9705 - val_loss: 0.0777 - val_accuracy: 0.9757
Epoch 3/5
1875/1875 [==============================] - 13s 7ms/step - loss: 0.0680 - accuracy: 0.9787 - val_loss: 0.0686 - val_accuracy: 0.9785
Epoch 4/5
1875/1875 [==============================] - 13s 7ms/step - loss: 0.0530 - accuracy: 0.9832 - val_loss: 0.0755 - val_accuracy: 0.9772
Epoch 5/5
1875/1875 [==============================] - 13s 7ms/step - loss: 0.0431 - accuracy: 0.9859 - val_loss: 0.0642 - val_accuracy: 0.9817
```
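The `1875/1875` in each progress bar is the number of batches per epoch. `Model.fit()` uses `batch_size=32` when none is given, so the 60,000 training images are split into ceil(60000 / 32) batches. A minimal arithmetic sketch (the helper `steps_per_epoch` is hypothetical):

```python
import math

def steps_per_epoch(num_examples: int, batch_size: int) -> int:
    """Hypothetical helper: a final partial batch still counts as one step."""
    return math.ceil(num_examples / batch_size)

print(steps_per_epoch(60_000, 32))  # → 1875 (Model.fit default batch size)
print(steps_per_epoch(60_000, 64))  # → 938 (batch size used later with tf.data)
```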

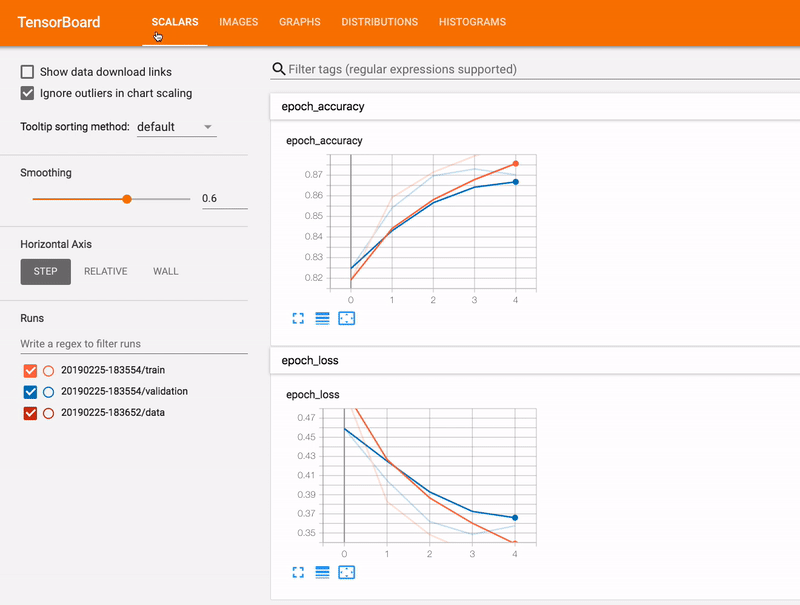

Start TensorBoard.

```python
%tensorboard --logdir logs/fit
```
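TensorBoard treats each subdirectory under `--logdir` that contains event files as a separate run, which is why the callback's `log_dir` ends in a timestamp: every training session lands in its own folder and can be compared side by side. A stdlib-only sketch of that naming scheme, assuming a temporary directory as the root (`make_run_dir` and the fixed timestamps are hypothetical):

```python
import datetime
import os
import tempfile

def make_run_dir(root: str, when: datetime.datetime) -> str:
    """Hypothetical helper: create root/<timestamp> using the same format as log_dir."""
    # "%Y%m%d-%H%M%S" sorts chronologically as plain text
    path = os.path.join(root, when.strftime("%Y%m%d-%H%M%S"))
    os.makedirs(path, exist_ok=True)
    return path

root = os.path.join(tempfile.mkdtemp(), "logs", "fit")
# Two made-up training sessions on the same day
first = make_run_dir(root, datetime.datetime(2021, 5, 1, 9, 30, 0))
second = make_run_dir(root, datetime.datetime(2021, 5, 1, 10, 15, 42))

print(sorted(os.listdir(root)))  # → ['20210501-093000', '20210501-101542']
```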

If you get a 403 error, check the following browser settings:

- Allow cookies

- Turn off enhanced tracking protection

For details, see https://stackoverflow.com/questions/64218755/getting-error-403-in-google-colab-with-tensorboard-with-firefox

A brief description of the tabs in the dashboard's top navigation bar:

| Tab | Description |
|---|---|
| Scalars | Shows how the loss and metrics change every epoch. You can also track training speed, learning rate, and other scalar values. |
| Graphs | Visualizes the model graph. |
| Distributions and Histograms | Show how tensor distributions change over time. Visualizing weights and biases helps confirm that they are changing as expected. |
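To get a feel for what the Distributions and Histograms tabs display, the sketch below buckets a list of weight values into equal-width bins and prints a text histogram. It only illustrates the idea: TensorBoard's own bucketing scheme differs, and both the weight values and the `histogram` helper are hypothetical.

```python
from typing import List, Sequence

def histogram(values: Sequence[float], num_bins: int) -> List[int]:
    """Hypothetical helper: count values in equal-width bins spanning [min, max]."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / num_bins or 1.0  # avoid zero width when all values are equal
    counts = [0] * num_bins
    for v in values:
        idx = min(int((v - lo) / width), num_bins - 1)  # the max value goes in the last bin
        counts[idx] += 1
    return counts

weights = [-0.4, -0.1, 0.0, 0.05, 0.1, 0.12, 0.3, 0.4]  # made-up weight values
for i, count in enumerate(histogram(weights, 4)):
    print(f"bin {i}: {'#' * count}")
```

Comparing such histograms epoch by epoch is what lets you see whether weights are spreading out, collapsing, or not moving at all.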

How do I use TensorBoard with other training methods?

When you train with methods such as `tf.GradientTape()`, use `tf.summary` to write the logs yourself.

Use the same dataset as before, but convert it to a `tf.data.Dataset` so it can be shuffled and batched.

```python
train_dataset = tf.data.Dataset.from_tensor_slices((x_train, y_train))
test_dataset = tf.data.Dataset.from_tensor_slices((x_test, y_test))

train_dataset = train_dataset.shuffle(60000).batch(64)
test_dataset = test_dataset.batch(64)
```
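`shuffle(60000)` keeps a buffer of up to 60,000 elements and draws each output element at random from that buffer, refilling it from the input; because the buffer is as large as the training set, the whole set is shuffled uniformly (and reshuffled on each pass by default). `batch(64)` then groups consecutive elements, so the last batch is smaller unless `drop_remainder=True` is passed. The pure-Python sketch below mimics those semantics on plain lists; it is an illustration, not tf.data's implementation, and `shuffle_buffer` and `batch` are hypothetical helpers.

```python
import random
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")

def shuffle_buffer(items: Iterable[T], buffer_size: int, rng: random.Random) -> Iterator[T]:
    """Hypothetical buffered shuffle: fill a buffer, then emit a random element and refill."""
    buffer: List[T] = []
    for item in items:
        buffer.append(item)
        if len(buffer) == buffer_size:
            # Swap a random element to the end and emit it
            idx = rng.randrange(len(buffer))
            buffer[idx], buffer[-1] = buffer[-1], buffer[idx]
            yield buffer.pop()
    # Input exhausted: drain what is left in random order
    rng.shuffle(buffer)
    yield from buffer

def batch(items: Iterable[T], batch_size: int) -> Iterator[List[T]]:
    """Hypothetical batching: group consecutive elements; the final batch may be smaller."""
    current: List[T] = []
    for item in items:
        current.append(item)
        if len(current) == batch_size:
            yield current
            current = []
    if current:
        yield current

rng = random.Random(0)
batches = list(batch(shuffle_buffer(range(60_000), 60_000, rng), 64))
print(len(batches), len(batches[-1]))  # → 938 32
```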

The training code needs a loss function and an optimizer.

```python
loss_object = tf.keras.losses.SparseCategoricalCrossentropy()
optimizer = tf.keras.optimizers.Adam()
```

Create metrics that accumulate values across batches and can be logged at any point during training.

```python
train_loss = tf.keras.metrics.Mean('train_loss', dtype=tf.float32)
train_accuracy = tf.keras.metrics.SparseCategoricalAccuracy('train_accuracy')
test_loss = tf.keras.metrics.Mean('test_loss', dtype=tf.float32)
test_accuracy = tf.keras.metrics.SparseCategoricalAccuracy('test_accuracy')
```
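`tf.keras.metrics.Mean` is stateful: each call adds a value to a running total and count, `result()` returns the mean so far, and resetting clears the state for the next epoch. Since the training step defined next passes in the mean loss of each batch, the logged epoch loss is the average of the per-batch losses, so the smaller final batch counts as much as a full one. The hypothetical `RunningMean` class below sketches those semantics in plain Python (unweighted case only); it is not the Keras implementation.

```python
class RunningMean:
    """Hypothetical stand-in for the state kept by a streaming mean metric."""

    def __init__(self) -> None:
        self.total = 0.0
        self.count = 0

    def __call__(self, value: float) -> None:
        # Plays the role of update_state(value) with no sample weight
        self.total += value
        self.count += 1

    def result(self) -> float:
        # Return 0.0 before any update instead of dividing by zero
        return self.total / self.count if self.count else 0.0

    def reset_state(self) -> None:
        self.total = 0.0
        self.count = 0

demo_loss = RunningMean()
for batch_loss in [0.5, 0.25, 0.75]:  # made-up per-batch losses
    demo_loss(batch_loss)
print(demo_loss.result())  # → 0.5
demo_loss.reset_state()
print(demo_loss.result())  # → 0.0
```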

Define training and testing functions.

```python
def train_step(model, optimizer, x_train, y_train):
    # Record the forward pass so gradients can be computed
    with tf.GradientTape() as tape:
        predictions = model(x_train, training=True)
        loss = loss_object(y_train, predictions)
    grads = tape.gradient(loss, model.trainable_variables)
    optimizer.apply_gradients(zip(grads, model.trainable_variables))

    # Accumulate this batch's results in the metrics
    train_loss(loss)
    train_accuracy(y_train, predictions)

def test_step(model, x_test, y_test):
    predictions = model(x_test)
    loss = loss_object(y_test, predictions)

    test_loss(loss)
    test_accuracy(y_test, predictions)
```
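`SparseCategoricalAccuracy` compares integer labels with the index of the highest predicted probability and keeps a running fraction of correct predictions across calls, which is why `train_step` and `test_step` above only need to call it once per batch. A hypothetical pure-Python sketch of that bookkeeping (`SparseAccuracy` is not a Keras class):

```python
from typing import Sequence

class SparseAccuracy:
    """Hypothetical sketch: running fraction of rows whose argmax matches the label."""

    def __init__(self) -> None:
        self.correct = 0
        self.total = 0

    def __call__(self, labels: Sequence[int], probs: Sequence[Sequence[float]]) -> None:
        for label, row in zip(labels, probs):
            predicted = max(range(len(row)), key=row.__getitem__)  # argmax
            self.correct += int(predicted == label)
            self.total += 1

    def result(self) -> float:
        return self.correct / self.total if self.total else 0.0

acc = SparseAccuracy()
acc([2, 0], [[0.1, 0.2, 0.7], [0.3, 0.6, 0.1]])  # first right, second wrong
acc([1], [[0.2, 0.5, 0.3]])                      # right
print(acc.result())  # → 0.6666666666666666
```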

Set up summary writers that write the train and test logs to separate directories under logs/gradient_tape.

```python
current_time = datetime.datetime.now().strftime("%Y%m%d-%H%M%S")
train_log_dir = 'logs/gradient_tape/' + current_time + '/train'
test_log_dir = 'logs/gradient_tape/' + current_time + '/test'
train_summary_writer = tf.summary.create_file_writer(train_log_dir)
test_summary_writer = tf.summary.create_file_writer(test_log_dir)
```

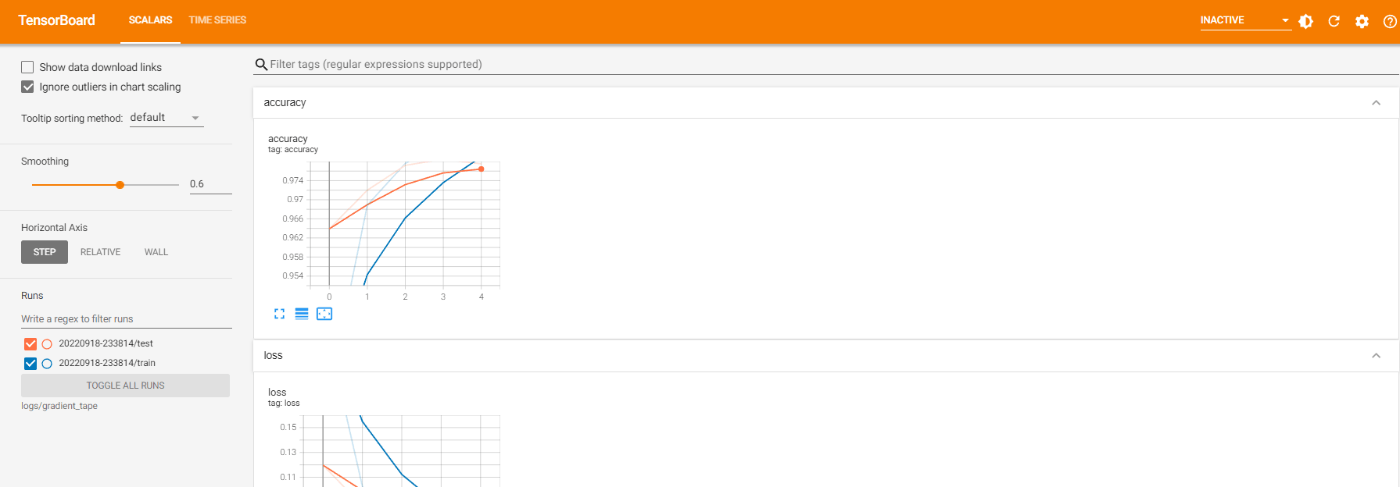

Start training. Inside each writer's `as_default()` context, use `tf.summary.scalar()` to log the loss and accuracy for training and testing.

```python
model = create_model()  # Reset model

EPOCHS = 5

for epoch in range(EPOCHS):
    for (x_train, y_train) in train_dataset:
        train_step(model, optimizer, x_train, y_train)
    with train_summary_writer.as_default():
        tf.summary.scalar('loss', train_loss.result(), step=epoch)
        tf.summary.scalar('accuracy', train_accuracy.result(), step=epoch)

    for (x_test, y_test) in test_dataset:
        test_step(model, x_test, y_test)
    with test_summary_writer.as_default():
        tf.summary.scalar('loss', test_loss.result(), step=epoch)
        tf.summary.scalar('accuracy', test_accuracy.result(), step=epoch)

    template = 'Epoch {}, Loss: {}, Accuracy: {}, Test Loss: {}, Test Accuracy: {}'
    print(template.format(epoch + 1,
                          train_loss.result(),
                          train_accuracy.result() * 100,
                          test_loss.result(),
                          test_accuracy.result() * 100))

    # Reset metrics at the end of every epoch
    train_loss.reset_state()
    test_loss.reset_state()
    train_accuracy.reset_state()
    test_accuracy.reset_state()
```

```
Epoch 1, Loss: 0.24199137091636658, Accuracy: 93.0050048828125, Test Loss: 0.11972833424806595, Test Accuracy: 96.38999938964844
Epoch 2, Loss: 0.10267779976129532, Accuracy: 96.88833618164062, Test Loss: 0.0870281308889389, Test Accuracy: 97.19999694824219
Epoch 3, Loss: 0.07132437825202942, Accuracy: 97.75999450683594, Test Loss: 0.07331769168376923, Test Accuracy: 97.7199935913086
Epoch 4, Loss: 0.05526307597756386, Accuracy: 98.22833251953125, Test Loss: 0.06401377171278, Test Accuracy: 97.8499984741211
Epoch 5, Loss: 0.0429304875433445, Accuracy: 98.60166931152344, Test Loss: 0.07003398984670639, Test Accuracy: 97.75
```

Visualize the results again, this time using the gradient_tape logs.

```python
%tensorboard --logdir logs/gradient_tape
```
