Introduction

This article is the day 20 entry of the R Advent Calendar.

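I meant to write about something other than language processing this time, but my other commitments did not leave room for it. So this is another post about language processing.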

Today I will introduce {quanteda}, an R package for processing and analyzing text.

What is {quanteda}?

A fast, flexible toolset for the management, processing, and quantitative analysis of textual data in R.

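It is an R package that makes language processing tasks quick and easy, from building a corpus out of text files, through tokenization and stemming, to computing N-grams, similarities, and readability indices (its focus, however, is on analyzing English documents).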

The package links are below: the first is CRAN and the second is GitHub.

quanteda: Quantitative Analysis of Textual Data

kbenoit/quanteda

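Text processing is built on {stringr} and {stringi}, large document collections are indexed with {data.table}, and document-feature matrices are stored as sparse matrices via {Matrix}. {tm} is a similar text-processing framework, and some objects created with {tm} can also be used in {quanteda}.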

What can {quanteda} do?

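- Corpus management

  - Text file handling (reading text from individual files or from whole directories, and defining helper functions)

  - Aggregation, extraction, and resampling (nonparametric bootstrapping) at a chosen linguistic unit, such as sentences or documents

  - KeyWords In Context (KWIC)

- Language processing tools

  - Extracting text features such as type counts and N-grams, and building user-defined dictionaries and thesauri

  - Selecting features by stemming, random selection, document frequency, term frequency, and so on

  - Preprocessing for English words

  - Readability indices, lexical diversity indices, collocation analysis, and similarity computation

  - Feature weighting with TF, TF-IDF, and more, plus scaling with correspondence analysis and the Wordfish model

  - (Topic models, Naive Bayes, and k-nearest neighbours are not implemented yet)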

Running it in R

Let's try out some of the constants and functions the package defines.

library(dplyr)

library(quanteda)

# The stopword list is documented as the SMART English stopwords from the SMART information retrieval system (the first entries below also match the Snowball English list)

> quanteda::stopwords(kind = "english") %>%

+ head(n = 5)

[1] "i" "me" "my" "myself" "we"

# English syllable counts

# ex・am・ple

> quanteda::syllables(x = "example")

[1] 3

# sta・tis・ti・cal

> quanteda::syllables(x = "statistical")

[1] 4

# Porter stemming via {SnowballC}

> quanteda::wordstem(x = c("win", "winning", "wins", "won", "winner"), language = "porter")

[1] "win" "win" "win" "won" "winner"

# N-gram

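# Japanese can give odd results even when the text is pre-segmented into words: in the first result, もも appears to be split into single characters, producing the repeated "も-も"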

> quanteda::ngrams(

+ text = c("すもも も もも も もも の うち", "吾輩 は 猫 で ある 。", "Why are you using SJIS ?"),

+ n = 2, concatenator = "-"

+ )

[[1]]

[1] "すもも-も" "も-も" "も-も" "も-も" "も-も" "も-も" "も-の" "の-うち"

[[2]]

[1] "吾輩-は" "は-猫" "猫-で" "で-ある" "ある-。"

[[3]]

[1] "Why-are" "are-you" "you-using" "using-SJIS" "SJIS-?"
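For comparison, here is a minimal base-R sketch of bigram construction that treats each single space as a token boundary. It is not {quanteda}'s implementation, and the function name `make_ngrams` is hypothetical. With the segmentation kept intact, the first sentence should yield six bigrams such as "も-もも", rather than the repeated "も-も" shown above.

```r
# Build word n-grams from text that is already segmented by single spaces.
make_ngrams <- function(text, n = 2, concatenator = "-") {
  lapply(strsplit(text, " ", fixed = TRUE), function(tokens) {
    if (length(tokens) < n) return(character(0))
    # One window of n consecutive tokens starting at each position
    starts <- seq_len(length(tokens) - n + 1)
    vapply(starts,
           function(i) paste(tokens[i:(i + n - 1)], collapse = concatenator),
           character(1))
  })
}

bigrams <- make_ngrams(c("すもも も もも も もも の うち", "Why are you using SJIS ?"))
bigrams[[1]]
# "すもも-も" "も-もも" "もも-も" "も-もも" "もも-の" "の-うち"
bigrams[[2]]
# "Why-are" "are-you" "you-using" "using-SJIS" "SJIS-?"
```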

- Corpus management

library(dplyr)

library(tm)

library(quanteda)

# Use the crude dataset from {tm}

data(crude)

crude_coupus <- quanteda::corpus(x = crude, encTo = "UTF-8")

> class(x = crude_coupus)

[1] "corpus" "list"

> summary(object = crude_coupus)

Corpus consisting of 20 documents.

Text Types Tokens Sentences

127 58 92 12

144 227 443 52

191 43 55 9

194 52 69 10

211 64 93 12

236 237 458 57

237 236 431 60

242 110 154 18

246 181 324 44

248 187 344 41

273 192 373 44

349 67 92 12

352 70 105 12

353 72 98 12

368 72 109 14

489 94 148 17

502 121 198 22

543 51 83 10

704 142 281 33

708 38 53 8

Source: Converted from tm VCorpus 'crude'.

Created: Sat Dec 19 15:52:18 2015.

Notes: .

# Extract part of the corpus (the text of the first document, split at line breaks)

> stringr::str_split(string = quanteda::texts(x = crude_coupus)[1], pattern = "\n")

[[1]]

[1] "Diamond Shamrock Corp said that"

[2] "effective today it had cut its contract prices for crude oil by"

[3] "1.50 dlrs a barrel."

[4] " The reduction brings its posted price for West Texas"

[5] "Intermediate to 16.00 dlrs a barrel, the copany said."

[6] " \"The price reduction today was made in the light of falling"

[7] "oil product prices and a weak crude oil market,\" a company"

[8] "spokeswoman said."

[9] " Diamond is the latest in a line of U.S. oil companies that"

[10] "have cut its contract, or posted, prices over the last two days"

[11] "citing weak oil markets."

[12] " Reuter"

# Tokenization with many configurable options

> tokenized_crude_coupus <- quanteda::tokenize(

+ x = crude_coupus, what = "sentence",

+ removeNumbers = FALSE, removePunct = FALSE, removeSeparators = TRUE, removeTwitter = FALSE,

+ ngrams = 1,

+ verbose = TRUE

+ )

Starting tokenization...

...preserving Twitter characters (#, @)...total elapsed: 0 seconds.

...tokenizing texts

...separating into sentences....total elapsed: 0.008 seconds.

...replacing Twitter characters (#, @)...total elapsed: 0 seconds.

...replacing names...total elapsed: 0.001 seconds.

Finished tokenizing and cleaning 20 texts.

> class(x = tokenized_crude_coupus)

[1] "tokenizedTexts" "list"

# Readability indices for each document

> quanteda::readability(x = crude_coupus, measure = "all") %>%

+ head(n = 2)

ARI ARI.NRI ARI.simple Bormuth Bormuth.GP Coleman Coleman.C2 Coleman.Liau

127 3.495942 2.536232 47.97101 -1.090237 2955495 41.47391 53.22391 59.82560

144 5.018712 4.274058 50.91878 -1.283181 5139826 35.80508 46.19447 53.42044

Coleman.Liau.grade Coleman.Liau.short Dale.Chall Dale.Chall.old Dale.Chall.PSK Danielson.Bryan

127 6.671495 6.671304 25.66652 -30.60794 -30.94298 4.752439

144 8.426537 8.426546 22.30909 -33.48056 -33.80707 5.066302

Danielson.Bryan.2 Dickes.Steiwer DRP ELF Farr.Jenkins.Paterson Flesch Flesch.PSK

127 84.80234 199.5486 209.0237 2.583333 -40.28935 80.42942 4.773458

144 82.90171 211.3242 228.3181 3.384615 -41.08444 65.27241 5.608429

Flesch.Kincaid FOG FOG.PSK FOG.NRI FORCAST FORCAST.RGL Fucks Linsear.Write LIW

127 3.945652 6.544928 2.918473 4.058333 10.70652 10.20717 34.33333 -0.41666667 23.97101

144 6.271552 9.096180 3.691583 33.514038 11.36569 10.93226 40.13462 -0.01923077 31.54406

nWS nWS.2 nWS.3 nWS.4 RIX SMOG SMOG.C SMOG.simple SMOG.de Spache

127 4.010888 4.434446 2.922622 2.729354 1.250000 7.793538 7.872500 7.472136 2.472136 5.686667

144 5.781312 6.126124 4.722261 4.472010 1.961538 9.417115 9.300595 9.028777 4.028777 5.336328

Spache.old Strain Traenkle.Bailer Traenkle.Bailer.2 Wheeler.Smith

127 6.220000 3.225000 -246.3363 -232.0354 25.83333

144 5.864591 4.015385 -277.8625 -258.7959 33.84615
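Most of these indices are formulas over simple counts. As a sketch, the two Flesch measures can be written in base R with their standard published coefficients. The function names and the counts in the example are hypothetical, and {quanteda}'s own word, sentence, and syllable counting may differ, so this will not necessarily reproduce the table above.

```r
# Flesch Reading Ease and Flesch-Kincaid grade level from raw counts.
# The word, sentence, and syllable counts must be supplied by the caller.
flesch_reading_ease <- function(n_words, n_sentences, n_syllables) {
  206.835 - 1.015 * (n_words / n_sentences) - 84.6 * (n_syllables / n_words)
}

flesch_kincaid_grade <- function(n_words, n_sentences, n_syllables) {
  0.39 * (n_words / n_sentences) + 11.8 * (n_syllables / n_words) - 15.59
}

# Hypothetical counts for a short text: 100 words, 5 sentences, 150 syllables
flesch_reading_ease(100, 5, 150)   # 59.635
flesch_kincaid_grade(100, 5, 150)  # 9.91
```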

# Collocations and their scores

> quanteda::collocations(x = crude_coupus, method = "all", size = 2)

word1 word2 word3 count G2 X2 pmi dice

1: mln bpd 14 1.213317e+02 1.270675e+03 4.5168446312 0.58333333

2: a barrel 14 1.030286e+02 5.989800e+02 3.7767238174 0.30434783

3: dlrs a 13 8.190325e+01 4.497635e+02 3.5759259844 0.29545455

4: billion riyals 6 7.976580e+01 2.700747e+03 6.1100805691 0.85714286

5: crude oil 13 7.626258e+01 3.749103e+02 3.4020303680 0.26530612

---

2589: of a 2 1.117296e-02 1.143404e-02 0.0738518082 0.02409639

2590: in its 1 2.194860e-03 2.220715e-03 0.0462953604 0.01587302

2591: said a 1 2.517813e-05 1.800434e-05 0.0041718876 0.01652893

2592: was to 1 1.370888e-05 6.505725e-06 -0.0024948038 0.01273885

2593: and the 4 1.070793e-05 3.510900e-06 0.0009024332 0.03030303
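The `G2`, `X2`, `pmi`, and `dice` columns are association measures computed from bigram counts. The sketch below computes them for a single bigram from a 2x2 contingency table in base R. The function name and the counts are hypothetical, and the exact counting and log base that {quanteda} uses may differ, so treat this as an illustration of the measures rather than a reimplementation.

```r
# Association scores for one bigram (w1, w2) from corpus counts:
#   n11 = count of the bigram "w1 w2"
#   n1p = count of bigrams whose first word is w1
#   np1 = count of bigrams whose second word is w2
#   N   = total number of bigrams
bigram_scores <- function(n11, n1p, np1, N) {
  # 2x2 table filled column by column:
  # rows = first word is / is not w1, columns = second word is / is not w2
  observed <- matrix(c(n11, np1 - n11, n1p - n11, N - n1p - np1 + n11), nrow = 2)
  expected <- outer(rowSums(observed), colSums(observed)) / N
  # Log-likelihood ratio G2; cells with a zero observed count contribute 0
  g2 <- 2 * sum(ifelse(observed > 0, observed * log(observed / expected), 0))
  # Pearson chi-squared statistic
  x2 <- sum((observed - expected)^2 / expected)
  c(G2   = g2,
    X2   = x2,
    pmi  = log(n11 * N / (n1p * np1)),  # natural log
    dice = 2 * n11 / (n1p + np1))
}

bigram_scores(n11 = 10, n1p = 20, np1 = 20, N = 1000)  # pmi = log(25), dice = 0.5
# Independence: observed equals expected, so G2, X2 and pmi are all 0
bigram_scores(n11 = 1, n1p = 20, np1 = 50, N = 1000)
```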

# KWIC (KeyWord In Context)

> quanteda::kwic(x = crude_coupus, word = "will", window = 3)

preword word postword

[144, 266] "They will not meet now

[144, 327] next two months will be critical for\nOPEC's

[144, 355] eight weeks\nsince buyers will come back into

[191, 9] the\ncontract price it will pay for crude

[194, 10] contract price it will pay for all

[236, 163] the ability, it will do so," the

[236, 450] such pressure. It will continue through March

[242, 31] over whether OPEC will succeed in halting\nthe

[248, 56] Accord and it will never sell its

[273, 263] a weak market will\ncome this month, when

[349, 8] six Gulf\nArab states will meet in Bahrain

[352, 56] accord and it will never sell its

[704, 19] the energy\ncomplex that will increase the use

[704, 35] April one, NYMEX will allow oil traders

[704, 69] "This will change the way

[704, 92] Foreign traders will be able to

[704, 121] The expanded program "will serve the industry

[704, 257] of the EFP\nprovision will add to globalization
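Conceptually, KWIC finds each occurrence of the keyword and shows up to `window` tokens on either side. Here is a minimal base-R sketch, assuming plain whitespace tokenization (so punctuation stays attached to words, unlike in {quanteda}); `kwic_simple` and the example sentence are hypothetical.

```r
kwic_simple <- function(text, keyword, window = 3) {
  # Whitespace tokenization: punctuation stays attached to neighbouring words
  tokens <- strsplit(trimws(text), "\\s+")[[1]]
  hits <- which(tolower(tokens) == tolower(keyword))
  # Join the tokens at the given positions, ignoring positions outside the text
  context <- function(idx) {
    paste(tokens[idx[idx >= 1 & idx <= length(tokens)]], collapse = " ")
  }
  data.frame(
    position = hits,
    preword  = vapply(hits, function(i) context((i - window):(i - 1)), character(1)),
    word     = tokens[hits],
    postword = vapply(hits, function(i) context((i + 1):(i + window)), character(1)),
    stringsAsFactors = FALSE
  )
}

res <- kwic_simple("They will not meet now. It will continue through March", "will")
res
```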

- Language processing tools

library(dplyr)

library(quanteda)

# Remove stopwords and "will", stem the features, and convert to a dfm (document-feature matrix), the object most {quanteda} functions work with

> crude_coupus_ignore_stopwords <- quanteda::dfm(

+ x = crude_coupus,

+ ignoredFeatures = c("will", quanteda::stopwords(kind = "english")),

+ stem = TRUE, matrixType = "sparse"

+ )

Creating a dfm from a corpus ...

... lowercasing

... tokenizing

... indexing 20 documents

... indexing 1,052 feature types

... removed 89 features, from 175 supplied feature types

... stemming features (English), trimmed 169 feature variants

... created a 20 x 794 sparse dfm

... complete.

Elapsed time: 0.056 seconds.

> class(x = crude_coupus_ignore_stopwords)

[1] "dfmSparse"

attr(,"package")

[1] "quanteda"

# Fewer types and tokens than in summary(object = crude_coupus)

> quanteda::ntype(x = crude_coupus_ignore_stopwords)

127 144 191 194 211 236 237 242 246 248 273 349 352 353 368 489 502 543 704 708

36 158 31 33 37 157 179 70 121 128 134 49 49 47 48 60 78 33 101 24

> quanteda::ntoken(x = crude_coupus_ignore_stopwords)

127 144 191 194 211 236 237 242 246 248 273 349 352 353 368 489 502 543 704 708

58 262 38 44 54 263 268 86 188 209 242 65 68 60 66 86 113 54 172 35
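A dfm is a matrix with one row per document and one column per feature. The following base-R sketch builds a small dense one from two made-up sentences with a hypothetical stopword list, then derives the equivalents of `ntoken()` (row sums) and `ntype()` (non-zero cells per row). Unlike the call above, it does not stem and it stores the matrix densely.

```r
docs <- c(d1 = "Oil prices fell and oil output rose",
          d2 = "OPEC will meet to discuss oil prices")
# Hypothetical tiny stopword list plus "will", mirroring ignoredFeatures above
stop_words <- c("and", "to", "will")

# Lowercase, split on whitespace, and drop stopwords
tokens <- lapply(docs, function(x) {
  tok <- tolower(strsplit(x, "\\s+")[[1]])
  tok[!tok %in% stop_words]
})

# Dense document-feature matrix: rows = documents, columns = features
features <- sort(unique(unlist(tokens)))
dfm_dense <- t(vapply(tokens,
                      function(tok) tabulate(match(tok, features), nbins = length(features)),
                      integer(length(features))))
colnames(dfm_dense) <- features

ntoken_sketch <- rowSums(dfm_dense)      # tokens kept per document: d1 = 6, d2 = 5
ntype_sketch  <- rowSums(dfm_dense > 0)  # distinct features per document: d1 = 5, d2 = 5
```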

# The dfm object and conversion to other formats

# dfmSparse

> str(object = crude_coupus_ignore_stopwords)

Formal class 'dfmSparse' [package "quanteda"] with 9 slots

..@ settings :List of 1

.. ..$ : NULL

..@ weighting: chr "frequency"

..@ smooth : num 0

..@ Dim : int [1:2] 20 794

..@ Dimnames :List of 2

.. ..$ docs : chr [1:20] "127" "144" "191" "194" ...

.. ..$ features: chr [1:794] "diamond" "shamrock" "corp" "said" ...

..@ i : int [1:15880] 0 1 2 3 4 5 6 7 8 9 ...

..@ p : int [1:795] 0 20 40 60 80 100 120 140 160 180 ...

..@ x : num [1:15880] 2 0 0 0 0 0 0 0 0 0 ...

..@ factors : list()

# austin's wfm format

> str(object = quanteda::as.wfm(x = crude_coupus_ignore_stopwords))

wfm [1:20, 1:794] 2 0 0 0 0 0 0 0 0 0 ...

- attr(*, "dimnames")=List of 2

..$ docs : chr [1:20] "127" "144" "191" "194" ...

..$ words: chr [1:794] "diamond" "shamrock" "corp" "said" ...

- attr(*, "class")= chr [1:2] "wfm" "matrix"

# tm's DocumentTermMatrix format

> str(object = quanteda::as.DocumentTermMatrix(x = crude_coupus_ignore_stopwords))

List of 6

$ i : int [1:15880] 1 2 3 4 5 6 7 8 9 10 ...

$ j : int [1:15880] 1 1 1 1 1 1 1 1 1 1 ...

$ v : num [1:15880] 2 0 0 0 0 0 0 0 0 0 ...

$ nrow : int 20

$ ncol : int 794

$ dimnames:List of 2

..$ Docs : chr [1:20] "127" "144" "191" "194" ...

..$ Terms: chr [1:794] "diamond" "shamrock" "corp" "said" ...

- attr(*, "class")= chr [1:2] "DocumentTermMatrix" "simple_triplet_matrix"

- attr(*, "weighting")= chr [1:2] "term frequency" "tf"

# stm package format

> str(object = quanteda::convert(crude_coupus_ignore_stopwords, to = "stm"))

List of 3

$ documents:List of 20

..$ 127: int [1:2, 1:36] 1 2 2 1 3 1 4 3 5 1 ...

..$ 144: int [1:2, 1:158] 4 11 7 1 9 6 11 12 24 6 ...

..$ 191: int [1:2, 1:31] 4 1 5 1 6 1 8 1 9 2 ...

..$ 194: int [1:2, 1:33] 4 1 5 1 6 1 8 1 9 2 ...

..$ 211: int [1:2, 1:37] 4 3 11 1 12 2 21 1 36 1 ...

..$ 236: int [1:2, 1:157] 4 10 6 1 9 8 10 2 11 7 ...

..$ 237: int [1:2, 1:179] 4 1 8 1 9 1 11 3 12 1 ...

..$ 242: int [1:2, 1:70] 4 3 9 2 11 3 26 2 36 1 ...

..$ 246: int [1:2, 1:121] 4 5 6 1 9 2 11 5 13 1 ...

..$ 248: int [1:2, 1:128] 4 7 9 10 11 9 12 4 13 3 ...

..$ 273: int [1:2, 1:134] 3 3 4 8 7 1 9 5 10 5 ...

..$ 349: int [1:2, 1:49] 4 1 6 1 9 1 10 2 11 4 ...

..$ 352: int [1:2, 1:49] 4 2 7 1 9 5 11 5 13 1 ...

..$ 353: int [1:2, 1:47] 4 1 9 2 10 2 11 4 13 1 ...

..$ 368: int [1:2, 1:48] 4 3 6 1 11 3 30 2 36 1 ...

..$ 489: int [1:2, 1:60] 4 2 9 3 11 4 12 1 13 3 ...

..$ 502: int [1:2, 1:78] 4 2 9 3 11 5 12 1 13 3 ...

..$ 543: int [1:2, 1:33] 3 1 4 4 5 1 7 1 9 3 ...

..$ 704: int [1:2, 1:101] 4 4 5 2 8 1 9 3 11 3 ...

..$ 708: int [1:2, 1:24] 4 1 10 1 11 1 13 2 24 1 ...

$ vocab : chr [1:794] "13-member" "13-nation" "200-foot" "20s" ...

$ meta : NULL

# topicmodels package format

> str(object = quanteda::quantedaformat2dtm(x = crude_coupus_ignore_stopwords))

List of 6

$ i : int [1:15880] 1 1 1 1 1 1 1 1 1 1 ...

$ j : int [1:15880] 1 2 3 4 5 6 7 8 9 10 ...

$ v : int [1:15880] 2 1 1 3 1 2 2 2 5 2 ...

$ nrow : int 20

$ ncol : int 794

$ dimnames:List of 2

..$ Docs : chr [1:20] "127" "144" "191" "194" ...

..$ Terms: chr [1:794] "diamond" "shamrock" "corp" "said" ...

- attr(*, "class")= chr [1:2] "DocumentTermMatrix" "simple_triplet_matrix"

- attr(*, "weighting")= chr [1:2] "term frequency" "tf"

# lda package format

> str(object = quanteda::dfm2ldaformat(x = crude_coupus_ignore_stopwords))

List of 2

$ documents:List of 20

..$ 127: int [1:2, 1:794] 0 2 1 1 2 1 3 3 4 1 ...

..$ 144: int [1:2, 1:794] 0 0 1 0 2 0 3 11 4 0 ...

..$ 191: int [1:2, 1:794] 0 0 1 0 2 0 3 1 4 1 ...

..$ 194: int [1:2, 1:794] 0 0 1 0 2 0 3 1 4 1 ...

..$ 211: int [1:2, 1:794] 0 0 1 0 2 0 3 3 4 0 ...

..$ 236: int [1:2, 1:794] 0 0 1 0 2 0 3 10 4 0 ...

..$ 237: int [1:2, 1:794] 0 0 1 0 2 0 3 1 4 0 ...

..$ 242: int [1:2, 1:794] 0 0 1 0 2 0 3 3 4 0 ...

..$ 246: int [1:2, 1:794] 0 0 1 0 2 0 3 5 4 0 ...

..$ 248: int [1:2, 1:794] 0 0 1 0 2 0 3 7 4 0 ...

..$ 273: int [1:2, 1:794] 0 0 1 0 2 3 3 8 4 0 ...

..$ 349: int [1:2, 1:794] 0 0 1 0 2 0 3 1 4 0 ...

..$ 352: int [1:2, 1:794] 0 0 1 0 2 0 3 2 4 0 ...

..$ 353: int [1:2, 1:794] 0 0 1 0 2 0 3 1 4 0 ...

..$ 368: int [1:2, 1:794] 0 0 1 0 2 0 3 3 4 0 ...

..$ 489: int [1:2, 1:794] 0 0 1 0 2 0 3 2 4 0 ...

..$ 502: int [1:2, 1:794] 0 0 1 0 2 0 3 2 4 0 ...

..$ 543: int [1:2, 1:794] 0 0 1 0 2 1 3 4 4 1 ...

..$ 704: int [1:2, 1:794] 0 0 1 0 2 0 3 4 4 2 ...

..$ 708: int [1:2, 1:794] 0 0 1 0 2 0 3 1 4 0 ...

$ vocab : chr [1:794] "diamond" "shamrock" "corp" "said" ...
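The converters above mostly reshape the same counts. As a rough base-R sketch on a toy matrix: the `DocumentTermMatrix` formats store (i, j, v) triplets, while the stm and lda formats store one 2-row matrix per document, with vocabulary indices in the first row and counts in the second. Judging from the output above, the stm indices start at 1 and the lda indices start at 0. The helper `to_index_counts` is hypothetical, and unlike the lda output above (which lists all 794 features per document), it keeps only non-zero counts.

```r
# Toy document-feature matrix: 2 documents x 3 features
m <- matrix(c(2, 0, 1,
              0, 3, 0),
            nrow = 2, byrow = TRUE,
            dimnames = list(docs = c("d1", "d2"), features = c("oil", "price", "opec")))

# Triplet form (row index i, column index j, value v), non-zero cells only
nz <- which(m != 0, arr.ind = TRUE)
triplet <- list(i = unname(nz[, 1]), j = unname(nz[, 2]), v = m[nz])

# One 2-row integer matrix per document: row 1 = vocabulary index, row 2 = count.
# base = 1L gives 1-based indices, base = 0L gives 0-based indices.
to_index_counts <- function(m, base = 1L) {
  docs <- lapply(seq_len(nrow(m)), function(d) {
    j <- which(m[d, ] != 0)
    rbind(as.integer(j - 1L + base), as.integer(m[d, j]))
  })
  names(docs) <- rownames(m)
  docs
}

stm_like <- to_index_counts(m, base = 1L)
lda_like <- to_index_counts(m, base = 0L)
stm_like$d1  # indices 1, 3 in the first row; counts 2, 1 in the second
```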

# Sampling

> set.seed(seed = 71)

# From a corpus object

> summary(quanteda::sample(x = crude_coupus, size = 5))

Corpus consisting of 5 documents.

Text Types Tokens Sentences

237 236 431 60

273 192 373 44

236 237 458 57

194 52 69 10

543 51 83 10

Source: Converted from tm VCorpus 'crude'.

Created: Sat Dec 19 15:52:18 2015.

Notes: .

# From a dfm object, sampling by feature

> summary(quanteda::sample(x = crude_coupus_ignore_stopwords, size = 5, what = "features"))

20 x 5 sparse Matrix of class "dfmSparse", with 100 entries

i j x

1 1 1 0

2 2 1 0

3 3 1 0

4 4 1 0

5 5 1 0

6 6 1 0

7 7 1 0

8 8 1 0

9 9 1 0

10 10 1 0

11 11 1 0

12 12 1 0

13 13 1 0

14 14 1 0

15 15 1 0

16 16 1 0

17 17 1 0

18 18 1 0

19 19 1 1

20 20 1 0

21 1 2 0

22 2 2 0

23 3 2 0

24 4 2 0

25 5 2 0

26 6 2 0

27 7 2 0

28 8 2 0

29 9 2 1

30 10 2 0

31 11 2 0

32 12 2 0

33 13 2 0

34 14 2 0

35 15 2 0

36 16 2 0

37 17 2 0

38 18 2 0

39 19 2 0

40 20 2 0

41 1 3 0

42 2 3 0

43 3 3 0

44 4 3 0

45 5 3 0

46 6 3 0

47 7 3 0

48 8 3 0

49 9 3 0

50 10 3 0

51 11 3 0

52 12 3 0

53 13 3 0

54 14 3 0

55 15 3 0

56 16 3 1

57 17 3 1

58 18 3 0

59 19 3 0

60 20 3 0

61 1 4 0

62 2 4 0

63 3 4 0

64 4 4 0

65 5 4 0

66 6 4 1

67 7 4 0

68 8 4 0

69 9 4 0

70 10 4 0

71 11 4 0

72 12 4 0

73 13 4 0

74 14 4 0

75 15 4 0

76 16 4 0

77 17 4 0

78 18 4 0

79 19 4 0

80 20 4 0

81 1 5 0

82 2 5 0

83 3 5 0

84 4 5 0

85 5 5 0

86 6 5 0

87 7 5 3

88 8 5 0

89 9 5 0

90 10 5 0

91 11 5 2

92 12 5 0

93 13 5 0

94 14 5 0

95 15 5 0

96 16 5 0

97 17 5 0

98 18 5 0

99 19 5 0

100 20 5 0

# Top features by frequency, keeping only features that occur at least 10 times and in at least 2 documents

> quanteda::topfeatures(

+ x = quanteda::trim(x = crude_coupus_ignore_stopwords, minCount = 10, minDoc = 2)

+ )

Features occurring less than 10 times: 758

Features occurring in fewer than 2 documents: 489

oil said price opec mln market barrel last dlrs bpd

85 73 63 47 31 30 26 24 23 23

# Randomly sample 5 of the features that occur at least 10 times

> quanteda::trim(x = crude_coupus_ignore_stopwords, minCount = 10, nsample = 5)

Features occurring less than 10 times: 758

Retaining a random sample of 5 words

Document-feature matrix of: 20 documents, 5 features.

20 x 5 sparse Matrix of class "dfmSparse"

features

docs dlrs offici accord s sheikh

127 2 0 0 0 0

144 0 0 0 0 0

191 1 0 0 0 0

194 2 0 0 0 0

211 2 0 0 0 0

236 2 5 0 5 3

237 1 1 0 6 0

242 0 1 0 0 0

246 0 0 0 0 5

248 4 1 5 0 2

273 2 4 1 0 0

349 0 3 0 0 0

352 0 1 2 0 0

353 0 0 0 0 1

368 0 0 0 0 0

489 1 0 0 0 0

502 1 0 0 0 0

543 5 0 0 0 0

704 0 1 4 0 0

708 0 0 0 0 0

# Top 10 features by frequency

> quanteda::topfeatures(x = crude_coupus_ignore_stopwords, n = 10, decreasing = TRUE)

oil said price opec mln market barrel last dlrs bpd

85 73 63 47 31 30 26 24 23 23
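`trim()` and `topfeatures()` come down to column sums over the dfm. A base-R sketch on a toy matrix, with hypothetical function names; keeping features whose total count is at least `min_count` matches the "occurring less than 10 times" messages above, but this is not {quanteda}'s code.

```r
m <- matrix(c(5, 4, 2, 0,
              3, 0, 3, 1),
            nrow = 2, byrow = TRUE,
            dimnames = list(docs = c("d1", "d2"),
                            features = c("oil", "opec", "price", "sheikh")))

# Keep features with total count >= min_count that occur in >= min_doc documents
trim_features <- function(m, min_count = 1, min_doc = 1) {
  keep <- colSums(m) >= min_count & colSums(m > 0) >= min_doc
  m[, keep, drop = FALSE]
}

# The n features with the highest total counts
top_features <- function(m, n = 10) {
  head(sort(colSums(m), decreasing = TRUE), n)
}

trim_features(m, min_count = 4, min_doc = 2)  # keeps "oil" and "price"
top_features(m, n = 3)                        # oil 8, price 5, opec 4
```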

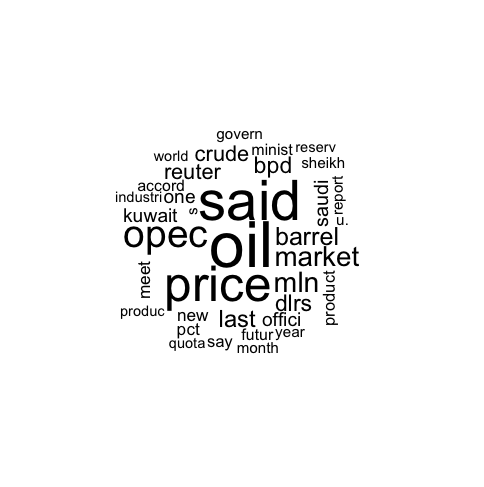

> plot(crude_coupus_ignore_stopwords, min.freq = 10, random.order = FALSE)

# Change the feature weighting

> log_tf <- quanteda::weight(x = crude_coupus_ignore_stopwords, type = "logFreq")

> wfidf <- quanteda::weight(x = log_tf, type = "tfidf", normalize = FALSE)

# The "weighting" attribute has changed

> str(object = wfidf)

dfm [1:20, 1:794] 3.29 0 0 0 0 ...

- attr(*, "dimnames")=List of 2

..$ docs : chr [1:20] "127" "144" "191" "194" ...

..$ features: chr [1:794] "diamond" "shamrock" "corp" "said" ...

- attr(*, "class")= chr [1:2] "dfm" "matrix"

- attr(*, "weighting")= chr "tfidf"

# Both the weights and the ranking have changed

> quanteda::topfeatures(x = log_tf, n = 10, decreasing = TRUE)

oil said price opec reuter barrel mln market last dlrs

30.84361 27.17000 24.34084 15.40452 14.26841 13.51072 12.57072 12.04984 11.71896 11.66685

> quanteda::topfeatures(x = wfidf, n = 10, decreasing = TRUE)

bpd opec mln market saudi kuwait s govern accord crude

11.205873 10.677598 10.037813 9.621890 9.519591 8.690813 8.606302 8.606302 8.357742 8.354686
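One likely reason why "said" and "oil" drop out of the tf-idf ranking is that a feature appearing in (nearly) every document gets an inverse document frequency close to log(N/N) = 0. The sketch below uses one common variant, 1 + log10(tf) for the term frequency and log10(N/df) for the idf, on a toy matrix; {quanteda}'s `logFreq` and `tfidf` weightings may use different formulas or log bases.

```r
tf <- matrix(c(3, 0, 1,
               1, 2, 0,
               2, 4, 0),
             nrow = 3, byrow = TRUE,
             dimnames = list(docs = c("d1", "d2", "d3"),
                             features = c("oil", "opec", "bpd")))

# Log-scaled term frequency: 1 + log10(tf) for non-zero counts, 0 otherwise
log_tf <- ifelse(tf > 0, 1 + log10(tf), 0)

# Inverse document frequency: log10(number of documents / document frequency)
doc_freq <- colSums(tf > 0)
idf <- log10(nrow(tf) / doc_freq)

# Multiply each column by its idf; "oil" occurs in every document, so its column becomes 0
tfidf <- sweep(log_tf, 2, idf, `*`)
tfidf
```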

# Try every defined weighting type

> sapply(

+ X = mapply(

+ x = rep(x = list(crude_coupus_ignore_stopwords), each = 5),

+ type = c("frequency", "relFreq", "relMaxFreq", "logFreq", "tfidf"),

+ FUN = quanteda::weight,

+ smooth = 0

+ ),

+ FUN = quanteda::topfeatures,

+ n = 10, decreasing = TRUE

+ ) %>%

+ t

oil said price opec mln market barrel last dlrs

[1,] 85.0000000 73.0000000 63.0000000 47.0000000 31.0000000 30.0000000 26.0000000 24.0000000 23.0000000

[2,] 0.8284160 0.6418656 0.6004227 0.3132729 0.3015126 0.2992712 0.2950993 0.2611242 0.2413641

[3,] 14.3948413 11.3892857 10.3662698 5.7662698 4.9095238 4.8055556 4.6305556 4.6083333 4.2900794

[4,] 30.8436127 27.1700007 24.3408417 15.4045172 14.2684087 13.5107230 12.5707158 12.0498398 11.7189635

[5,] 0.3027746 0.3012118 0.2501508 0.2173108 0.2089926 0.2064206 0.1968457 0.1927311 0.1922584

bpd

[1,] 23.0000000

[2,] 0.2178314

[3,] 3.8777778

[4,] 11.6668475

[5,] 0.1892054

# Compute similarities

> quanteda::similarity(

+ x = crude_coupus_ignore_stopwords,

+ selection = c("oil", "price"), n = 10, margin = "features",

+ method = "cosine"

+ )

$price

oil said reuter compani cut last barrel market effect bring

0.8969 0.7757 0.7409 0.7337 0.7294 0.7152 0.7045 0.6904 0.6751 0.6717

$oil

price reuter said barrel market last opec crude cut today

0.8969 0.8531 0.8507 0.7137 0.6845 0.6765 0.6443 0.6283 0.6163 0.6094
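With `margin = "features"`, each feature is compared as a column vector of counts across documents. Here is a base-R sketch of column-wise cosine similarity on a toy matrix; the helper name is hypothetical.

```r
m <- cbind(oil = c(2, 1, 0), price = c(1, 1, 0), opec = c(0, 1, 3))
rownames(m) <- c("d1", "d2", "d3")

# Cosine similarity between every pair of feature columns
cosine_features <- function(m) {
  norms <- sqrt(colSums(m^2))
  crossprod(m) / outer(norms, norms)
}

sim <- cosine_features(m)
# Features most similar to "oil", excluding "oil" itself
sort(sim["oil", colnames(m) != "oil"], decreasing = TRUE)
```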

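# Lexical diversity

# Computed per document. All seven columns below are identical and equal the TTR (types/tokens, e.g. 36/58 = 0.6206897 for document 127), so the type argument does not appear to select the measure; check ?lexdiv for the argument name your version uses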

> mapply(

+ x = rep(x = list(crude_coupus_ignore_stopwords), each = 7),

+ type = c("TTR", "C", "R", "CTTR", "U", "S", "Maas"),

+ FUN = quanteda::lexdiv,

+ log.base = 10

+ )

[,1] [,2] [,3] [,4] [,5] [,6] [,7]

127 0.6206897 0.6206897 0.6206897 0.6206897 0.6206897 0.6206897 0.6206897

144 0.6030534 0.6030534 0.6030534 0.6030534 0.6030534 0.6030534 0.6030534

191 0.8157895 0.8157895 0.8157895 0.8157895 0.8157895 0.8157895 0.8157895

194 0.7500000 0.7500000 0.7500000 0.7500000 0.7500000 0.7500000 0.7500000

211 0.6851852 0.6851852 0.6851852 0.6851852 0.6851852 0.6851852 0.6851852

236 0.5969582 0.5969582 0.5969582 0.5969582 0.5969582 0.5969582 0.5969582

237 0.6679104 0.6679104 0.6679104 0.6679104 0.6679104 0.6679104 0.6679104

242 0.8139535 0.8139535 0.8139535 0.8139535 0.8139535 0.8139535 0.8139535

246 0.6436170 0.6436170 0.6436170 0.6436170 0.6436170 0.6436170 0.6436170

248 0.6124402 0.6124402 0.6124402 0.6124402 0.6124402 0.6124402 0.6124402

273 0.5537190 0.5537190 0.5537190 0.5537190 0.5537190 0.5537190 0.5537190

349 0.7538462 0.7538462 0.7538462 0.7538462 0.7538462 0.7538462 0.7538462

352 0.7205882 0.7205882 0.7205882 0.7205882 0.7205882 0.7205882 0.7205882

353 0.7833333 0.7833333 0.7833333 0.7833333 0.7833333 0.7833333 0.7833333

368 0.7272727 0.7272727 0.7272727 0.7272727 0.7272727 0.7272727 0.7272727

489 0.6976744 0.6976744 0.6976744 0.6976744 0.6976744 0.6976744 0.6976744

502 0.6902655 0.6902655 0.6902655 0.6902655 0.6902655 0.6902655 0.6902655

543 0.6111111 0.6111111 0.6111111 0.6111111 0.6111111 0.6111111 0.6111111

704 0.5872093 0.5872093 0.5872093 0.5872093 0.5872093 0.5872093 0.5872093

708 0.6857143 0.6857143 0.6857143 0.6857143 0.6857143 0.6857143 0.6857143
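The measures listed in `type` are simple functions of the number of types V and tokens N. The sketch below uses the textbook definitions with base-10 logarithms; implementations differ on details such as whether Maas' index is reported as a or a^2, and the function name is hypothetical. With V = 36 and N = 58 (document 127 in the `ntype()`/`ntoken()` output above), the TTR is 0.6206897, the value that appears in every column of the table.

```r
lexdiv_measures <- function(n_types, n_tokens, log_base = 10) {
  V <- n_types
  N <- n_tokens
  lg <- function(x) log(x, base = log_base)
  c(TTR  = V / N,                            # type-token ratio
    C    = lg(V) / lg(N),                    # Herdan's C
    R    = V / sqrt(N),                      # Guiraud's root TTR
    CTTR = V / sqrt(2 * N),                  # Carroll's corrected TTR
    U    = lg(N)^2 / (lg(N) - lg(V)),        # Dugast's Uber index
    S    = lg(lg(V)) / lg(lg(N)),            # Summer's index
    Maas = sqrt((lg(N) - lg(V)) / lg(N)^2))  # Maas' a (square root of a^2)
}

round(lexdiv_measures(n_types = 36, n_tokens = 58), 4)
```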

# Example from the quanteda::textmodel() documentation

> ie2010Corpus_dfm <- quanteda::dfm(x = ie2010Corpus, verbose = FALSE)

> ref_scores <- c(rep(x = NA, each = 4), -1, 1, rep(x = NA, each = 8))

# Wordscores model

> ws <- quanteda::textmodel(

+ x = ie2010Corpus_dfm, y = ref_scores, model = "wordscores", smooth = 1

+ )

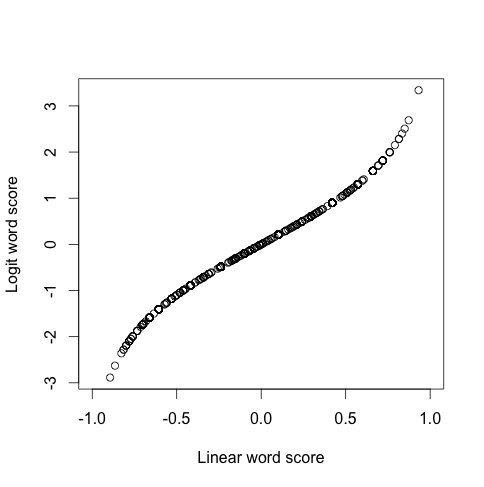

# Wordscores model with scale = "logit"

> bs <- quanteda::textmodel(

+ x = ie2010Corpus_dfm, y = ref_scores, model = "wordscores", smooth = 1, scale = "logit"

+ )

> plot(

+ x = ws@Sw, y = bs@Sw, xlim = c(-1, 1),

+ xlab = "Linear word score", ylab = "Logit word score"

+ )
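Wordscores (Laver, Benoit and Garry) gives each word a score derived from reference texts with known positions, then scores other texts as the frequency-weighted mean of their word scores. Below is a minimal base-R sketch of the raw scores, with hypothetical function names and toy data. Here the `smooth` argument simply adds a constant to the reference counts; the rescaling step and the `scale = "logit"` variant used above are not covered.

```r
# Raw word scores from reference texts with known positions.
# ref_counts: one row per reference text, one column per word.
wordscores_sketch <- function(ref_counts, ref_scores, smooth = 0) {
  counts <- ref_counts + smooth
  rel <- counts / rowSums(counts)                 # relative frequency within each reference text
  keep <- colSums(rel) > 0                        # drop words absent from every reference text
  p_ref <- sweep(rel[, keep, drop = FALSE], 2, colSums(rel[, keep, drop = FALSE]), "/")
  colSums(p_ref * ref_scores)                     # sum over refs of P(ref | word) * ref score
}

# Score a text as the frequency-weighted mean of the scores of the words it shares with the references
score_text <- function(counts, word_scores) {
  shared <- intersect(names(counts), names(word_scores))
  weights <- counts[shared] / sum(counts[shared])
  sum(weights * word_scores[shared])
}

ref <- matrix(c(3, 1,
                1, 3),
              nrow = 2, byrow = TRUE,
              dimnames = list(c("ref_left", "ref_right"), c("cut", "invest")))
ws_toy <- wordscores_sketch(ref, ref_scores = c(-1, 1))
ws_toy                                       # cut -0.5, invest 0.5
score_text(c(cut = 3, invest = 1), ws_toy)   # -0.25
```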

> wordfish <- quanteda::textmodel(

+ x = ie2010Corpus_dfm, y = NULL, model = "wordfish"

+ ) %>%

+ print

Fitted wordfish model:

Call:

textmodel_wordfish(data = x)

Estimated document positions:

Documents theta SE lower upper

1 2010_BUDGET_01_Brian_Lenihan_FF -1.77842508 NA NA NA

2 2010_BUDGET_02_Richard_Bruton_FG 0.58436869 NA NA NA

3 2010_BUDGET_03_Joan_Burton_LAB 1.14761695 NA NA NA

4 2010_BUDGET_04_Arthur_Morgan_SF 0.09400958 NA NA NA

5 2010_BUDGET_05_Brian_Cowen_FF -1.79211539 NA NA NA

6 2010_BUDGET_06_Enda_Kenny_FG 0.78894787 NA NA NA

7 2010_BUDGET_07_Kieran_ODonnell_FG 0.49306437 NA NA NA

8 2010_BUDGET_08_Eamon_Gilmore_LAB 0.58812988 NA NA NA

9 2010_BUDGET_09_Michael_Higgins_LAB 0.97901464 NA NA NA

10 2010_BUDGET_10_Ruairi_Quinn_LAB 0.92084329 NA NA NA

11 2010_BUDGET_11_John_Gormley_Green -1.12261547 NA NA NA

12 2010_BUDGET_12_Eamon_Ryan_Green -0.21004677 NA NA NA

13 2010_BUDGET_13_Ciaran_Cuffe_Green -0.79133534 NA NA NA

14 2010_BUDGET_14_Caoimhghin_OCaolain_SF 0.09854280 NA NA NA

Estimated feature scores: showing first 30 beta-hats for features

when i presented the supplementary budget

0.13824923 -0.33876765 -0.35600050 -0.21346691 -1.07842554 -0.05457924

to this house last april said

-0.33005556 -0.26757662 -0.16145904 -0.25783983 0.15467183 0.82440376

we could work our way through

-0.44459300 0.57604718 -0.54806584 -0.71558503 -0.29671938 -0.64350927

period of severe economic distress today

-0.52533975 -0.29754960 -1.24430279 -0.44508315 -1.78752195 -0.11651167

can report that notwithstanding difficulties past

-0.33001557 -0.65601898 -0.04671622 -1.78752195 -1.18563671 -0.50345524

Conclusion

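I tried out some of the functions in {quanteda}, an R package for processing and analyzing text. It defines many more text-analysis functions than the ones shown here, and they are easy to use, so I am looking forward to its further development.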

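However, I ran into a number of cases where it does not work well with Japanese, so I plan to keep investigating whether implementing the Japanese-specific processing myself would solve this.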

I plan to revise and expand this material and publish it somewhere as a more complete write-up.

Tomorrow's entry will be by gg_hatano.