MySQLのベンチマークをとるときには巨大なデータセットが必要になります。

mysqlslapを何回か実行して、データを作ってもいいのですがいちいち作るのも手間なので既存のデータを探してみたところ、PerconaのブログでSample datasets for benchmarking and testingというそのものズバリなエントリが。

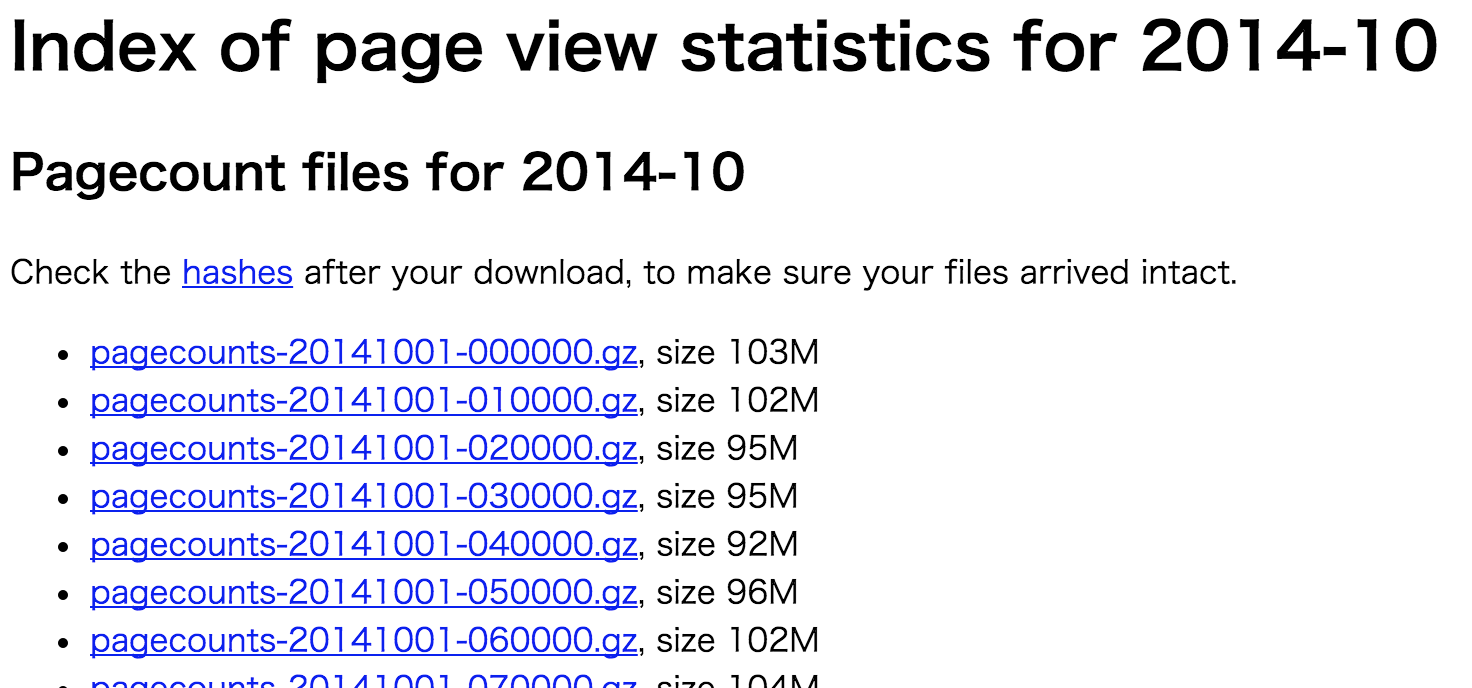

Page view statistics for Wikimedia

軽く読んでみたところ、記事に載っているデータセットのうちPage view statistics for Wikimediaがなかなか取り回しが良さそうな感じなので、MySQLにインポートしてみることに。

「Page view statistics for Wikimedia」はその名の通り、Wikipediaとその関連プロジェクトのページビューを1時間ごとに集計したファイルです。

ファイルの中身は

fr.b Special:Recherche/Achille_Baraguey_d%5C%27Hilliers 1 624

fr.b Special:Recherche/Acteurs_et_actrices_N 1 739

fr.b Special:Recherche/Agrippa_d/%27Aubign%C3%A9 1 743

fr.b Special:Recherche/All_Mixed_Up 1 730

fr.b Special:Recherche/Andr%C3%A9_Gazut.html 1 737

のような感じで、右から「project name」「title」「number of requests」「size of the content」のフィールドが空白区切りで並んでいます。

手動でインポート

まず、インポートするテーブルを作ります。

CREATE TABLE `page_view_statistics` (

`id` int(11) NOT NULL AUTO_INCREMENT,

`time` datetime NOT NULL,

`project_name` varchar(255) NOT NULL,

`title` text NOT NULL,

`requests` int(11) NOT NULL,

`size` bigint(20) NOT NULL,

PRIMARY KEY (`id`),

KEY `idx_time` (`time`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8

そして、適当なファイルをダウンロードして

wget http://dumps.wikimedia.org/other/pagecounts-raw/2015/2015-12/pagecounts-20151201-000000.gz

解凍して先頭に日時を付加。

gunzip pagecounts-20151201-000000.gz

sed -i.bak 's/^/2015-01-01-00 /' pagecounts-20151201-000000

mysqlimportでインポート。

mv pagecounts-20151201-000000 page_view_statistics.txt

mysqlimport -u root -L --fields-terminated-by ' ' -c time,project_name,title,requests,size wikimedia page_view_statistics.txt

mysql> show table status \G

*************************** 1. row ***************************

Name: page_view_statistics

Engine: InnoDB

Version: 10

Row_format: Compact

Rows: 6432695

Avg_row_length: 114

Data_length: 738197504

Max_data_length: 0

Index_length: 99237888

Data_free: 7340032

Auto_increment: 6815641

Create_time: 2015-12-20 12:21:54

Update_time: NULL

Check_time: NULL

Collation: utf8_general_ci

Checksum: NULL

Create_options:

Comment:

1 row in set (0.00 sec)

wikimedia-pagecounts-import

1時間単位の集計なので結構なファイル数になるので、手間を省くためインポート用のスクリプトを作りました。(mysqlimportを使っているため、ローカルのDBにしかインポートできません)

# !/usr/bin/env ruby

require 'optparse'

require 'optparse/time'

require 'tmpdir'

require 'net/http'

require 'uri'

require 'open3'

require 'shellwords'

Version = '1.0.0'

DOWNLOAD_URL = 'http://dumps.wikimedia.org/other/pagecounts-raw/%<year>04d/%<year>04d-%<month>02d/pagecounts-%<year>04d%<month>02d%<day>02d-%<hour>02d00%<sec>02d.gz'

DATABASE = 'wikimedia'

CREATE_DATABASE = "CREATE DATABASE IF NOT EXISTS `#{DATABASE}` DEFAULT CHARACTER SET utf8"

TABLE = 'page_view_statistics'

CREATE_TABLE = <<-EOS

CREATE TABLE `#{TABLE}` (

`id` int(11) NOT NULL AUTO_INCREMENT,

`time` datetime NOT NULL,

`project_name` varchar(255) NOT NULL,

`title` varchar(255) NOT NULL,

`requests` int(11) NOT NULL,

`size` int(11) NOT NULL,

PRIMARY KEY (`id`),

KEY `idx_time` (`time`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8

EOS

COLUMNS = 'time,project_name,title,requests,size'

options = {

host: 'localhost',

user: 'root',

}

ARGV.options do |opt|

begin

#opt.on('-h', '--host HOST') {|v| options[:host] = v }

#opt.on('-P', '--port PORT', Integer) {|v| options[:port] = v }

opt.on('-u', '--user USER') {|v| options[:user] = v }

opt.on('-p', '--password PASSWORD') {|v| options[:password] = v }

opt.on('-s', '--start DATE', Time) {|v| options[:start] = v }

opt.on('-e', '--end DATE', Time) {|v| options[:end] = v }

opt.on('-t', '--trunc') { options[:trunc] = true }

opt.parse!

if options.values_at(:host, :user, :start).any?(&:nil?)

puts opt.help

exit 1

end

rescue => e

$stderr.puts(e.message)

exit 1

end

end

options[:end] ||= options[:start]

def run(cmd, args)

cmd_with_args = args.reject {|arg|

if arg.is_a?(Array)

arg.last.nil?

else

arg.nil?

end

}.flat_map {|arg|

Array(arg).map(&:to_s)

}

cmd_with_args.unshift(cmd.to_s)

cmd_with_args = Shellwords.join(cmd_with_args)

out, err, status = Open3.capture3(cmd_with_args)

raise err unless status.success?

unless out.empty?

out = out.each_line.map {|i| i.chomp.split("\t") }

head = out.shift

out = out.map {|i| Hash[head.zip(i)] }

end

[out, err]

end

def mysql(sql, options)

args = [

'-A',

['-h', options[:host]],

['-P', options[:port]],

['-u', options[:user]],

['-e', sql],

options[:database],

]

args << "-p#{options[:password]}" if options[:password]

run(:mysql, args)

end

def mysqlimport(path, options)

args = [

['-u', options[:user]],

['-c', COLUMNS],

['--fields-terminated-by', ' '],

DATABASE,

path,

]

args << "-p#{options[:password]}" if options[:password]

run(:mysqlimport, args)

end

def download(url)

url = URI.parse(url)

Net::HTTP.start(url.host, url.port) {|http|

http.get(url.path)

}

end

def proc_file(time, offset = 0, &block)

time += offset

url = DOWNLOAD_URL % {year: time.year, month: time.month, day: time.day, hour: time.hour, sec: time.sec}

file = File.basename(url)

Dir.mktmpdir do |dir|

File.chmod(0777, dir)

path = File.join(dir, file)

puts "Downloading: #{url}"

res = download(url)

case res

when Net::HTTPSuccess

open(path, 'wb') {|f| f << res.body }

block.call(dir, file)

when Net::HTTPNotFound

if offset >= 60

res.value

else

puts 'Not Found'

proc_file(time + 1, &block)

end

else

res.value

end

end

end

def create_database(options)

mysql(CREATE_DATABASE, options)

end

def create_table(options)

options = options.merge(database: DATABASE)

if options[:trunc]

mysql("DROP TABLE IF EXISTS `#{TABLE}`", options)

end

out, err = mysql(<<-EOS, options)

SELECT COUNT(1) AS cnt FROM INFORMATION_SCHEMA.TABLES

WHERE table_schema = '#{DATABASE}'

AND table_name = '#{TABLE}'

EOS

count = out.first['cnt'].to_i

if count.zero?

mysql(CREATE_TABLE, options)

end

end

def init_database(options)

puts 'Initializing database'

create_database(options)

create_table(options)

end

def main(options)

init_database(options)

start_time = options[:start]

end_time = options[:end]

(start_time.to_i..end_time.to_i).step(3600) do |time|

time = Time.at(time)

proc_file(time) do |dir, file|

puts "Importing: #{file}"

orig_file = File.join(dir, file)

import_file = File.join(dir, "#{TABLE}.txt")

run(:gunzip, [orig_file])

orig_file.gsub!(/\.gz\z/, '')

run(:sed, ['-i.bak', "s/^/#{time.strftime('%Y-%m-%d-%H:00:00')} /", orig_file])

File.rename(orig_file, import_file)

mysqlimport(import_file, options)

end

end

end

main(options)

とりあえず2014/01/01 00:00:00〜2014/01/01 05:00:00までのデータをインポートしてみます。

$ time wikimedia-pagecounts-import -s 2014/01/01 -e 2014/01/01-05:00 -t

[ec2-user@ip-10-0-246-208 ~]$ time ./wikimedia-pagecounts-import -s 2014/01/01 -e 2014/01/01-05:00 -t

Initializing database

Downloading: http://dumps.wikimedia.org/other/pagecounts-raw/2014/2014-01/pagecounts-20140101-000000.gz

Importing: pagecounts-20140101-000000.gz

Downloading: http://dumps.wikimedia.org/other/pagecounts-raw/2014/2014-01/pagecounts-20140101-010000.gz

Importing: pagecounts-20140101-010000.gz

Downloading: http://dumps.wikimedia.org/other/pagecounts-raw/2014/2014-01/pagecounts-20140101-020000.gz

Importing: pagecounts-20140101-020000.gz

Downloading: http://dumps.wikimedia.org/other/pagecounts-raw/2014/2014-01/pagecounts-20140101-030000.gz

Not Found

Downloading: http://dumps.wikimedia.org/other/pagecounts-raw/2014/2014-01/pagecounts-20140101-030001.gz

Importing: pagecounts-20140101-030001.gz

Downloading: http://dumps.wikimedia.org/other/pagecounts-raw/2014/2014-01/pagecounts-20140101-040000.gz

Not Found

Downloading: http://dumps.wikimedia.org/other/pagecounts-raw/2014/2014-01/pagecounts-20140101-040001.gz

Not Found

Downloading: http://dumps.wikimedia.org/other/pagecounts-raw/2014/2014-01/pagecounts-20140101-040002.gz

Not Found

Downloading: http://dumps.wikimedia.org/other/pagecounts-raw/2014/2014-01/pagecounts-20140101-040003.gz

Not Found

Downloading: http://dumps.wikimedia.org/other/pagecounts-raw/2014/2014-01/pagecounts-20140101-040004.gz

Not Found

Downloading: http://dumps.wikimedia.org/other/pagecounts-raw/2014/2014-01/pagecounts-20140101-040005.gz

Not Found

Downloading: http://dumps.wikimedia.org/other/pagecounts-raw/2014/2014-01/pagecounts-20140101-040006.gz

Not Found

Downloading: http://dumps.wikimedia.org/other/pagecounts-raw/2014/2014-01/pagecounts-20140101-040007.gz

Not Found

Downloading: http://dumps.wikimedia.org/other/pagecounts-raw/2014/2014-01/pagecounts-20140101-040008.gz

Importing: pagecounts-20140101-040008.gz

Downloading: http://dumps.wikimedia.org/other/pagecounts-raw/2014/2014-01/pagecounts-20140101-050000.gz

Not Found

Downloading: http://dumps.wikimedia.org/other/pagecounts-raw/2014/2014-01/pagecounts-20140101-050001.gz

Importing: pagecounts-20140101-050001.gz

real 9m18.371s

user 0m30.856s

sys 0m40.312s

mysql> select * from page_view_statistics limit 5;

+----+---------------------+--------------+-----------------------------------------------------------------------------------------------------------------------------------------------+----------+--------+

| id | time | project_name | title | requests | size |

+----+---------------------+--------------+-----------------------------------------------------------------------------------------------------------------------------------------------+----------+--------+

| 1 | 2014-01-01 00:00:00 | AR | %D9%86%D9%82%D8%A7%D8%B4_%D8%A7%D9%84%D9%85%D8%B3%D8%AA%D8%AE%D8%AF%D9%85:%D8%B9%D8%A8%D8%A7%D8%AF_%D8%AF%D9%8A%D8%B1%D8%A7%D9%86%D9%8A%D8%A9 | 1 | 230243 |

| 2 | 2014-01-01 00:00:00 | De | %C3%84sthetik | 3 | 194742 |

| 3 | 2014-01-01 00:00:00 | De | Cholesterin | 1 | 0 |

| 4 | 2014-01-01 00:00:00 | De | Steroide | 1 | 0 |

| 5 | 2014-01-01 00:00:00 | De | Vippetangen | 3 | 26119 |

+----+---------------------+--------------+-----------------------------------------------------------------------------------------------------------------------------------------------+----------+--------+

5 rows in set (0.00 sec)

mysql> show table status \G

*************************** 1. row ***************************

Name: page_view_statistics

Engine: InnoDB

Version: 10

Row_format: Compact

Rows: 31374255

Avg_row_length: 97

Data_length: 3073376256

Max_data_length: 0

Index_length: 551550976

Data_free: 4194304

Auto_increment: 37944766

Create_time: 2015-12-20 12:27:24

Update_time: NULL

Check_time: NULL

Collation: utf8_general_ci

Checksum: NULL

Create_options:

Comment:

1 row in set (0.00 sec)