I wanted to use the arXiv API and went looking for a library that makes it easy. I found one, so here is a quick introduction.

- (Update 2017/10/27)

- Added a section explaining which parts of the actual web page are retrieved

Library

The one I'm introducing is this Python library:

https://github.com/lukasschwab/arxiv.py

Installation

pip install arxiv

Code

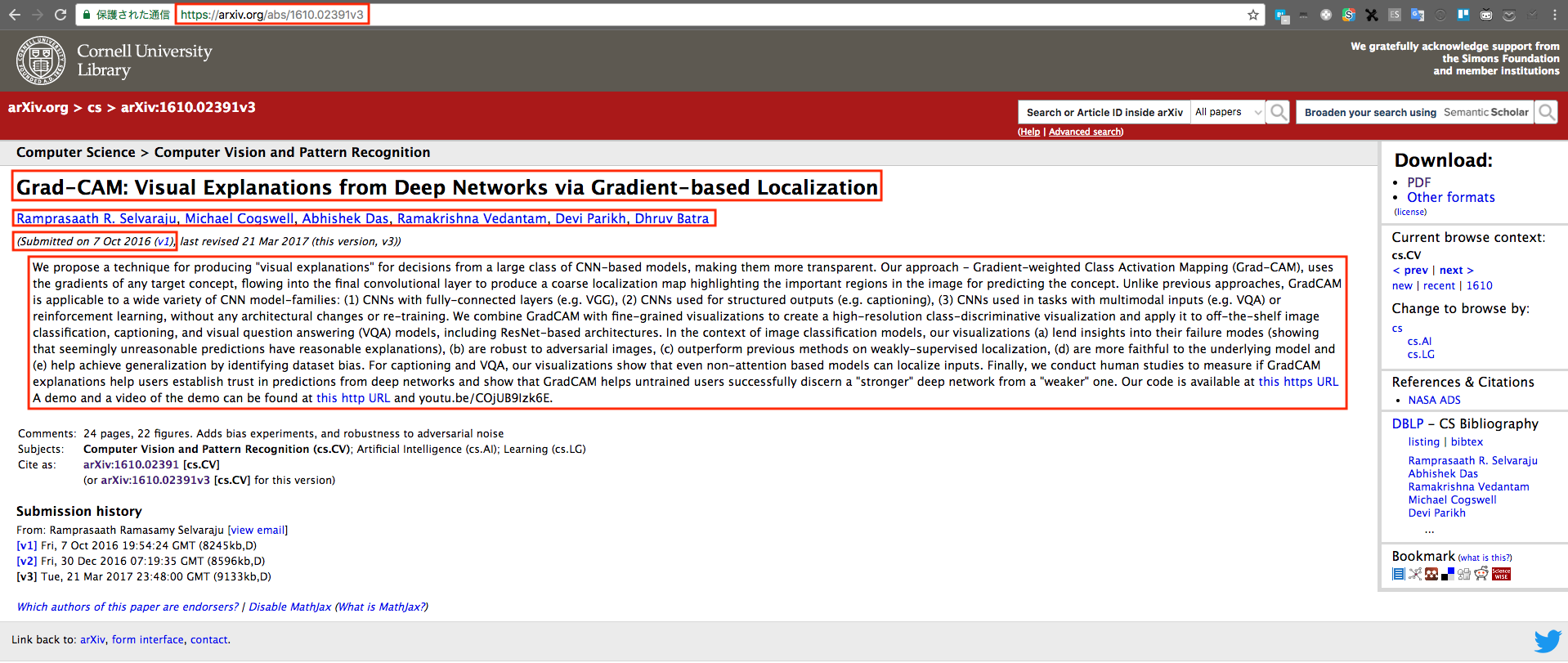

We'll use the following paper as the example.

https://arxiv.org/abs/1610.02391v3
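If all you have is a URL like the one above, the ID can be pulled out of it mechanically. This is a minimal sketch, assuming the new-style identifier format (four digits, a dot, four or five digits, then an optional version suffix). `extract_arxiv_id` is a hypothetical helper of my own, not part of arxiv.py.

```python
import re

# Matches new-style arXiv identifiers such as 1610.02391 or 1610.02391v3
# inside an abs/ or pdf/ URL. Old-style IDs (e.g. hep-th/9901001) are not handled.
_ARXIV_URL = re.compile(r"arxiv\.org/(?:abs|pdf)/(\d{4}\.\d{4,5})(v\d+)?")


def extract_arxiv_id(url):
    """Return the arXiv ID without its version suffix, or None if not found."""
    match = _ARXIV_URL.search(url)
    return match.group(1) if match else None


print(extract_arxiv_id("https://arxiv.org/abs/1610.02391v3"))  # 1610.02391
```

The version suffix (`v3`) is dropped on purpose here, so the ID always refers to the paper rather than to one specific revision.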

Since we already know the ID for this paper (1610.02391), we can fetch the result like this:

import arxiv

# The return value is a list

results = arxiv.query(id_list=["1610.02391"])

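Each entry in the results list above holds a large amount of information in dictionary format, but I think only the following five items really matter for our purposes, so those are the only ones I'll cover.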

- PDF URL

- Authors

- Title

- Submission date

- Abstract

import arxiv

results = arxiv.query(id_list=["1610.02391"])

result = results[0]

# PDF URL

print(result.pdf_url)

# Authors

print(result.authors)

# Title

print(result.title)

# Submission date

print(result.published)

# Abstract

print(result.summary)

Running this prints the following:

http://arxiv.org/pdf/1610.02391v3

['Ramprasaath R. Selvaraju', 'Michael Cogswell', 'Abhishek Das', 'Ramakrishna Vedantam', 'Devi Parikh', 'Dhruv Batra']

Grad-CAM: Visual Explanations from Deep Networks via Gradient-based

Localization

2016-10-07T19:54:24Z

We propose a technique for producing "visual explanations" for decisions from

a large class of CNN-based models, making them more transparent. Our approach -

Gradient-weighted Class Activation Mapping (Grad-CAM), uses the gradients of

any target concept, flowing into the final convolutional layer to produce a

coarse localization map highlighting the important regions in the image for

predicting the concept. Unlike previous approaches, GradCAM is applicable to a

wide variety of CNN model-families: (1) CNNs with fully-connected layers (e.g.

VGG), (2) CNNs used for structured outputs (e.g. captioning), (3) CNNs used in

tasks with multimodal inputs (e.g. VQA) or reinforcement learning, without any

architectural changes or re-training. We combine GradCAM with fine-grained

visualizations to create a high-resolution class-discriminative visualization

and apply it to off-the-shelf image classification, captioning, and visual

question answering (VQA) models, including ResNet-based architectures. In the

context of image classification models, our visualizations (a) lend insights

into their failure modes (showing that seemingly unreasonable predictions have

reasonable explanations), (b) are robust to adversarial images, (c) outperform

previous methods on weakly-supervised localization, (d) are more faithful to

the underlying model and (e) help achieve generalization by identifying dataset

bias. For captioning and VQA, our visualizations show that even non-attention

based models can localize inputs. Finally, we conduct human studies to measure

if GradCAM explanations help users establish trust in predictions from deep

networks and show that GradCAM helps untrained users successfully discern a

"stronger" deep network from a "weaker" one. Our code is available at

https://github.com/ramprs/grad-cam. A demo and a video of the demo can be found

at http://gradcam.cloudcv.org and youtu.be/COjUB9Izk6E.

As you can see, each item can be retrieved like this.
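The raw values are not always ready to use as they come: the title above is wrapped across two lines, and the submission date is a plain string. Here is a small sketch of tidying them with the standard library only. The helper name `tidy` is my own, the exact whitespace inside the title string is an assumption, and the timestamp is assumed to always have the format shown in the output.

```python
from datetime import datetime


def tidy(text):
    """Collapse line breaks and runs of whitespace into single spaces."""
    return " ".join(text.split())


# Values copied from the output above (line break in the title included).
title = "Grad-CAM: Visual Explanations from Deep Networks via Gradient-based\n  Localization"
published = "2016-10-07T19:54:24Z"

clean_title = tidy(title)
published_at = datetime.strptime(published, "%Y-%m-%dT%H:%M:%SZ")

print(clean_title)
print(published_at.date())  # 2016-10-07
```

The same `tidy` call also works on the abstract, which is wrapped in the same way.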

I can already hear someone asking, "What if I don't know the ID?" I plan to cover that in a later update.
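One possible direction, sketched under assumptions: the arXiv API itself takes a `search_query` parameter with field prefixes such as `ti:` (title), so a search by title words can be expressed as a URL. The code below only builds that URL and sends nothing. `build_search_url` is a hypothetical helper, and how arxiv.py exposes searching is not covered here.

```python
from urllib.parse import urlencode

# Public arXiv API endpoint.
ARXIV_API = "http://export.arxiv.org/api/query"


def build_search_url(title_words, max_results=5):
    """Build an arXiv API URL that searches for all given words in the title."""
    query = " AND ".join(f"ti:{word}" for word in title_words)
    params = {"search_query": query, "start": 0, "max_results": max_results}
    return f"{ARXIV_API}?{urlencode(params)}"


print(build_search_url(["Grad-CAM", "Visual"], max_results=3))
```

The response to such a request is an Atom feed, and each entry in it carries the ID that can then be passed to `id_list`.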

Closing thoughts

I'm thinking of running just the abstract through Google Translate, posting the result to Slack, and then reading closely only the papers that look interesting.
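As a rough sketch of the Slack half of that idea: Slack incoming webhooks accept a JSON body with a `text` field, so the message can be assembled like this. The translation step is left out entirely (the translated text is simply passed in), nothing is actually posted, and `build_slack_payload` is a hypothetical helper.

```python
import json


def build_slack_payload(title, pdf_url, translated_summary):
    """Build the JSON body for a Slack incoming webhook (a single "text" field)."""
    # *...* renders as bold in Slack's message formatting.
    text = "\n".join([f"*{title}*", pdf_url, translated_summary])
    return json.dumps({"text": text}, ensure_ascii=False)


payload = build_slack_payload(
    "Grad-CAM: Visual Explanations from Deep Networks via Gradient-based Localization",
    "http://arxiv.org/pdf/1610.02391v3",
    "(translated abstract goes here)",
)
print(payload)
```

Sending it would then be an HTTP POST of `payload` to your own webhook URL with a `Content-Type: application/json` header.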