目的

無料かつ適切に短い時間でGANを学習できる環境を作ること。

GAN(Generative Adversarial Networks)

機械学習技術の一種である画像生成技術。画像を描くという創作的な活動を計算機が出来るようになった。

Google Colaboratory

Googleが提供するクラウドサービスでJupyter Notebook環境が無料で使える。機械学習に必要とされるGPUが使えるのでとても有用。

結果

それなりに重い畳み込みが入ったDCGANを10分程度で結果が出せることがわかった。これが無料なのが凄い。やったことはGANのコードをコピペしてColaboratoryで実行しただけ。

0 [D loss: 1.199293, acc.: 29.69%] [G loss: 0.575992]

5000 [D loss: 0.619358, acc.: 65.62%] [G loss: 0.996219]

10000 [D loss: 0.680767, acc.: 54.69%] [G loss: 0.977041]

15000 [D loss: 0.547127, acc.: 73.44%] [G loss: 1.443061]

Discriminatorの精度は上がってますが、Generatorのlossが下がってません。

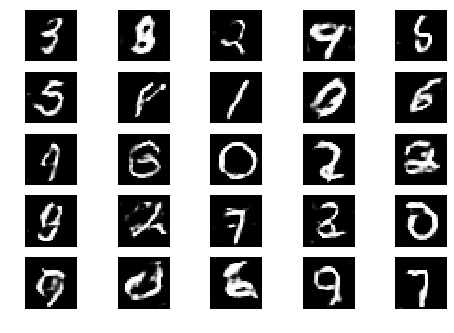

画像生成は出来ているようです。

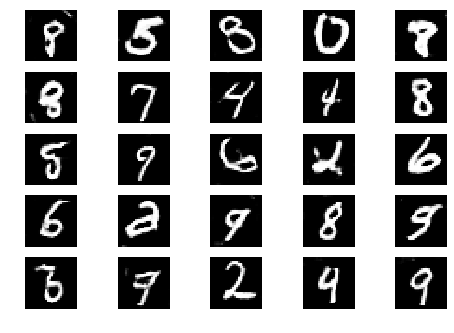

画像生成は出来ているようです。

詳細な手順

モデルにはDCGANのKerasコードを拝借させて頂きました。私はKeras書けないので本当にありがとうございます。

コード

from __future__ import print_function, division

from keras.datasets import mnist

from keras.layers import Input, Dense, Reshape, Flatten, Dropout

from keras.layers import BatchNormalization, Activation, ZeroPadding2D

from keras.layers.advanced_activations import LeakyReLU

from keras.layers.convolutional import UpSampling2D, Conv2D

from keras.models import Sequential, Model

from keras.optimizers import Adam

import matplotlib.pyplot as plt

import sys

import numpy as np

class DCGAN():

def __init__(self):

# Input shape

self.img_rows = 28

self.img_cols = 28

self.channels = 1

self.img_shape = (self.img_rows, self.img_cols, self.channels)

self.latent_dim = 100

optimizer = Adam(0.0002, 0.5)

# Build and compile the discriminator

self.discriminator = self.build_discriminator()

self.discriminator.compile(loss='binary_crossentropy',

optimizer=optimizer,

metrics=['accuracy'])

# Build the generator

self.generator = self.build_generator()

# The generator takes noise as input and generates imgs

z = Input(shape=(self.latent_dim,))

img = self.generator(z)

# For the combined model we will only train the generator

self.discriminator.trainable = False

# The discriminator takes generated images as input and determines validity

valid = self.discriminator(img)

# The combined model (stacked generator and discriminator)

# Trains the generator to fool the discriminator

self.combined = Model(z, valid)

self.combined.compile(loss='binary_crossentropy', optimizer=optimizer)

def build_generator(self):

model = Sequential()

model.add(Dense(128 * 7 * 7, activation="relu", input_dim=self.latent_dim))

model.add(Reshape((7, 7, 128)))

model.add(UpSampling2D())

model.add(Conv2D(128, kernel_size=3, padding="same"))

model.add(BatchNormalization(momentum=0.8))

model.add(Activation("relu"))

model.add(UpSampling2D())

model.add(Conv2D(64, kernel_size=3, padding="same"))

model.add(BatchNormalization(momentum=0.8))

model.add(Activation("relu"))

model.add(Conv2D(self.channels, kernel_size=3, padding="same"))

model.add(Activation("tanh"))

model.summary()

noise = Input(shape=(self.latent_dim,))

img = model(noise)

return Model(noise, img)

def build_discriminator(self):

model = Sequential()

model.add(Conv2D(32, kernel_size=3, strides=2, input_shape=self.img_shape, padding="same"))

model.add(LeakyReLU(alpha=0.2))

model.add(Dropout(0.25))

model.add(Conv2D(64, kernel_size=3, strides=2, padding="same"))

model.add(ZeroPadding2D(padding=((0,1),(0,1))))

model.add(BatchNormalization(momentum=0.8))

model.add(LeakyReLU(alpha=0.2))

model.add(Dropout(0.25))

model.add(Conv2D(128, kernel_size=3, strides=2, padding="same"))

model.add(BatchNormalization(momentum=0.8))

model.add(LeakyReLU(alpha=0.2))

model.add(Dropout(0.25))

model.add(Conv2D(256, kernel_size=3, strides=1, padding="same"))

model.add(BatchNormalization(momentum=0.8))

model.add(LeakyReLU(alpha=0.2))

model.add(Dropout(0.25))

model.add(Flatten())

model.add(Dense(1, activation='sigmoid'))

model.summary()

img = Input(shape=self.img_shape)

validity = model(img)

return Model(img, validity)

def train(self, epochs, batch_size=128, save_interval=50):

# Load the dataset

(X_train, _), (_, _) = mnist.load_data()

# Rescale -1 to 1

X_train = X_train / 127.5 - 1.

X_train = np.expand_dims(X_train, axis=3)

# Adversarial ground truths

valid = np.ones((batch_size, 1))

fake = np.zeros((batch_size, 1))

for epoch in range(epochs):

# ---------------------

# Train Discriminator

# ---------------------

# Select a random half of images

idx = np.random.randint(0, X_train.shape[0], batch_size)

imgs = X_train[idx]

# Sample noise and generate a batch of new images

noise = np.random.normal(0, 1, (batch_size, self.latent_dim))

gen_imgs = self.generator.predict(noise)

# Train the discriminator (real classified as ones and generated as zeros)

d_loss_real = self.discriminator.train_on_batch(imgs, valid)

d_loss_fake = self.discriminator.train_on_batch(gen_imgs, fake)

d_loss = 0.5 * np.add(d_loss_real, d_loss_fake)

# ---------------------

# Train Generator

# ---------------------

# Train the generator (wants discriminator to mistake images as real)

g_loss = self.combined.train_on_batch(noise, valid)

# If at save interval => save generated image samples

if epoch % save_interval == 0:

# Plot the progress

print ("%d [D loss: %f, acc.: %.2f%%] [G loss: %f]" % (epoch, d_loss[0], 100*d_loss[1], g_loss))

self.save_imgs(epoch)

def save_imgs(self, epoch):

r, c = 5, 5

noise = np.random.normal(0, 1, (r * c, self.latent_dim))

gen_imgs = self.generator.predict(noise)

# Rescale images 0 - 1

gen_imgs = 0.5 * gen_imgs + 0.5

fig, axs = plt.subplots(r, c)

cnt = 0

for i in range(r):

for j in range(c):

axs[i,j].imshow(gen_imgs[cnt, :,:,0], cmap='gray')

axs[i,j].axis('off')

cnt += 1

if __name__ == '__main__':

dcgan = DCGAN()

dcgan.train(epochs=20000, batch_size=32, save_interval=5000)

環境はWindows10、Google Chrome、Google Colaboratoryです。Googleアカウントをお持ちであればブラウザからPython3の新しいノートブックを開くだけで環境が整います。ここのワンクリックで環境が整う軽快さが凄い。

GPUはメニューバーの[ランタイム]->[ランタイムのタイプを変更]->[ハードウェアアクセラレータ]->[GPU]を設定することで使えるようになります。

実行はコードをセルに貼り付けた後に ボタンをクリックするか、Ctrl + Enterで行えます。

ボタンをクリックするか、Ctrl + Enterで行えます。

結果は出てくるまでに数分かかるのでコーヒーでも淹れて待ちましょう。

以上。

今後

社に負けずChainerガシガシ書いてGANをブン回したい(希望的観測)。