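Face detection with Lambda (Python) + Rekognition

This is one of a series of hands-on articles in which I look back on what I learned while developing the serverless web app Mosaic, so that the knowledge sticks.

This article will make more sense if you read the following first.

Introduction

I'm old-school enough that OpenCV is the first thing that comes to mind for face detection, but getting satisfactory detection out of OpenCV takes a lot of effort. So I tried AWS Rekognition instead, and I was impressed. It isn't thrown off by angle or rotation, it is highly accurate, and it is fast!

Contents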

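Allowing Lambda to use Rekognition

To use Rekognition from Lambda, you need to attach a policy to the Lambda function's IAM role.

In the AWS console, go to IAM > Roles and select the target IAM role.

On the screen shown after clicking the "Attach policies" button, I selected AmazonRekognitionFullAccess and attached it.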
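AmazonRekognitionFullAccess grants far more than this function uses, since the code only calls detect_faces. As a rough sketch of a narrower alternative, the helper below builds a policy document that allows only that one action. The function name `build_rekognition_policy` is hypothetical, and using "*" as the resource is a simplification I am assuming here, so treat this as a starting point rather than a vetted policy.

```python
import json


def build_rekognition_policy():
    """Return a minimal IAM policy document allowing only face detection.

    Hypothetical helper for illustration; not part of the sample project.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                # The only Rekognition API the Lambda function calls.
                "Action": ["rekognition:DetectFaces"],
                # Assumption: "*" is used here for simplicity.
                "Resource": "*",
            }
        ],
    }


if __name__ == "__main__":
    # Print JSON that could be pasted into the IAM console as an inline policy.
    print(json.dumps(build_rekognition_policy(), indent=2))
```

The printed JSON could be added to the role as an inline policy instead of attaching the managed full-access policy.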

Updating the Lambda function (Python 3)

# coding: UTF-8
import logging
import os
from urllib.parse import unquote_plus

import boto3
import cv2
import numpy as np
from gql import gql, Client
from gql.transport.requests import RequestsHTTPTransport

logger = logging.getLogger()
logger.setLevel(logging.INFO)

# AWS clients are created once per container and reused across invocations.
s3 = boto3.client("s3")
rekognition = boto3.client("rekognition")

# AppSync GraphQL endpoint and API key (masked).
ENDPOINT = "https://**************************.appsync-api.ap-northeast-1.amazonaws.com/graphql"
API_KEY = "da2-**************************"

_headers = {
    "Content-Type": "application/graphql",
    "x-api-key": API_KEY,
}
_transport = RequestsHTTPTransport(
    headers=_headers,
    url=ENDPOINT,
    use_json=True,
)
_client = Client(
    transport=_transport,
    fetch_schema_from_transport=True,
)

def lambda_handler(event, context):
    # The function is triggered by an S3 event; take the first record.
    bucket = event["Records"][0]["s3"]["bucket"]["name"]
    key = unquote_plus(event["Records"][0]["s3"]["object"]["key"], encoding="utf-8")
    logger.info("Function Start (deploy from S3) : Bucket={0}, Key={1}".format(bucket, key))

    fileName = os.path.basename(key)
    dirPath = os.path.dirname(key)
    dirName = os.path.basename(dirPath)
    orgFilePath = "/tmp/" + fileName

    # Only handle keys under public/, and skip keys under public/processed/,
    # which are this function's own output.
    if not key.startswith("public") or key.startswith("public/processed/"):
        logger.info("don't process.")
        return

    apiCreateTable(dirName, key)

    keyOut = key.replace("public", "public/processed", 1)
    dirPathOut = os.path.dirname(keyOut)

    try:
        s3.download_file(Bucket=bucket, Key=key, Filename=orgFilePath)

        orgImage = cv2.imread(orgFilePath)
        # cv2.imread returns channels in BGR order, so convert with BGR2GRAY.
        grayImage = cv2.cvtColor(orgImage, cv2.COLOR_BGR2GRAY)
        processedFileName = "gray-" + fileName
        processedFilePath = "/tmp/" + processedFileName
        uploadImage(grayImage, processedFilePath, bucket, os.path.join(dirPathOut, processedFileName), dirName)

        detectFaces(bucket, key, fileName, orgImage, dirName, dirPathOut)
    except Exception as e:
        logger.exception(e)
        raise
    finally:
        # Clean up the downloaded file in /tmp.
        if os.path.exists(orgFilePath):
            os.remove(orgFilePath)

def uploadImage(image, localFilePath, bucket, s3Key, group):
    logger.info("start uploadImage({0}, {1}, {2}, {3})".format(localFilePath, bucket, s3Key, group))
    try:
        # Write the image to /tmp, upload it to S3, then notify via AppSync.
        cv2.imwrite(localFilePath, image)
        s3.upload_file(Filename=localFilePath, Bucket=bucket, Key=s3Key)
        apiCreateTable(group, s3Key)
    except Exception as e:
        logger.exception(e)
        raise
    finally:
        if os.path.exists(localFilePath):
            os.remove(localFilePath)

def apiCreateTable(group, path):
    logger.info("start apiCreateTable({0}, {1})".format(group, path))
    try:
        # Doubled braces are literal braces in str.format.
        query = gql("""
            mutation create {{
                createSampleAppsyncTable(input:{{
                    group: \"{0}\"
                    path: \"{1}\"
                }}){{
                    group path
                }}
            }}
            """.format(group, path))
        _client.execute(query)
    except Exception as e:
        logger.exception(e)
        raise

def detectFaces(bucket, key, fileName, image, group, dirPathOut):
    logger.info("start detectFaces ({0}, {1}, {2}, {3}, {4})".format(bucket, key, fileName, group, dirPathOut))
    try:
        response = rekognition.detect_faces(
            Image={
                "S3Object": {
                    "Bucket": bucket,
                    "Name": key,
                }
            },
            Attributes=[
                "DEFAULT",
            ]
        )

        name, ext = os.path.splitext(fileName)
        imgHeight = image.shape[0]
        imgWidth = image.shape[1]

        for index, faceDetail in enumerate(response["FaceDetails"], start=1):
            faceFileName = "face_{0:03d}".format(index) + ext
            # BoundingBox values are ratios of the image size; convert to pixels.
            box = faceDetail["BoundingBox"]
            x = max(int(imgWidth * box["Left"]), 0)
            y = max(int(imgHeight * box["Top"]), 0)
            w = int(imgWidth * box["Width"])
            h = int(imgHeight * box["Height"])
            logger.info("BoundingBox({0},{1},{2},{3})".format(x, y, w, h))

            # Clamp the crop to the image bounds (slice ends are exclusive).
            faceImage = image[y:min(y+h, imgHeight), x:min(x+w, imgWidth)]
            localFaceFilePath = os.path.join("/tmp/", faceFileName)
            uploadImage(faceImage, localFaceFilePath, bucket, os.path.join(dirPathOut, faceFileName), group)

            # Draw the ROI on the original image (red in BGR, 3 px thick).
            cv2.rectangle(image, (x, y), (x+w, y+h), (0, 0, 255), 3)

        processedFileName = "faces-" + fileName
        processedFilePath = "/tmp/" + processedFileName
        uploadImage(image, processedFilePath, bucket, os.path.join(dirPathOut, processedFileName), group)
    except Exception as e:
        logger.exception(e)
        raise

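So, that's what it looks like. The code pretty much speaks for itself and there isn't much to single out for explanation, but leaving it at that would feel a bit too lazy.

The processing sequence is as follows.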

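・Download the image from S3

・Create a grayscale image, upload it to S3, and notify via AppSync

・Detect faces with Rekognition

・Loop over each detected face: create a cropped image, upload it to S3, notify via AppSync, and draw the ROI on the original image

・Upload the image with the ROIs drawn on the faces to S3, and notify via AppSync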
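To make the sequence above a little more concrete, here is a small sketch of which S3 keys one upload ends up producing. It mirrors the naming used in the handler (gray-, face_NNN, faces-, and the public to public/processed replacement), but the helper names `should_process` and `derive_output_keys` are hypothetical and the example key is made up.

```python
import posixpath


def should_process(key):
    """Mirror the handler's guard: handle public/ but skip public/processed/."""
    return key.startswith("public") and not key.startswith("public/processed/")


def derive_output_keys(key, face_count):
    """Return the S3 keys written for one uploaded image, in upload order."""
    file_name = posixpath.basename(key)
    _, ext = posixpath.splitext(file_name)
    # Only the first "public" is replaced, as in the handler.
    dir_out = posixpath.dirname(key.replace("public", "public/processed", 1))

    keys = [posixpath.join(dir_out, "gray-" + file_name)]
    for index in range(1, face_count + 1):
        keys.append(posixpath.join(dir_out, "face_{0:03d}".format(index) + ext))
    keys.append(posixpath.join(dir_out, "faces-" + file_name))
    return keys


if __name__ == "__main__":
    for out_key in derive_output_keys("public/groupA/photo.jpg", 2):
        print(out_key)
```

Because every output lands under public/processed/, the guard keeps the function from handling its own uploads again.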

The code is available here.

https://github.com/ww2or3ww/sample_lambda_py_project/tree/work5

Calling Rekognition

The two lines below are all it takes to get the face detection result back as JSON (response). It is almost too easy to use.

rekognition = boto3.client('rekognition')

response = rekognition.detect_faces(Image={"S3Object": {"Bucket": bucket, "Name": key}}, Attributes=["DEFAULT"])
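If the call is made from more than one place, it might be handy to build the arguments in one spot. The sketch below is only an illustration: `build_detect_faces_request` is a hypothetical helper, and it deliberately accepts just the two Attributes values that appear in this article (DEFAULT and ALL), even though the API may accept others.

```python
def build_detect_faces_request(bucket, key, attributes=("DEFAULT",)):
    """Build keyword arguments for rekognition.detect_faces for an S3 image.

    Hypothetical helper; only "DEFAULT" and "ALL" are accepted in this sketch.
    """
    allowed = {"DEFAULT", "ALL"}
    unknown = [a for a in attributes if a not in allowed]
    if unknown:
        raise ValueError("unsupported attributes: {0}".format(unknown))
    return {
        "Image": {"S3Object": {"Bucket": bucket, "Name": key}},
        "Attributes": list(attributes),
    }


if __name__ == "__main__":
    # With a boto3 client, this would be used as:
    #   response = rekognition.detect_faces(**build_detect_faces_request(bucket, key))
    print(build_detect_faces_request("my-sample-bucket", "public/groupA/photo.jpg"))
```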

Running face detection on this image returned JSON like the following.

{
  "FaceDetails": [
    {
      "BoundingBox": {"Width": 0.189957395195961, "Height": 0.439284086227417, "Left": 0.1840812712907791, "Top": 0.41294121742248535},
      "Landmarks": [
        {"Type": "eyeLeft", "X": 0.21208296716213226, "Y": 0.5631930232048035},
        {"Type": "eyeRight", "X": 0.24809405207633972, "Y": 0.5793104767799377},
        {"Type": "mouthLeft", "X": 0.2103935033082962, "Y": 0.7187585234642029},
        {"Type": "mouthRight", "X": 0.23671720921993256, "Y": 0.7346710562705994},
        {"Type": "nose", "X": 0.18016678094863892, "Y": 0.643562912940979}
      ],
      "Pose": {"Roll": 6.634916305541992, "Yaw": -62.60176086425781, "Pitch": -6.222261905670166},
      "Quality": {"Brightness": 73.63239288330078, "Sharpness": 86.86019134521484},
      "Confidence": 99.99996185302734
    },
    {
      "BoundingBox": {"Width": 0.19120590388774872, "Height": 0.3650752902030945, "Left": 0.6294564008712769, "Top": 0.18926405906677246},
      "Landmarks": [
        {"Type": "eyeLeft", "X": 0.6734799146652222, "Y": 0.30800101161003113},
        {"Type": "eyeRight", "X": 0.757991373538971, "Y": 0.33103394508361816},
        {"Type": "mouthLeft", "X": 0.661914587020874, "Y": 0.4431125521659851},
        {"Type": "mouthRight", "X": 0.7317981719970703, "Y": 0.4621959924697876},
        {"Type": "nose", "X": 0.6971173882484436, "Y": 0.37951982021331787}
      ],
      "Pose": {"Roll": 7.885405540466309, "Yaw": -19.28563690185547, "Pitch": 4.210807800292969},
      "Quality": {"Brightness": 60.976707458496094, "Sharpness": 92.22801208496094},
      "Confidence": 100.0
    }
  ],
  "ResponseMetadata": {
    "RequestId": "189aac7c-4357-4293-a424-fc024feeded0",
    "HTTPStatusCode": 200,
    "HTTPHeaders": {
      "content-type": "application/x-amz-json-1.1",
      "date": "Sat, 04 Jan 2020 14:30:47 GMT",
      "x-amzn-requestid": "189aac7c-4357-4293-a424-fc024feeded0",
      "content-length": "1322",
      "connection": "keep-alive"
    },
    "RetryAttempts": 0
  }
}
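The BoundingBox values in this response are ratios of the image width and height, not pixels, so they have to be scaled before cropping. Here is a minimal sketch of that conversion with clamping to the image bounds. The names `to_pixel_box` and `face_boxes`, the 1000x500 image size, and the confidence threshold are all assumptions for illustration; only the BoundingBox and Confidence numbers come from the response above.

```python
def to_pixel_box(bounding_box, img_width, img_height):
    """Convert a relative BoundingBox into a clamped pixel box (x1, y1, x2, y2)."""
    x1 = max(int(img_width * bounding_box["Left"]), 0)
    y1 = max(int(img_height * bounding_box["Top"]), 0)
    x2 = min(x1 + int(img_width * bounding_box["Width"]), img_width)
    y2 = min(y1 + int(img_height * bounding_box["Height"]), img_height)
    return x1, y1, x2, y2


def face_boxes(response, img_width, img_height, min_confidence=90.0):
    """Return pixel boxes for faces at or above a (hypothetical) confidence threshold."""
    return [
        to_pixel_box(face["BoundingBox"], img_width, img_height)
        for face in response["FaceDetails"]
        if face["Confidence"] >= min_confidence
    ]


# First face from the response above, trimmed to the fields used here.
sample_response = {
    "FaceDetails": [
        {
            "BoundingBox": {
                "Width": 0.189957395195961,
                "Height": 0.439284086227417,
                "Left": 0.1840812712907791,
                "Top": 0.41294121742248535,
            },
            "Confidence": 99.99996185302734,
        }
    ]
}

if __name__ == "__main__":
    # Assumed image size of 1000x500 pixels.
    print(face_boxes(sample_response, 1000, 500))
```

With a NumPy image, the resulting box can be used directly as `image[y1:y2, x1:x2]`, since slice ends are exclusive.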

Note that the sample program specifies DEFAULT for Attributes. When I specified ALL instead, the following information was returned.

{
  "FaceDetails": [
    {
      "BoundingBox": {"Width": 0.189957395195961, "Height": 0.439284086227417, "Left": 0.1840812712907791, "Top": 0.41294121742248535},
      "AgeRange": {"Low": 22, "High": 34},
      "Smile": {"Value": false, "Confidence": 99.91419982910156},
      "Eyeglasses": {"Value": false, "Confidence": 97.5216293334961},
      "Sunglasses": {"Value": false, "Confidence": 98.94334411621094},
      "Gender": {"Value": "Male", "Confidence": 99.5092544555664},
      "Beard": {"Value": true, "Confidence": 87.53535461425781},
      "Mustache": {"Value": false, "Confidence": 73.32454681396484},
      "EyesOpen": {"Value": true, "Confidence": 98.92841339111328},
      "MouthOpen": {"Value": false, "Confidence": 98.00538635253906},
      "Emotions": [
        {"Type": "FEAR", "Confidence": 0.03440825268626213},
        {"Type": "SURPRISED", "Confidence": 0.13240031898021698},
        {"Type": "DISGUSTED", "Confidence": 0.03342699632048607},
        {"Type": "ANGRY", "Confidence": 0.29975441098213196},
        {"Type": "HAPPY", "Confidence": 0.022920485585927963},
        {"Type": "CALM", "Confidence": 85.07475280761719},
        {"Type": "CONFUSED", "Confidence": 1.6896910667419434},
        {"Type": "SAD", "Confidence": 12.712653160095215}
      ],
      "Landmarks": [
        {"Type": "eyeLeft", "X": 0.21208296716213226, "Y": 0.5631930232048035},
        {"Type": "eyeRight", "X": 0.24809405207633972, "Y": 0.5793104767799377},
        {"Type": "mouthLeft", "X": 0.2103935033082962, "Y": 0.7187585234642029},
        {"Type": "mouthRight", "X": 0.23671720921993256, "Y": 0.7346710562705994},
        {"Type": "nose", "X": 0.18016678094863892, "Y": 0.643562912940979},
        {"Type": "leftEyeBrowLeft", "X": 0.2109173983335495, "Y": 0.5323911309242249},
        {"Type": "leftEyeBrowRight", "X": 0.20237770676612854, "Y": 0.5220629572868347},
        {"Type": "leftEyeBrowUp", "X": 0.20012125372886658, "Y": 0.5176519751548767},
        {"Type": "rightEyeBrowLeft", "X": 0.22496788203716278, "Y": 0.5295209288597107},
        {"Type": "rightEyeBrowRight", "X": 0.2825181782245636, "Y": 0.5552548170089722},
        {"Type": "rightEyeBrowUp", "X": 0.24639180302619934, "Y": 0.5279281139373779},
        {"Type": "leftEyeLeft", "X": 0.21062742173671722, "Y": 0.5640645027160645},
        {"Type": "leftEyeRight", "X": 0.21973173320293427, "Y": 0.5715448260307312},
        {"Type": "leftEyeUp", "X": 0.2089911699295044, "Y": 0.5593260526657104},
        {"Type": "leftEyeDown", "X": 0.21014972031116486, "Y": 0.5721304416656494},
        {"Type": "rightEyeLeft", "X": 0.24421700835227966, "Y": 0.5806354284286499},
        {"Type": "rightEyeRight", "X": 0.2665697932243347, "Y": 0.5854082107543945},
        {"Type": "rightEyeUp", "X": 0.2504902184009552, "Y": 0.5750172138214111},
        {"Type": "rightEyeDown", "X": 0.25109195709228516, "Y": 0.5880314707756042},
        {"Type": "noseLeft", "X": 0.19916994869709015, "Y": 0.6648411154747009},
        {"Type": "noseRight", "X": 0.21807684004306793, "Y": 0.6632155179977417},
        {"Type": "mouthUp", "X": 0.20222291350364685, "Y": 0.6922502517700195},
        {"Type": "mouthDown", "X": 0.20738232135772705, "Y": 0.7338021993637085},
        {"Type": "leftPupil", "X": 0.21208296716213226, "Y": 0.5631930232048035},
        {"Type": "rightPupil", "X": 0.24809405207633972, "Y": 0.5793104767799377},
        {"Type": "upperJawlineLeft", "X": 0.27225449681282043, "Y": 0.5730943083763123},
        {"Type": "midJawlineLeft", "X": 0.2593783736228943, "Y": 0.7156036496162415},
        {"Type": "chinBottom", "X": 0.22620755434036255, "Y": 0.8010575771331787},
        {"Type": "midJawlineRight", "X": 0.3367012143135071, "Y": 0.74432772397995},
        {"Type": "upperJawlineRight", "X": 0.36771708726882935, "Y": 0.6083425879478455}
      ],
      "Pose": {"Roll": 6.634916305541992, "Yaw": -62.60176086425781, "Pitch": -6.222261905670166},
      "Quality": {"Brightness": 73.63239288330078, "Sharpness": 86.86019134521484},
      "Confidence": 99.99996185302734
    },
    {
      "BoundingBox": {"Width": 0.19120590388774872, "Height": 0.3650752902030945, "Left": 0.6294564008712769, "Top": 0.18926405906677246},
      "AgeRange": {"Low": 20, "High": 32},
      "Smile": {"Value": false, "Confidence": 99.19612884521484},
      "Eyeglasses": {"Value": false, "Confidence": 97.284912109375},
      "Sunglasses": {"Value": false, "Confidence": 99.13030242919922},
      "Gender": {"Value": "Female", "Confidence": 99.6273422241211},
      "Beard": {"Value": false, "Confidence": 99.83914184570312},
      "Mustache": {"Value": false, "Confidence": 99.87841033935547},
      "EyesOpen": {"Value": true, "Confidence": 98.84789276123047},
      "MouthOpen": {"Value": false, "Confidence": 95.55352783203125},
      "Emotions": [
        {"Type": "FEAR", "Confidence": 0.3591834008693695},
        {"Type": "SURPRISED", "Confidence": 0.5032361149787903},
        {"Type": "DISGUSTED", "Confidence": 0.15358874201774597},
        {"Type": "ANGRY", "Confidence": 2.0029523372650146},
        {"Type": "HAPPY", "Confidence": 0.6409074664115906},
        {"Type": "CALM", "Confidence": 89.09111022949219},
        {"Type": "CONFUSED", "Confidence": 0.8823814988136292},
        {"Type": "SAD", "Confidence": 6.366642475128174}
      ],
      "Landmarks": [
        {"Type": "eyeLeft", "X": 0.6734799146652222, "Y": 0.30800101161003113},
        {"Type": "eyeRight", "X": 0.757991373538971, "Y": 0.33103394508361816},
        {"Type": "mouthLeft", "X": 0.661914587020874, "Y": 0.4431125521659851},
        {"Type": "mouthRight", "X": 0.7317981719970703, "Y": 0.4621959924697876},
        {"Type": "nose", "X": 0.6971173882484436, "Y": 0.37951982021331787},
        {"Type": "leftEyeBrowLeft", "X": 0.6481514573097229, "Y": 0.2714482247829437},
        {"Type": "leftEyeBrowRight", "X": 0.6928644776344299, "Y": 0.2690320312976837},
        {"Type": "leftEyeBrowUp", "X": 0.6709408164024353, "Y": 0.2575661838054657},
        {"Type": "rightEyeBrowLeft", "X": 0.7426562905311584, "Y": 0.28226032853126526},
        {"Type": "rightEyeBrowRight", "X": 0.7986495494842529, "Y": 0.31319472193717957},
        {"Type": "rightEyeBrowUp", "X": 0.7705841064453125, "Y": 0.28441154956817627},
        {"Type": "leftEyeLeft", "X": 0.6606857180595398, "Y": 0.30426955223083496},
        {"Type": "leftEyeRight", "X": 0.6901771426200867, "Y": 0.31324538588523865},
        {"Type": "leftEyeUp", "X": 0.6742243766784668, "Y": 0.3005616068840027},
        {"Type": "leftEyeDown", "X": 0.6734598278999329, "Y": 0.313093900680542},
        {"Type": "rightEyeLeft", "X": 0.7402892112731934, "Y": 0.32695692777633667},
        {"Type": "rightEyeRight", "X": 0.7727544903755188, "Y": 0.33527684211730957},
        {"Type": "rightEyeUp", "X": 0.757353663444519, "Y": 0.32352718710899353},
        {"Type": "rightEyeDown", "X": 0.7553724646568298, "Y": 0.33583202958106995},
        {"Type": "noseLeft", "X": 0.6838077902793884, "Y": 0.39679819345474243},
        {"Type": "noseRight", "X": 0.7161107659339905, "Y": 0.4051041901111603},
        {"Type": "mouthUp", "X": 0.6949385404586792, "Y": 0.43140000104904175},
        {"Type": "mouthDown", "X": 0.6908546686172485, "Y": 0.472693532705307},
        {"Type": "leftPupil", "X": 0.6734799146652222, "Y": 0.30800101161003113},
        {"Type": "rightPupil", "X": 0.757991373538971, "Y": 0.33103394508361816},
        {"Type": "upperJawlineLeft", "X": 0.6373797655105591, "Y": 0.3141503930091858},
        {"Type": "midJawlineLeft", "X": 0.6338266730308533, "Y": 0.46012476086616516},
        {"Type": "chinBottom", "X": 0.6859143972396851, "Y": 0.5467866659164429},
        {"Type": "midJawlineRight", "X": 0.7851454615592957, "Y": 0.5020546913146973},
        {"Type": "upperJawlineRight", "X": 0.8258264064788818, "Y": 0.3661481738090515}
      ],
      "Pose": {"Roll": 7.885405540466309, "Yaw": -19.28563690185547, "Pitch": 4.210807800292969},
      "Quality": {"Brightness": 60.976707458496094, "Sharpness": 92.22801208496094},
      "Confidence": 100.0
    }
  ],
  "ResponseMetadata": {
    "RequestId": "77b4dbdb-e76b-4940-927e-7548f3e0b602",
    "HTTPStatusCode": 200,
    "HTTPHeaders": {
      "content-type": "application/x-amz-json-1.1",
      "date": "Sat, 04 Jan 2020 14:48:25 GMT",
      "x-amzn-requestid": "77b4dbdb-e76b-4940-927e-7548f3e0b602",
      "content-length": "6668",
      "connection": "keep-alive"
    },
    "RetryAttempts": 0
  }
}

That is a lot more information. We now get gender, whether the person is smiling, an estimated age range, emotions, and so on. There are more landmark types as well.
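As a small sketch of how these extra attributes might be consumed, the helper below boils one FaceDetails entry down to a few fields and picks the emotion with the highest confidence. `summarize_face` is a hypothetical name, and the sample is trimmed from the first face in the ALL response (only three of its eight emotions are kept for brevity).

```python
def summarize_face(face_detail):
    """Pick a few headline attributes out of one FaceDetails entry (Attributes=ALL)."""
    # Emotions is a list of {"Type", "Confidence"}; take the most confident one.
    top_emotion = max(face_detail["Emotions"], key=lambda e: e["Confidence"])
    age = face_detail["AgeRange"]
    return {
        "age_range": (age["Low"], age["High"]),
        "gender": face_detail["Gender"]["Value"],
        "smiling": face_detail["Smile"]["Value"],
        "top_emotion": top_emotion["Type"],
    }


# Trimmed copy of the first face in the ALL response.
sample_face = {
    "AgeRange": {"Low": 22, "High": 34},
    "Smile": {"Value": False, "Confidence": 99.91419982910156},
    "Gender": {"Value": "Male", "Confidence": 99.5092544555664},
    "Emotions": [
        {"Type": "CALM", "Confidence": 85.07475280761719},
        {"Type": "CONFUSED", "Confidence": 1.6896910667419434},
        {"Type": "SAD", "Confidence": 12.712653160095215},
    ],
}

if __name__ == "__main__":
    print(summarize_face(sample_face))
```

Each Value comes with its own Confidence, so a real application would probably want to check that as well before trusting a flag such as Smile.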

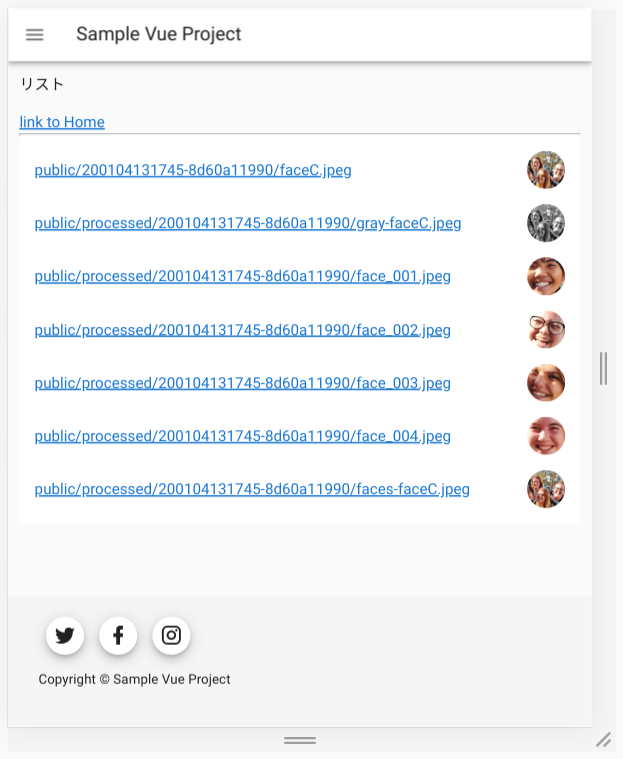

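Checking that it works

When you upload a file from the web app, the grayscale image is displayed first, followed by the cropped face images, and finally the image with the ROIs drawn on all the faces.

It's starting to feel like a real app.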

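Afterword

OpenCV is certainly a very convenient and powerful image processing library. About ten years ago I used it for things like the following, and thought it was incredibly handy.

Camera calibration, distortion correction, feature point extraction, obtaining disparity information from stereo images, pattern matching, etc.

This time, though, I was reminded that convenient libraries like these will increasingly be offered as web services and become even easier to use. Behind the web service, machine-learned models are at work, so you can expect fast, accurate, high-quality results. Wonderful, isn't it?

You don't have to worry about the environment and conditions in which the data is captured, or search by trial and error for parameters that give the result you want. It simply returns a good result without any of that effort, which feels almost like magic.

By the way, about ten years ago OpenCV samples seemed to be almost all C++, but these days my impression is that Python samples are far more common. Even that makes an old guy like me feel the passage of time.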