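General-purpose video calling apps come with many features, and depending on the use case those features can lead to operational mistakes or confuse users.

This time I built a sample app like the following.

Roles

- A user of (some kind of) service

- A provider of (some kind of) service

System overview

- The service user makes a reservation and joins the video call in a web app

- The service provider joins the video call automatically when the reserved time arrives

This article explains how to build a simple sample of reservation-based video calling using an Electron desktop app and a web app.

The sample code is published on GitHub.

Implementation

Service user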

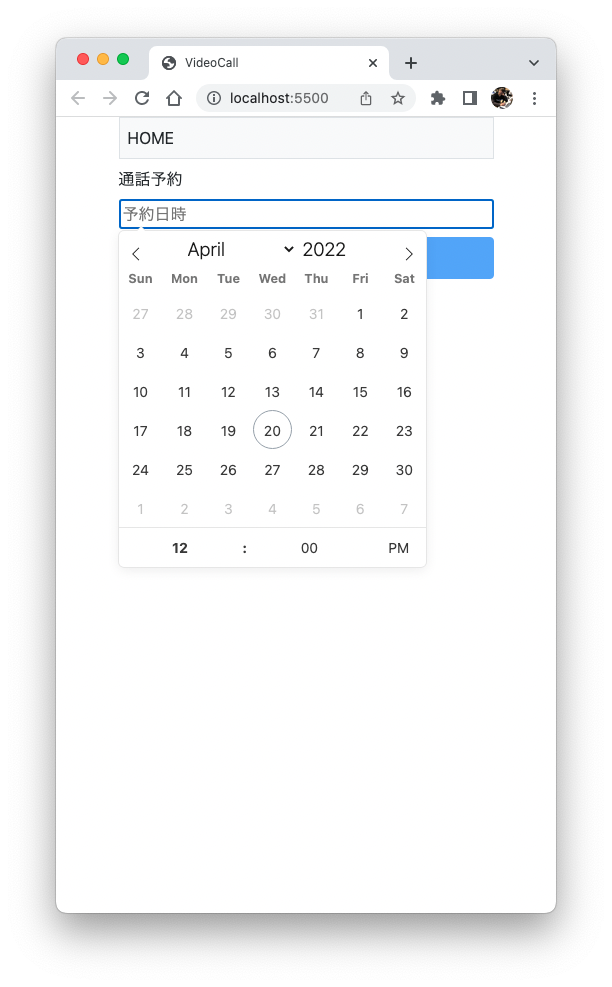

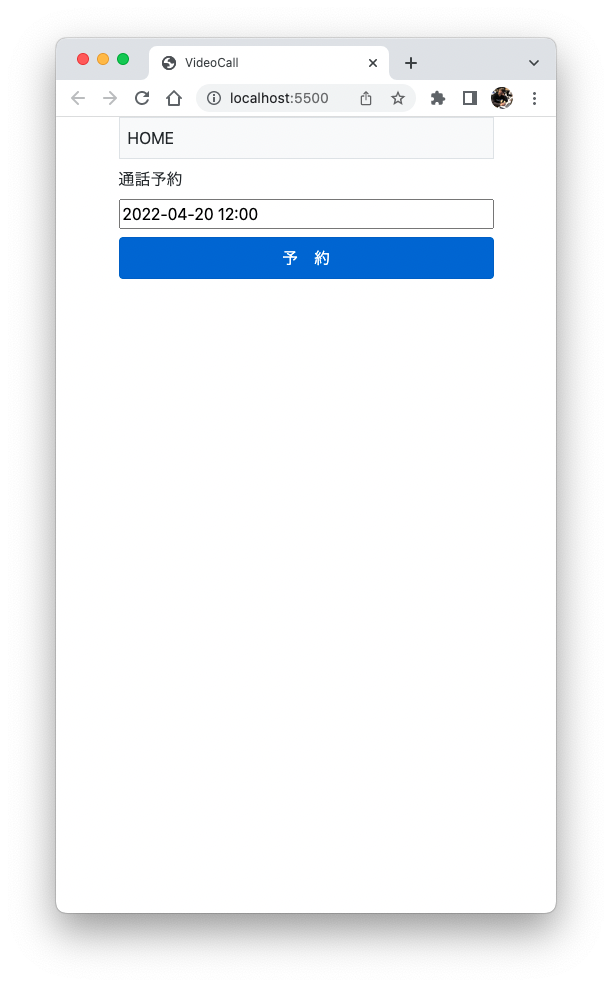

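Reservations are made on the top page. In a real product, the reserved time would be stored through a database or similar backend, and the button for entering the video call would appear only once the reserved time arrives.

In this demo, however, the user simply picks a date and time and can enter the video call immediately after making the selection.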

<body>

<div class="d-grid gap-2 col-9 mx-auto">

<div class="p-2 bg-light border">HOME</div>

通話予約

<input id="flatpickr-input" class="flatpickr flatpickr-input" type="text" placeholder="予約日時" readonly="readonly">

<button id="reservation" class="p-2 btn btn-primary" type="button" disabled>予 約</button>

<button id="videocall" class="p-2 btn btn-primary" type="button" onclick="location.href='./video/index.html'">ビデオ通話開始</button>

</div>

<script src="https://cdn.jsdelivr.net/npm/bootstrap@5.0.2/dist/js/bootstrap.bundle.min.js" integrity="sha384-MrcW6ZMFYlzcLA8Nl+NtUVF0sA7MsXsP1UyJoMp4YLEuNSfAP+JcXn/tWtIaxVXM" crossorigin="anonymous"></script>

<script src="https://cdn.jsdelivr.net/npm/flatpickr"></script>

<script>

flatpickr("#flatpickr-input", {enableTime: true,dateFormat: "Y-m-d H:i",});

document.getElementById("videocall").style.display ="none";

document.getElementById("flatpickr-input").onchange = function() {

document.getElementById('reservation').disabled = false;

};

document.getElementById("reservation").onclick = function() {

document.getElementById('videocall').style.display = "block";

};

</script>

</body>
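As noted above, a real product would show the video call button only around the reserved time. The following is a minimal sketch of that check, not part of the original sample. The helper names `parseReservation` and `canEnterCall`, and the grace periods `earlyMs` and `lateMs`, are assumptions introduced for illustration; the input format matches the `"Y-m-d H:i"` format passed to flatpickr in the demo.

```javascript
// Hypothetical helper: parses flatpickr's "Y-m-d H:i" text into a local-time Date.
// Returns null when the text does not match the expected format.
function parseReservation(text) {
  const m = /^(\d{4})-(\d{2})-(\d{2}) (\d{2}):(\d{2})$/.exec(text);
  if (!m) return null;
  const [, year, month, day, hour, minute] = m.map(Number);
  return new Date(year, month - 1, day, hour, minute);
}

// Hypothetical helper: true when `now` falls inside the window in which the
// "start video call" button should be visible. The default grace periods
// (5 minutes early, 30 minutes late) are assumptions, not values from the sample.
function canEnterCall(reservedAt, now, earlyMs = 5 * 60 * 1000, lateMs = 30 * 60 * 1000) {
  const diff = now.getTime() - reservedAt.getTime();
  return diff >= -earlyMs && diff <= lateMs;
}
```

In the page script, the result of `canEnterCall` could decide whether the `videocall` button gets `display = "block"` or stays hidden. In production the reserved time should come from the server rather than from the input field.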

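The video call screen is kept simple: it has only the local video, the remote video, and a leave button.

Depending on your requirements, you may also need video mute and microphone mute.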

// create Agora client

var client = AgoraRTC.createClient({ mode: "rtc", codec: "vp8" });

var localTracks = {

videoTrack: null,

audioTrack: null

};

var remoteUsers = {};

// Agora client options

var options = {

appid: "",

channel: "",

uid: null,

token: null

};

// join the channel automatically as soon as the page has loaded

$(() => {

join();

})

$("#leave").click(function (e) {

leave();

})

async function join() {

await client.enableDualStream();

// add event listeners to play remote tracks when a remote user publishes.

client.on("user-published", handleUserPublished);

client.on("user-unpublished", handleUserUnpublished);

// join a channel and create local tracks, we can use Promise.all to run them concurrently

[ options.uid, localTracks.audioTrack, localTracks.videoTrack ] = await Promise.all([

// join the channel

client.join(options.appid, options.channel, options.token || null),

// create local tracks, using microphone and camera

AgoraRTC.createMicrophoneAudioTrack(),

AgoraRTC.createCameraVideoTrack({encoderConfig: "720p_1"})

]);

// play local video track

localTracks.videoTrack.play("local-player");

$("#local-player-name").text(`localVideo(${options.uid})`);

// publish local tracks to channel

await client.publish(Object.values(localTracks));

console.log("publish success");

}

async function leave() {

for (const trackName in localTracks) {

var track = localTracks[trackName];

if(track) {

track.stop();

track.close();

localTracks[trackName] = undefined;

}

}

// remove remote users and player views

remoteUsers = {};

$("#remote-playerlist").html("");

// leave the channel

await client.leave();

$("#local-player-name").text("");

$("#join").attr("disabled", false);

$("#leave").attr("disabled", true);

console.log("client leaves channel success");

// go back to the top page only after every track is closed and the channel has been left

location.href = "../index.html"

}

async function subscribe(user, mediaType) {

const uid = user.uid;

// subscribe to a remote user

await client.subscribe(user, mediaType);

console.log("subscribe success");

if (mediaType === 'video') {

user.videoTrack.play("remote-player");

}

if (mediaType === 'audio') {

user.audioTrack.play();

}

}

function handleUserPublished(user, mediaType) {

const id = user.uid;

remoteUsers[id] = user;

subscribe(user, mediaType);

}

function handleUserUnpublished(user) {

const id = user.uid;

delete remoteUsers[id];

$(`#player-wrapper-${id}`).remove();

}
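As mentioned earlier, some requirements call for video mute and microphone mute. Below is a minimal sketch of a toggle, not part of the original sample. It assumes the local tracks expose `setEnabled(boolean)` returning a Promise, which is how local tracks behave in Agora Web SDK 4.x as far as I know. The name `createTrackToggle` and the `#mute-mic` button in the usage note are hypothetical.

```javascript
// Hypothetical helper: wraps one local track and flips it on and off.
// `track` is assumed to provide setEnabled(boolean) returning a Promise.
function createTrackToggle(track) {
  let enabled = true; // a freshly created local track is assumed to be enabled

  return {
    isEnabled: () => enabled,

    // Flips the track state and resolves to the new state.
    async toggle() {
      const next = !enabled;
      await track.setEnabled(next);
      enabled = next; // updated only after the SDK call has succeeded
      return enabled;
    }
  };
}
```

A possible use after `join()` has finished would be `const micToggle = createTrackToggle(localTracks.audioTrack);` followed by `$("#mute-mic").click(() => micToggle.toggle());`. Keeping the state inside the helper means the button label can be updated from the value that `toggle()` resolves to.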

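Service provider side

The service provider side is implemented with Electron.

It is a desktop app that always runs in full screen, and the video call begins once the service provider has joined the channel.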

import React, { Component } from 'react';

import AgoraRtcEngine from 'agora-electron-sdk';

import { List } from 'immutable';

import path from 'path';

import os from 'os'

import { desktopCapturer } from 'electron'

import {videoProfileList, audioProfileList, audioScenarioList} from '../utils/settings'

import {readImage} from '../utils/base64'

export default class App extends Component {

constructor(props) {

super(props)

console.log(window.innerWidth);

console.log(window.innerHeight);

this.state = {

appid: '',

token: '',

local: '',

users: [],

channel: '',

videoDevices: [],

audioDevices: [],

audioPlaybackDevices: [],

camera: 0,

mic: 0,

speaker: 0,

encoderConfiguration: 3,

}

}

getRtcEngine() {

if(!this.state.appid){

alert("Please enter appid")

return

}

if(!this.rtcEngine) {

this.rtcEngine = new AgoraRtcEngine()

this.rtcEngine.initialize(this.state.appid)

this.subscribeEvents(this.rtcEngine)

window.rtcEngine = this.rtcEngine;

this.setState({

videoDevices: this.rtcEngine.getVideoDevices(),

audioDevices: this.rtcEngine.getAudioRecordingDevices(),

audioPlaybackDevices: this.rtcEngine.getAudioPlaybackDevices(),

})

}

return this.rtcEngine

}

componentDidMount() {

// join after mounting: getRtcEngine() calls setState, which must not run inside the constructor

this.handleJoin()

}

subscribeEvents = (rtcEngine) => {

rtcEngine.on('joinedchannel', (channel, uid, elapsed) => {

console.log(`onJoinChannel channel: ${channel} uid: ${uid} version: ${JSON.stringify(rtcEngine.getVersion())})`)

this.setState({

local: uid

});

});

rtcEngine.on('userjoined', (uid, elapsed) => {

console.log(`userJoined ---- ${uid}`)

rtcEngine.muteRemoteVideoStream(uid, false)

document.getElementById("wait-text").style.display ="none";

this.setState({

users: this.state.users.concat([uid])

})

})

rtcEngine.on('removestream', (uid, reason) => {

console.log(`useroffline ${uid}`)

document.getElementById("wait-text").style.display ="block";

this.setState({

users: this.state.users.filter(u => u != uid)

})

})

rtcEngine.on('leavechannel', (rtcStats) => {

console.log(`onleaveChannel----`)

this.sharingPrepared = false

this.setState({

local: '',

users: [],

localSharing: false,

localVideoSource: ''

})

})

rtcEngine.on('audiodevicestatechanged', () => {

this.setState({

audioDevices: rtcEngine.getAudioRecordingDevices(),

audioPlaybackDevices: rtcEngine.getAudioPlaybackDevices()

})

})

rtcEngine.on('videodevicestatechanged', () => {

this.setState({

videoDevices: rtcEngine.getVideoDevices()

})

})

rtcEngine.on('streamPublished', (url, error) => {

console.log(`url: ${url}, err: ${error}`)

})

rtcEngine.on('streamUnpublished', (url) => {

console.log(`url: ${url}`)

})

rtcEngine.on('lastmileProbeResult', result => {

console.log(`lastmileproberesult: ${JSON.stringify(result)}`)

})

rtcEngine.on('lastMileQuality', quality => {

console.log(`lastmilequality: ${JSON.stringify(quality)}`)

})

rtcEngine.on('audiovolumeindication', (

uid,

volume,

speakerNumber,

totalVolume

) => {

console.log(`uid${uid} volume${volume} speakerNumber${speakerNumber} totalVolume${totalVolume}`)

})

rtcEngine.on('error', err => {

console.error(err)

})

rtcEngine.on('executefailed', funcName => {

console.error(funcName, 'failed to execute')

})

}

handleJoin = () => {

if(!this.state.channel){

alert("Please enter channel")

return

}

let rtcEngine = this.getRtcEngine()

// getRtcEngine() returns undefined when no App ID is set

if(!rtcEngine) return

rtcEngine.setChannelProfile(0)

rtcEngine.setAudioProfile(5, 8)

rtcEngine.enableVideo()

let encoderProfile = videoProfileList[this.state.encoderConfiguration]

let rett = rtcEngine.setVideoEncoderConfiguration({width: encoderProfile.width, height: encoderProfile.height, frameRate: encoderProfile.fps, bitrate: encoderProfile.bitrate})

console.log(`setVideoEncoderConfiguration --- ${JSON.stringify(encoderProfile)} ret: ${rett}`)

if(this.state.videoDevices.length > 0) {

rtcEngine.setVideoDevice(this.state.videoDevices[this.state.camera].deviceid)

}

if(this.state.audioDevices.length > 0) {

rtcEngine.setAudioRecordingDevice(this.state.audioDevices[this.state.mic].deviceid);

}

if(this.state.audioPlaybackDevices.length > 0) {

rtcEngine.setAudioPlaybackDevice(this.state.audioPlaybackDevices[this.state.speaker].deviceid);

}

rtcEngine.enableDualStreamMode(true)

rtcEngine.joinChannel(this.state.token || null, this.state.channel, '', Number(`${new Date().getTime()}`.slice(7)))

}

handleLeave = () => {

let rtcEngine = this.getRtcEngine()

// getRtcEngine() returns undefined when no App ID is set

if(!rtcEngine) return

rtcEngine.leaveChannel()

rtcEngine.videoSourceLeave()

}

handleCameraChange = e => {

this.setState({camera: e.currentTarget.value});

this.getRtcEngine().setVideoDevice(this.state.videoDevices[e.currentTarget.value].deviceid);

}

handleMicChange = e => {

this.setState({mic: e.currentTarget.value});

this.getRtcEngine().setAudioRecordingDevice(this.state.audioDevices[e.currentTarget.value].deviceid);

}

handleSpeakerChange = e => {

this.setState({speaker: e.currentTarget.value});

this.getRtcEngine().setAudioPlaybackDevice(this.state.audioPlaybackDevices[e.currentTarget.value].deviceid);

}

handleEncoderConfiguration = e => {

this.setState({

encoderConfiguration: Number(e.currentTarget.value)

})

}

handleRelease = () => {

this.setState({

localVideoSource: "",

users: [],

localSharing: false,

local: ''

})

if(this.rtcEngine) {

this.sharingPrepared = false

this.rtcEngine.release();

this.rtcEngine = null;

}

}

render() {

return (

<div>

{this.state.users.map((item, key) => (

<Window key={item} uid={item} rtcEngine={this.rtcEngine} role={'remote'}></Window>

))}

<div id="wait-text">入室までおまちください</div>

</div>

)

}

}

class Window extends Component {

constructor(props) {

super(props)

this.state = {

loading: false,

stylesVideo: {width: window.innerWidth+"px",height: window.innerHeight+"px", background: "#000000"}

}

}

componentDidMount() {

let dom = document.querySelector(`#video-${this.props.uid}`)

if (this.props.role === 'remote') {

dom && this.props.rtcEngine.subscribe(this.props.uid, dom)

this.props.rtcEngine.setupViewContentMode(this.props.uid, 1);

}

}

render() {

return (

<div id={'video-' + this.props.uid} style={this.state.stylesVideo} ></div>

)

}

}
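The system overview says the service provider joins automatically when the reserved time arrives, while the code above joins as soon as the app starts. The following is a minimal sketch of delaying the join until the reserved time, not part of the original sample. The names `msUntil` and `scheduleJoin`, the options object, and the idea of passing the reserved time in as a `Date` are assumptions for illustration.

```javascript
// Hypothetical helper: milliseconds to wait before joining.
// Returns 0 when the reserved time has already passed.
function msUntil(reservedAt, now = new Date()) {
  return Math.max(0, reservedAt.getTime() - now.getTime());
}

// Hypothetical helper: schedules `join` for the reserved time and returns a
// function that cancels the pending join. The timer functions are injectable
// so the logic can be exercised without actually waiting.
function scheduleJoin(reservedAt, join, options = {}) {
  const {
    now = new Date(),
    setTimer = setTimeout,
    clearTimer = clearTimeout
  } = options;
  const timerId = setTimer(join, msUntil(reservedAt, now));
  return () => clearTimer(timerId);
}
```

In the `App` component this could look like `this.cancelJoin = scheduleJoin(reservedAt, this.handleJoin)` in `componentDidMount`, with `this.cancelJoin()` called in `componentWillUnmount`. Here `reservedAt` would come from wherever the reservation is stored. Note that `setTimeout` cannot handle delays longer than about 24.8 days, so far-future reservations would need periodic rescheduling.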

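No buttons or other controls are placed on the service provider side. It simply shows the other party's video, and the design aims to recreate the experience of a real face-to-face conversation. Even people who are not comfortable with technology can use it easily.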