
Overview

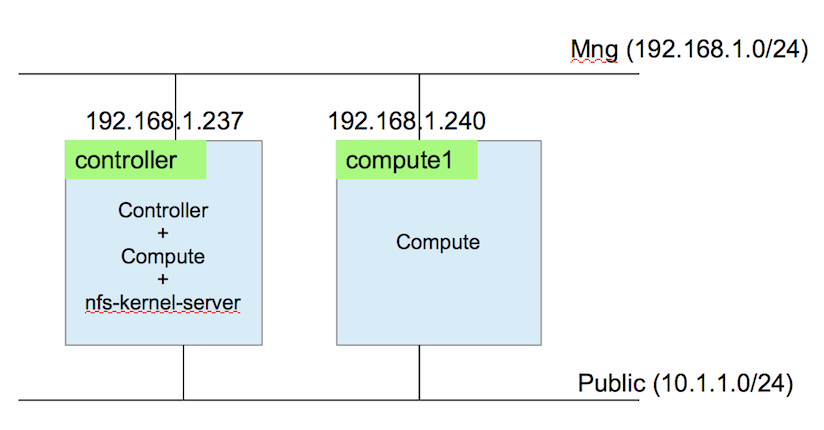

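Build a two-node OpenStack with devstack, boot a VM on one node, and confirm that the VM can be migrated to the other node while we stay logged in to it over ssh.

Because of constraints on the environment, this verification uses only two servers.

Ideally I would use four servers (controller, two compute nodes, and an nfs-server), but that is too much hassle, so one server hosts the controller, compute, and nfs-server roles and the other server acts as a compute node.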

Target environment

- Two nodes

  - controller

    - Doubles as a compute node

    - Also hosts the nfs-server

  - compute1

    - Runs only the processes a compute node needs

      - nova-compute, neutron-plugin-agent (linuxbridge)

Building the controller (also compute and NFS server)

Build OpenStack with devstack

ukinau$ git clone https://git.openstack.org/openstack-dev/devstack

ukinau$ vi devstack/local.conf

local.conf looks like this (not relevant to this test, but I built it with linuxbridge)

# localrc compatible overrides

[[local|localrc]]

RECLONE=false

LOGFILE=/opt/stack/logs/stack.sh.log

# Switch to Neutron

disable_service n-net

enable_service n-cond

enable_service q-svc

enable_service q-agt

enable_service q-dhcp

enable_service q-l3

enable_service q-meta

enable_service neutron

# Enable multi-host

MULTI_HOST=1

# Disable cinder/heat/tempest for faster testing

disable_service c-sch c-api c-vol h-eng h-api h-api-cfn h-api-cw tempest

# Secrets

ADMIN_PASSWORD=secrete

DATABASE_PASSWORD=$ADMIN_PASSWORD

RABBIT_PASSWORD=$ADMIN_PASSWORD

SERVICE_PASSWORD=$ADMIN_PASSWORD

SERVICE_TOKEN=a682f596-76f3-11e3-b3b2-e716f9080d50

## Use ML2 + LinuxBridge

# PUBLIC_INTERFACE=eth2

LB_PHYSICAL_INTERFACE=eth2

PHYSICAL_NETWORK=public

# Set ML2 plugin + LB agent

Q_PLUGIN=ml2

Q_AGENT=linuxbridge

# Set ML2 mechanism drivers to LB and l2pop

Q_ML2_PLUGIN_MECHANISM_DRIVERS=linuxbridge,l2population

# Set type drivers

Q_ML2_PLUGIN_TYPE_DRIVERS=flat,vlan,vxlan

# Use Neutron security groups

Q_USE_SECGROUP=True

# Set possible tenant network types

Q_ML2_TENANT_NETWORK_TYPE=flat,vlan,vxlan

FIXED_RANGE=10.0.0.0/24

NETWORK_GATEWAY=10.0.0.1

# PUBLIC_NETWORK_GATEWAY=172.16.0.254

HOST_IP=192.168.1.237

# VLAN configuration -- WORKS

# ENABLE_TENANT_VLANS=True

# TENANT_VLAN_RANGE=100:200

# Simple GRE tunnel configuration -- overrides extra opts

ENABLE_TENANT_TUNNELS=True

[[post-config|/$Q_PLUGIN_CONF_FILE]]

[vxlan]

l2_population = True

enable_vxlan = True

local_ip = 192.168.1.237

# [linux_bridge]

# l2_population=True

Run stack.sh

ukinau$ cd devstack && ./stack.sh

Install and configure nfs-server

ukinau$ sudo apt-get install nfs-kernel-server

ukinau$ sudo mkdir /instances

ukinau$ sudo chown ukinau /instances

ukinau$ sudo vi /etc/exports

Edit exports as follows

/instances 192.168.1.0/255.255.255.0(rw,sync,no_root_squash,no_subtree_check)

Restart nfs-server

ukinau$ sudo /etc/init.d/nfs-kernel-server restart

Point the VM image directory of the controller's compute service at the NFS-exported directory

ukinau$ rm -fr /opt/stack/data/nova/instances

ukinau$ ln -s /instances /opt/stack/data/nova/instances

In nova.conf, change the [libvirt] section as follows to set it up for live migration

[libvirt]

vif_driver = nova.virt.libvirt.vif.LibvirtGenericVIFDriver

inject_partition = -2

# live_migration_uri = qemu+ssh://ukinau@%s/system

# Changed: connect to the remote libvirtd over TCP instead of SSH

live_migration_uri = qemu+tcp://%s/system

use_usb_tablet = False

cpu_mode = none

virt_type = qemu

# Added

live_migration_flag=VIR_MIGRATE_UNDEFINE_SOURCE,VIR_MIGRATE_PEER2PEER,VIR_MIGRATE_LIVE,VIR_MIGRATE_TUNNELLED
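Before restarting nova-compute it can help to check that both nodes really carry the same [libvirt] settings. The following is a minimal sketch, assuming the file can be read with Python's standard configparser; the function name check_libvirt_section is made up for this example and is not part of nova. Note that configparser does not strip a trailing "# ..." comment from a value, and as far as I know the parser nova uses behaves the same way, so annotations are safer on their own line.

```python
import configparser

# Flags that this article adds to live_migration_flag.
REQUIRED_FLAGS = {
    "VIR_MIGRATE_UNDEFINE_SOURCE",
    "VIR_MIGRATE_PEER2PEER",
    "VIR_MIGRATE_LIVE",
    "VIR_MIGRATE_TUNNELLED",
}

EXPECTED_URI = "qemu+tcp://%s/system"


def check_libvirt_section(conf_text):
    """Return a list of problems found in the [libvirt] section (hypothetical helper)."""
    # interpolation=None keeps the literal "%s" in live_migration_uri intact.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(conf_text)
    if not parser.has_section("libvirt"):
        return ["missing [libvirt] section"]

    problems = []
    uri = parser.get("libvirt", "live_migration_uri", fallback="")
    if uri != EXPECTED_URI:
        problems.append("unexpected live_migration_uri: %r" % uri)

    raw_flags = parser.get("libvirt", "live_migration_flag", fallback="")
    flags = {flag.strip() for flag in raw_flags.split(",") if flag.strip()}
    missing = REQUIRED_FLAGS - flags
    if missing:
        problems.append("missing flags: " + ", ".join(sorted(missing)))
    return problems
```

On a node this could be called as check_libvirt_section(open("/etc/nova/nova.conf").read()); an empty list means the two settings look as described here.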

Restart nova-compute (this is devstack, so attach to screen, press Ctrl+C in the relevant window, and start the process again)

ukinau$ screen -r

Ctrl + a, then the double-quote key (") <= these are keystrokes, not a command

Num Name Flags

0 shell $

1 dstat $(L)

2 key $(L)

3 key-access $(L)

4 horizon $(L)

5 g-reg $(L)

6 g-api $(L)

7 n-api $(L)

8 q-svc $(L)

9 q-agt $(L)

10 q-dhcp $(L)

11 q-l3 $(L)

12 q-meta $(L)

13 n-cond $(L)

14 n-crt $(L)

15 n-sch $(L)

16 n-novnc $(L)

17 n-cpu $(L)

18 shell

Switch to the n-cpu window and press Ctrl + C

2015-05-29 13:38:34.609 DEBUG oslo_concurrency.lockutils [req-13ae2dd2-1010-4628-a599-a39d8a1eee66 None None] Lock "compute_resources" acquired by "_update_available_resource" :: waited 0.000s from (pid=27791) inner /usr/local/lib/python2.7/dist-packages/oslo_concurrency/lockutils.py:444

2015-05-29 13:38:34.724 INFO nova.compute.resource_tracker [req-13ae2dd2-1010-4628-a599-a39d8a1eee66 None None] Total usable vcpus: 1, total allocated vcpus: 2

2015-05-29 13:38:34.725 INFO nova.compute.resource_tracker [req-13ae2dd2-1010-4628-a599-a39d8a1eee66 None None] Final resource view: name=upstream phys_ram=3953MB used_ram=1536MB phys_disk=34GB used_disk=2GB total_vcpus=1 used_vcpus=2 pci_stats=<nova.pci.stats.PciDeviceStats object at 0x7f8534f37410>

2015-05-29 13:38:34.748 INFO nova.scheduler.client.report [req-13ae2dd2-1010-4628-a599-a39d8a1eee66 None None] Compute_service record updated for ('upstream', 'upstream')

2015-05-29 13:38:34.750 INFO nova.compute.resource_tracker [req-13ae2dd2-1010-4628-a599-a39d8a1eee66 None None] Compute_service record updated for upstream:upstream

2015-05-29 13:38:34.751 DEBUG oslo_concurrency.lockutils [req-13ae2dd2-1010-4628-a599-a39d8a1eee66 None None] Lock "compute_resources" released by "_update_available_resource" :: held 0.142s from (pid=27791) inner /usr/local/lib/python2.7/dist-packages/oslo_concurrency/lockutils.py:456

2015-05-29 13:38:34.752 DEBUG nova.service [req-13ae2dd2-1010-4628-a599-a39d8a1eee66 None None] Creating RPC server for service compute from (pid=27791) start /opt/stack/nova/nova/service.py:178

2015-05-29 13:38:34.754 INFO oslo_messaging._drivers.impl_rabbit [req-13ae2dd2-1010-4628-a599-a39d8a1eee66 None None] Connecting to AMQP server on 192.168.1.237:5672

2015-05-29 13:38:34.765 INFO oslo_messaging._drivers.impl_rabbit [req-13ae2dd2-1010-4628-a599-a39d8a1eee66 None None] Connected to AMQP server on 192.168.1.237:5672

2015-05-29 13:38:34.771 DEBUG nova.service [req-13ae2dd2-1010-4628-a599-a39d8a1eee66 None None] Join ServiceGroup membership for this service compute from (pid=27791) start /opt/stack/nova/nova/service.py:196

2015-05-29 13:38:34.772 DEBUG nova.servicegroup.drivers.db [req-13ae2dd2-1010-4628-a599-a39d8a1eee66 None None] DB_Driver: join new ServiceGroup member upstream to the compute group, service = <nova.service.Service object at 0x7f8538ee1110> from (pid=27791) join /opt/stack/nova/nova/servicegroup/drivers/db.py:47

^C2015-05-29 13:39:52.493 INFO nova.openstack.common.service [req-81048739-e33e-4ed9-9a6b-122a65f3d468 None None] Caught SIGINT, exiting

2015-05-29 13:39:53.179 ERROR oslo_messaging._drivers.impl_rabbit [-] Failed to consume message from queue:

Start the process again

ukinau$ sg libvirtd '/usr/local/bin/nova-compute --config-file /etc/nova/nova.conf' & echo $! >/opt/stack/status/stack/n-cpu.pid; fg || echo "n-cpu failed to start" | tee "/opt/stack/status/stack/n-cpu.failure"

[1] 27972

sg libvirtd '/usr/local/bin/nova-compute --config-file /etc/nova/nova.conf'

2015-05-29 13:41:06.777 DEBUG nova.servicegroup.api [-] ServiceGroup driver defined as an instance of db from (pid=27973) __init__ /opt/stack/nova/nova/servicegroup/api.py:68

Edit the following parts of libvirtd.conf as described in the comments

# listen_tls = 0 <= uncomment

listen_tls = 0

# listen_tcp = 1 <= uncomment

listen_tcp = 1

# tcp_port = "16509" <= uncomment

tcp_port = "16509"

# auth_tcp = sasl <= change the value of auth_tcp

auth_tcp = "none"

Edit /etc/default/libvirt-bin as follows (add -l to the options)

# Defaults for libvirt-bin initscript (/etc/init.d/libvirt-bin)

# This is a POSIX shell fragment

# Start libvirtd to handle qemu/kvm:

start_libvirtd="yes"

# options passed to libvirtd, add "-l" to listen on tcp

libvirtd_opts="-d -l"

# pass in location of kerberos keytab

# export KRB5_KTNAME=/etc/libvirt/libvirt.keytab

Restart libvirt-bin (libvirtd)

ukinau$ sudo /etc/init.d/libvirt-bin restart
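After the restart, libvirtd should accept TCP connections on port 16509 (the tcp_port set above). A minimal sketch for checking this from the other node, using only the standard socket module; the helper name libvirtd_tcp_reachable is an assumption for this example, and it only tests that the port accepts a connection, not that libvirt authentication works.

```python
import socket


def libvirtd_tcp_reachable(host, port=16509, timeout=3.0):
    """Return True if a TCP connection to host:port can be opened (hypothetical helper)."""
    try:
        # create_connection raises OSError on refusal, timeout, or DNS failure.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

For example, libvirtd_tcp_reachable("192.168.1.237") run on compute1 would check the controller's libvirtd. A False result corresponds to the "Connection refused" error listed in the error section of this article.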

Building the compute1 node

Build OpenStack with devstack

ukinau$ git clone https://git.openstack.org/openstack-dev/devstack

ukinau$ vi devstack/local.conf

local.conf looks like this (not relevant to this test, but I built it with linuxbridge)

Note that the contents differ from the controller's

# localrc compatible overrides

[[local|localrc]]

RECLONE=false

LOGFILE=/opt/stack/logs/stack.sh.log

# Secrets

ADMIN_PASSWORD=secrete

DATABASE_PASSWORD=$ADMIN_PASSWORD

RABBIT_PASSWORD=$ADMIN_PASSWORD

SERVICE_PASSWORD=$ADMIN_PASSWORD

SERVICE_TOKEN=a682f596-76f3-11e3-b3b2-e716f9080d50

# Compute node services

ENABLED_SERVICES=n-cpu,neutron,q-agt

SERVICE_HOST=192.168.1.237

MYSQL_HOST=$SERVICE_HOST

RABBIT_HOST=$SERVICE_HOST

Q_HOST=$SERVICE_HOST

GLANCE_HOSTPORT=$SERVICE_HOST:9292

KEYSTONE_AUTH_HOST=$SERVICE_HOST

KEYSTONE_SERVICE_HOST=$SERVICE_HOST

## Use ML2 + LinuxBridge

# PUBLIC_INTERFACE=eth2

LB_PHYSICAL_INTERFACE=eth2

PHYSICAL_NETWORK=public

# Set ML2 plugin + LB agent

Q_PLUGIN=ml2

Q_AGENT=linuxbridge

# Set ML2 mechanism drivers to LB and l2pop

Q_ML2_PLUGIN_MECHANISM_DRIVERS=linuxbridge,l2population

# Set type drivers

Q_ML2_PLUGIN_TYPE_DRIVERS=flat,vlan,vxlan

# Use Neutron security groups

Q_USE_SECGROUP=True

# Set possible tenant network types

Q_ML2_TENANT_NETWORK_TYPE=flat,vlan,vxlan

FIXED_RANGE=10.0.0.0/24

NETWORK_GATEWAY=10.0.0.1

# PUBLIC_NETWORK_GATEWAY=172.16.0.254

HOST_IP=192.168.1.240

# VLAN configuration -- WORKS

# ENABLE_TENANT_VLANS=True

# TENANT_VLAN_RANGE=100:200

# Simple GRE tunnel configuration -- overrides extra opts

ENABLE_TENANT_TUNNELS=True

[[post-config|/$Q_PLUGIN_CONF_FILE]]

[vxlan]

l2_population = True

enable_vxlan = True

local_ip = 192.168.1.240

# [linux_bridge]

# l2_population=True

Run stack.sh

ukinau$ cd devstack && ./stack.sh

Install nfs-common so that the node can mount NFS

ukinau$ sudo apt-get install nfs-common

NFS-mount the instances directory

ukinau$ sudo mount -t nfs4 192.168.1.237:/instances /opt/stack/data/nova/instances
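To confirm that the instances directory is really backed by the NFS export, the mount table can be inspected. A minimal sketch, assuming the text comes from /proc/mounts (whitespace-separated fields: source, mount point, filesystem type, ...); find_nfs_mount is a name invented for this example.

```python
def find_nfs_mount(mounts_text, mount_point):
    """Return the NFS source (server:/path) mounted at mount_point, or None.

    mounts_text is expected to look like the contents of /proc/mounts.
    """
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) < 3:
            continue  # skip blank or malformed lines
        source, target, fstype = fields[0], fields[1], fields[2]
        # Both "nfs" and "nfs4" count as NFS mounts.
        if target == mount_point and fstype.startswith("nfs"):
            return source
    return None
```

On compute1, find_nfs_mount(open("/proc/mounts").read(), "/opt/stack/data/nova/instances") should return "192.168.1.237:/instances" if the mount command above succeeded.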

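Do the following (the steps are the same as on the controller, so refer to the controller section)

- Configure nova-compute for live migration and restart it

- Edit the libvirt-bin configuration files and restart it

At this point, a two-node OpenStack that can do live migration is complete.

Next, let's try live migration.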

Trying live migration

Confirm that both hypervisors are recognized

ukinau@controller:/instances$ nova hypervisor-list

+----+---------------------+-------+---------+

| ID | Hypervisor hostname | State | Status |

+----+---------------------+-------+---------+

| 1 | controller | up | enabled |

| 2 | compute | up | enabled |

+----+---------------------+-------+---------+

Create a VM (look up the image and network IDs beforehand with neutron net-list and glance image-list)

ukinau@controller:/instances$ nova boot --flavor 1 --image 42668193-861a-4879-944a-892c95b25c94 --nic net-id=7444574f-156c-44fc-845c-fe8c9cc52cb9 test

+--------------------------------------+----------------------------------------------------------------+

| Property | Value |

+--------------------------------------+----------------------------------------------------------------+

| OS-DCF:diskConfig | MANUAL |

| OS-EXT-AZ:availability_zone | nova |

| OS-EXT-SRV-ATTR:host | - |

| OS-EXT-SRV-ATTR:hypervisor_hostname | - |

| OS-EXT-SRV-ATTR:instance_name | instance-0000000c |

| OS-EXT-STS:power_state | 0 |

| OS-EXT-STS:task_state | scheduling |

| OS-EXT-STS:vm_state | building |

| OS-SRV-USG:launched_at | - |

| OS-SRV-USG:terminated_at | - |

| accessIPv4 | |

| accessIPv6 | |

| adminPass | NL9XiCMgoPZJ |

| config_drive | |

| created | 2015-05-29T18:19:40Z |

| flavor | m1.tiny (1) |

| hostId | |

| id | be410575-1305-4462-bed9-381b34abe1af |

| image | cirros-0.3.2-x86_64-uec (42668193-861a-4879-944a-892c95b25c94) |

| key_name | - |

| metadata | {} |

| name | test |

| os-extended-volumes:volumes_attached | [] |

| progress | 0 |

| security_groups | default |

| status | BUILD |

| tenant_id | fc92a873bbff4ffd8e02c3273c4fb488 |

| updated | 2015-05-29T18:19:40Z |

| user_id | d54a543f7f8a48c58ab645e4eaf35efb |

+--------------------------------------+----------------------------------------------------------------+

Check which host it is running on

ukinau@controller$ nova show test

+--------------------------------------+----------------------------------------------------------------+

| Property | Value |

+--------------------------------------+----------------------------------------------------------------+

| OS-DCF:diskConfig | MANUAL |

| OS-EXT-AZ:availability_zone | nova |

| OS-EXT-SRV-ATTR:host | controller |

| OS-EXT-SRV-ATTR:hypervisor_hostname | controller |

| OS-EXT-SRV-ATTR:instance_name | instance-0000000c |

| OS-EXT-STS:power_state | 1 |

| OS-EXT-STS:task_state | - |

| OS-EXT-STS:vm_state | active |

| OS-SRV-USG:launched_at | 2015-05-29T18:19:47.000000 |

| OS-SRV-USG:terminated_at | - |

| accessIPv4 | |

| accessIPv6 | |

| config_drive | True |

| created | 2015-05-29T18:19:40Z |

| flavor | m1.tiny (1) |

| hostId | d1ddf3dcea22d897311a57dd202a1e011315f4685ae9352eebcc6e77 |

| id | be410575-1305-4462-bed9-381b34abe1af |

| image | cirros-0.3.2-x86_64-uec (42668193-861a-4879-944a-892c95b25c94) |

| key_name | - |

| metadata | {} |

| name | test |

| os-extended-volumes:volumes_attached | [] |

| private network | 10.0.0.14 |

| progress | 0 |

| security_groups | default |

| status | ACTIVE |

| tenant_id | fc92a873bbff4ffd8e02c3273c4fb488 |

| updated | 2015-05-29T18:19:48Z |

| user_id | d54a543f7f8a48c58ab645e4eaf35efb |

+--------------------------------------+----------------------------------------------------------------+

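If the uid of libvirt-qemu, kvm, or the user running the services differs between the nodes, live migration can fail with a permission error. When the uids differ, use chmod to make the directory readable and writable by all users. (In production you should align the uids instead.)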

ukinau@controller:~$ sudo chmod 777 -R /instances
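Whether the uids actually differ can be checked by comparing the passwd entries of both nodes. A minimal sketch, assuming you have collected the text of /etc/passwd (or getent passwd output) from each node; the helper names uid_map and uid_mismatches are invented for this example.

```python
def uid_map(passwd_text, names):
    """Map each account in names to its uid, based on passwd-format text."""
    result = {}
    for line in passwd_text.splitlines():
        parts = line.split(":")
        # passwd format: name:password:uid:gid:gecos:home:shell
        if len(parts) >= 3 and parts[0] in names:
            result[parts[0]] = int(parts[2])
    return result


def uid_mismatches(passwd_a, passwd_b, names):
    """Return {name: (uid_on_a, uid_on_b)} for accounts whose uid differs or is missing."""
    a = uid_map(passwd_a, names)
    b = uid_map(passwd_b, names)
    return {
        name: (a.get(name), b.get(name))
        for name in names
        if a.get(name) != b.get(name)
    }
```

Calling uid_mismatches(controller_passwd, compute_passwd, ["libvirt-qemu", "ukinau"]) and getting an empty dict would suggest the chmod 777 above is not needed for those accounts.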

From another window, keep pinging the VM (to observe the live migration)

root@controller:~# ip netns

qrouter-6c739d5b-c890-44c8-879d-321eabfb16d3

qdhcp-7444574f-156c-44fc-845c-fe8c9cc52cb9

root@controller:~# ip netns exec qdhcp-7444574f-156c-44fc-845c-fe8c9cc52cb9 ping 10.0.0.14

PING 10.0.0.14 (10.0.0.14) 56(84) bytes of data.

64 bytes from 10.0.0.14: icmp_seq=1 ttl=64 time=1.10 ms

64 bytes from 10.0.0.14: icmp_seq=2 ttl=64 time=0.593 ms

64 bytes from 10.0.0.14: icmp_seq=3 ttl=64 time=0.612 ms

64 bytes from 10.0.0.14: icmp_seq=4 ttl=64 time=0.501 ms

64 bytes from 10.0.0.14: icmp_seq=5 ttl=64 time=0.269 ms

...

Run the live migration

ukinau@controller:~$ nova live-migration test compute

ukinau@controller:~$

The command apparently prints nothing to say whether it succeeded or failed.

Checking the status with nova list while the migration is in progress shows this:

ukinau@controller:~$ nova list

+--------------------------------------+-------+-----------+------------+-------------+-------------------+

| ID | Name | Status | Task State | Power State | Networks |

+--------------------------------------+-------+-----------+------------+-------------+-------------------+

| 3bc53322-c7fc-4d29-a9ca-ea1f0bd8d91d | test | MIGRATING | migrating | Running | private=10.0.0.14 |

+--------------------------------------+-------+-----------+------------+-------------+-------------------+

Confirm with nova show that the migration succeeded

host and hypervisor_hostname have changed!

You can also confirm the move with virsh list (not done here).

ukinau@controller:~$ nova show test

+--------------------------------------+----------------------------------------------------------------+

| Property | Value |

+--------------------------------------+----------------------------------------------------------------+

| OS-DCF:diskConfig | MANUAL |

| OS-EXT-AZ:availability_zone | nova |

| OS-EXT-SRV-ATTR:host | compute |

| OS-EXT-SRV-ATTR:hypervisor_hostname | compute |

| OS-EXT-SRV-ATTR:instance_name | instance-0000000c |

| OS-EXT-STS:power_state | 1 |

| OS-EXT-STS:task_state | - |

| OS-EXT-STS:vm_state | active |

| OS-SRV-USG:launched_at | 2015-05-29T18:37:46.000000 |

| OS-SRV-USG:terminated_at | - |

| accessIPv4 | |

| accessIPv6 | |

| config_drive | True |

| created | 2015-05-29T18:37:39Z |

| flavor | m1.tiny (1) |

| hostId | 6f4633e1a94dbc8d8abbdff01cf5a5dfc6f8009fd8985d374806b26b |

| id | 3bc53322-c7fc-4d29-a9ca-ea1f0bd8d91d |

| image | cirros-0.3.2-x86_64-uec (42668193-861a-4879-944a-892c95b25c94) |

| key_name | - |

| metadata | {} |

| name | test |

| os-extended-volumes:volumes_attached | [] |

| private network | 10.0.0.14 |

| progress | 0 |

| security_groups | default |

| status | ACTIVE |

| tenant_id | fc92a873bbff4ffd8e02c3273c4fb488 |

| updated | 2015-05-29T18:38:32Z |

| user_id | d54a543f7f8a48c58ab645e4eaf35efb |

+--------------------------------------+----------------------------------------------------------------+

Check the ping window

64 bytes from 10.0.0.14: icmp_seq=89 ttl=64 time=0.634 ms

64 bytes from 10.0.0.14: icmp_seq=90 ttl=64 time=0.544 ms

64 bytes from 10.0.0.14: icmp_seq=91 ttl=64 time=0.438 ms

64 bytes from 10.0.0.14: icmp_seq=92 ttl=64 time=34.2 ms

64 bytes from 10.0.0.14: icmp_seq=93 ttl=64 time=0.727 ms

64 bytes from 10.0.0.14: icmp_seq=94 ttl=64 time=0.418 ms

The reply that took 34.2 ms presumably marks the downtime during the migration. No reply was lost, and a delay this short should go unnoticed by a TCP connection.
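Rather than reading the ping window by eye, the output can be summarized programmatically. A minimal sketch, assuming the Linux iputils ping line format shown above; summarize_ping is a name made up for this example.

```python
import re

# Matches reply lines such as:
# 64 bytes from 10.0.0.14: icmp_seq=92 ttl=64 time=34.2 ms
_REPLY = re.compile(r"icmp_seq=(\d+) ttl=\d+ time=([\d.]+) ms")


def summarize_ping(output):
    """Return lost sequence numbers and the slowest (seq, rtt_ms) reply."""
    replies = [(int(m.group(1)), float(m.group(2))) for m in _REPLY.finditer(output)]
    if not replies:
        return {"lost": [], "slowest": None}
    seen = {seq for seq, _ in replies}
    # Any gap between the first and last observed sequence number is a lost reply.
    lost = [seq for seq in range(min(seen), max(seen) + 1) if seq not in seen]
    slowest = max(replies, key=lambda reply: reply[1])
    return {"lost": lost, "slowest": slowest}
```

Fed the six reply lines above, this reports no lost replies and (92, 34.2) as the slowest one, which matches the reading that the migration cost one slow reply and no drops.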

Impressions

I was able to confirm that live migration can be done fairly easily.

That said, although live migration works, a few issues are apparent.

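- Calling the live-migration API tells you whether the request was accepted, but not whether the migration itself succeeded. You have to check periodically with "nova show" whether the host has changed.

- If the host in "nova show" has not changed, you can treat the migration as failed, but the "nova show" output does not explicitly say that an error occurred. You have to read the nova-compute logs on both the source host and the intended destination host to confirm the failure and its cause.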
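The periodic check described above can be sketched as a small polling loop. This is only a sketch under stated assumptions: get_state is a hypothetical callable that you supply (for example, one that runs "nova show" and extracts OS-EXT-SRV-ATTR:host and OS-EXT-STS:task_state), and the sketch assumes the task state is already "migrating" by the time polling starts.

```python
import time


def wait_for_migration(get_state, source_host, timeout=300.0, interval=5.0,
                       sleep=time.sleep, clock=time.monotonic):
    """Poll until the instance has no pending task, then report the outcome.

    get_state() is a caller-supplied (hypothetical) function returning
    (host, task_state); task_state is None or "-" when no task is pending.
    Returns "migrated", "not_migrated", or "timeout".
    """
    deadline = clock() + timeout
    while True:
        host, task_state = get_state()
        if task_state in (None, "-"):
            # Task finished: success only if the host actually changed.
            return "migrated" if host != source_host else "not_migrated"
        if clock() >= deadline:
            return "timeout"
        sleep(interval)
```

A "not_migrated" result only says that the host did not change; the reason still has to be looked up in the nova-compute logs, as noted above.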

Finally, here are the errors I got stuck on and how I resolved them.

Refer to them if you hit errors related to live migration.

Reference (errors encountered)

1. No particular error, but the live migration does not happen (resolved)

- The [libvirt] section of nova.conf was missing a setting.

- Workaround

  - Add the following setting to nova.conf

    - live_migration_flag=VIR_MIGRATE_UNDEFINE_SOURCE,VIR_MIGRATE_PEER2PEER,VIR_MIGRATE_LIVE,VIR_MIGRATE_TUNNELLED

2. ssh authentication error in nova-compute.log (resolved)

Error sample

2015-05-29 09:45:07.779 ERROR nova.virt.libvirt.driver [-] [instance: 5e6c8f45-bc5d-44cb-a154-0101ad071f48] Live Migration failure: operation failed: Failed to connect to remote libvirt URI qemu+ssh://ukinau@controller/system: Cannot recv data: Host key verification failed.: Connection reset by peer

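nova tries to reach the destination libvirt over ssh and fails to authenticate. Distributing keys would work, but spreading private keys around is questionable, so connect to the destination libvirtd over tcp (with no authentication) instead of ssh.

Workaround

- Change live_migration_uri in nova.conf to "qemu+tcp://%s/system"

- Make libvirtd listen on tcp

  - Change libvirtd.conf and /etc/default/libvirt-bin in the same way as the controller settings above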

3. port 16509 connection refused in nova-compute.log (resolved)

Error sample

2015-05-29 10:07:00.002 ERROR nova.virt.libvirt.driver [-] [instance: 5e6c8f45-bc5d-44cb-a154-0101ad071f48] Live Migration failure: operation failed: Failed to connect to remote libvirt URI qemu+tcp://controller/system: unable to connect to server at 'controller:16509': Connection refused

The cause is that libvirtd is not listening on tcp.

Workaround

- Make libvirtd listen on tcp

  - Change libvirtd.conf and /etc/default/libvirt-bin in the same way as the controller settings above

4. sasl authentication error in nova-compute.log (resolved)

Error sample

2015-05-29 10:15:08.838 ERROR nova.virt.libvirt.driver [-] [instance: 5e6c8f45-bc5d-44cb-a154-0101ad071f48] Live Migration failure: operation failed: Failed to connect to remote libvirt URI qemu+tcp://controller/system: authentication failed: Failed to start SASL negotiation: -4 (SASL(-4): no mechanism available: No worthy mechs found)

Workaround

- Turn off authentication in libvirtd

  - Change libvirtd.conf and /etc/default/libvirt-bin in the same way as the controller settings above

5. Unable to pre-create chardev file '/opt/stack/data/nova/instances/5e6c8f45-bc5d-44cb-a154-0101ad071f48/console.log' in compute.log (resolved)

Error sample

2015-05-29 20:45:57.908 ERROR nova.virt.libvirt.driver [-] [instance: 5e6c8f45-bc5d-44cb-a154-0101ad071f48] Live Migration failure: Unable to pre-create chardev file '/opt/stack/data/nova/instances/5e6c8f45-bc5d-44cb-a154-0101ad071f48/console.log': No such file or directory

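The cause is that the directory is not shared over NFS. This error can also appear when instances_path in nova.conf differs between the nodes.

When the current nova live-migrates with shared image files, it passes along the file path used on the migration source and tries to use that same path on the destination, which is why this happens (so naturally the file cannot be accessed if the instance storage path differs between nodes). => I consider this a bug, so I plan to write a patch and submit it to Gerrit at a later date.

Workaround

- Make the instances storage directory reachable under the same path on every node, for example with a symbolic link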

6. The cached Glance image cannot be found (the instance/_base/hogehoge file is missing) (resolved)

Error sample

2015-05-29 11:54:30.960 ERROR nova.virt.libvirt.driver [-] [instance: ffd848d9-d892-421a-9af9-6a1fb865a812] Live Migration failure: invalid argument: Backing file '/instances/_base/0df9eade44f3dcc944bdec4cf6660e977518981a' of image '/instances/ffd848d9-d892-421a-9af9-6a1fb865a812/disk' is missing.

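The /opt/stack/data/nova/instances/_base directory caches the images that nova downloads from glance. When the directory is shared between nodes, for example over nfs, this cache can apparently be deleted, and live migration then stops working.

As a long-term fix, it is best to give each compute node its own _base directory.

This can be done by setting base_dir_name so that each node uses a different directory (http://d.hatena.ne.jp/pyde/20121216/p1)

Workaround

- Copy the image into the _base directory under the name it is expected to have

  - For the error above, copy the image under the name 0df9eade44f3dcc944bdec4cf6660e977518981a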
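The name to copy the image under can be pulled straight out of the error message. A minimal sketch, assuming the message wording shown in the error sample above; missing_backing_file is a name invented for this example.

```python
import posixpath
import re

# Matches the libvirt message quoted in the error sample above.
_BACKING = re.compile(r"Backing file '([^']+)' of image '([^']+)' is missing")


def missing_backing_file(log_line):
    """Return details of the missing backing file, or None if the line does not match."""
    match = _BACKING.search(log_line)
    if match is None:
        return None
    backing_path, image_path = match.groups()
    return {
        "backing_path": backing_path,                     # full path expected under _base
        "cache_name": posixpath.basename(backing_path),   # file name to copy the image as
        "image": image_path,                              # instance disk that references it
    }
```

For the error sample above, cache_name would be 0df9eade44f3dcc944bdec4cf6660e977518981a, which is the name used in the workaround.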

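7. QEMU seems to have crashed (unresolved; reproduction conditions under investigation)

This last error occurred once after I had tried live migration several times.

I do not know how to reproduce it, so it needs further investigation.

Apparently this kind of thing happens occasionally. Maybe live migration cannot be used reliably yet?

Error sample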

2015-05-31 01:09:49.577 DEBUG oslo_concurrency.lockutils [-] Lock "compute_resources" released by "_update_available_resource" :: held 0.384s from (pid=4228) inner /usr/local/lib/python2.7/dist-packages/oslo_concurrency/lockutils.py:456

2015-05-31 01:09:49.590 INFO nova.compute.manager [-] [instance: fd33baec-51de-4952-b194-8a51149d8a4f] Migrating instance to upstream finished successfully.

2015-05-31 01:09:49.591 INFO nova.compute.manager [-] [instance: fd33baec-51de-4952-b194-8a51149d8a4f] You may see the error "libvirt: QEMU error: Domain not found: no domain with matching name." This error can be safely ignored.

2015-05-31 01:09:49.592 DEBUG nova.virt.libvirt.driver [-] [instance: fd33baec-51de-4952-b194-8a51149d8a4f] Live migration monitoring is all done from (pid=4228) _live_migration /opt/stack/nova/nova/virt/libvirt/driver.py:5711

2015-05-31 01:09:49.870 DEBUG nova.virt.driver [req-d1a62a49-311c-44f5-b085-8477b0a273e9 None None] Emitting event <LifecycleEvent: 1433048989.87, fd33baec-51de-4952-b194-8a51149d8a4f => Resumed> from (pid=4228) emit_event /opt/stack/nova/nova/virt/driver.py:1256

2015-05-31 01:09:49.871 INFO nova.compute.manager [req-d1a62a49-311c-44f5-b085-8477b0a273e9 None None] [instance: fd33baec-51de-4952-b194-8a51149d8a4f] VM Resumed (Lifecycle Event)

2015-05-31 01:09:49.878 ERROR nova.virt.libvirt.driver [-] [instance: fd33baec-51de-4952-b194-8a51149d8a4f] Live Migration failure: Unable to read from monitor: Connection reset by peer

2015-05-31 01:09:49.879 DEBUG nova.virt.libvirt.driver [-] [instance: fd33baec-51de-4952-b194-8a51149d8a4f] Migration operation thread notification from (pid=4228) thread_finished /opt/stack/nova/nova/virt/libvirt/driver.py:5691

Traceback (most recent call last):

File "/usr/local/lib/python2.7/dist-packages/eventlet/hubs/hub.py", line 457, in fire_timers

timer()

File "/usr/local/lib/python2.7/dist-packages/eventlet/hubs/timer.py", line 58, in __call__

cb(*args, **kw)

File "/usr/local/lib/python2.7/dist-packages/eventlet/event.py", line 168, in _do_send

waiter.switch(result)

File "/usr/local/lib/python2.7/dist-packages/eventlet/greenthread.py", line 214, in main

result = function(*args, **kwargs)

File "/opt/stack/nova/nova/virt/libvirt/driver.py", line 5486, in _live_migration_operation

instance=instance)

File "/usr/local/lib/python2.7/dist-packages/oslo_utils/excutils.py", line 85, in __exit__

six.reraise(self.type_, self.value, self.tb)

File "/opt/stack/nova/nova/virt/libvirt/driver.py", line 5455, in _live_migration_operation

CONF.libvirt.live_migration_bandwidth)

File "/usr/local/lib/python2.7/dist-packages/eventlet/tpool.py", line 183, in doit

result = proxy_call(self._autowrap, f, *args, **kwargs)

File "/usr/local/lib/python2.7/dist-packages/eventlet/tpool.py", line 141, in proxy_call

rv = execute(f, *args, **kwargs)

File "/usr/local/lib/python2.7/dist-packages/eventlet/tpool.py", line 122, in execute

six.reraise(c, e, tb)

File "/usr/local/lib/python2.7/dist-packages/eventlet/tpool.py", line 80, in tworker

rv = meth(*args, **kwargs)

File "/usr/local/lib/python2.7/dist-packages/libvirt.py", line 1586, in migrateToURI2

if ret == -1: raise libvirtError ('virDomainMigrateToURI2() failed', dom=self)

libvirtError: Unable to read from monitor: Connection reset by peer

2015-05-31 01:09:50.021 DEBUG nova.compute.manager [req-d1a62a49-311c-44f5-b085-8477b0a273e9 None None] [instance: fd33baec-51de-4952-b194-8a51149d8a4f] Synchronizing instance power state after lifecycle event "Resumed"; current vm_state: active, current task_state: migrating, current DB power_state: 1, VM power_state: 1 from (pid=4228) handle_lifecycle_event /opt/stack/nova/nova/compute/manager.py:1234

2015-05-31 01:09:50.124 INFO nova.compute.manager [req-d1a62a49-311c-44f5-b085-8477b0a273e9 None None] [instance: fd33baec-51de-4952-b194-8a51149d8a4f] During sync_power_state the instance has a pending task (migrating). Skip.

2015-05-31 01:09:50.126 DEBUG nova.virt.driver [req-d1a62a49-311c-44f5-b085-8477b0a273e9 None None] Emitting event <LifecycleEvent: 1433048989.88, fd33baec-51de-4952-b194-8a51149d8a4f => Resumed> from (pid=4228) emit_event /opt/stack/nova/nova/virt/driver.py:1256

2015-05-31 01:09:50.127 INFO nova.compute.manager [req-d1a62a49-311c-44f5-b085-8477b0a273e9 None None] [instance: fd33baec-51de-4952-b194-8a51149d8a4f] VM Resumed (Lifecycle Event)

2015-05-31 01:09:50.258 DEBUG nova.compute.manager [req-d1a62a49-311c-44f5-b085-8477b0a273e9 None None] [instance: fd33baec-51de-4952-b194-8a51149d8a4f] Synchronizing instance power state after lifecycle event "Resumed"; current vm_state: active, current task_state: migrating, current DB power_state: 1, VM power_state: 1 from (pid=4228) handle_lifecycle_event /opt/stack/nova/nova/compute/manager.py:1234

2015-05-31 01:09:50.413 INFO nova.compute.manager [req-d1a62a49-311c-44f5-b085-8477b0a273e9 None None] [instance: fd33baec-51de-4952-b194-8a51149d8a4f] During sync_power_state the instance has a pending task (migrating). Skip.

2015-05-31 01:10:10.697 DEBUG nova.openstack.common.periodic_task [req-19629779-4253-4213-b4cb-7033a4817e2a None None] Running periodic task ComputeManager.update_available_resource from (pid=4228) run_periodic_tasks /opt/stack/nova/nova/openstack/common/periodic_task.py:219

2015-05-31 01:10:10.713 INFO nova.compute.resource_tracker [req-19629779-4253-4213-b4cb-7033a4817e2a None None] Auditing locally available compute resources for node compute

2015-05-31 01:10:10.726 DEBUG oslo_concurrency.processutils [req-19629779-4253-4213-b4cb-7033a4817e2a None None] Running cmd (subprocess): env LC_ALL=C LANG=C qemu-img info /instances/fd33baec-51de-4952-b194-8a51149d8a4f/disk from (pid=4228) execute /usr/local/lib/python2.7/dist-packages/oslo_concurrency/processutils.py:203

2015-05-31 01:10:10.756 DEBUG oslo_concurrency.processutils [req-19629779-4253-4213-b4cb-7033a4817e2a None None] CMD "env LC_ALL=C LANG=C qemu-img info /instances/fd33baec-51de-4952-b194-8a51149d8a4f/disk" returned: 0 in 0.030s from (pid=4228) execute /usr/local/lib/python2.7/dist-packages/oslo_concurrency/processutils.py:229

2015-05-31 01:10:10.757 DEBUG oslo_concurrency.processutils [req-19629779-4253-4213-b4cb-7033a4817e2a None None] Running cmd (subprocess): env LC_ALL=C LANG=C qemu-img info /instances/fd33baec-51de-4952-b194-8a51149d8a4f/disk from (pid=4228) execute /usr/local/lib/python2.7/dist-