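GoBGP has a built-in mechanism for exporting information to InfluxDB.

In this article, I try out the InfluxDB integration with the aim of automating GoBGP operational monitoring.

All it takes is adding the InfluxDB address and the database name to the GoBGP configuration file, so the integration is very easy to try.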

[collector.config]

url = "http://xxx.xxx.xxx.xxx:8086"

db-name = "gobgp"
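Before starting gobgpd, it can help to confirm that the collector settings are at least well-formed. The following is a minimal sketch, not part of GoBGP: the helper names `collector_settings` and `check_collector` are hypothetical, the address is the one used later in this article, and the line-based parsing only handles simple `key = "value"` pairs (a real TOML parser would be more robust).

```python
import re
from urllib.parse import urlparse

# Sample fragment; the address is the one used later in this article.
SAMPLE = '''
[collector.config]
url = "http://192.168.200.100:8086"
db-name = "gobgp"
'''


def collector_settings(config_text):
    """Return the quoted key/value pairs found under [collector.config]."""
    settings = {}
    in_section = False
    for raw in config_text.splitlines():
        line = raw.strip()
        if line.startswith("["):
            # Any table header switches the section we are in.
            in_section = (line == "[collector.config]")
            continue
        match = re.match(r'^([\w-]+)\s*=\s*"([^"]*)"$', line)
        if in_section and match:
            settings[match.group(1)] = match.group(2)
    return settings


def check_collector(settings):
    """Raise ValueError if the URL or database name looks unusable."""
    parsed = urlparse(settings.get("url", ""))
    if parsed.scheme not in ("http", "https") or not parsed.hostname:
        raise ValueError("collector url must be an http(s) URL")
    if not settings.get("db-name"):
        raise ValueError("db-name must not be empty")
    return parsed.hostname, parsed.port


settings = collector_settings(SAMPLE)
print(check_collector(settings))  # ('192.168.200.100', 8086)
```

This only checks the shape of the settings; it does not contact InfluxDB.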

⬛︎ Preparing the BGP monitoring environment

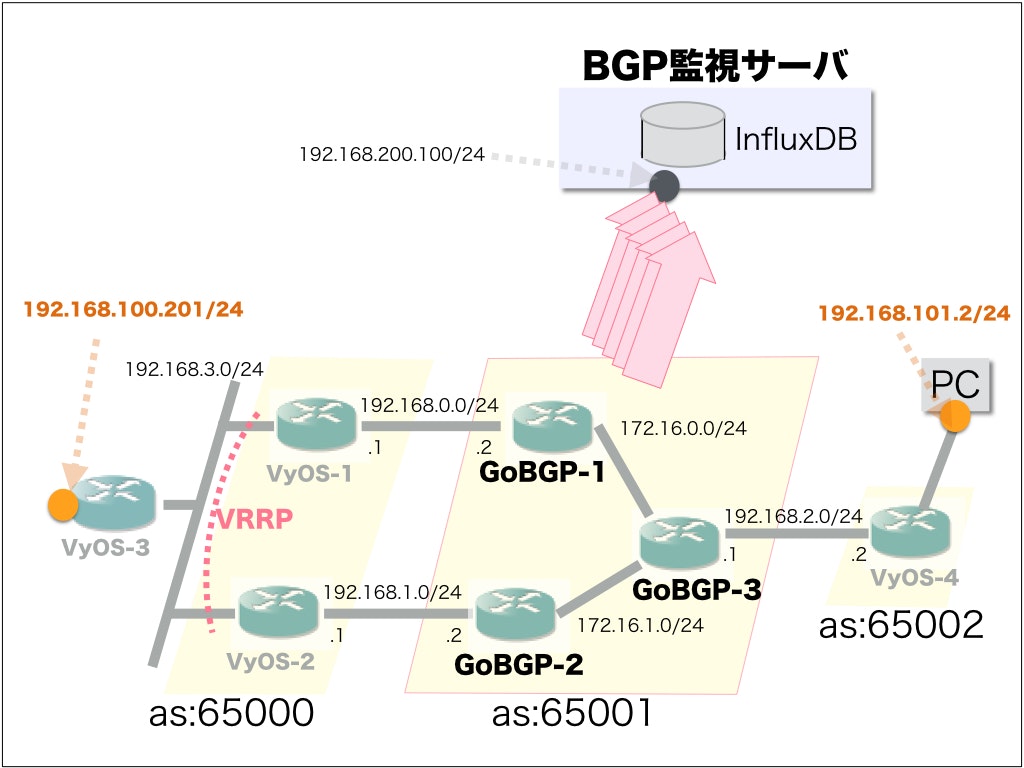

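The environment for verifying the GoBGP <-> InfluxDB integration is laid out as shown in the figure.

This time I prepared an environment modeled on a production BGP deployment.

Depending on how much time and effort you can spare, you can make the BGP topology simpler.

(1) Preparing the InfluxDB environment

See the earlier Qiita article "golangで、時系列データベース「InfluxDB」を試してみる" (trying out the time-series database InfluxDB with golang).

(2) Preparing the GoBGP environment

The steps below describe how to build the "GoBGP-1" side.

- Configure the addresses of the Linux network interfaces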

$ sudo vi /etc/network/interfaces

...(snip)

auto eth1

iface eth1 inet static

address 172.16.0.2

netmask 255.255.255.0

auto eth2

iface eth2 inet static

address 172.16.1.2

netmask 255.255.255.0

...(snip)

auto eth4

iface eth4 inet static

address 192.168.200.105

netmask 255.255.255.0

- Install golang

$ vi $HOME/.profile

...(snip)

export GOPATH=$HOME/golang

export PATH=$GOPATH/bin:/usr/local/go/bin:$PATH

$ wget --no-check-certificate https://storage.googleapis.com/golang/go1.6.2.linux-amd64.tar.gz

$ sudo tar -C /usr/local -xzf go1.6.2.linux-amd64.tar.gz

$ mkdir $HOME/golang

$ source $HOME/.profile

$ go version

go version go1.6.2 linux/amd64

- Install GoBGP

$ sudo apt-get update

$ sudo apt-get install git

$ go get github.com/osrg/gobgp/gobgpd

$ go get github.com/osrg/gobgp/gobgp

- Prepare the GoBGP configuration file

[global]

[global.config]

as = 65001

router-id = "10.0.1.3"

[global.apply-policy.config]

export-policy-list = ["policy1"]

[zebra]

[zebra.config]

enabled = true

url = "unix:/var/run/quagga/zserv.api"

[collector.config]

url = "http://192.168.200.100:8086"

db-name = "gobgp"

[[neighbors]]

[neighbors.config]

peer-type = "internal"

neighbor-address = "172.16.0.1"

peer-as = 65001

local-as = 65001

[[neighbors]]

[neighbors.config]

peer-type = "internal"

neighbor-address = "172.16.1.1"

peer-as = 65001

local-as = 65001

[[neighbors]]

[neighbors.config]

peer-type = "external"

neighbor-address = "192.168.2.2"

peer-as = 65002

local-as = 65001

[[policy-definitions]]

name = "policy1"

[[policy-definitions.statements]]

name = "statement1"

[policy-definitions.statements.actions.bgp-actions]

set-next-hop = "self"

[policy-definitions.statements.actions.route-disposition]

accept-route = true

- GoBGPのオプション機能"FIB manipulation"を活用して、BGPルータ動作を試してみるを参考にして、zebra環境を準備します

(3) VyOS環境の準備

VyOSユーザガイドあたりのドキュメントを参考にして、構築していきます。

⬛︎ GoBGP運用監視(平常運用)の自動化を試してみる

まずは、平常運用での状態監視から...

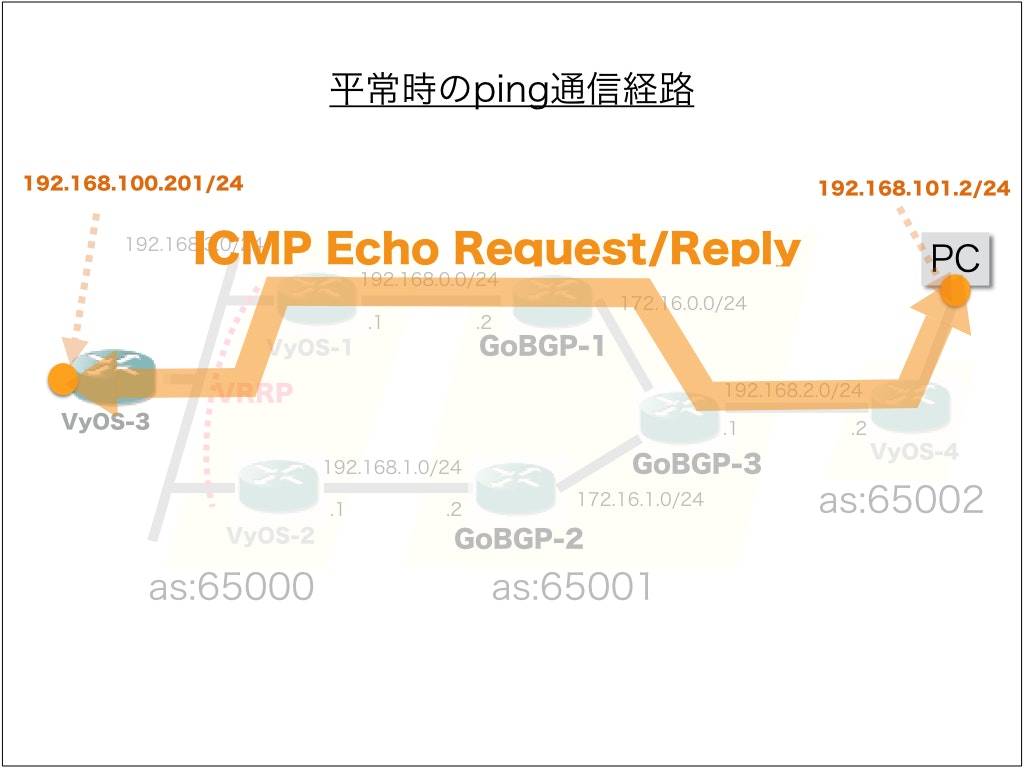

実際、エンドエンドでPingを実施してみると、次のような通信経路で、ICMP Echo Request/Replyのやり取りが行われます。この時の、BGP動作の様子をInfluxDBで確認してみます。

(1) GoBGP側の作業手順

- GoBGPを起動します。InfluxDBに"gobgp"データベースが作成されます。

$ cd $GOPATH/bin

$ sudo ./gobgpd -f gobgpd.conf -l debug -p

... (snip)

INFO[0000] Peer 192.168.0.1 is added

INFO[0000] Add a peer configuration for 192.168.0.1

INFO[0000] Peer 172.16.0.2 is added

INFO[0000] Add a peer configuration for 172.16.0.2

DEBU[0000] IdleHoldTimer expired Duration=0 Key=192.168.0.1 Topic=Peer

DEBU[0000] state changed Key=192.168.0.1 Topic=Peer new=BGP_FSM_ACTIVE old=BGP_FSM_IDLE reason=idle-hold-timer-expired

DEBU[0000] IdleHoldTimer expired Duration=0 Key=172.16.0.2 Topic=Peer

DEBU[0000] state changed Key=172.16.0.2 Topic=Peer new=BGP_FSM_ACTIVE old=BGP_FSM_IDLE reason=idle-hold-timer-expired

DEBU[0010] state changed Key=192.168.0.1 Topic=Peer new=BGP_FSM_OPENSENT old=BGP_FSM_ACTIVE reason=new-connection

DEBU[0010] state changed Key=192.168.0.1 Topic=Peer new=BGP_FSM_OPENCONFIRM old=BGP_FSM_OPENSENT reason=open-msg-received

INFO[0010] Peer Up Key=192.168.0.1 State=BGP_FSM_OPENCONFIRM Topic=Peer

DEBU[0010] state changed Key=192.168.0.1 Topic=Peer new=BGP_FSM_ESTABLISHED old=BGP_FSM_OPENCONFIRM reason=open-msg-negotiated

DEBU[0011] received update Key=192.168.0.1 Topic=Peer attributes=[{Origin: ?} 65000 {Nexthop: 192.168.0.1} {Med: 0}] nlri=[192.168.100.0/24] withdrawals=[]

DEBU[0011] create Destination Key=192.168.100.0/24 Topic=Table

DEBU[0011] Processing destination Key=192.168.100.0/24 Topic=table

DEBU[0011] computeKnownBestPath known pathlist: 1

DEBU[0011] From me, ignore. Data={ 192.168.100.0/24 | src: { 192.168.0.1 | as: 65000, id: 10.0.0.1 }, nh: 192.168.0.1 } Key=192.168.0.1 Topic=Peer

DEBU[0011] send command to zebra Body=type: ROUTE_BGP, flags: , message: 9, prefix: 192.168.100.0, length: 24, nexthop: 192.168.0.1, distance: 0, metric: 0 Header={Len:6 Marker:255 Version:2 Command:IPV4_ROUTE_ADD} Topic=Zebra

DEBU[0018] state changed Key=172.16.0.2 Topic=Peer new=BGP_FSM_OPENSENT old=BGP_FSM_ACTIVE reason=new-connection

DEBU[0018] state changed Key=172.16.0.2 Topic=Peer new=BGP_FSM_OPENCONFIRM old=BGP_FSM_OPENSENT reason=open-msg-received

INFO[0018] Peer Up Key=172.16.0.2 State=BGP_FSM_OPENCONFIRM Topic=Peer

DEBU[0018] state changed Key=172.16.0.2 Topic=Peer new=BGP_FSM_ESTABLISHED old=BGP_FSM_OPENCONFIRM reason=open-msg-negotiated

DEBU[0018] sent update Key=172.16.0.2 State=BGP_FSM_ESTABLISHED Topic=Peer attributes=[{Origin: ?} 65000 {Nexthop: 172.16.0.1} {Med: 0} {LocalPref: 100}] nlri=[192.168.100.0/24] withdrawals=[]

DEBU[0018] received update Key=172.16.0.2 Topic=Peer attributes=[{Origin: i} 65002 {Nexthop: 172.16.0.2} {Med: 1} {LocalPref: 100}] nlri=[192.168.101.0/24] withdrawals=[]

DEBU[0018] create Destination Key=192.168.101.0/24 Topic=Table

DEBU[0018] Processing destination Key=192.168.101.0/24 Topic=table

DEBU[0018] computeKnownBestPath known pathlist: 1

DEBU[0018] From same AS, ignore. Data={ 192.168.101.0/24 | src: { 172.16.0.2 | as: 65001, id: 10.0.1.3 }, nh: 172.16.0.2 } Key=172.16.0.2 Topic=Peer

DEBU[0018] sent update Key=192.168.0.1 State=BGP_FSM_ESTABLISHED Topic=Peer attributes=[{Origin: i} 65001 65002 {Nexthop: 192.168.0.2}] nlri=[192.168.101.0/24] withdrawals=[]

DEBU[0018] send command to zebra Body=type: ROUTE_BGP, flags: , message: 9, prefix: 192.168.101.0, length: 24, nexthop: 172.16.0.2, distance: 0, metric: 1 Header={Len:6 Marker:255 Version:2 Command:IPV4_ROUTE_ADD} Topic=Zebra

DEBU[0040] sent Key=192.168.0.1 State=BGP_FSM_ESTABLISHED Topic=Peer data=&{Header:{Marker:[] Len:19 Type:4} Body:0x1236d98}

DEBU[0048] sent Key=172.16.0.2 State=BGP_FSM_ESTABLISHED Topic=Peer data=&{Header:{Marker:[] Len:19 Type:4} Body:0x1236d98}

- After a while, run ping end to end

$ ping 192.168.100.201

PING 192.168.100.201 (192.168.100.201) 56(84) bytes of data.

64 bytes from 192.168.100.201: icmp_seq=1 ttl=60 time=1.92 ms

64 bytes from 192.168.100.201: icmp_seq=2 ttl=60 time=2.00 ms

64 bytes from 192.168.100.201: icmp_seq=3 ttl=60 time=2.00 ms

64 bytes from 192.168.100.201: icmp_seq=4 ttl=60 time=1.96 ms

64 bytes from 192.168.100.201: icmp_seq=5 ttl=60 time=2.00 ms

^C

--- 192.168.100.201 ping statistics ---

5 packets transmitted, 5 received, 0% packet loss, time 4008ms

rtt min/avg/max/mdev = 1.926/1.979/2.004/0.057 ms

(2) Steps on the InfluxDB side

- In the influx CLI, confirm that the "gobgp" database has been created

$ influx

Visit https://enterprise.influxdata.com to register for updates, InfluxDB server management, and monitoring.

Connected to http://localhost:8086 version 0.13.0

InfluxDB shell version: 0.13.0

> SHOW DATABASES

name: databases

---------------

name

_internal

mydb

systemstats

gobgp

- Check what kind of data the "gobgp" database holds

> USE gobgp

Using database gobgp

> precision rfc3339

> SHOW SERIES

key

peer,PeerAS=65000,PeerAddress=192.168.0.1,State=Established

peer,PeerAS=65000,PeerAddress=192.168.1.1,State=Established

peer,PeerAS=65001,PeerAddress=172.16.0.1,State=Established

peer,PeerAS=65001,PeerAddress=172.16.0.2,State=Established

peer,PeerAS=65001,PeerAddress=172.16.1.1,State=Established

peer,PeerAS=65001,PeerAddress=172.16.1.2,State=Established

peer,PeerAS=65002,PeerAddress=192.168.2.2,State=Established

update,PeerAS=65000,PeerAddress=192.168.0.1,Prefix=192.168.100.0,PrefixLen=24,Timestamp=2016-07-23\ 10:01:59.164095163\ +0900\ JST,Withdraw=false

update,PeerAS=65000,PeerAddress=192.168.1.1,Prefix=192.168.100.0,PrefixLen=24,Timestamp=2016-07-23\ 10:01:52.187386753\ +0900\ JST,Withdraw=false

update,PeerAS=65001,PeerAddress=172.16.0.1,Prefix=192.168.100.0,PrefixLen=24,Timestamp=2016-07-23\ 10:02:06.160201193\ +0900\ JST,Withdraw=false

update,PeerAS=65001,PeerAddress=172.16.0.2,Prefix=192.168.101.0,PrefixLen=24,Timestamp=2016-07-23\ 10:02:06.16290857\ +0900\ JST,Withdraw=false

update,PeerAS=65001,PeerAddress=172.16.1.1,Prefix=192.168.100.0,PrefixLen=24,Timestamp=2016-07-23\ 10:01:52.046672364\ +0900\ JST,Withdraw=false

update,PeerAS=65001,PeerAddress=172.16.1.2,Prefix=192.168.101.0,PrefixLen=24,Timestamp=2016-07-23\ 10:01:46.446475225\ +0900\ JST,Withdraw=false

update,PeerAS=65002,PeerAddress=192.168.2.2,Prefix=192.168.101.0,PrefixLen=24,Timestamp=2016-07-23\ 10:01:46.30349535\ +0900\ JST,Withdraw=false

- First, as part of GoBGP monitoring, check the peer information held by GoBGP

> SELECT * FROM peer

name: peer

----------

time PeerAS PeerAddress PeerID State

2016-07-23T01:01:44.299Z 65001 172.16.1.1 10.0.1.2 Established

2016-07-23T01:01:44.44Z 65001 172.16.1.2 10.0.1.3 Established

2016-07-23T01:01:45.3Z 65002 192.168.2.2 10.0.0.4 Established

2016-07-23T01:01:51.185Z 65000 192.168.1.1 10.0.0.2 Established

2016-07-23T01:01:58.163Z 65000 192.168.0.1 10.0.0.1 Established

2016-07-23T01:02:06.159Z 65001 172.16.0.1 10.0.1.1 Established

2016-07-23T01:02:06.162Z 65001 172.16.0.2 10.0.1.3 Established

>

- Next, check the BGP UPDATE information that GoBGP has exchanged so far

> SELECT * FROM update

name: update

------------

time ASPath Med NextHop Origin OriginAS PeerAS PeerAddress Prefix PrefixLen RouterID Timestamp Withdraw

2016-07-23T01:01:46.303Z 65002 1 192.168.2.2 i 65002 65002 192.168.2.2 192.168.101.0 24 10.0.0.4 2016-07-23 10:01:46.30349535 +0900 JST false

2016-07-23T01:01:46.447Z 65002 1 172.16.1.2 i 65002 65001 172.16.1.2 192.168.101.0 24 10.0.1.3 2016-07-23 10:01:46.446475225 +0900 JST false

2016-07-23T01:01:52.047Z 65000 0 172.16.1.1 ? 65000 65001 172.16.1.1 192.168.100.0 24 10.0.1.2 2016-07-23 10:01:52.046672364 +0900 JST false

2016-07-23T01:01:52.188Z 65000 0 192.168.1.1 ? 65000 65000 192.168.1.1 192.168.100.0 24 10.0.0.2 2016-07-23 10:01:52.187386753 +0900 JST false

2016-07-23T01:01:59.165Z 65000 0 192.168.0.1 ? 65000 65000 192.168.0.1 192.168.100.0 24 10.0.0.1 2016-07-23 10:01:59.164095163 +0900 JST false

2016-07-23T01:02:06.164Z 65000 0 172.16.0.1 ? 65000 65001 172.16.0.1 192.168.100.0 24 10.0.1.1 2016-07-23 10:02:06.160201193 +0900 JST false

2016-07-23T01:02:06.165Z 65002 1 172.16.0.2 i 65002 65001 172.16.0.2 192.168.101.0 24 10.0.1.3 2016-07-23 10:02:06.16290857 +0900 JST false

>
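The peer check above could also be scripted rather than run by hand in the CLI. The sketch below assumes the query result is fetched as JSON from InfluxDB's HTTP `/query` endpoint in the usual `results` / `series` / `columns` / `values` layout; the sample payload is hand-built from the CLI output above, and the helper names `series_to_rows` and `peers_not_established` are hypothetical.

```python
import json

# Hand-built sample shaped like an InfluxDB /query JSON response;
# the values are copied from the CLI output above.
SAMPLE_RESPONSE = json.dumps({
    "results": [{
        "series": [{
            "name": "peer",
            "columns": ["time", "PeerAS", "PeerAddress", "PeerID", "State"],
            "values": [
                ["2016-07-23T01:01:44.299Z", "65001", "172.16.1.1", "10.0.1.2", "Established"],
                ["2016-07-23T01:01:45.3Z", "65002", "192.168.2.2", "10.0.0.4", "Established"],
                ["2016-07-23T01:01:58.163Z", "65000", "192.168.0.1", "10.0.0.1", "Established"],
            ],
        }]
    }]
})


def series_to_rows(response_text, measurement):
    """Flatten one measurement of a /query response into a list of dicts."""
    payload = json.loads(response_text)
    rows = []
    for result in payload.get("results", []):
        for series in result.get("series", []):
            if series.get("name") != measurement:
                continue
            columns = series["columns"]
            for values in series["values"]:
                rows.append(dict(zip(columns, values)))
    return rows


def peers_not_established(rows):
    """Return the addresses of rows whose State is not Established."""
    return [row["PeerAddress"] for row in rows if row["State"] != "Established"]


rows = series_to_rows(SAMPLE_RESPONSE, "peer")
print(len(rows), peers_not_established(rows))  # 3 []
```

During normal operation the second value stays empty, which is the condition a monitoring job would alert on when it changes.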

⬛︎ GoBGP運用監視(BGP障害時)の自動化を試してみる

つづいて、BGP経路迂回時の運用監視 ...

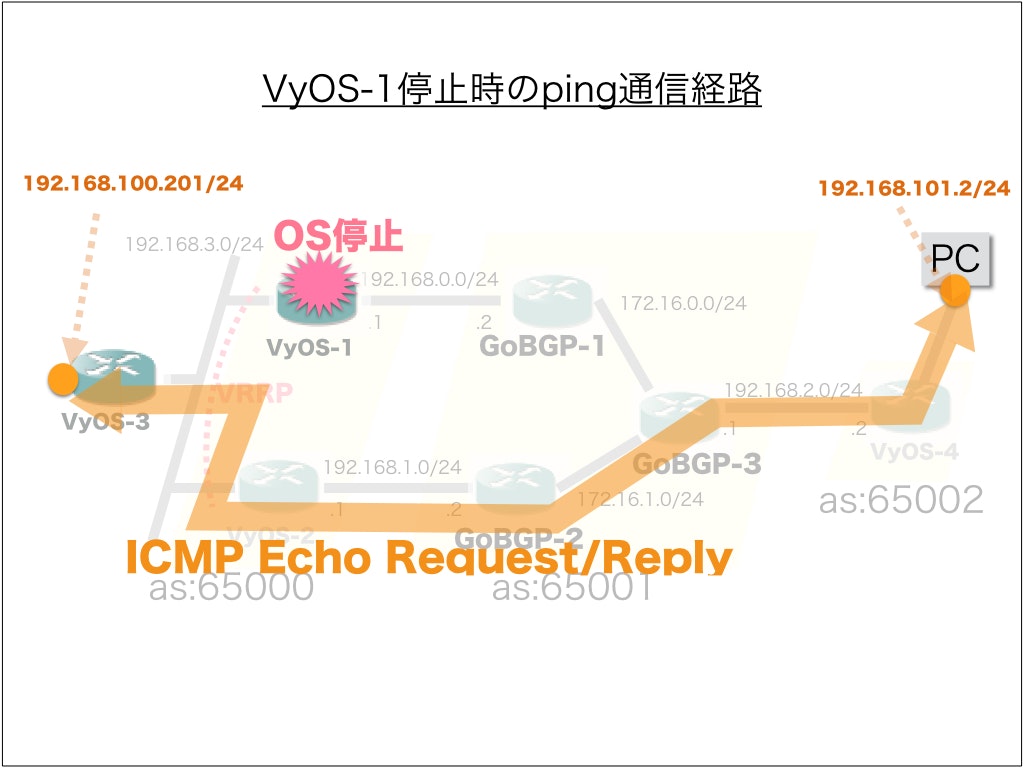

エンドエンドでpingが正しく動作している状態において、VyOS-1側にて、OS停止してみます。

(1) BGP障害発生させる

-

VyOS-1側でOS停止します

-

すると、エンドエンドping動作で、icmp_seq=10が欠損しているようです。BGP経路迂回に伴い、1パケットのロストで回復している様子が確認できました。

$ ping 192.168.100.201

PING 192.168.100.201 (192.168.100.201) 56(84) bytes of data.

64 bytes from 192.168.100.201: icmp_seq=1 ttl=60 time=1.72 ms

64 bytes from 192.168.100.201: icmp_seq=2 ttl=60 time=2.08 ms

64 bytes from 192.168.100.201: icmp_seq=3 ttl=60 time=2.18 ms

64 bytes from 192.168.100.201: icmp_seq=4 ttl=60 time=1.26 ms

64 bytes from 192.168.100.201: icmp_seq=5 ttl=60 time=2.11 ms

64 bytes from 192.168.100.201: icmp_seq=6 ttl=60 time=1.86 ms

64 bytes from 192.168.100.201: icmp_seq=7 ttl=60 time=2.11 ms

64 bytes from 192.168.100.201: icmp_seq=8 ttl=60 time=2.79 ms

64 bytes from 192.168.100.201: icmp_seq=9 ttl=60 time=2.12 ms

64 bytes from 192.168.100.201: icmp_seq=11 ttl=60 time=1.27 ms

64 bytes from 192.168.100.201: icmp_seq=12 ttl=60 time=1.84 ms

64 bytes from 192.168.100.201: icmp_seq=13 ttl=60 time=2.03 ms

64 bytes from 192.168.100.201: icmp_seq=14 ttl=60 time=12.9 ms

64 bytes from 192.168.100.201: icmp_seq=15 ttl=60 time=2.06 ms

64 bytes from 192.168.100.201: icmp_seq=16 ttl=60 time=1.80 ms

64 bytes from 192.168.100.201: icmp_seq=17 ttl=60 time=3.09 ms

64 bytes from 192.168.100.201: icmp_seq=18 ttl=60 time=1.88 ms

64 bytes from 192.168.100.201: icmp_seq=19 ttl=60 time=2.33 ms

64 bytes from 192.168.100.201: icmp_seq=20 ttl=60 time=2.04 ms

64 bytes from 192.168.100.201: icmp_seq=21 ttl=60 time=2.69 ms

64 bytes from 192.168.100.201: icmp_seq=22 ttl=60 time=3.90 ms

64 bytes from 192.168.100.201: icmp_seq=23 ttl=60 time=2.67 ms

64 bytes from 192.168.100.201: icmp_seq=24 ttl=60 time=1.98 ms

64 bytes from 192.168.100.201: icmp_seq=25 ttl=60 time=2.71 ms

64 bytes from 192.168.100.201: icmp_seq=26 ttl=60 time=2.02 ms

64 bytes from 192.168.100.201: icmp_seq=27 ttl=60 time=2.60 ms

64 bytes from 192.168.100.201: icmp_seq=28 ttl=60 time=2.00 ms

64 bytes from 192.168.100.201: icmp_seq=29 ttl=60 time=1.96 ms

64 bytes from 192.168.100.201: icmp_seq=30 ttl=60 time=2.05 ms

64 bytes from 192.168.100.201: icmp_seq=31 ttl=60 time=1.93 ms

64 bytes from 192.168.100.201: icmp_seq=32 ttl=60 time=2.06 ms

64 bytes from 192.168.100.201: icmp_seq=33 ttl=60 time=1.95 ms

64 bytes from 192.168.100.201: icmp_seq=34 ttl=60 time=1.77 ms

64 bytes from 192.168.100.201: icmp_seq=35 ttl=60 time=2.01 ms

64 bytes from 192.168.100.201: icmp_seq=36 ttl=60 time=2.05 ms

64 bytes from 192.168.100.201: icmp_seq=37 ttl=60 time=1.99 ms

64 bytes from 192.168.100.201: icmp_seq=38 ttl=60 time=1.98 ms

64 bytes from 192.168.100.201: icmp_seq=39 ttl=60 time=1.64 ms

64 bytes from 192.168.100.201: icmp_seq=40 ttl=60 time=1.97 ms

64 bytes from 192.168.100.201: icmp_seq=41 ttl=60 time=1.83 ms

64 bytes from 192.168.100.201: icmp_seq=42 ttl=60 time=2.01 ms

64 bytes from 192.168.100.201: icmp_seq=43 ttl=60 time=1.62 ms

64 bytes from 192.168.100.201: icmp_seq=44 ttl=60 time=2.03 ms

64 bytes from 192.168.100.201: icmp_seq=45 ttl=60 time=2.30 ms

^C

--- 192.168.100.201 ping statistics ---

45 packets transmitted, 44 received, 2% packet loss, time 44108ms

rtt min/avg/max/mdev = 1.264/2.349/12.901/1.669 ms
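Spotting the gap by eye works for a short run like this one; for longer runs a small script can list the missing sequence numbers. This is a minimal sketch: the helper name `missing_sequences` is hypothetical, and the sample is an abbreviated stand-in for the output above (same sequence numbers, placeholder round-trip times).

```python
import re


def missing_sequences(ping_output):
    """Return icmp_seq numbers absent between the first and last reply."""
    seen = sorted(int(n) for n in re.findall(r"icmp_seq=(\d+)", ping_output))
    if not seen:
        return []
    received = set(seen)
    return [n for n in range(seen[0], seen[-1] + 1) if n not in received]


# Stand-in for the ping output above: replies 1-9 and 11-45.
sample = "\n".join(
    f"64 bytes from 192.168.100.201: icmp_seq={n} ttl=60 time=2.00 ms"
    for n in list(range(1, 10)) + list(range(11, 46))
)
print(missing_sequences(sample))  # [10]
```

Note that this only detects gaps between received replies; packets lost at the very start or end of a run would need the transmitted count from the statistics line.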

(2) Post-incident check on GoBGP-1

- The GoBGP-1 log shows that it received a BGP NOTIFICATION message from VyOS-1, immediately tore down the BGP peer, and sent a BGP UPDATE message [withdraw] to GoBGP-3 to withdraw the prefix "192.168.100.0/24".

WARN[1261] received notification Code=6 Data=[] Key=192.168.0.1 Subcode=3 Topic=Peer

INFO[1261] Peer Down Key=192.168.0.1 Reason=notification-received code 6(cease) subcode 3(peer deconfigured) State=BGP_FSM_ESTABLISHED Topic=Peer

DEBU[1261] state changed Key=192.168.0.1 Topic=Peer new=BGP_FSM_IDLE old=BGP_FSM_ESTABLISHED reason=notification-received code 6(cease) subcode 3(peer deconfigured)

DEBU[1261] Processing destination Key=192.168.100.0/24 Topic=table

DEBU[1261] Removing withdrawals Key=192.168.100.0/24 Length=1 Topic=Table

DEBU[1261] sent update Key=172.16.0.2 State=BGP_FSM_ESTABLISHED Topic=Peer attributes=[] nlri=[] withdrawals=[192.168.100.0/24]

DEBU[1261] send command to zebra Body=type: ROUTE_BGP, flags: , message: 9, prefix: 192.168.100.0, length: 24, nexthop: 192.168.0.1, distance: 0, metric: 0 Header={Len:6 Marker:255 Version:2 Command:IPV4_ROUTE_DELETE} Topic=Zebra

DEBU[1266] IdleHoldTimer expired Duration=5 Key=192.168.0.1 Topic=Peer

DEBU[1266] state changed Key=192.168.0.1 Topic=Peer new=BGP_FSM_ACTIVE old=BGP_FSM_IDLE reason=idle-hold-timer-expired

DEBU[1278] sent Key=172.16.0.2 State=BGP_FSM_ESTABLISHED Topic=Peer data=&{Header:{Marker:[] Len:19 Type:4} Body:0x1236d98}

DEBU[1286] failed to connect: dial tcp 0.0.0.0:0->192.168.0.1:179: i/o timeout Key=192.168.0.1 Topic=Peer
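The same sequence of events can be pulled out of the debug log mechanically. The sketch below assumes the "state changed" lines keep the field order seen in the log above (`Key`, `Topic`, `new`, `old`, `reason`); the pattern and the helper name `state_changes` are my own and not part of GoBGP.

```python
import re

# Matches the "state changed" lines as they appear in the log above.
STATE_CHANGE = re.compile(
    r"state changed Key=(?P<peer>\S+) Topic=Peer "
    r"new=(?P<new>\S+) old=(?P<old>\S+) reason=(?P<reason>.*)$"
)


def state_changes(log_text):
    """Return (peer, old_state, new_state, reason) for each transition."""
    changes = []
    for line in log_text.splitlines():
        match = STATE_CHANGE.search(line)
        if match:
            changes.append((
                match.group("peer"),
                match.group("old"),
                match.group("new"),
                match.group("reason").strip(),
            ))
    return changes


# Three lines copied from the log above.
LOG = """\
WARN[1261] received notification Code=6 Data=[] Key=192.168.0.1 Subcode=3 Topic=Peer
DEBU[1261] state changed Key=192.168.0.1 Topic=Peer new=BGP_FSM_IDLE old=BGP_FSM_ESTABLISHED reason=notification-received code 6(cease) subcode 3(peer deconfigured)
DEBU[1266] state changed Key=192.168.0.1 Topic=Peer new=BGP_FSM_ACTIVE old=BGP_FSM_IDLE reason=idle-hold-timer-expired
"""

changes = state_changes(LOG)
drops = [c for c in changes if c[1] == "BGP_FSM_ESTABLISHED"]
print(drops[0][0], drops[0][2])  # 192.168.0.1 BGP_FSM_IDLE
```

Filtering on transitions that leave `BGP_FSM_ESTABLISHED` isolates the moment the session dropped, together with the reason reported by the peer.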

(3) Post-incident check on InfluxDB

- Looking up the GoBGP peer information from the InfluxDB CLI confirms that the BGP peer on GoBGP-1 went down at "2016-07-23T01:22:49(UTC)".

> SELECT * FROM peer

name: peer

----------

time PeerAS PeerAddress PeerID State

2016-07-23T01:01:44.299Z 65001 172.16.1.1 10.0.1.2 Established

2016-07-23T01:01:44.44Z 65001 172.16.1.2 10.0.1.3 Established

2016-07-23T01:01:45.3Z 65002 192.168.2.2 10.0.0.4 Established

2016-07-23T01:01:51.185Z 65000 192.168.1.1 10.0.0.2 Established

2016-07-23T01:01:58.163Z 65000 192.168.0.1 10.0.0.1 Established

2016-07-23T01:02:06.159Z 65001 172.16.0.1 10.0.1.1 Established

2016-07-23T01:02:06.162Z 65001 172.16.0.2 10.0.1.3 Established

2016-07-23T01:22:49.214Z 65000 192.168.0.1 10.0.0.1 Idle

>

- Looking further at the history of BGP UPDATE messages from the InfluxDB CLI confirms that GoBGP-3 received a BGP UPDATE [withdraw] at "2016-07-23T01:22:49(UTC)".

> SELECT * FROM update

name: update

------------

time ASPath Med NextHop Origin OriginAS PeerAS PeerAddress Prefix PrefixLen RouterID Timestamp Withdraw

2016-07-23T01:01:46.303Z 65002 1 192.168.2.2 i 65002 65002 192.168.2.2 192.168.101.0 24 10.0.0.4 2016-07-23 10:01:46.30349535 +0900 JST false

2016-07-23T01:01:46.447Z 65002 1 172.16.1.2 i 65002 65001 172.16.1.2 192.168.101.0 24 10.0.1.3 2016-07-23 10:01:46.446475225 +0900 JST false

2016-07-23T01:01:52.047Z 65000 0 172.16.1.1 ? 65000 65001 172.16.1.1 192.168.100.0 24 10.0.1.2 2016-07-23 10:01:52.046672364 +0900 JST false

2016-07-23T01:01:52.188Z 65000 0 192.168.1.1 ? 65000 65000 192.168.1.1 192.168.100.0 24 10.0.0.2 2016-07-23 10:01:52.187386753 +0900 JST false

2016-07-23T01:01:59.165Z 65000 0 192.168.0.1 ? 65000 65000 192.168.0.1 192.168.100.0 24 10.0.0.1 2016-07-23 10:01:59.164095163 +0900 JST false

2016-07-23T01:02:06.164Z 65000 0 172.16.0.1 ? 65000 65001 172.16.0.1 192.168.100.0 24 10.0.1.1 2016-07-23 10:02:06.160201193 +0900 JST false

2016-07-23T01:02:06.165Z 65002 1 172.16.0.2 i 65002 65001 172.16.0.2 192.168.101.0 24 10.0.1.3 2016-07-23 10:02:06.16290857 +0900 JST false

2016-07-23T01:22:49.212Z 65001 172.16.0.1 192.168.100.0 24 10.0.1.1 2016-07-23 10:22:49.212085213 +0900 JST true

>
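Both findings, the peer that went down and the prefix that was withdrawn, can be derived from the stored rows without reading the tables by eye. This is a rough sketch under a few assumptions: the rows are already available as dictionaries keyed by the column names shown above, they arrive in ascending time order (as in the CLI output), and `Withdraw` is stored as the string "true" or "false". The helper names are hypothetical, and the sample rows are a subset copied from the tables above.

```python
# A subset of the rows shown above, keyed by column name.
PEER_ROWS = [
    {"time": "2016-07-23T01:01:58.163Z", "PeerAddress": "192.168.0.1", "State": "Established"},
    {"time": "2016-07-23T01:02:06.162Z", "PeerAddress": "172.16.0.2", "State": "Established"},
    {"time": "2016-07-23T01:22:49.214Z", "PeerAddress": "192.168.0.1", "State": "Idle"},
]
UPDATE_ROWS = [
    {"time": "2016-07-23T01:02:06.164Z", "PeerAddress": "172.16.0.1",
     "Prefix": "192.168.100.0", "PrefixLen": "24", "Withdraw": "false"},
    {"time": "2016-07-23T01:22:49.212Z", "PeerAddress": "172.16.0.1",
     "Prefix": "192.168.100.0", "PrefixLen": "24", "Withdraw": "true"},
]


def latest_peer_states(peer_rows):
    """Map each peer address to its most recent state.

    Assumes the rows are in ascending time order, so later rows win.
    """
    latest = {}
    for row in peer_rows:
        latest[row["PeerAddress"]] = row["State"]
    return latest


def down_peers(peer_rows):
    """Return peers whose most recent state is not Established."""
    states = latest_peer_states(peer_rows)
    return sorted(addr for addr, state in states.items() if state != "Established")


def withdrawn_prefixes(update_rows):
    """Return prefixes (in CIDR form) that appear in withdraw rows."""
    return [
        f'{row["Prefix"]}/{row["PrefixLen"]}'
        for row in update_rows
        if row["Withdraw"] == "true"
    ]


print(down_peers(PEER_ROWS))            # ['192.168.0.1']
print(withdrawn_prefixes(UPDATE_ROWS))  # ['192.168.100.0/24']
```

Taking the latest state per peer matters because the `peer` measurement keeps the earlier Established rows alongside the new Idle row.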

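⬛︎ Closing thoughts

The GoBGP monitoring configuration was very simple. Even so, I confirmed that BGP monitoring data can be stored centrally and as a time series.

The BGP monitoring data is stored as is, in the form of BGP UPDATE messages, which I felt gives a BGP operator all the information they need.

I also came away feeling that, by building logic that pulls the right aggregation and analysis queries for GoBGP monitoring out of the BGP monitoring data stored in InfluxDB, a very powerful automated BGP monitoring setup is within reach.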
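As one possible starting point for such query logic, the sketch below builds the URL for a query against InfluxDB's HTTP `/query` endpoint, which takes the database in `db` and the statement in `q`. The helper name `build_query_url` is hypothetical, the address is the one from the configuration file above, and the query assumes `Withdraw` is a tag holding the string "true", as the `SHOW SERIES` output suggests.

```python
from urllib.parse import parse_qs, urlencode, urlsplit


def build_query_url(base_url, database, influxql):
    """Return the /query URL for running one InfluxQL statement."""
    params = urlencode({"db": database, "q": influxql})
    return base_url.rstrip("/") + "/query?" + params


# Assumption: Withdraw is a tag, so it is compared against a string.
WITHDRAWALS = 'SELECT * FROM "update" WHERE "Withdraw" = \'true\''

url = build_query_url("http://192.168.200.100:8086", "gobgp", WITHDRAWALS)
print(url)

# Round-trip check: the statement survives URL encoding unchanged.
query = parse_qs(urlsplit(url).query)
print(query["q"][0] == WITHDRAWALS)  # True
```

A scheduled job could fetch this URL and raise an alert whenever the result contains new rows.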

⬛︎ Addendum (2018.11.9)

The InfluxDB integration in GoBGP appears to be no longer available.

server: remove collector support