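I wanted to try building a chatbot with ChatGPT, which has been getting a lot of attention lately.

This article is a memo of how I got it running with Docker.

This is my first time using LlamaIndex and Streamlit, so some of the explanations may be rough.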

Frameworks and libraries used

These are the main frameworks and libraries used this time. Plenty of people have already written about them, so I will skip the explanations.

Streamlit

https://streamlit.io/

LlamaIndex

https://www.llamaindex.ai/

LangChain

https://www.langchain.com/

Faiss

https://ai.meta.com/tools/faiss/

Source code

How to run

- The login user and password are set in config.yaml.

Detailed setup steps are in this article:

https://blog.streamlit.io/streamlit-authenticator-part-1-adding-an-authentication-component-to-your-app/

- Put your OpenAI API key in .env. (API usage is billed.)

- Run docker compose up -d from a command prompt to start the container.

requirements.txt

streamlit==1.25.0
langchain==0.0.303
openai==1.1.0
duckduckgo-search==3.8.5
anthropic==0.3.10
llama-index==0.8.68
pypdf==3.9.0
faiss-cpu==1.7.4
html2text==2020.1.16
streamlit-authenticator==0.2.2
extra_streamlit_components==0.1.56

Dockerfile

FROM python:3.9
WORKDIR /app
COPY . .
RUN pip3 install --upgrade pip && \
    pip3 install --no-cache-dir -r requirements.txt
EXPOSE 8501

Docker Compose file

version: '3.8'
services:
  app:
    restart: always
    build:
      context: .
      dockerfile: Dockerfile
    ports:
      - 8501:8501
    volumes:
      - .:/app
    env_file:
      - .env
    command: streamlit run app.py

config.yaml

cookie:
  expiry_days: 10
  key: some_signature_keys
  name: some_cookie_name
credentials:
  usernames:
    test:
      email: test@example.co.jp
      name: test user
      # streamlit-authenticator expects a hashed password here, not plain text
      password: xxxxxxxxx
preauthorized:
  emails:
    - test@example.co.jp

.env

OPENAI_API_KEY=<your OpenAI API key>
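Since the Compose file passes the variables in .env to the container, the app could check that the key is really set before it makes any API call. Below is a minimal sketch of such a check. The helper name `require_api_key` is hypothetical and is not part of the original code.

```python
import os


def require_api_key(env=os.environ, name="OPENAI_API_KEY"):
    """Return the API key from the environment, or raise if it is missing."""
    # Hypothetical helper for illustration; the original app does not define it.
    key = env.get(name, "").strip()
    if not key:
        raise RuntimeError(f"{name} is not set; add it to .env before starting the container")
    return key


if __name__ == "__main__":
    # A plain dict stands in for the container environment here.
    print(require_api_key({"OPENAI_API_KEY": "sk-example"}))
```

Failing early like this makes a missing key show up at startup instead of on the first chat request.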

common.py

import logging

import streamlit as st

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger(__name__)
logger.debug("debug log")


# Check the login state and stop rendering the page if the user is not logged in.
def check_login():
    if 'authentication_status' not in st.session_state:
        st.session_state['authentication_status'] = None
    # None means no login attempt yet; False means a failed attempt.
    if not st.session_state["authentication_status"]:
        st.warning("**Please log in**")
        st.stop()
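A note on checking a three-state value like `authentication_status` (None, False, or True): the expression `status is None or False` parses as `(status is None) or False`, so it never detects `False`. A small sketch comparing it with `not status`, using made-up function names:

```python
def is_logged_out_buggy(status):
    # Parses as (status is None) or False, so a failed login (False) slips through.
    return status is None or False


def is_logged_out(status):
    # Treats both None (no attempt yet) and False (failed attempt) as logged out.
    return not status


for status in (None, False, True):
    print(status, is_logged_out_buggy(status), is_logged_out(status))
```

Only the second function reports a failed login as logged out.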

app.py

import logging
import os
import pickle
from multiprocessing import Lock

import faiss
import streamlit as st
import streamlit_authenticator as stauth
import tiktoken
import yaml
from llama_index import (
    ServiceContext,
    SimpleDirectoryReader,
    StorageContext,
    VectorStoreIndex,
    load_index_from_storage,
)
from llama_index.callbacks import CallbackManager, LlamaDebugHandler
from llama_index.chat_engine import CondenseQuestionChatEngine
from llama_index.constants import DEFAULT_CHUNK_OVERLAP
from llama_index.graph_stores import SimpleGraphStore
from llama_index.langchain_helpers.text_splitter import TokenTextSplitter
from llama_index.node_parser import SimpleNodeParser
from llama_index.response_synthesizers import get_response_synthesizer
from llama_index.storage.docstore import SimpleDocumentStore
from llama_index.storage.index_store import SimpleIndexStore
from llama_index.vector_stores.faiss import FaissVectorStore

index_name = "./data/storage"
pkl_name = "./data/stored_documents.pkl"

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger(__name__)
logger.debug("debug log")


def initialize_index():
    logger.info("initialize_index start")
    # Split on the Japanese full stop, counting tokens with the gpt-3.5-turbo tokenizer.
    text_splitter = TokenTextSplitter(
        separator="。",
        chunk_size=1500,
        chunk_overlap=DEFAULT_CHUNK_OVERLAP,
        tokenizer=tiktoken.encoding_for_model("gpt-3.5-turbo").encode,
    )
    node_parser = SimpleNodeParser(text_splitter=text_splitter)
    d = 1536  # embedding dimension used for the Faiss index
    faiss_index = faiss.IndexFlatL2(d)
    llama_debug_handler = LlamaDebugHandler()
    callback_manager = CallbackManager([llama_debug_handler])
    service_context = ServiceContext.from_defaults(
        node_parser=node_parser, callback_manager=callback_manager
    )
    # Note: the lock is created on every call, so it only guards this one call.
    lock = Lock()
    with lock:
        if os.path.exists(index_name):
            # Reload the index persisted by an earlier run.
            vector_store_path = index_name + "/" + "default__vector_store.json"
            storage_context = StorageContext.from_defaults(
                docstore=SimpleDocumentStore.from_persist_dir(persist_dir=index_name),
                graph_store=SimpleGraphStore.from_persist_dir(persist_dir=index_name),
                vector_store=FaissVectorStore.from_persist_path(persist_path=vector_store_path),
                index_store=SimpleIndexStore.from_persist_dir(persist_dir=index_name),
            )
            st.session_state.index = load_index_from_storage(
                storage_context=storage_context, service_context=service_context
            )
        else:
            # First run: build the index from ./documents and persist it.
            documents = SimpleDirectoryReader("./documents").load_data()
            vector_store = FaissVectorStore(faiss_index=faiss_index)
            storage_context = StorageContext.from_defaults(vector_store=vector_store)
            st.session_state.index = VectorStoreIndex.from_documents(
                documents, storage_context=storage_context, service_context=service_context
            )
            st.session_state.index.storage_context.persist(persist_dir=index_name)

        # Both branches build the same query and chat engines on top of the index.
        response_synthesizer = get_response_synthesizer(response_mode='refine')
        st.session_state.query_engine = st.session_state.index.as_query_engine(
            response_synthesizer=response_synthesizer, service_context=service_context
        )
        st.session_state.chat_engine = CondenseQuestionChatEngine.from_defaults(
            query_engine=st.session_state.query_engine,
            verbose=True,
        )

        # Restore the list of imported file names, if one was saved.
        if os.path.exists(pkl_name):
            with open(pkl_name, "rb") as f:
                st.session_state.stored_docs = pickle.load(f)
        else:
            st.session_state.stored_docs = list()


with open('config.yaml') as file:
    config = yaml.load(file, Loader=yaml.SafeLoader)

authenticator = stauth.Authenticate(
    config['credentials'],
    config['cookie']['name'],
    config['cookie']['key'],
    config['cookie']['expiry_days'],
    config['preauthorized'],
)

name, authentication_status, username = authenticator.login('Login', 'main')

if 'authentication_status' not in st.session_state:
    st.session_state['authentication_status'] = None

if st.session_state["authentication_status"]:
    authenticator.logout('Logout', 'main')
    st.write('Logged in successfully')
    initialize_index()
elif st.session_state["authentication_status"] is False:
    st.error('Username or password is incorrect')
elif st.session_state["authentication_status"] is None:
    st.warning('Please enter your username and password')
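To illustrate what splitting on "。" with a size limit does, here is a simplified sketch. It is not how `TokenTextSplitter` is implemented: it counts characters instead of tokens and ignores `chunk_overlap`. The function name `split_by_separator` is made up for this example.

```python
def split_by_separator(text, separator="。", chunk_size=16):
    """Greedily pack separator-delimited sentences into chunks of at most chunk_size characters."""
    parts = text.split(separator)
    # Re-attach the separator to every sentence that was followed by one.
    sentences = [p + separator for p in parts[:-1]]
    if parts[-1]:
        sentences.append(parts[-1])

    chunks, current = [], ""
    for sentence in sentences:
        # Start a new chunk when adding this sentence would exceed the limit.
        if current and len(current) + len(sentence) > chunk_size:
            chunks.append(current)
            current = ""
        current += sentence
    if current:
        chunks.append(current)
    return chunks


sample = "今日は晴れです。明日は雨です。週末は曇りです。"
for chunk in split_by_separator(sample):
    print(chunk)
```

In this sketch a single sentence longer than `chunk_size` simply becomes its own oversized chunk.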

Chatbot page

import logging

import streamlit as st

import common

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger(__name__)
logger.debug("debug log")

common.check_login()

st.title("💬 Chatbot")

if st.button("Reset", use_container_width=True):
    st.session_state.chat_engine.reset()
    st.session_state.messages = [{"role": "assistant", "content": "Ask me a question"}]
    # Log before rerunning: st.experimental_rerun() stops the script immediately.
    logger.info("reset")
    st.experimental_rerun()

if "messages" not in st.session_state:
    st.session_state["messages"] = [{"role": "assistant", "content": "Ask me a question"}]

# Redraw the conversation so far on every rerun.
for msg in st.session_state.messages:
    st.chat_message(msg["role"]).write(msg["content"])

if prompt := st.chat_input():
    st.session_state.messages.append({"role": "user", "content": prompt})
    st.chat_message("user").write(prompt)
    response = st.session_state.chat_engine.chat(prompt)
    msg = str(response)
    st.session_state.messages.append({"role": "assistant", "content": msg})
    st.chat_message("assistant").write(msg)

import openai

import streamlit as st

import os

import pickle

import logging

from llama_index import SimpleDirectoryReader

from llama_index.chat_engine import CondenseQuestionChatEngine;

from llama_index.response_synthesizers import get_response_synthesizer

from llama_index import Prompt, SimpleDirectoryReader

from logging import getLogger, StreamHandler, Formatter

import common

index_name = "./data/storage"

pkl_name = "./data/stored_documents.pkl"

logging.basicConfig(level=logging.INFO)

logger = logging.getLogger("__name__")

logger.debug("調査用ログ")

common.check_login()

if "file_uploader_key" not in st.session_state:

st.session_state["file_uploader_key"] = 0

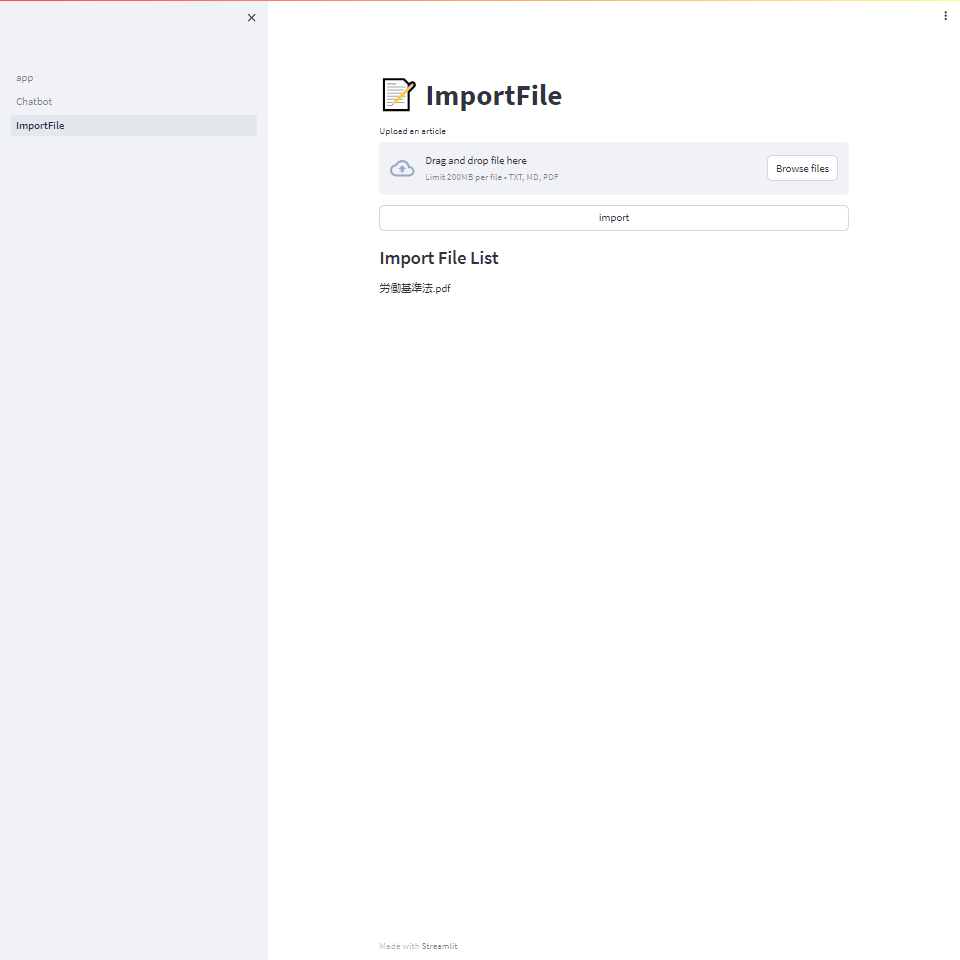

st.title("📝 ImportFile")

uploaded_file = st.file_uploader("Upload an article", type=("txt", "md","pdf"),key=st.session_state["file_uploader_key"])

if st.button("import",use_container_width=True):

filepath = None

try:

filepath = os.path.join('documents', os.path.basename( uploaded_file.name))

logger.info(filepath)

with open(filepath, 'wb') as f:

f.write(uploaded_file.getvalue())

f.close()

document = SimpleDirectoryReader(input_files=[filepath]).load_data()[0]

logger.info(document)

st.session_state.stored_docs.append(uploaded_file.name)

logger.info(st.session_state.stored_docs)

st.session_state.index.insert(document=document)

st.session_state.index.storage_context.persist(persist_dir=index_name)

response_synthesizer = get_response_synthesizer(response_mode='refine')

st.session_state.query_engine = st.session_state.index.as_query_engine(response_synthesizer=response_synthesizer)

st.session_state.chat_engine = CondenseQuestionChatEngine.from_defaults(

query_engine=st.session_state.query_engine,

verbose=True

)

with open(pkl_name, "wb") as f:

print("pickle")

pickle.dump(st.session_state.stored_docs, f)

st.session_state["file_uploader_key"] += 1

st.experimental_rerun()

except Exception as e:

# cleanup temp file

logger.error(e)

if filepath is not None and os.path.exists(filepath):

os.remove(filepath)

st.subheader("Import File List")

if "stored_docs" in st.session_state:

logger.info(st.session_state.stored_docs)

for docname in st.session_state.stored_docs:

st.write(docname)
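The list of imported file names is kept in a pickle file so that it survives restarts. Here is a stand-alone sketch of that load/save pattern. The helper names (`load_stored_docs`, `save_stored_docs`, `safe_upload_path`) are hypothetical, and a temporary directory stands in for ./data.

```python
import os
import pickle
import tempfile


def load_stored_docs(path):
    """Return the saved list of document names, or an empty list if nothing was saved yet."""
    if not os.path.exists(path):
        return []
    with open(path, "rb") as f:
        return pickle.load(f)


def save_stored_docs(path, names):
    """Write the list of document names, creating the parent directory if needed."""
    os.makedirs(os.path.dirname(path) or ".", exist_ok=True)
    with open(path, "wb") as f:
        pickle.dump(list(names), f)


def safe_upload_path(directory, uploaded_name):
    """Drop any directory components from the uploaded name before joining."""
    return os.path.join(directory, os.path.basename(uploaded_name))


with tempfile.TemporaryDirectory() as tmp:
    pkl_path = os.path.join(tmp, "data", "stored_documents.pkl")
    docs = load_stored_docs(pkl_path)  # nothing saved yet, so this is an empty list
    docs.append("manual.pdf")
    save_stored_docs(pkl_path, docs)
    restored = load_stored_docs(pkl_path)

print(restored)
print(safe_upload_path("documents", "nested/dir/manual.pdf"))
```

Pickle files should only be loaded from trusted locations, which holds here because the app writes the file itself.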

Demo

You can check it by opening http://localhost:8501/.

Import a file on the ImportFile page, then ask a question on the Chatbot page, and it answers based on the contents of the imported file.
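Answers come from the imported files because the question is embedded and compared against the stored chunk embeddings. `faiss.IndexFlatL2` does this comparison as an exhaustive L2 distance search. The sketch below imitates that idea in plain Python with toy 2-dimensional vectors (the app uses 1536 dimensions). The function names are made up, and it is far slower than Faiss.

```python
def squared_l2(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest(query, vectors, k=1):
    """Return the indices of the k vectors closest to query, nearest first."""
    order = sorted(range(len(vectors)), key=lambda i: squared_l2(query, vectors[i]))
    return order[:k]


# Toy "chunk embeddings"; real ones would come from an embedding model.
vectors = [[0.0, 0.0], [1.0, 1.0], [5.0, 5.0]]
query = [0.9, 1.2]
print(nearest(query, vectors, k=2))
```

The chunks at the returned indices are what the query engine would hand to the model as context.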

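Thoughts

Tuning the prompts and settings in more detail looks like it would make the answers more accurate.

My knowledge is still shallow, so I plan to keep reading articles on the topic.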