start_neurons = 64

# 8 -> 16

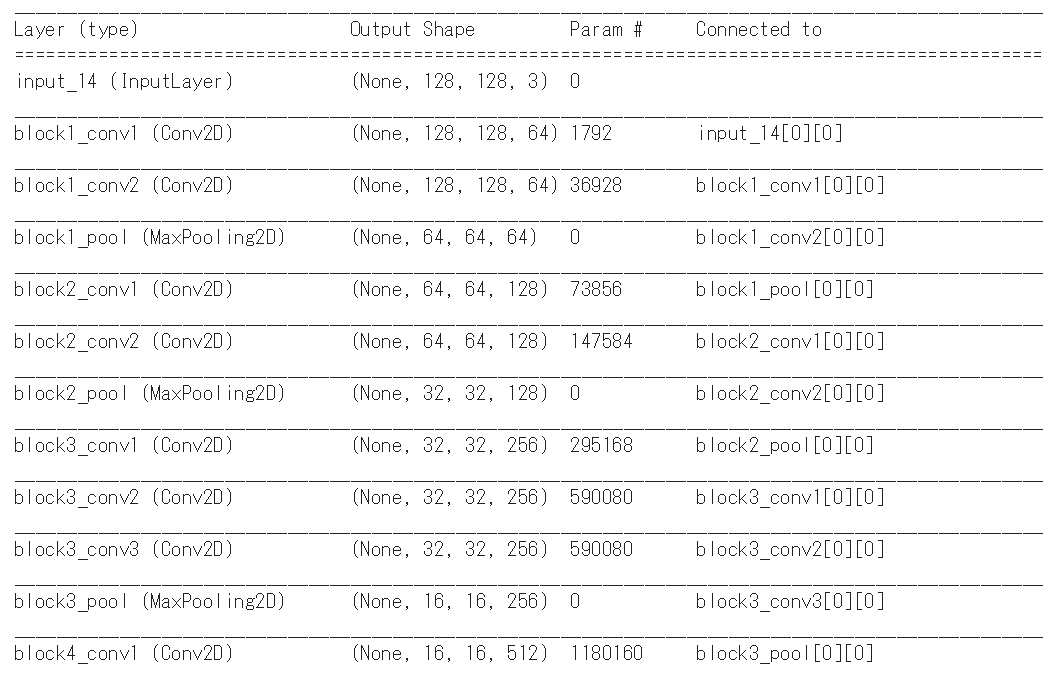

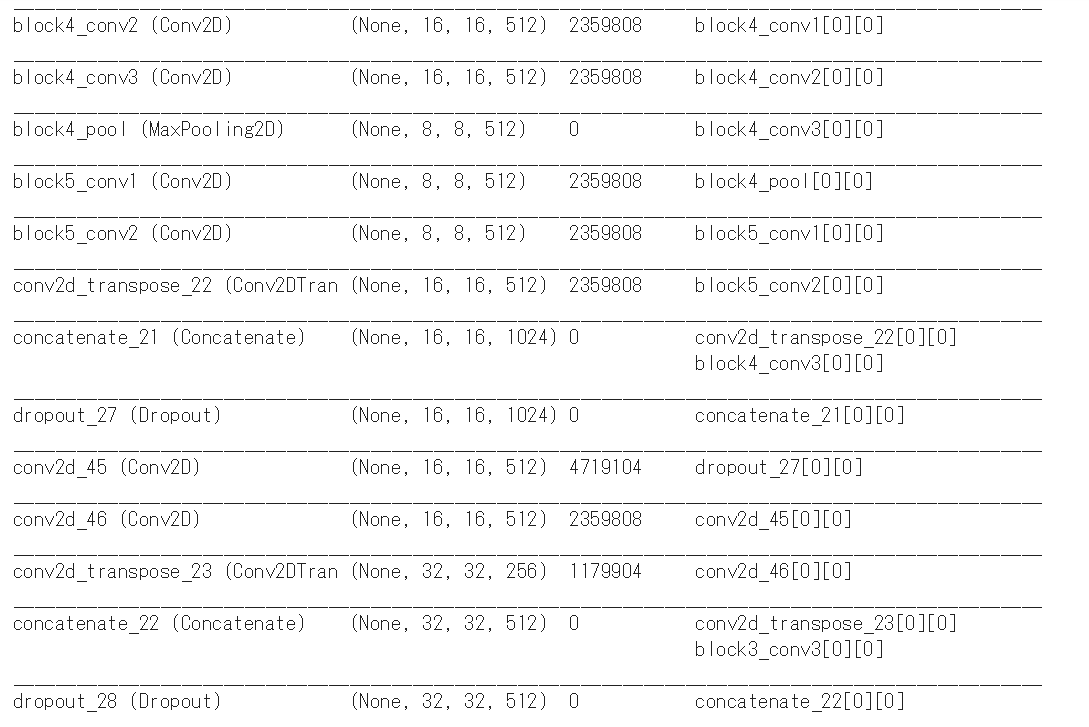

deconv4 = Conv2DTranspose(start_neurons * 8, (3, 3), strides=(2, 2), padding="same")(vgg_top)

uconv4 = concatenate([deconv4, block4_conv3])

uconv4 = Dropout(0.5)(uconv4)

uconv4 = Conv2D(start_neurons * 8, (3, 3), activation="relu", padding="same")(uconv4)

uconv4 = Conv2D(start_neurons * 8, (3, 3), activation="relu", padding="same")(uconv4)
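Every decoder stage in this listing repeats the same five steps: transposed convolution, concatenation with the encoder skip tensor, dropout, and two 3x3 convolutions. As a sketch only, the pattern could be factored into a helper. The name `decoder_block`, its `dropout_rate` parameter, and the stand-in `Input` tensors are hypothetical and do not appear in the original code.

```python
from tensorflow.keras import Input
from tensorflow.keras.layers import Conv2D, Conv2DTranspose, Dropout, concatenate


def decoder_block(x, skip, filters, dropout_rate=0.5):
    """One decoder stage (hypothetical helper): upsample, merge skip, refine."""
    # Stride-2 transposed convolution with "same" padding doubles height and width.
    x = Conv2DTranspose(filters, (3, 3), strides=(2, 2), padding="same")(x)
    # Merge with the encoder feature map of matching spatial size.
    x = concatenate([x, skip])
    x = Dropout(dropout_rate)(x)
    x = Conv2D(filters, (3, 3), activation="relu", padding="same")(x)
    x = Conv2D(filters, (3, 3), activation="relu", padding="same")(x)
    return x


# Stand-in tensors (assumed shapes) playing the roles of vgg_top and block4_conv3.
top = Input(shape=(8, 8, 512))
skip = Input(shape=(16, 16, 512))
stage_out = decoder_block(top, skip, filters=64 * 8)
print(stage_out.shape)
```

With such a helper, each stage would reduce to a single call, for example `decoder_block(vgg_top, block4_conv3, start_neurons * 8)`.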

# 16 -> 32

deconv3 = Conv2DTranspose(start_neurons * 4, (3, 3), strides=(2, 2), padding="same")(uconv4)

uconv3 = concatenate([deconv3, block3_conv3])

uconv3 = Dropout(0.5)(uconv3)

uconv3 = Conv2D(start_neurons * 4, (3, 3), activation="relu", padding="same")(uconv3)

uconv3 = Conv2D(start_neurons * 4, (3, 3), activation="relu", padding="same")(uconv3)

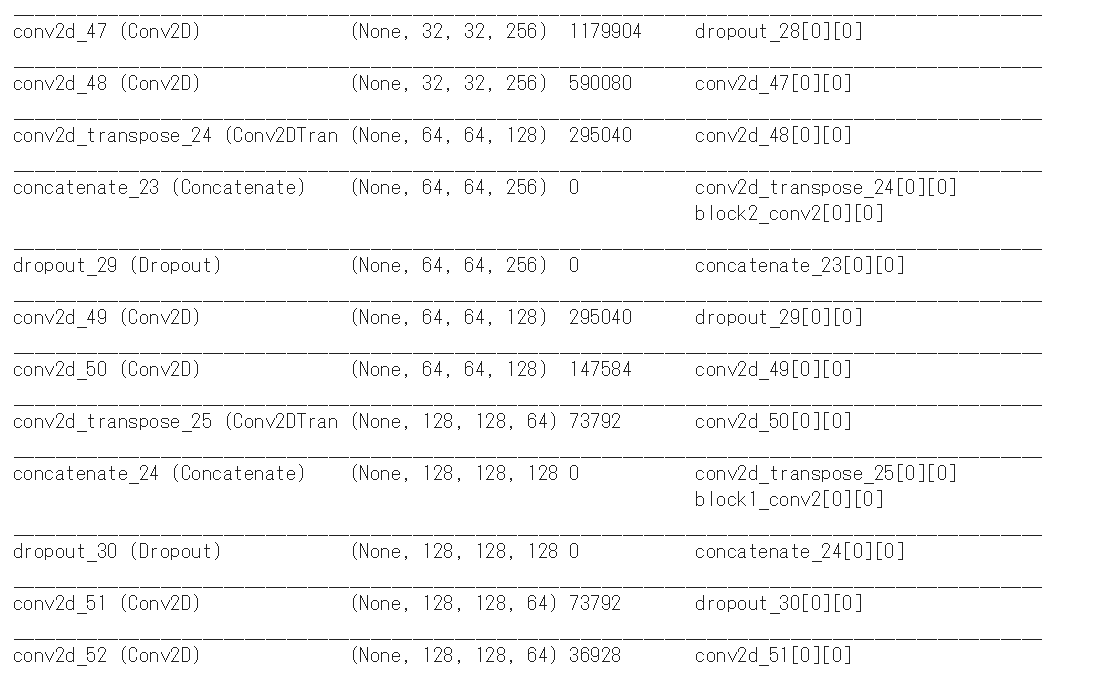

# 32 -> 64

deconv2 = Conv2DTranspose(start_neurons * 2, (3, 3), strides=(2, 2), padding="same")(uconv3)

uconv2 = concatenate([deconv2, block2_conv2])

uconv2 = Dropout(0.5)(uconv2)

uconv2 = Conv2D(start_neurons * 2, (3, 3), activation="relu", padding="same")(uconv2)

uconv2 = Conv2D(start_neurons * 2, (3, 3), activation="relu", padding="same")(uconv2)

# 64 -> 128

deconv1 = Conv2DTranspose(start_neurons * 1, (3, 3), strides=(2, 2), padding="same")(uconv2)

uconv1 = concatenate([deconv1, block1_conv2])

uconv1 = Dropout(0.5)(uconv1)

uconv1 = Conv2D(start_neurons * 1, (3, 3), activation="relu", padding="same")(uconv1)

uconv1 = Conv2D(start_neurons * 1, (3, 3), activation="relu", padding="same")(uconv1)
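The size comments (`8 -> 16` through `64 -> 128`) follow from each `Conv2DTranspose` with `strides=(2, 2)` and `padding="same"` doubling height and width, while `concatenate` adds the channels of the skip tensor. The plain-Python sketch below traces that bookkeeping. It assumes the standard VGG16 channel widths for blocks 4 to 1 (512, 256, 128, 64), and `trace_decoder` is a hypothetical helper, not part of the original code.

```python
def trace_decoder(top_size=8, start_neurons=64, skip_channels=(512, 256, 128, 64)):
    """Return (spatial_size, channels_after_concat, channels_after_convs) per stage."""
    stages = []
    size = top_size
    n_stages = len(skip_channels)
    for i, skip in enumerate(skip_channels):
        # Filter multipliers run 8, 4, 2, 1 from the deepest stage upward.
        filters = start_neurons * 2 ** (n_stages - 1 - i)
        size *= 2  # the stride-2 transposed convolution doubles the spatial size
        concat_channels = filters + skip  # concatenation stacks channels
        stages.append((size, concat_channels, filters))
    return stages


for size, concat_ch, out_ch in trace_decoder():
    print(f"{size}x{size}: {concat_ch} channels after concat, {out_ch} after convs")
```

Under these assumptions, the concatenated tensors carry 1024, 512, 256 and 128 channels at sizes 16, 32, 64 and 128, and the two convolutions in each stage reduce them back to 512, 256, 128 and 64.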

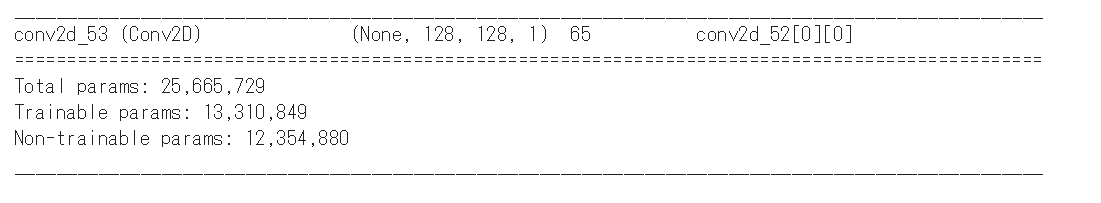

out = Conv2D(1, (1,1), padding="same", activation="sigmoid")(uconv1)

model = Model(inputs=input_tensor, outputs=out)
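The listing uses `input_tensor`, `vgg_model`, `vgg_top` and the `block*_conv*` skip tensors without showing where they come from. A minimal sketch of one way to obtain them from `keras.applications.VGG16` follows. It assumes a 128x128 RGB input (consistent with the `64 -> 128` comment) and that `vgg_top` is the output of `block5_conv3`. It also passes `weights=None` to avoid a download, whereas a transfer-learning setup would more likely use `weights="imagenet"`.

```python
from tensorflow.keras import Input
from tensorflow.keras.applications import VGG16

# Assumed input size; 128 / 2**4 = 8 matches the "8 -> 16" comment.
input_tensor = Input(shape=(128, 128, 3))

# weights=None keeps this sketch offline; pretrained weights are an option.
vgg_model = VGG16(weights=None, include_top=False, input_tensor=input_tensor)

# Skip connections: last convolution of each encoder block, by layer name.
block1_conv2 = vgg_model.get_layer("block1_conv2").output  # 128x128x64
block2_conv2 = vgg_model.get_layer("block2_conv2").output  # 64x64x128
block3_conv3 = vgg_model.get_layer("block3_conv3").output  # 32x32x256
block4_conv3 = vgg_model.get_layer("block4_conv3").output  # 16x16x512

# Assumed bottleneck: last convolution before the final pooling layer.
vgg_top = vgg_model.get_layer("block5_conv3").output  # 8x8x512
```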

# for layer in model.layers[:17]:
#     layer.trainable = False

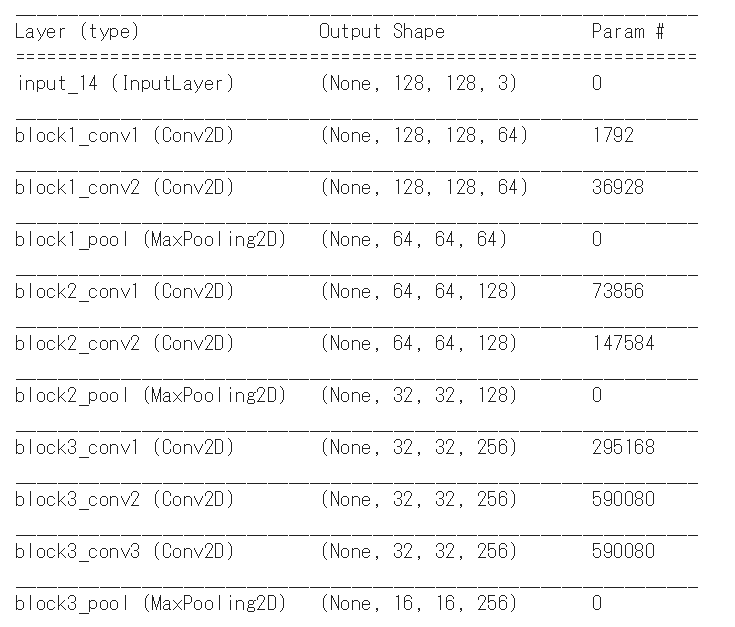

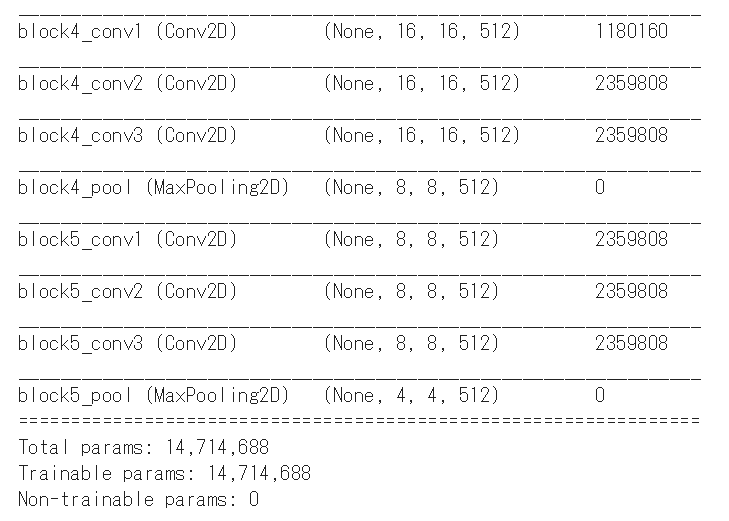

# Freeze the VGG encoder layers so that only the decoder layers are trained.
for layer in vgg_model.layers:
    layer.trainable = False
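Because `model` is built on the same layer objects as `vgg_model`, freezing the encoder through `vgg_model.layers` also freezes those layers inside `model`. The sketch below checks this on a reduced example. The single 1x1 convolution head stands in for the full decoder, and `weights=None` and the 128x128 input are assumptions made to keep it small and offline.

```python
from tensorflow.keras import Input, Model
from tensorflow.keras.applications import VGG16
from tensorflow.keras.layers import Conv2D

input_tensor = Input(shape=(128, 128, 3))
vgg_model = VGG16(weights=None, include_top=False, input_tensor=input_tensor)

# Stand-in head: one 1x1 convolution instead of the full decoder.
head = Conv2D(1, (1, 1), padding="same", activation="sigmoid")(vgg_model.output)
model = Model(inputs=input_tensor, outputs=head)

n_before = len(model.trainable_weights)

# Same loop as in the listing: freeze every encoder layer.
for layer in vgg_model.layers:
    layer.trainable = False

n_after = len(model.trainable_weights)
print(n_before, n_after)
```

After the loop, only the kernel and bias of the head remain trainable. The flags should be set before `model.compile(...)` is called so that training picks them up.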

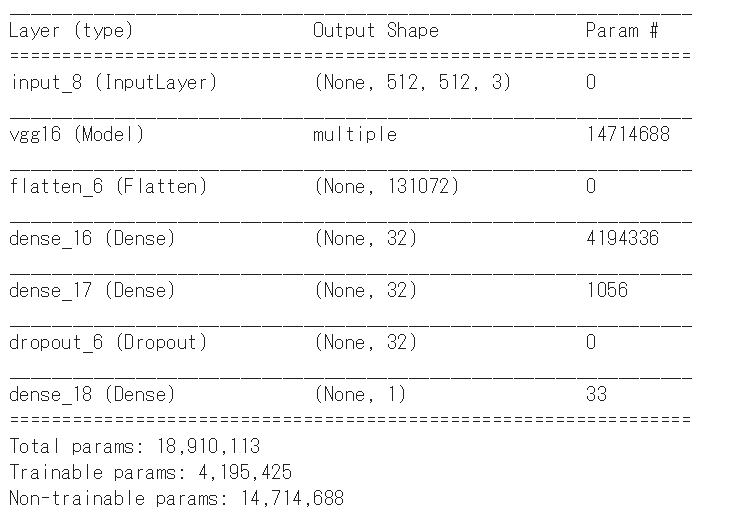

model.summary()