Library

import pandas as pd
import numpy as np
import matplotlib.pyplot as plt
%matplotlib inline
from tqdm import tqdm
from sklearn.model_selection import train_test_split
import tensorflow as tf
# Use tf.keras throughout so layers, callbacks and datasets come from the same Keras
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import InputLayer, Activation, Dropout, Flatten, Dense
from tensorflow.keras.layers import LeakyReLU, Conv2D, MaxPooling2D, BatchNormalization
from tensorflow.keras.layers import GlobalAveragePooling2D, GaussianNoise  # keras.layers.noise no longer exists
from tensorflow.keras.optimizers import Adam
from tensorflow.keras.callbacks import ReduceLROnPlateau, EarlyStopping
from tensorflow.keras.preprocessing.image import ImageDataGenerator  # needed for Model 2 (deprecated in recent TF)

Data

(x_train, y_train), (x_test, y_test) = tf.keras.datasets.cifar10.load_data()

n_classes = 10
pos = 1
plt.figure(figsize=(10, 10))
for targetClass in tqdm(range(n_classes)):
    targetIdx = []
    # Get the list of indices of the images in class targetClass
    for i in range(len(y_train)):
        if y_train[i, 0] == targetClass:  # y_train has shape (50000, 1)
            targetIdx.append(i)
    # Shuffle, then draw the first 10 images of each class (one row per class)
    np.random.shuffle(targetIdx)
    for idx in targetIdx[:10]:
        img = x_train[idx]
        plt.subplot(10, 10, pos)
        plt.imshow(img)
        plt.axis('off')
        pos += 1
plt.show()


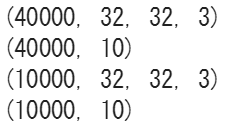
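The nested loop above scans all 50,000 labels once per class. A shorter equivalent, sketched below under the assumption that labels arrive as an `(N, 1)` integer array like `y_train`, uses `np.flatnonzero` to collect each class's indices and `Generator.choice` to draw a sample without replacement. `CLASS_NAMES` follows the standard CIFAR-10 label order; the helper `sample_indices_per_class` is hypothetical and runs here on synthetic labels.

```python
import numpy as np

# Standard CIFAR-10 label order (label 0 = airplane, ..., label 9 = truck)
CLASS_NAMES = ["airplane", "automobile", "bird", "cat", "deer",
               "dog", "frog", "horse", "ship", "truck"]


def sample_indices_per_class(labels, n_classes=10, k=10, seed=0):
    """Hypothetical helper: return {class_id: k random indices of that class}."""
    rng = np.random.default_rng(seed)
    flat = np.asarray(labels).ravel()  # (N, 1) -> (N,)
    picked = {}
    for c in range(n_classes):
        idx = np.flatnonzero(flat == c)  # every index whose label is c
        picked[c] = rng.choice(idx, size=k, replace=False)
    return picked


# Synthetic labels shaped like y_train: 20 samples per class, shape (200, 1)
demo_labels = np.repeat(np.arange(10), 20).reshape(-1, 1)
samples = sample_indices_per_class(demo_labels, k=10)
for c, idx in samples.items():
    print(CLASS_NAMES[c], idx[:3])
```

In the notebook one would pass `y_train` instead of `demo_labels` and feed each index to `plt.subplot`/`plt.imshow` as before, optionally labelling rows with `CLASS_NAMES`.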

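# Scale pixels to [0, 1]; np.float was removed in NumPy 1.24
x_train = x_train.astype(np.float32) / 255.0
# y_train has shape (50000, 1); flatten it so the one-hot array is (50000, 10), not (50000, 1, 10)
y_train_oh = np.identity(n_classes)[y_train.ravel()]
# Hold out 20% for validation, keeping the class proportions of the raw labels
x_train, x_valid, y_train, y_valid = train_test_split(x_train, y_train_oh,
                                                      test_size=0.20,
                                                      stratify=y_train,
                                                      random_state=100)
print(x_train.shape)
print(y_train.shape)
print(x_valid.shape)
print(y_valid.shape)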

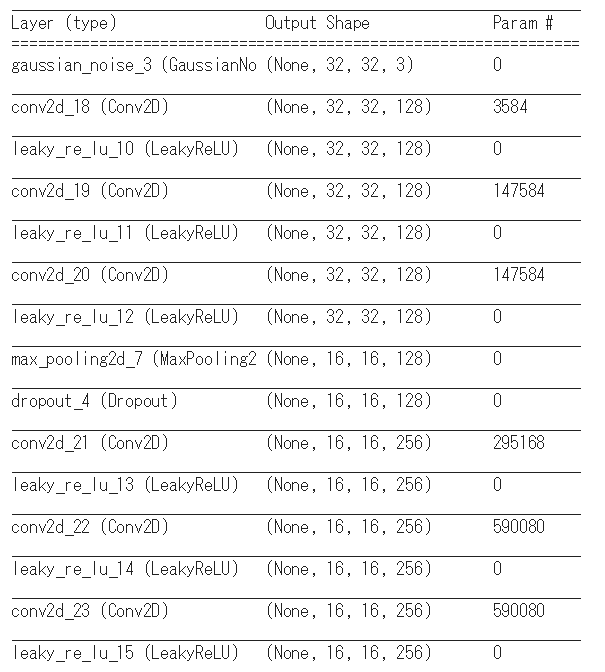

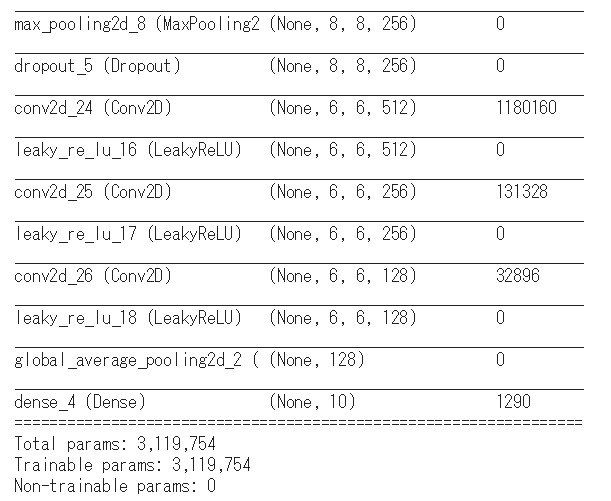
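Because `load_data()` returns labels with shape `(N, 1)`, the one-hot step is sensitive to that trailing axis. The small NumPy sketch below, on three made-up labels, shows the shape produced with and without flattening; `tf.keras.utils.to_categorical` is a library alternative that drops a trailing axis of size 1 on its own.

```python
import numpy as np

n_classes = 10
labels = np.array([[3], [0], [9]])  # shaped like y_train: (N, 1)

# Indexing the identity matrix with an (N, 1) array keeps the extra axis
oh_wrong = np.identity(n_classes)[labels]
# Flattening first gives the (N, n_classes) matrix that Dense(10) + softmax expects
oh_right = np.identity(n_classes)[labels.ravel()]

print(oh_wrong.shape)            # (3, 1, 10)
print(oh_right.shape)            # (3, 10)
print(oh_right.argmax(axis=1))   # [3 0 9]
```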

Model 1 (no data augmentation)

input_shape = x_train.shape[1:]  # (32, 32, 3)

def create_model():
    model = Sequential()
    model.add(InputLayer(input_shape=input_shape))
    model.add(GaussianNoise(0.15))  # additive noise, active only during training
    # Block 1: three 3x3 convolutions with 128 filters, then 2x2 pooling (32x32 -> 16x16)
    model.add(Conv2D(128, (3, 3), padding='same'))
    model.add(LeakyReLU(0.1))
    model.add(Conv2D(128, (3, 3), padding='same'))
    model.add(LeakyReLU(0.1))
    model.add(Conv2D(128, (3, 3), padding='same'))
    model.add(LeakyReLU(0.1))
    model.add(MaxPooling2D(pool_size=(2, 2)))
    model.add(Dropout(0.5))
    # Block 2: same pattern with 256 filters (16x16 -> 8x8)
    model.add(Conv2D(256, (3, 3), padding='same'))
    model.add(LeakyReLU(0.1))
    model.add(Conv2D(256, (3, 3), padding='same'))
    model.add(LeakyReLU(0.1))
    model.add(Conv2D(256, (3, 3), padding='same'))
    model.add(LeakyReLU(0.1))
    model.add(MaxPooling2D(pool_size=(2, 2)))
    model.add(Dropout(0.5))
    # Block 3: 'valid' 3x3 convolution (8x8 -> 6x6), then 1x1 convolutions reducing channels
    model.add(Conv2D(512, (3, 3), padding='valid'))
    model.add(LeakyReLU(0.1))
    model.add(Conv2D(256, (1, 1)))
    model.add(LeakyReLU(0.1))
    model.add(Conv2D(128, (1, 1)))
    model.add(LeakyReLU(0.1))
    # Average each of the 128 feature maps, then a 10-way softmax classifier
    model.add(GlobalAveragePooling2D())
    model.add(Dense(n_classes, activation='softmax'))
    return model

model = create_model()

model.summary()
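As a sanity check on `model.summary()`, the plain-Python arithmetic below (no Keras) traces the spatial size and counts trainable parameters. It assumes the usual formulas: a `Conv2D` with a k×k kernel has k·k·C_in·C_out weights plus C_out biases, a `Dense` layer has C_in·C_out + C_out, and `MaxPooling2D(2, 2)` halves height and width; `GaussianNoise`, `LeakyReLU`, `Dropout` and the pooling layers add no parameters. The layer table is transcribed by hand from `create_model()`, so it is only as reliable as that transcription.

```python
# (kernel_size, in_channels, out_channels, padding) per Conv2D; "pool" = MaxPooling2D(2, 2)
CONV_LAYERS = [
    (3, 3, 128, "same"), (3, 128, 128, "same"), (3, 128, 128, "same"), "pool",
    (3, 128, 256, "same"), (3, 256, 256, "same"), (3, 256, 256, "same"), "pool",
    (3, 256, 512, "valid"), (1, 512, 256, "valid"), (1, 256, 128, "valid"),
]


def trace(input_size=32, n_classes=10):
    """Return (final spatial size, final channels, total trainable parameters)."""
    size, channels, total = input_size, None, 0
    for layer in CONV_LAYERS:
        if layer == "pool":
            size //= 2  # 2x2 pooling halves height and width
            continue
        k, cin, cout, padding = layer
        total += k * k * cin * cout + cout  # kernel weights + biases
        if padding == "valid":
            size = size - k + 1  # no padding: the map shrinks by k - 1
        channels = cout
    # GlobalAveragePooling2D gives a vector of length `channels`, then Dense(n_classes)
    total += channels * n_classes + n_classes
    return size, channels, total


print(trace())  # (6, 128, 3119754)
```

If the transcription is right, the total should agree with the "Total params" line printed by `model.summary()`.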

model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
# tf.keras logs the metric as 'val_accuracy' (older standalone Keras used 'val_acc')
early_stopping = EarlyStopping(monitor='val_accuracy', patience=5, mode='max',
                               verbose=1)
lr_reduction = ReduceLROnPlateau(monitor='val_accuracy', patience=5, mode='max',
                                 factor=0.5, min_lr=0.00001, verbose=1)
callbacks = [early_stopping, lr_reduction]
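Both callbacks watch the validation accuracy with `patience=5`. The simulation below is a simplified, hypothetical re-implementation for illustration (not Keras's source: it ignores `min_delta`, `cooldown` and other options), run on made-up validation scores. Under those simplifications it suggests that, with equal patiences, the epoch that triggers the learning-rate cut is also the epoch that triggers early stopping, so the reduced rate is never used; a longer `EarlyStopping` patience lets the smaller rate take effect.

```python
def simulate(val_scores, lr=1e-3, factor=0.5, min_lr=1e-5,
             lr_patience=5, es_patience=5):
    """Simplified plateau logic in 'max' mode (illustration only, not Keras code).

    Returns (epoch at which training stops or None, lr used in each epoch).
    """
    best = float("-inf")
    lr_wait = es_wait = 0
    lr_used = []
    for epoch, score in enumerate(val_scores):
        lr_used.append(lr)  # this epoch trained with the current lr
        if score > best:
            best = score
            lr_wait = es_wait = 0
        else:
            lr_wait += 1
            es_wait += 1
        if lr_wait >= lr_patience:  # plateau: shrink the learning rate
            lr = max(lr * factor, min_lr)
            lr_wait = 0
        if es_wait >= es_patience:  # no improvement for too long: stop
            return epoch, lr_used
    return None, lr_used


scores = [0.50, 0.60, 0.70] + [0.65] * 10  # made-up validation accuracies
stop, lrs = simulate(scores)
print(stop, sorted(set(lrs)))  # 7 [0.001]
stop, lrs = simulate(scores, es_patience=10)
print(stop, sorted(set(lrs)))  # 12 [0.0005, 0.001]
```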

batch_size = 32
epochs = 10
history = model.fit(x_train, y_train,
                    batch_size=batch_size,
                    epochs=epochs,
                    validation_data=(x_valid, y_valid),
                    callbacks=callbacks,
                    verbose=1)

plt.figure(figsize=(5, 3))
plt.plot(history.history['loss'], marker='.', label='train')
plt.plot(history.history['val_loss'], marker='.', label='validation')
plt.title('Loss')
plt.grid(True)
plt.xlabel('epoch')
plt.ylabel('loss')
plt.legend(loc='best')
plt.show()

plt.figure(figsize=(5, 3))
# tf.keras stores accuracy under 'accuracy'/'val_accuracy' (older Keras: 'acc'/'val_acc')
plt.plot(history.history['accuracy'], marker='.', label='train')
plt.plot(history.history['val_accuracy'], marker='.', label='validation')
plt.title('Accuracy')
plt.grid(True)
plt.xlabel('epoch')
plt.ylabel('accuracy')
plt.legend(loc='best')
plt.show()
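The metric key in `history.history` depends on the Keras version: older standalone Keras logged `acc`/`val_acc`, while `tf.keras` in TensorFlow 2.x logs `accuracy`/`val_accuracy` for `metrics=['accuracy']`. If the notebook has to run under both, a small helper like the hypothetical `accuracy_curves` below can pick whichever pair is present. The sample dictionaries only mimic the shape of `history.history`; their values are invented.

```python
def accuracy_curves(history_dict):
    """Return (train, validation) accuracy lists from a History.history-style dict.

    Tries the newer 'accuracy' key first, then the older 'acc' key.
    """
    for key in ("accuracy", "acc"):
        if key in history_dict:
            return history_dict[key], history_dict["val_" + key]
    raise KeyError("no accuracy metric found; keys are %s" % sorted(history_dict))


new_style = {"loss": [1.5, 1.2], "accuracy": [0.45, 0.57], "val_accuracy": [0.50, 0.58]}
old_style = {"loss": [1.5, 1.2], "acc": [0.45, 0.57], "val_acc": [0.50, 0.58]}
print(accuracy_curves(new_style))  # ([0.45, 0.57], [0.5, 0.58])
print(accuracy_curves(old_style))  # ([0.45, 0.57], [0.5, 0.58])
```

With it, the two accuracy `plt.plot` calls could read `train_acc, val_acc = accuracy_curves(history.history)` followed by plotting each list.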

Model 2 (data augmentation)

datagen = ImageDataGenerator(
    #featurewise_center=True,
    #featurewise_std_normalization=True,
    rotation_range=20,
    width_shift_range=0.2,
    height_shift_range=0.2,
    horizontal_flip=True)

datagen.fit(x_train)
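`ImageDataGenerator` applies a fresh random transform to each image every time a batch is drawn. The NumPy sketch below illustrates two of the four settings used here: the horizontal flip (applied with probability 0.5) and the shift, which with `width_shift_range=0.2` on a 32-pixel image moves content by up to about 6 pixels and fills the uncovered border by repeating edge pixels (the generator's default `fill_mode='nearest'`). It is a simplified stand-in, not the library's implementation: it uses whole-pixel shifts and omits `rotation_range`, and the function names are hypothetical.

```python
import numpy as np


def horizontal_flip(img):
    """Mirror an (H, W, C) image left-right."""
    return img[:, ::-1, :]


def shift_nearest(img, dy, dx):
    """Shift an (H, W, C) image by dy rows and dx columns (positive = down/right),
    filling uncovered pixels with the nearest edge pixel."""
    h, w = img.shape[:2]
    pad = ((abs(dy), abs(dy)), (abs(dx), abs(dx)), (0, 0))
    padded = np.pad(img, pad, mode="edge")  # replicate border pixels outward
    top, left = abs(dy) - dy, abs(dx) - dx  # crop offset that realises the shift
    return padded[top:top + h, left:left + w, :]


def random_augment(img, rng, shift_frac=0.2):
    """Random left-right flip plus a random whole-pixel shift of up to shift_frac."""
    h, w = img.shape[:2]
    if rng.random() < 0.5:
        img = horizontal_flip(img)
    max_dy, max_dx = int(shift_frac * h), int(shift_frac * w)
    dy = int(rng.integers(-max_dy, max_dy + 1))
    dx = int(rng.integers(-max_dx, max_dx + 1))
    return shift_nearest(img, dy, dx)


rng = np.random.default_rng(0)
img = np.arange(4 * 4 * 3, dtype=np.float32).reshape(4, 4, 3)
shifted = shift_nearest(img, 1, 0)
print(np.array_equal(shifted[1:], img[:-1]))  # True: rows moved down by one
print(np.array_equal(shifted[0], img[0]))     # True: top row filled from the edge
print(random_augment(img, rng).shape)         # (4, 4, 3)
```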

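# Start from a fresh model so Model 2 is not just Model 1 trained for more epochs
model = create_model()
model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
epochs = 10
batch_size = 32
# fit_generator() is deprecated; model.fit() accepts the generator directly.
# validation_steps is dropped so the whole validation set is evaluated each epoch.
history_aug = model.fit(datagen.flow(x_train, y_train, batch_size=batch_size),
                        steps_per_epoch=len(x_train) // batch_size,
                        epochs=epochs,
                        validation_data=(x_valid, y_valid),
                        callbacks=callbacks,
                        verbose=1)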
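`steps_per_epoch=len(x_train) // batch_size` rounds down, so any leftover samples are not drawn in that epoch. With the 40,000-image training split and `batch_size=32` the division is exact (1,250 steps), but the same expression on the 10,000 validation images gives 312 steps and skips 16 images. The plain-Python sketch below makes that arithmetic explicit, alongside a hypothetical shuffled-batch iterator that, like the generator returned by `datagen.flow`, yields a smaller final batch instead of dropping it.

```python
import math
import random


def steps(n_samples, batch_size):
    """Return (floor steps, ceil steps, samples skipped by the floor version)."""
    floor_steps = n_samples // batch_size
    ceil_steps = math.ceil(n_samples / batch_size)
    skipped = n_samples - floor_steps * batch_size
    return floor_steps, ceil_steps, skipped


def shuffled_batches(n_samples, batch_size, seed=0):
    """Hypothetical helper: one epoch of shuffled index batches; the last may be smaller."""
    order = list(range(n_samples))
    random.Random(seed).shuffle(order)
    for start in range(0, n_samples, batch_size):
        yield order[start:start + batch_size]


print(steps(40000, 32))  # (1250, 1250, 0)
print(steps(10000, 32))  # (312, 313, 16)
print([len(b) for b in shuffled_batches(10, 4)])  # [4, 4, 2]
```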