Library

import numpy as np

import matplotlib.pyplot as plt

%matplotlib inline

import seaborn as sns

from sklearn.metrics import confusion_matrix, f1_score, precision_score, recall_score

Data

y_true = np.array([0, 0, 0, 0, 1, 1, 1, 0, 1, 0])

y_pred = np.array([0, 0, 0, 0, 1, 1, 1, 1, 0, 1])

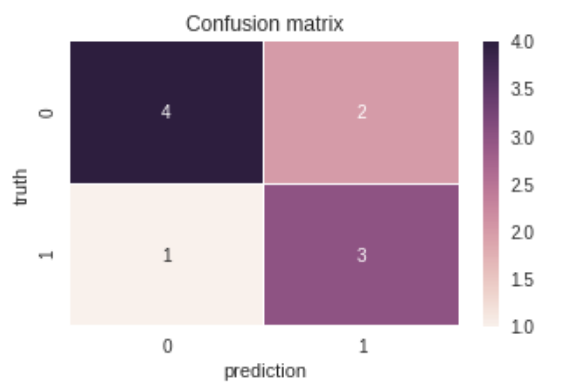

plt.figure(figsize=(5, 3))
# Rows are the true labels, columns are the predicted labels.
sns.heatmap(confusion_matrix(y_true, y_pred), annot=True, linewidths=0.1,
            linecolor='white')
plt.title('Confusion matrix')
plt.xlabel('prediction')
plt.ylabel('truth')
plt.show()
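The heatmap cells can be cross-checked by counting the four outcomes directly. The following is a minimal pure-Python sketch using the same labels as the Data section; the variable names (`tp`, `fp`, `fn`, `tn`, `precision_value`, and so on) are illustrative and not part of the original notebook.

```python
# Same labels as in the Data section, written as plain lists.
y_true = [0, 0, 0, 0, 1, 1, 1, 0, 1, 0]
y_pred = [0, 0, 0, 0, 1, 1, 1, 1, 0, 1]

pairs = list(zip(y_true, y_pred))
tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives

# confusion_matrix lays these out as [[tn, fp], [fn, tp]].
print('TN, FP, FN, TP:', tn, fp, fn, tp)

precision_value = tp / (tp + fp)
recall_value = tp / (tp + fn)
f1_value = 2 * precision_value * recall_value / (precision_value + recall_value)
iou_value = tp / (tp + fp + fn)

print('precision:', precision_value)
print('recall:', recall_value)
print('f1:', f1_value)
print('iou:', iou_value)
```

For this data the counts are TP = 3, FP = 2, FN = 1, TN = 4, which gives precision 3/5, recall 3/4 and IoU 3/6.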

Sample code

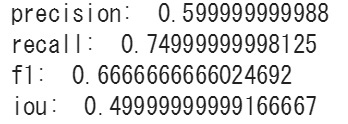

def precision(y_true, y_pred):
    # TP / (TP + FP); the 1e-10 term avoids division by zero.
    true_positives = np.sum(np.round(np.clip(y_true * y_pred, 0, 1)))
    predicted_positives = np.sum(np.round(np.clip(y_pred, 0, 1)))
    return true_positives / (predicted_positives + 1e-10)

def recall(y_true, y_pred):
    # TP / (TP + FN); the 1e-10 term avoids division by zero.
    true_positives = np.sum(np.round(np.clip(y_true * y_pred, 0, 1)))
    possible_positives = np.sum(np.round(np.clip(y_true, 0, 1)))
    return true_positives / (possible_positives + 1e-10)

def f1(y_true, y_pred):
    # Harmonic mean of precision and recall.
    pr = precision(y_true, y_pred)
    re = recall(y_true, y_pred)
    return 2 * ((pr * re) / (pr + re + 1e-10))

def iou(y_true, y_pred):
    # Intersection over union (Jaccard index): TP / (TP + FP + FN).
    true_positives = np.sum(np.round(np.clip(y_true * y_pred, 0, 1)))
    false_positives = np.sum(np.round(np.clip((1 - y_true) * y_pred, 0, 1)))
    false_negatives = np.sum(np.round(np.clip(y_true * (1 - y_pred), 0, 1)))
    return true_positives / (true_positives + false_positives +
                             false_negatives + 1e-10)

print('precision: ', precision(y_true, y_pred))
print('recall: ', recall(y_true, y_pred))
print('f1: ', f1(y_true, y_pred))
print('iou: ', iou(y_true, y_pred))

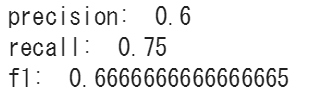

sklearn

print('precision: ', precision_score(y_true, y_pred))
print('recall: ', recall_score(y_true, y_pred))
print('f1: ', f1_score(y_true, y_pred))
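The scikit-learn calls above cover precision, recall and F1 but have no counterpart for the hand-written `iou`. For binary labels, IoU is the Jaccard index, so `sklearn.metrics.jaccard_score` can serve as a cross-check. This is a small sketch; the name `iou_sklearn` is illustrative, and the labels are repeated so the snippet runs on its own.

```python
import numpy as np
from sklearn.metrics import jaccard_score

# Same labels as in the Data section.
y_true = np.array([0, 0, 0, 0, 1, 1, 1, 0, 1, 0])
y_pred = np.array([0, 0, 0, 0, 1, 1, 1, 1, 0, 1])

# Jaccard index of the positive class: TP / (TP + FP + FN).
iou_sklearn = jaccard_score(y_true, y_pred)
print('iou: ', iou_sklearn)
```

With 3 true positives, 2 false positives and 1 false negative, this evaluates to 3/6 = 0.5, matching the hand-written `iou` up to its 1e-10 smoothing term.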

IoU loss and focal loss

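# Assumption: a TensorFlow/Keras version whose backend module exposes
# sum, clip, pow and log (the classic `K` API).
import tensorflow as tf
from tensorflow.keras import backend as K

def iou_loss(y_true, y_pred):
    # Soft IoU on predicted probabilities (no rounding, so it stays differentiable).
    true_positives = K.sum(K.clip(y_true * y_pred, 0, 1))
    false_positives = K.sum(K.clip((1 - y_true) * y_pred, 0, 1))
    false_negatives = K.sum(K.clip(y_true * (1 - y_pred), 0, 1))
    iou_value = true_positives / (true_positives + false_positives +
                                  false_negatives + K.epsilon())
    return 1 - iou_value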

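def focal_loss(gamma=2., alpha=.25):
    # gamma down-weights easy examples; alpha weights the positive class.
    def focal_loss_fixed(y_true, y_pred):
        # Keep predictions away from 0 and 1 so the logs stay finite.
        y_pred = K.clip(y_pred, K.epsilon(), 1. - K.epsilon())
        # pt_1: predictions where the label is 1 (1 elsewhere, contributing zero loss).
        pt_1 = tf.where(tf.equal(y_true, 1), y_pred, tf.ones_like(y_pred))
        # pt_0: predictions where the label is 0 (0 elsewhere, contributing zero loss).
        pt_0 = tf.where(tf.equal(y_true, 0), y_pred, tf.zeros_like(y_pred))
        return (-K.sum(alpha * K.pow(1. - pt_1, gamma) * K.log(pt_1))
                - K.sum((1 - alpha) * K.pow(pt_0, gamma) * K.log(1. - pt_0)))
    return focal_loss_fixed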

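# `model` is assumed to be a Keras model defined elsewhere.
# Note: `iou` above is NumPy-based; as a Keras metric it may need a
# backend (K) version that operates on tensors.
#model.compile(loss=[iou_loss], optimizer='adam', metrics=['accuracy', iou])
model.compile(loss=[focal_loss(gamma=2, alpha=0.6)], optimizer='adam', metrics=['accuracy', iou])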
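To see what `gamma` and `alpha` do in the focal loss above, the same sum can be evaluated on plain numbers. The following is a rough pure-Python sketch, not the Keras implementation: `focal_loss_sum` and its `eps` clipping parameter are hypothetical names introduced here for illustration.

```python
import math

def focal_loss_sum(y_true, y_pred, gamma=2.0, alpha=0.25, eps=1e-7):
    """Summed focal loss over paired binary labels and predicted probabilities."""
    total = 0.0
    for t, p in zip(y_true, y_pred):
        # Clip so that log() never receives 0 (eps is an assumption of this sketch).
        p = min(max(p, eps), 1.0 - eps)
        if t == 1:
            # Positive example: weight alpha, modulating factor (1 - p)^gamma.
            total += -alpha * (1.0 - p) ** gamma * math.log(p)
        else:
            # Negative example: weight (1 - alpha), modulating factor p^gamma.
            total += -(1.0 - alpha) * p ** gamma * math.log(1.0 - p)
    return total

# A confidently correct positive versus a badly wrong positive.
easy = focal_loss_sum([1], [0.9])
hard = focal_loss_sum([1], [0.1])
print('easy positive:', easy)
print('hard positive:', hard)

# With gamma=0 the modulating factor vanishes, leaving alpha-weighted cross-entropy.
print('gamma=0:', focal_loss_sum([1], [0.9], gamma=0.0))
```

With `gamma=2`, a positive predicted at 0.9 has its cross-entropy term scaled by (1 - 0.9)^2 = 0.01, while one predicted at 0.1 is scaled by 0.81, so hard examples dominate the sum.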