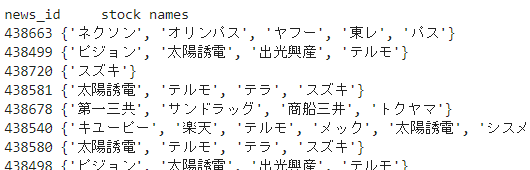

文書中のキーワード(企業名)を検索する

import pandas as pd

import re

df_meigara = pd.read_csv('xxx.csv')

df_news = pd.read_csv('xxx.csv')

stock_code_list = df_meigara['STOCK_CODE'].values

news_id_list = df_news['ID'].values

news_stock = {}

for news_id in news_id_list:

news_text = df_news[df_news['ID']==news_id]['NEWS_DETAIL'].values[0]

stock_list = set()

for stock_code in stock_code_list:

stock_name = df_meigara[df_meigara['STOCK_CODE']==stock_code]['STOCK_NAME'].values[0]

s = re.search(stock_name, news_text)

if s:

stock_list.add(stock_name)

news_stock[news_id] = stock_list

print('news_id', ' stock names')

for news_id in news_id_list[:30]:

if news_stock[news_id]:

print(news_id, news_stock[news_id])

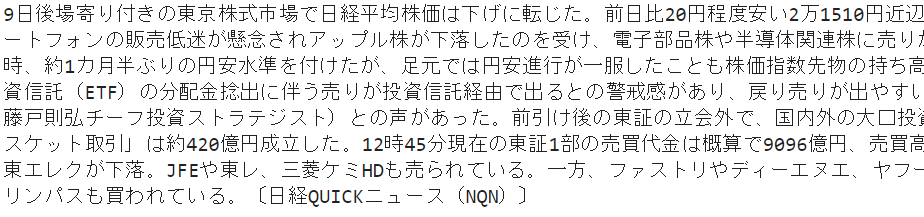

news_id = 438663

text = df_news[df_news['ID']==news_id]['NEWS_DETAIL'].values[0]

print(text)

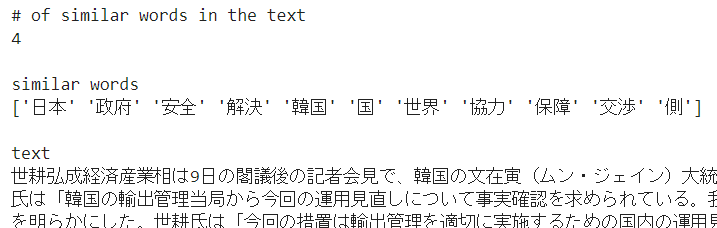

Word2Vec で類似語リストを作成し、文書中に含まれる類似語の数をカウントする

import pandas as pd

import numpy as np

import MeCab

from gensim.models import word2vec

# コーパスの作成

df_news = pd.read_csv('xxx.csv')

tagger = MeCab.Tagger("-Ochasen")

news_list = df_news['NEWS_DETAIL'].values

corpus = []

for text in news_list:

word_list = []

node = tagger.parseToNode(text)

while node:

word = node.surface

hinshi = node.feature.split(",")[0]

if hinshi=='名詞':

word_list.append(word)

node = node.next

corpus.append(word_list)

# 学習

model = word2vec.Word2Vec(corpus, size=100, min_count=1, window=10,

seed=1, workers=1, sg=1, hs=0, negative=5, iter=1)

vocab = model.wv.vocab

index2word = model.wv.index2word

# 各単語に対する類似度リストの作成

similar_words_dict = {}

for word in index2word:

temp = model.wv.most_similar(positive=[word], topn=10)

similar_words = [t[0] for t in temp]

similar_words_dict[word] = similar_words

# 使用例

keyword = '日本'

similar_words = np.concatenate(([keyword] ,similar_words_dict[keyword]))

text = news_list[0]

word_list = set()

node = tagger.parseToNode(text)

while node:

word = node.surface

hinshi = node.feature.split(",")[0]

if hinshi=='名詞':

word_list.add(word)

word_list2.append(word)

node = node.next

word_list = list(word_list)

count = 0

for word in word_list:

if word in similar_words:

count += 1

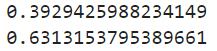

print('# of similar words in the text')

print(count)

print()

print('similar words')

print(similar_words)

print()

print('text')

print(text)

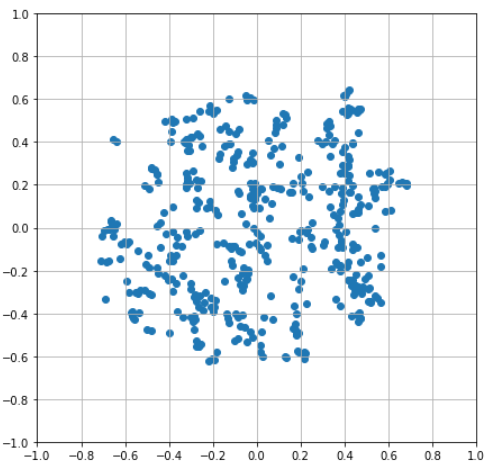

Gensim の Poincaré Embeddings を試す

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

%matplotlib inline

import MeCab

from gensim.models.poincare import PoincareModel, PoincareRelations

from gensim.viz.poincare import poincare_2d_visualization

# 階層データの作成

df_news = pd.read_csv('xxx.csv')

news_list = df_news['NEWS_DETAIL'].values

tagger = MeCab.Tagger("-Ochasen")

# relations = set()

relations = [] # 重複があってもよい

for text in news_list[:10]:

word_list = []

node = tagger.parseToNode(text)

while node:

word = node.surface

hinshi = node.feature.split(",")[0]

if hinshi=='名詞':

word_list.append(word)

node = node.next

for i in range(len(word_list)-1):

#relations.add((word_list[i], word_list[i+1]))

relations.append((word_list[i], word_list[i+1]))

# relations = list(relations)

# 学習

model = PoincareModel(relations, size=2, negative=2, burn_in=10, seed=1)

model.train(epochs=100)

# 作図

relations_set = set(relations)

figure_title = ''

show_node_labels = model.kv.vocab.keys()

vis = poincare_2d_visualization(model, relations_set,

figure_title, num_nodes=None,

show_node_labels=show_node_labels)

x = vis['data'][1]['x']

y = vis['data'][1]['y']

text = vis['data'][1]['text']

plt.figure(figsize=(7, 7))

plt.scatter(x, y)

# for i, _ in enumerate(x):

# plt.text(x[i], y[i], text[i])

plt.xticks(np.arange(-1.0, 1.2, 0.2))

plt.yticks(np.arange(-1.0, 1.2, 0.2))

plt.grid('on')

plt.show()

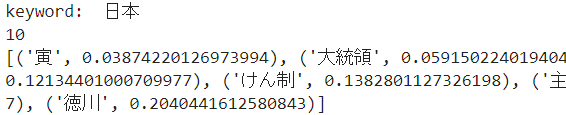

keyword = '日本'

similar_words = model.kv.most_similar(keyword)

print('keyword: ', keyword)

print(len(similar_words))

print(similar_words)

print(model.kv.norm('日本'))

print(model.kv.norm('政府'))

[参照論文・記事]

Poincaré Embeddings for Learning Hierarchical Representations

異空間への埋め込み!Poincare Embeddingsが拓く表現学習の新展開