What is Riak

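- A NoSQL distributed database of the key-value store type

- Designed on the basis of Amazon's Dynamo paper

- Masterless architecture with very high availability and fault tolerance; the consistency model is eventual consistency

- Uses Erlang/OTP as the foundation of its distributed system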

Many sites already explain Riak, so see them for the details:

- basho/riak

- Riak

- Riak Compared to DynamoDB

- Riak, a distributed database that makes operations easier

- The Computer That Became a Cloud: What Is Basho's Riak? (SDS7)

- Why Riak is good

Setup

On Amazon Linux, download and set up Riak 2.1.1, the latest version at the time of writing (Downloads).

I referred to the following site.

Installation

```
$ sudo yum install http://s3.amazonaws.com/downloads.basho.com/riak/2.1/2.1.1/rhel/6/riak-2.1.1-1.el6.x86_64.rpm
```

Creating a certificate

The web-based management console (Admin Panel) is served over HTTPS, so create a certificate for it first.

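```
$ openssl genrsa -aes128 -out key.pem 1024        # generate an AES-128-encrypted RSA private key
$ openssl req -new -key key.pem -out cert.csr     # create a certificate signing request
$ mv key.pem key.pem.org
$ openssl rsa -in key.pem.org -out key.pem        # remove the passphrase from the private key
$ openssl x509 -req -days 365 -in cert.csr -signkey key.pem -out cert.pem   # self-sign, valid for 365 days
$ sudo cp key.pem cert.pem /etc/riak/
```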
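The interactive steps above can also be collapsed into a single non-interactive command that writes an unencrypted key and a self-signed certificate. This is a sketch that assumes OpenSSL is installed. The following are my own assumptions: the subject `/CN=riak.example.internal`, the output-directory argument, the hypothetical function name `make_riak_cert`, and the 2048-bit key size. The key size is larger than the 1024 bits used above, which is considered too weak today.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: create <outdir>/key.pem (no passphrase) and a
# self-signed <outdir>/cert.pem valid for 365 days, in one openssl call.
# Assumptions (not from the original): 2048-bit RSA key, CN=riak.example.internal.
make_riak_cert() {
  local outdir="$1"
  openssl req -x509 -newkey rsa:2048 -nodes \
    -keyout "$outdir/key.pem" \
    -out "$outdir/cert.pem" \
    -days 365 \
    -subj "/CN=riak.example.internal"
}

# Usage (then copy the files to /etc/riak/ as above):
#   make_riak_cert .
#   sudo cp key.pem cert.pem /etc/riak/
```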

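Configuration

riak.conf

Change the following settings:

- Node settings

- SSL certificate settings

- Listener settings (HTTPS for the Admin Panel, and Protocol Buffers)

- Admin Panel user settings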

```diff
$ diff -u riak.conf.orig riak.conf
--- riak.conf.orig 2015-06-22 05:19:16.221995000 +0000
+++ riak.conf 2015-06-22 05:39:59.169302319 +0000
@@ -103,7 +103,7 @@
##
## Acceptable values:
## - text
-nodename = riak@127.0.0.1
+nodename = riak@10.1.11.211
## Cookie for distributed node communication. All nodes in the
@@ -204,14 +204,14 @@
##
## Acceptable values:
## - the path to a file
-## ssl.certfile = $(platform_etc_dir)/cert.pem
+ssl.certfile = $(platform_etc_dir)/cert.pem
## Default key location for https can be overridden with the ssl
## config variable, for example:
##
## Acceptable values:
## - the path to a file
-## ssl.keyfile = $(platform_etc_dir)/key.pem
+ssl.keyfile = $(platform_etc_dir)/key.pem
## Default signing authority location for https can be overridden
## with the ssl config variable, for example:
@@ -283,7 +283,7 @@
##
## Acceptable values:
## - an IP/port pair, e.g. 127.0.0.1:10011
-listener.http.internal = 127.0.0.1:8098
+#listener.http.internal = 127.0.0.1:8098
## listener.protobuf.<name> is an IP address and TCP port that the Riak
## Protocol Buffers interface will bind.
@@ -292,7 +292,7 @@
##
## Acceptable values:
## - an IP/port pair, e.g. 127.0.0.1:10011
-listener.protobuf.internal = 127.0.0.1:8087
+listener.protobuf.internal = 0.0.0.0:8087
## The maximum length to which the queue of pending connections
## may grow. If set, it must be an integer > 0. If you anticipate a
@@ -310,7 +310,7 @@
##
## Acceptable values:
## - an IP/port pair, e.g. 127.0.0.1:10011
-## listener.https.internal = 127.0.0.1:8098
+listener.https.internal = 0.0.0.0:8098
## How Riak will repair out-of-sync keys. Some features require
## this to be set to 'active', including search.
@@ -417,7 +417,7 @@
##
## Acceptable values:
## - on or off
-riak_control = off
+riak_control = on
## Authentication mode used for access to the admin panel.
##
@@ -425,7 +425,7 @@
##
## Acceptable values:
## - one of: off, userlist
-riak_control.auth.mode = off
+riak_control.auth.mode = userlist
## If riak control's authentication mode (riak_control.auth.mode)
## is set to 'userlist' then this is the list of usernames and
@@ -437,7 +437,7 @@
##
## Acceptable values:
## - text
-## riak_control.auth.user.admin.password = pass
+riak_control.auth.user.admin.password = passw0rd
## This parameter defines the percentage of total server memory
## to assign to LevelDB. LevelDB will dynamically adjust its internal
```
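Editing riak.conf by hand is fine for one node, but when several nodes need the same changes it can help to script them. The following is a minimal sketch that assumes GNU sed and the `key = value` line format shown in the diff. The helper name `set_riak_conf` is hypothetical. The helper rewrites every existing line for the key, whether active or commented out with `#`, and appends the setting if no such line exists. Commenting a line out, as the diff does for `listener.http.internal`, is handled separately by the plain `sed` shown in the usage comment.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: set "key = value" in a riak.conf-style file.
# Lines like "key = ..." or "## key = ..." (commented out) are replaced,
# which uncomments defaults such as "## ssl.certfile = ...". Appends if absent.
set_riak_conf() {
  local file="$1" key="$2" value="$3" key_re
  # Escape the dots in keys such as "ssl.certfile" so they match literally.
  key_re=$(printf '%s' "$key" | sed 's/\./\\./g')
  if grep -Eq "^(#+ *)?${key_re} *=" "$file"; then
    sed -E -i "s|^(#+ *)?${key_re} *=.*|${key} = ${value}|" "$file"
  else
    printf '%s = %s\n' "$key" "$value" >> "$file"
  fi
}

# Usage mirroring the diff above (as root):
#   set_riak_conf /etc/riak/riak.conf nodename riak@10.1.11.211
#   set_riak_conf /etc/riak/riak.conf ssl.certfile '$(platform_etc_dir)/cert.pem'
#   set_riak_conf /etc/riak/riak.conf listener.https.internal 0.0.0.0:8098
#   set_riak_conf /etc/riak/riak.conf riak_control on
#   sed -i 's/^listener\.http\.internal/#&/' /etc/riak/riak.conf   # comment out
```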

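Changing ulimit

If you try to start Riak as-is, it prints a warning like the following and asks you to raise the open files limit. Here the startup did not complete and was interrupted with ^C.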

```
$ sudo /etc/init.d/riak restart
Starting riak: !!!!
!!!! WARNING: ulimit -n is 1024; 65536 is the recommended minimum.
!!!!
^C
Session terminated, killing shell... ...killed.
[FAILED]
```

Raise the ulimit to fix this.

```
$ sudo vi /etc/security/limits.conf
root soft nofile 65536
root hard nofile 65536
```

Reboot the system to apply the change.

```
# ulimit -a
core file size (blocks, -c) 0
data seg size (kbytes, -d) unlimited
scheduling priority (-e) 0
file size (blocks, -f) unlimited
pending signals (-i) 7826
max locked memory (kbytes, -l) 64
max memory size (kbytes, -m) unlimited
open files (-n) 65536
pipe size (512 bytes, -p) 8
POSIX message queues (bytes, -q) 819200
real-time priority (-r) 0
stack size (kbytes, -s) 8192
cpu time (seconds, -t) unlimited
max user processes (-u) 7826
virtual memory (kbytes, -v) unlimited
file locks (-x) unlimited
```
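The limits.conf change can also be applied idempotently, which is convenient when preparing several nodes. This is a minimal sketch. The helper name `ensure_nofile_limit` is hypothetical. It only appends the missing `soft`/`hard` `nofile` lines for the given user and leaves any existing values for that user untouched. After applying it, reboot (or at least start a new login session) and confirm the result with `ulimit -n`, as above.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: ensure FILE contains "<user> soft nofile <limit>" and
# "<user> hard nofile <limit>". A line is appended only when no soft/hard
# nofile entry for that user exists yet; existing entries are not modified.
ensure_nofile_limit() {
  local file="$1" user="$2" limit="$3" kind
  for kind in soft hard; do
    if ! grep -Eq "^${user}[[:space:]]+${kind}[[:space:]]+nofile[[:space:]]+" "$file"; then
      printf '%s %s nofile %s\n' "$user" "$kind" "$limit" >> "$file"
    fi
  done
}

# Usage (as root):
#   ensure_nofile_limit /etc/security/limits.conf root 65536
```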

Starting the Riak service

```
$ sudo /etc/init.d/riak restart
Starting riak: [ OK ]
```

Verifying that it works

ping

```
$ curl -ik https://127.0.0.1:8098/ping
HTTP/1.1 200 OK
Server: MochiWeb/1.1 WebMachine/1.10.8 (that head fake, tho)
Date: Mon, 29 Jun 2015 09:58:23 GMT
Content-Type: text/html
Content-Length: 2

OK
```
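Right after a restart the node may need a few seconds before /ping answers, so scripts that restart Riak can poll it before continuing. This is a sketch that assumes curl and the HTTPS listener configured above. The function name `wait_for_riak_ping`, the default of 30 attempts, and the 1-second interval are my own choices. `-k` skips certificate verification because the certificate is self-signed.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: poll <base_url>/ping until Riak answers "OK".
# Returns 0 on success, 1 after <attempts> failed tries (1 second apart).
wait_for_riak_ping() {
  local base_url="${1:-https://127.0.0.1:8098}" attempts="${2:-30}" i body
  for ((i = 1; i <= attempts; i++)); do
    # -s: silent, -k: accept the self-signed cert, --max-time: per-try timeout
    body=$(curl -sk --max-time 2 "${base_url}/ping" 2>/dev/null || true)
    if [ "$body" = "OK" ]; then
      return 0
    fi
    if [ "$i" -lt "$attempts" ]; then sleep 1; fi
  done
  return 1
}

# Usage:
#   sudo /etc/init.d/riak restart && wait_for_riak_ping && echo "riak is up"
```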

Statistics

```
$ curl -sk https://127.0.0.1:8098/stats | jq .
{
  "connected_nodes": [],
  "consistent_get_objsize_100": 0,
  "consistent_get_objsize_95": 0,
  "consistent_get_objsize_99": 0,
  "consistent_get_objsize_mean": 0,
  "consistent_get_objsize_median": 0,
  "consistent_get_time_100": 0,
  "consistent_get_time_95": 0,
  "consistent_get_time_99": 0,
  "consistent_get_time_mean": 0,
  "consistent_get_time_median": 0,
  "consistent_gets": 0,
  "consistent_gets_total": 0,
  "consistent_put_objsize_100": 0,
  "consistent_put_objsize_95": 0,
  ...
}
```

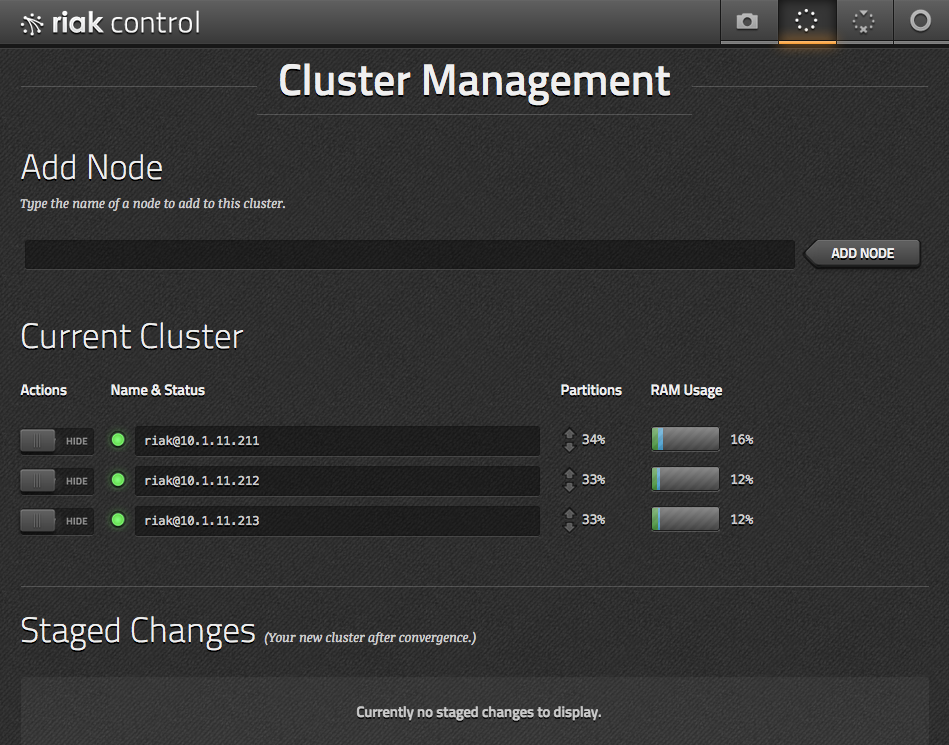

Accessing the Admin Panel

Open https://<HOSTNAME>:8098/admin to display the admin panel. Port 8098 must be reachable from the machine running the browser.

If HTTPS is enabled in riak.conf, as with listener.https.internal = 0.0.0.0:8098, the page will not be displayed unless you explicitly use https://.

Building a cluster

Riak makes it easy to build a scalable, masterless cluster.

Initial state

First, check the state before any cluster has been formed.

- Ring status

```
[riak@10.1.11.211 ~]$ sudo riak-admin ring_status
================================== Claimant ===================================
Claimant: 'riak@10.1.11.211'
Status: up
Ring Ready: true

============================== Ownership Handoff ==============================
No pending changes.

============================== Unreachable Nodes ==============================
All nodes are up and reachable
```

- Cluster status

```
[riak@10.1.11.211 ~]$ sudo riak-admin cluster status
---- Cluster Status ----
Ring ready: true

+----------------------+------+-------+-----+-------+
| node |status| avail |ring |pending|
+----------------------+------+-------+-----+-------+
| (C) riak@10.1.11.211 |valid | up |100.0| -- |
+----------------------+------+-------+-----+-------+

Key: (C) = Claimant; availability marked with '!' is unexpected
```

- Cluster-related statistics

```
$ curl -sk https://127.0.0.1:8098/stats | jq '{vnode_gets, vnode_puts, vnode_index_reads, ring_members, connected_nodes, ring_num_partitions, ring_ownership }'
{
  "vnode_gets": 0,
  "vnode_puts": 0,
  "vnode_index_reads": 0,
  "ring_members": [
    "riak@10.1.11.211"
  ],
  "connected_nodes": [],
  "ring_num_partitions": 64,
  "ring_ownership": "[{'riak@10.1.11.211',64}]"
}
```

Cloning a node

Creating an instance from an AMI, changing nodename in riak.conf, and starting Riak is not enough: Riak fails to start with errors like the following.

```
2015-06-29 10:50:40.364 [error] <0.201.0> gen_server riak_core_capability terminated with reason: no function clause matching orddict:fetch('riak@10.1.11.212', []) line 72
2015-06-29 10:50:40.365 [error] <0.201.0> CRASH REPORT Process riak_core_capability with 0 neighbours exited with reason: no function clause matching orddict:fetch('riak@10.1.11.212', []) line 72 in gen_server:terminate/6 line 744
2015-06-29 10:50:40.365 [error] <0.164.0> Supervisor riak_core_sup had child riak_core_capability started with riak_core_capability:start_link() at <0.201.0> exit with reason no function clause matching orddict:fetch('riak@10.1.11.212', []) line 72 in context child_terminated
```

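The link below describes the fix. The error occurs because stale ring information from the original node remains and is inconsistent with the new node name.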

gen_server riak_core_capability terminated with reason: no function clause matching orddict:fetch('Node', [])

The Node has been changed, either through change of IP or vm.args -name without notifying the ring. Either use the riak-admin cluster replace command, or remove the corrupted ring files rm -rf /var/lib/riak/ring/* and rejoin to the cluster

The problem can be fixed either by running riak-admin cluster replace, or by deleting /var/lib/riak/ring/* and restarting. Here the ring files were deleted:

```
$ sudo /etc/init.d/riak stop
$ sudo rm -f /var/lib/riak/ring/*
$ sudo /etc/init.d/riak start
Starting riak: [ OK ]
```

Adding nodes to the cluster

Cluster membership can be changed either with commands or from the web Admin Panel; here nodes are added with commands.

The main commands are:

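```
riak-admin ring_status            # check the ring status
riak-admin cluster join <NODE>    # stage joining the node that runs this command to the cluster <NODE> belongs to
riak-admin cluster plan           # review the staged changes
riak-admin cluster commit         # commit the staged changes
riak-admin cluster status         # check the cluster status
```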
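Because a join is only staged until it is committed, the join, plan, and commit steps can be wrapped so that the plan is always shown before committing. This is a hypothetical sketch, not part of Riak. The following are my own assumptions: the function name `riak_join_cluster`, the `RIAK_ADMIN` override, and the confirmation prompt. Setting `RIAK_ADMIN=echo` turns it into a dry run that only prints the commands. If the plan is rejected, `riak-admin cluster clear` discards the staged changes.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Command used to invoke riak-admin (left unquoted below on purpose so that
# "sudo riak-admin" splits into two words). Use RIAK_ADMIN=echo for a dry run.
RIAK_ADMIN=${RIAK_ADMIN:-"sudo riak-admin"}

# Hypothetical helper: stage a join to <node>, show the plan, and commit only
# after the operator confirms (or immediately when <assume_yes> is "yes").
riak_join_cluster() {
  local node="$1" assume_yes="${2:-no}" answer
  $RIAK_ADMIN cluster join "$node"
  $RIAK_ADMIN cluster plan
  if [ "$assume_yes" != "yes" ]; then
    read -r -p "Commit these changes? [y/N] " answer || answer=""
    case "$answer" in
      [yY]*) ;;
      *) echo "Not committed; run 'riak-admin cluster clear' to discard staged changes." >&2
         return 1 ;;
    esac
  fi
  $RIAK_ADMIN cluster commit
  $RIAK_ADMIN cluster status
}

# Usage on riak@10.1.11.211:
#   riak_join_cluster riak@10.1.11.212
```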

Adding a node to the cluster

```
# Add riak@10.1.11.211 to the cluster of riak@10.1.11.212
[riak@10.1.11.211 ~]$ sudo riak-admin cluster join riak@10.1.11.212
Success: staged join request for 'riak@10.1.11.211' to 'riak@10.1.11.212'

# riak@10.1.11.211 has already been added to a cluster, so it cannot also form a cluster with riak@10.1.11.213
[riak@10.1.11.211 ~]$ sudo riak-admin cluster join riak@10.1.11.213
Failed: This node is already a member of a cluster

# Review the changes to the cluster
[riak@10.1.11.211 ~]$ sudo riak-admin cluster plan
=============================== Staged Changes ================================
Action Details(s)
-------------------------------------------------------------------------------
join 'riak@10.1.11.211'
-------------------------------------------------------------------------------

NOTE: Applying these changes will result in 1 cluster transition

###############################################################################
After cluster transition 1/1
###############################################################################

================================= Membership ==================================
Status Ring Pending Node
-------------------------------------------------------------------------------
valid 0.0% 50.0% 'riak@10.1.11.211'
valid 100.0% 50.0% 'riak@10.1.11.212'
-------------------------------------------------------------------------------
Valid:2 / Leaving:0 / Exiting:0 / Joining:0 / Down:0

WARNING: Not all replicas will be on distinct nodes

Transfers resulting from cluster changes: 32
32 transfers from 'riak@10.1.11.212' to 'riak@10.1.11.211'

# Commit the changes
[riak@10.1.11.211 ~]$ sudo riak-admin cluster commit
Cluster changes committed
```

State after riak@10.1.11.211 and riak@10.1.11.212 have formed a cluster:

```
[riak@10.1.11.211 ~]$ sudo riak-admin ring_status
================================== Claimant ===================================
Claimant: 'riak@10.1.11.212'
Status: up
Ring Ready: true

============================== Ownership Handoff ==============================
No pending changes.

============================== Unreachable Nodes ==============================
All nodes are up and reachable

[riak@10.1.11.211 ~]$ sudo riak-admin cluster status
---- Cluster Status ----
Ring ready: true

+----------------------+------+-------+-----+-------+
| node |status| avail |ring |pending|
+----------------------+------+-------+-----+-------+
| riak@10.1.11.211 |valid | up | 50.0| -- |
| (C) riak@10.1.11.212 |valid | up | 50.0| -- |
+----------------------+------+-------+-----+-------+

Key: (C) = Claimant; availability marked with '!' is unexpected
```

Adding another node

```
# Add riak@10.1.11.213 to the cluster that contains riak@10.1.11.211
[riak@10.1.11.213 ~]$ sudo riak-admin cluster join riak@10.1.11.211
Success: staged join request for 'riak@10.1.11.213' to 'riak@10.1.11.211'

[riak@10.1.11.213 ~]$ sudo riak-admin cluster status
---- Cluster Status ----
Ring ready: true

+----------------------+-------+-------+-----+-------+
| node |status | avail |ring |pending|
+----------------------+-------+-------+-----+-------+
| riak@10.1.11.213 |joining| up | 0.0| -- |
| riak@10.1.11.211 | valid | up | 50.0| -- |
| (C) riak@10.1.11.212 | valid | up | 50.0| -- |
+----------------------+-------+-------+-----+-------+

Key: (C) = Claimant; availability marked with '!' is unexpected

# Review the changes
[riak@10.1.11.213 ~]$ sudo riak-admin cluster plan
=============================== Staged Changes ================================
Action Details(s)
-------------------------------------------------------------------------------
join 'riak@10.1.11.213'
-------------------------------------------------------------------------------

NOTE: Applying these changes will result in 1 cluster transition

###############################################################################
After cluster transition 1/1
###############################################################################

================================= Membership ==================================
Status Ring Pending Node
-------------------------------------------------------------------------------
valid 50.0% 34.4% 'riak@10.1.11.211'
valid 50.0% 32.8% 'riak@10.1.11.212'
valid 0.0% 32.8% 'riak@10.1.11.213'
-------------------------------------------------------------------------------
Valid:3 / Leaving:0 / Exiting:0 / Joining:0 / Down:0

WARNING: Not all replicas will be on distinct nodes

Transfers resulting from cluster changes: 21
10 transfers from 'riak@10.1.11.211' to 'riak@10.1.11.213'
11 transfers from 'riak@10.1.11.212' to 'riak@10.1.11.213'

# Commit the changes
[riak@10.1.11.213 ~]$ sudo riak-admin cluster commit
Cluster changes committed
```

Checking the cluster status

From the command line

```
$ sudo riak-admin cluster status
---- Cluster Status ----
Ring ready: true

+----------------------+------+-------+-----+-------+
| node |status| avail |ring |pending|
+----------------------+------+-------+-----+-------+
| riak@10.1.11.211 |valid | up | 34.4| -- |
| (C) riak@10.1.11.212 |valid | up | 32.8| -- |
| riak@10.1.11.213 |valid | up | 32.8| -- |
+----------------------+------+-------+-----+-------+

Key: (C) = Claimant; availability marked with '!' is unexpected
```
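The ring percentages follow from the fixed partition count. With ring_num_partitions = 64 and three nodes, 64 = 22 + 21 + 21. 22/64 ≈ 34.4% and 21/64 ≈ 32.8%, which is the split shown above. With two nodes it is 32 + 32, or 50.0% each. The sketch below only illustrates this arithmetic; the function name is hypothetical. Which node actually ends up with the extra partition is decided by Riak's claim algorithm, not by this code.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Illustrative arithmetic only: split <ring_size> partitions as evenly as
# possible across <nodes> nodes and print "partitions percent" per node.
even_split() {
  local ring_size="$1" nodes="$2" i count
  for ((i = 0; i < nodes; i++)); do
    # The first (ring_size % nodes) nodes get one extra partition.
    count=$(( ring_size / nodes + (i < ring_size % nodes ? 1 : 0) ))
    awk -v c="$count" -v r="$ring_size" 'BEGIN { printf "%d %.1f\n", c, 100 * c / r }'
  done
}

# even_split 64 3 prints:
#   22 34.4
#   21 32.8
#   21 32.8
```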

From the REST API

```
[riak@10.1.11.211 ~]$ curl -sk https://127.0.0.1:8098/stats | jq '{vnode_gets, vnode_puts, vnode_index_reads, ring_members, connected_nodes, ring_num_partitions, ring_ownership }'
{
  "vnode_gets": 0,
  "vnode_puts": 0,
  "vnode_index_reads": 0,
  "ring_members": [
    "riak@10.1.11.211",
    "riak@10.1.11.212",
    "riak@10.1.11.213"
  ],
  "connected_nodes": [
    "riak@10.1.11.212",
    "riak@10.1.11.213"
  ],
  "ring_num_partitions": 64,
  "ring_ownership": "[{'riak@10.1.11.211',21},{'riak@10.1.11.212',22},{'riak@10.1.11.213',21}]"
}
```
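The `ring_ownership` value in /stats is an Erlang-style term encoded as a JSON string, so jq cannot break it down further on its own. This is a minimal sketch that turns it into per-node partition counts and percentages with sed and awk. It assumes GNU sed and the `[{'node',count},...]` format shown above. The function name `ring_ownership_table` is hypothetical.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: read a ring_ownership string such as
#   [{'riak@10.1.11.211',21},{'riak@10.1.11.212',22}]
# on stdin and print "node partitions percent" for each node.
ring_ownership_table() {
  # One {'node',count} pair per line, then strip brackets, braces, and quotes.
  sed -e "s/},{/}\n{/g" -e "s/[][{}']//g" |
    awk -F, '{ node[NR] = $1; cnt[NR] = $2; total += $2 }
             END { for (i = 1; i <= NR; i++)
                     printf "%s %d %.1f\n", node[i], cnt[i], 100 * cnt[i] / total }'
}

# Usage with the stats endpoint (jq -r prints the raw string):
#   curl -sk https://127.0.0.1:8098/stats | jq -r .ring_ownership | ring_ownership_table
```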

From the Admin Panel

Behavior when a node fails

Take one node down.

```
$ sudo riak-admin cluster status
---- Cluster Status ----
Ring ready: true

+----------------------+------+-------+-----+-------+
| node |status| avail |ring |pending|
+----------------------+------+-------+-----+-------+
| riak@10.1.11.211 |valid | up | 34.4| -- |
| (C) riak@10.1.11.212 |valid | up | 32.8| -- |
| riak@10.1.11.213 |valid | down! | 32.8| -- |
+----------------------+------+-------+-----+-------+

Key: (C) = Claimant; availability marked with '!' is unexpected

[ec2-user@ip-10-1-11-211 ~]$ sudo riak-admin ring_status
================================== Claimant ===================================
Claimant: 'riak@10.1.11.212'
Status: up
Ring Ready: true

============================== Ownership Handoff ==============================
No pending changes.

============================== Unreachable Nodes ==============================
The following nodes are unreachable: ['riak@10.1.11.213']

WARNING: The cluster state will not converge until all nodes
are up. Once the above nodes come back online, convergence
will continue. If the outages are long-term or permanent, you
can either mark the nodes as down (riak-admin down NODE) or
forcibly remove the nodes from the cluster (riak-admin
force-remove NODE) to allow the remaining nodes to settle.
```

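The node is marked down!, which shows that the failure has been detected.

Because Riak stores copies of the data on multiple nodes, the service remains available in this state.

If the outage is expected to last a long time, either mark the node explicitly as down with riak-admin down <NODE>, or remove it from the cluster with riak-admin cluster force-remove <NODE>.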
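For monitoring, nodes that are unexpectedly down can be picked out of the `riak-admin cluster status` table by looking for an availability value marked with `!`, as the table's Key line describes. This sketch parses the table layout shown above with awk. The function name is hypothetical. The column positions are taken from this output and are an assumption, not a documented, stable format.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: read `riak-admin cluster status` output on stdin and
# print the names of nodes whose "avail" column ends with "!" (unexpected).
unexpectedly_down_nodes() {
  awk -F'|' '
    # Data rows look like: | (C) riak@host |valid | down! | 32.8| -- |
    NF >= 6 && $2 ~ /riak@/ {
      node = $2; sub(/^ *(\(C\) *)?/, "", node); sub(/ *$/, "", node)
      avail = $4; gsub(/ /, "", avail)
      if (avail ~ /!$/) print node
    }'
}

# Usage:
#   sudo riak-admin cluster status | unexpectedly_down_nodes
```

A node marked down with `riak-admin down` shows `down` without `!`, so it is not reported.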

riak-admin down

```
$ sudo riak-admin down riak@10.1.11.213
Success: "riak@10.1.11.213" marked as down

$ sudo riak-admin cluster status
---- Cluster Status ----
Ring ready: true

+----------------------+------+-------+-----+-------+
| node |status| avail |ring |pending|
+----------------------+------+-------+-----+-------+
| riak@10.1.11.213 | down | down | 32.8| -- |
| riak@10.1.11.211 |valid | up | 34.4| -- |
| (C) riak@10.1.11.212 |valid | up | 32.8| -- |
+----------------------+------+-------+-----+-------+

Key: (C) = Claimant; availability marked with '!' is unexpected

$ sudo riak-admin ring_status
================================== Claimant ===================================
Claimant: 'riak@10.1.11.212'
Status: up
Ring Ready: true

============================== Ownership Handoff ==============================
No pending changes.

============================== Unreachable Nodes ==============================
All nodes are up and reachable
```

Because the node was explicitly marked as down, it no longer appears under Unreachable Nodes.

riak-admin cluster force-remove

```
$ sudo riak-admin cluster force-remove riak@10.1.11.213
Success: staged remove request for 'riak@10.1.11.213'

$ sudo riak-admin cluster plan
=============================== Staged Changes ================================
Action Details(s)
-------------------------------------------------------------------------------
force-remove 'riak@10.1.11.213'
-------------------------------------------------------------------------------

WARNING: All of 'riak@10.1.11.213' replicas will be lost

NOTE: Applying these changes will result in 1 cluster transition

###############################################################################
After cluster transition 1/1
###############################################################################

================================= Membership ==================================
Status Ring Pending Node
-------------------------------------------------------------------------------
valid 67.2% 50.0% 'riak@10.1.11.211'
valid 32.8% 50.0% 'riak@10.1.11.212'
-------------------------------------------------------------------------------
Valid:2 / Leaving:0 / Exiting:0 / Joining:0 / Down:0

WARNING: Not all replicas will be on distinct nodes

Partitions reassigned from cluster changes: 21
21 reassigned from 'riak@10.1.11.213' to 'riak@10.1.11.211'

Transfers resulting from cluster changes: 21
16 transfers from 'riak@10.1.11.211' to 'riak@10.1.11.212'
5 transfers from 'riak@10.1.11.212' to 'riak@10.1.11.211'

$ sudo riak-admin cluster commit
Cluster changes committed
```

The ring ownership gradually converges until it is evenly balanced:

```
$ sudo riak-admin cluster status
---- Cluster Status ----
Ring ready: true

+----------------------+------+-------+-----+-------+
| node |status| avail |ring |pending|
+----------------------+------+-------+-----+-------+
| riak@10.1.11.211 |valid | up | 67.2| 50.0 |
| (C) riak@10.1.11.212 |valid | up | 32.8| 50.0 |
+----------------------+------+-------+-----+-------+

Key: (C) = Claimant; availability marked with '!' is unexpected

$ sudo riak-admin cluster status
---- Cluster Status ----
Ring ready: true

+----------------------+------+-------+-----+-------+
| node |status| avail |ring |pending|
+----------------------+------+-------+-----+-------+
| riak@10.1.11.211 |valid | up | 54.7| 50.0 |
| (C) riak@10.1.11.212 |valid | up | 45.3| 50.0 |
+----------------------+------+-------+-----+-------+

Key: (C) = Claimant; availability marked with '!' is unexpected

$ sudo riak-admin cluster status
---- Cluster Status ----
Ring ready: true

+----------------------+------+-------+-----+-------+
| node |status| avail |ring |pending|
+----------------------+------+-------+-----+-------+
| riak@10.1.11.211 |valid | up | 50.0| -- |
| (C) riak@10.1.11.212 |valid | up | 50.0| -- |
+----------------------+------+-------+-----+-------+

Key: (C) = Claimant; availability marked with '!' is unexpected
```
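As the three snapshots show, ownership handoff after a membership change takes some time. Until it finishes, the pending column shows a target percentage instead of `--`. A script can wait for convergence by polling `riak-admin cluster status` until every node row shows `--` there. This is a sketch based on that assumption about the table layout. The following are hypothetical: the function names, the `STATUS_CMD` override, and the default of 60 polls 10 seconds apart.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Command that prints the cluster status table (left unquoted below on purpose
# so "sudo riak-admin cluster status" splits into words); overridable for tests.
STATUS_CMD=${STATUS_CMD:-"sudo riak-admin cluster status"}

# Succeeds when the table on stdin has at least one node row and every
# node row has "--" in the pending column.
all_transfers_done() {
  awk -F'|' '
    NF >= 6 && $2 ~ /riak@/ {
      rows++; pending = $6; gsub(/ /, "", pending)
      if (pending != "--") busy = 1
    }
    END { exit !(rows > 0 && !busy) }'
}

# Hypothetical helper: poll up to <attempts> times, <interval> seconds apart.
wait_for_convergence() {
  local attempts="${1:-60}" interval="${2:-10}" i
  for ((i = 1; i <= attempts; i++)); do
    if $STATUS_CMD | all_transfers_done; then
      return 0
    fi
    if [ "$i" -lt "$attempts" ]; then sleep "$interval"; fi
  done
  return 1
}

# Usage after "riak-admin cluster commit":
#   wait_for_convergence && echo "ring has converged"
```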