はじめに

pythonのプログラムで最良経路探索問題を解いてみました.

教科書として『強化学習』を使いました.

本記事の構成

- はじめに

- 最良経路探索

- ルール

- Q 値の更新

- 実装

- 結果

- おわりに

最良経路探索

ルール

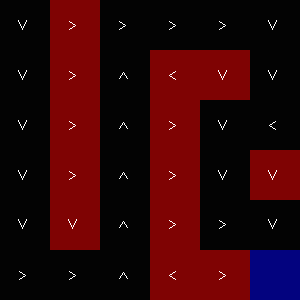

図のような迷路での探索問題を考えます.白い丸がエージェント,赤がマイナス領域,青がゴールになります.

ここでいう探索問題とは,ゴールに辿り着くまでの報酬を最大にする経路を探索することを指します.

以下にルールを示します.

- 迷路のサイズ: $6\times6$

- それぞれの領域における報酬:黒の領域 $0$,赤の領域 $-1$,青の領域 $+3$

- 青のゴールに辿り着いたら左上のスタート地点からやり直す

Q 値の更新

以下の式により $Q$ 値を更新します.

Q_{new}(s_t, a) = Q_{old}(s_t, a) + \alpha\bigl[r_{t+1} + \gamma max_{a'}Q(s_{t+1}, a') - Q_{old}(s_t, a)\bigr]

$Q(s_t, a)$ は,ある状態 $s_t$ での行動 $a$ の価値を表します.状態 $s_t$ での行動 $a$ により報酬 $r_{t+1}$ が得られます.

$Q$ 値を更新する際,直近の報酬 $r_{t+1}$ に,その次の状態 $s_{t+1}$ における行動 $a'$ によって得られる価値の最大値に $\gamma$ を乗じたものを加えます.

これは,ある状態での行動を直近の報酬だけでなく,その後の状態における価値を加味して行動を選択することを意味します.

$\gamma$ を割引率,$\alpha$ を学習率と呼びます.

実装

pythonで実装しました.

ランダムな行動をとる確率 $\varepsilon = 0.1$,学習率 $\alpha = 0.2$,割引率 $\gamma = 0.9$ としています.

$300$ 回ゴールしたら学習終了としています.

.

|- agent.py エージェントクラス

|- mazeimage.py 表示・保存用のクラス

|- main.py

|- resources

|- maze.csv

import numpy

import cv2

import random

import copy

class Agent:

# constructor

def __init__(self, maze_shape):

self.state = numpy.array([0, 0])

self.actions = self.__create_actions(maze_shape)

self.moves = {0: numpy.array([0, -1]), 1: numpy.array([1, 0]), 2: numpy.array([0, 1]), 3: numpy.array([-1, 0])}

self.q = numpy.zeros((4, ) + maze_shape)

# public method

def act(self, maze, epsilon, alpha, gamma):

act_index = self.__select_action(epsilon)

move = self.moves.get(act_index)

reward = maze[tuple(self.state + move)]

self.update_q(act_index, move, reward, alpha, gamma)

self.state += move

def update_q(self, act_index, move, reward, alpha, gamma):

y, x = self.state

_q = self.q[act_index, y, x]

self.q[act_index, y, x] = _q + alpha * (reward + gamma * self.__get_max_q(move) - _q)

def goal(self, maze_shape):

return numpy.array_equal(self.state, numpy.array(maze_shape) - 1)

def reset(self):

self.state = numpy.array([0, 0])

# private method

def __create_actions(self, maze_shape):

actions = []

maze_h, maze_w = maze_shape

for j in range(maze_h):

actions.append([])

for i in range(maze_w):

action = [0, 1, 2, 3]

self.__remove_actions(action, maze_h, maze_w, j, i)

actions[j].append(action)

return numpy.array(actions)

def __remove_actions(self, action, maze_h, maze_w, j, i):

if i == 0:

action.remove(0)

if i == maze_w - 1:

action.remove(2)

if j == 0:

action.remove(3)

if j == maze_h - 1:

action.remove(1)

def __select_action(self, epsilon):

y, x = self.state

action = copy.deepcopy(self.actions[y, x])

if numpy.random.rand() > epsilon:

mode = '!!!greedy!!!'

act_index = self.__select_greedy_action(action)

else:

mode = '!!!random!!!'

act_index = self.__select_random_action(action)

print u'%s state: (%d, %d), action: %d' % (mode, y, x, act_index)

return act_index

def __select_greedy_action(self, action):

y, x = self.state

_max = self.q[action, y, x].max()

_indexes = list(numpy.argwhere(self.q[action, y, x] == _max))

random.shuffle(_indexes)

return action[_indexes[0]]

def __select_random_action(self, action):

random.shuffle(action)

return action[0]

def __get_max_q(self, move):

y, x = self.state + move

move = self.actions[y, x]

return self.q[move, y, x].max()

import numpy

import cv2

class MazeImage:

# constructor

def __init__(self, maze, height, width):

self.height, self.width = (height, width)

self.maze_h, self.maze_w = maze.shape

self.ystride = height / self.maze_h

self.xstride = width / self.maze_w

self.map_org = self.__create_image(maze)

self.map_now = self.map_org

self.writer = cv2.VideoWriter('q_learning.mv4', cv2.cv.CV_FOURCC('m', 'p', '4', 'v'), 30, (self.map_org.shape[1], self.map_org.shape[0]))

# public method

def show(self, agent):

self.map_now = self.map_org.copy()

_y, _x = agent.state

center = (int((_x + 0.5) * self.xstride), int((_y + 0.5) * self.ystride))

cv2.circle(self.map_now, center, 11, (255, 255, 255), -1, cv2.cv.CV_AA)

cv2.imshow('', self.map_now)

return cv2.waitKey(10)

def save_movie(self):

self.writer.write(self.map_now)

def shortest_path(self, q):

shortest_map = self.map_org.copy()

q_arrow = numpy.vectorize(lambda x: {0: '<', 1: 'V', 2: '>', 3: '^'}.get(x))(q.argmax(axis = 0))

for j in range(self.maze_h):

for i in range(self.maze_w):

pt = (int(self.xstride * (i + 0.35)), int(self.ystride * (j + 0.6)))

cv2.putText(shortest_map, q_arrow[j, i], pt, cv2.FONT_HERSHEY_PLAIN, 1, [255, 255, 255])

return shortest_map

# private method

def __create_image(self, maze):

image = numpy.zeros((self.height, self.width, 3)).astype('uint8')

for j in range(self.maze_h):

for i in range(self.maze_w):

tl = (self.xstride * i, self.ystride * j)

br = (self.xstride * (i + 1) - 1, self.ystride * (j + 1) - 1)

cv2.rectangle(image, tl, br, self.__color(maze[j, i]), -1)

return image

def __color(self, score):

if score == 0.0:

return [0, 0, 0]

elif score == -1.0:

return [0, 0, 127]

else:

return [127, 0, 0]

from agent import *

from mazeimage import *

if __name__ == '__main__':

# init

epsilon = 0.1

alpha = 0.2

gamma = 0.9

maze = numpy.loadtxt('./resources/maze.csv', delimiter = ',')

agent = Agent(maze.shape)

maze_image = MazeImage(maze, 300, 300)

trial = 0

while True:

if maze_image.show(agent) == 27:

print '!!!escape!!!'

break

agent.act(maze, epsilon, alpha, gamma)

maze_image.save_movie()

if agent.goal(maze.shape):

print '\033[32m' + '!!!goal!!!' + '\033[0m'

trial += 1

print 'next trial: %d' % trial

agent.reset()

if trial == 300:

break

maze_image.save_movie()

cv2.imwrite('shortest.png', maze_image.shortest_path(agent.q))

cv2.destroyAllWindows()

maze.csv

| 0 | -1 | 0 | 0 | 0 | 0 |

| 0 | -1 | 0 | -1 | -1 | 0 |

| 0 | -1 | 0 | -1 | 0 | 0 |

| 0 | -1 | 0 | -1 | 0 | -1 |

| 0 | -1 | 0 | -1 | 0 | 0 |

| 0 | 0 | 0 | -1 | -1 | 3 |

結果

学習により得られた最良経路の図を示します.マイナスの領域を通らずにゴールに辿り着く経路が学習できています.

学習の様子を動画で保存するように実装したので,興味のある方は動かしてみてください.

おわりに

最良経路探索問題を解くことができました.

$Q(s_t, a)$ の更新式が示すように,$1$ つ先の状態における価値を考慮することで,ゴール側から価値が染み出してくるということが理解できました.