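I previously set up and ran a pix2pix environment on an Ubuntu desktop at work. To play with it at home, I'll see whether it can also run on Colaboratory.

Using Keras, I'll go as far as getting training to progress on the basic facades dataset.

As preparation, since I want to train on a GPU, choose "Edit" → "Notebook settings" → "Hardware accelerator" from the Colaboratory menu and select GPU.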
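Before going further, it can be worth confirming that the runtime really has a GPU attached. The following is a minimal sketch that only assumes the `nvidia-smi` tool is installed on GPU runtimes; the helper name `gpu_visible` is made up for this example.

```python
import shutil
import subprocess


def gpu_visible():
    """Return True if nvidia-smi is on PATH and lists at least one GPU."""
    if shutil.which("nvidia-smi") is None:
        # No NVIDIA tooling at all, so almost certainly a CPU-only runtime.
        return False
    result = subprocess.run(
        ["nvidia-smi", "-L"],  # -L lists the GPUs the driver can see
        stdout=subprocess.PIPE,
        stderr=subprocess.PIPE,
    )
    return result.returncode == 0 and b"GPU" in result.stdout


print("GPU visible:", gpu_visible())
```

If this prints `False`, the hardware accelerator setting probably did not take effect and the notebook settings are worth checking again.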

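First, I'll follow the instructions in the "Building the data" section.

Because we are working in Colaboratory, each command is run from a notebook cell with a ! prefix rather than from a command line.

On Colaboratory, clone the code from github.com/phillipi/pix2pix with git.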

!git clone https://github.com/phillipi/pix2pix.git

Separately, since I want to work with the Keras implementation, clone github.com/tdeboissiere/DeepLearningImplementations as well.

!git clone https://github.com/tdeboissiere/DeepLearningImplementations.git

What we need is the pix2pix folder inside the tdeboissiere/DeepLearningImplementations repository. Copy its contents into the cloned phillipi/pix2pix folder.

!rsync -a DeepLearningImplementations/pix2pix/ pix2pix/

Check the contents, just to be sure.

ls pix2pix/

data/ figures/ LICENSE models.lua* scripts/ test.lua* util/

datasets/ imgs/ models/ README.md src/ train.lua

The src folder and other items from DeepLearningImplementations have been added, so this looks fine.
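The same check can be done programmatically instead of by eye. This is a small sketch; the helper name `missing_paths` is made up for this example, and the expected names are simply taken from the listing above.

```python
import os


def missing_paths(root, expected):
    """Return the entries from `expected` that do not exist under `root`."""
    return [
        name for name in expected
        if not os.path.exists(os.path.join(root, name))
    ]


# train.lua and datasets come from phillipi/pix2pix, src from the Keras repository.
# An empty list means both repositories ended up in the same folder.
print(missing_paths("pix2pix", ["train.lua", "datasets", "src"]))
```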

Next, download the provided facades dataset.

A script called download_dataset is provided, so run that.

It is a light dataset of about 29MB.

Because we are running from Colaboratory and download_dataset.sh uses relative paths, change the directory so that ./datasets/download_dataset.sh can be specified exactly as written in the repository's instructions.

I wondered how to do that from Colaboratory without a command line. It turns out the os.chdir function handles it simply.

Reference: Changing directory in Google collab (breaking out of the python interpreter)

import os

os.chdir('pix2pix/')

!bash ./datasets/download_dataset.sh facades
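Since `os.chdir` changes the working directory for every later cell, it is easy to lose track of where the notebook currently is. One alternative, shown here only as a sketch and not what this post does in the following steps, is a small context manager that restores the previous directory afterwards. The name `working_directory` is made up for this example.

```python
import contextlib
import os


@contextlib.contextmanager
def working_directory(path):
    """Temporarily switch to `path`, then return to the previous directory."""
    previous = os.getcwd()
    os.chdir(path)
    try:
        yield
    finally:
        # Runs even if the body raises, so the notebook never stays "stuck".
        os.chdir(previous)


# Hypothetical usage in a notebook cell:
# with working_directory("pix2pix"):
#     os.system("bash ./datasets/download_dataset.sh facades")
```

The steps below keep using plain `os.chdir`, so their relative paths assume the directory changes accumulate.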

The downloaded data needs to be converted to HDF5 format.

A script called make_dataset.py is provided, so run that.

Note: when I ran this on an Ubuntu desktop PC before, I got stuck with Python 2.7, so I run this script with Python 3.

!python -V

Python 3.6.3

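Also install some of the libraries specified in the repository with pip. (To save time, I'm skipping the ones that Colaboratory provides out of the box, such as Keras and TensorFlow. If a version difference causes errors later, I'll adjust.)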

!pip install parmap==1.5.1

!pip install tqdm==4.17.0

!pip install opencv_python==3.3.0.10

!pip install h5py==2.7.0

!pip install numpy==1.13.3
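To see whether the pins actually took effect, the installed versions can be compared with the requested ones. This sketch assumes Python 3.8 or later for `importlib.metadata`, which is newer than the Python 3.6.3 shown above; the helper name `installed_version` is made up for this example.

```python
from importlib import metadata

# Versions pinned by the pip commands above.
PINNED = {
    "parmap": "1.5.1",
    "tqdm": "4.17.0",
    "opencv_python": "3.3.0.10",
    "h5py": "2.7.0",
    "numpy": "1.13.3",
}


def installed_version(dist_name):
    """Return the installed version string, or None if it is not installed."""
    try:
        return metadata.version(dist_name)
    except metadata.PackageNotFoundError:
        return None


for name, wanted in PINNED.items():
    print(name, "pinned:", wanted, "installed:", installed_version(name))
```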

make_dataset.py uses relative paths internally, and running it from the notebook's default working directory results in an error. So, following the repository's instructions, change the current directory to the one where make_dataset.py is located.

os.chdir('./src/data/')

ls

make_dataset.py README.md

!python make_dataset.py ../../datasets/facades/ 3 --img_size 256

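Note: the arguments are the directory containing the jpg files (that is, the facades dataset), the number of color channels (3, since these are ordinary jpg images), and the image size (256, since the dataset is 256 pixels high by 256 pixels wide).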
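To make the shape of that command line concrete, here is a sketch of how such arguments could be parsed with `argparse`. This is not the actual code of make_dataset.py; the names `jpeg_dir` and `nb_channels` are hypothetical, and only `--img_size` is taken from the command above.

```python
import argparse


def build_parser():
    """Build a parser with two positional arguments and one option."""
    parser = argparse.ArgumentParser(description="Sketch of a dataset-building CLI")
    # Hypothetical name: directory that holds the jpg files.
    parser.add_argument("jpeg_dir", type=str, help="directory containing the jpg images")
    # Hypothetical name: 3 for ordinary color jpg images.
    parser.add_argument("nb_channels", type=int, help="number of color channels")
    parser.add_argument("--img_size", type=int, default=256, help="image height and width in pixels")
    return parser


args = build_parser().parse_args(["../../datasets/facades/", "3", "--img_size", "256"])
print(args.jpeg_dir, args.nb_channels, args.img_size)
```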

100%|█████████████████████████████████████████████| 4/4 [00:02<00:00, 1.67it/s]

100%|█████████████████████████████████████████████| 1/1 [00:00<00:00, 1.19it/s]

100%|█████████████████████████████████████████████| 1/1 [00:00<00:00, 1.65it/s]

Next, proceed by following the "Training and evaluating" section.

os.chdir('../../src/model/')

Keras complains if graphviz and related packages are missing, so install those as well.

!pip install pydot==1.2.3

!pip install graphviz==0.8.1

!pip install pydot3==1.0.9

!pip install pydot-ng==1.0.0

!apt-get install -y graphviz
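A quick way to confirm that the plotting dependencies are in place before starting a long run is to look for the Graphviz `dot` executable and the Python modules. This is a minimal sketch; the helper name `plotting_dependencies` is made up for this example.

```python
import importlib.util
import shutil


def plotting_dependencies():
    """Report whether the Graphviz binary and the Python wrappers can be found."""
    return {
        # Installed by the apt-get graphviz package.
        "dot executable": shutil.which("dot") is not None,
        # Installed by pip.
        "pydot module": importlib.util.find_spec("pydot") is not None,
        "graphviz module": importlib.util.find_spec("graphviz") is not None,
    }


for name, found in plotting_dependencies().items():
    print(name, "found" if found else "missing")
```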

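Now start training. The goal of this post is only to confirm that pix2pix runs, so I set the number of epochs to just 10. (The default is 400 epochs, and at this point I haven't set up Colaboratory so that files are not lost, so I keep the run short for now.)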

!python main.py 64 64 --backend tensorflow --nb_epoch 10

Note that if you did not enable the GPU at the start, even 10 epochs will likely take quite a while.

Using TensorFlow backend.

2018-06-30 10:16:00.491896: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:897] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero

2018-06-30 10:16:00.492350: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1392] Found device 0 with properties:

name: Tesla K80 major: 3 minor: 7 memoryClockRate(GHz): 0.8235

pciBusID: 0000:00:04.0

totalMemory: 11.17GiB freeMemory: 11.10GiB

2018-06-30 10:16:00.492402: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1471] Adding visible gpu devices: 0

2018-06-30 10:16:00.725139: I tensorflow/core/common_runtime/gpu/gpu_device.cc:952] Device interconnect StreamExecutor with strength 1 edge matrix:

2018-06-30 10:16:00.725203: I tensorflow/core/common_runtime/gpu/gpu_device.cc:958] 0

2018-06-30 10:16:00.725249: I tensorflow/core/common_runtime/gpu/gpu_device.cc:971] 0: N

2018-06-30 10:16:00.725546: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1084] Created TensorFlow device (/job:localhost/replica:0/task:0/device:GPU:0 with 10765 MB memory) -> physical GPU (device: 0, name: Tesla K80, pci bus id: 0000:00:04.0, compute capability: 3.7)

Layer information for the Keras GAN models is printed, and then training starts.

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

unet_input (InputLayer) (None, 256, 256, 3) 0

__________________________________________________________________________________________________

unet_conv2D_1 (Conv2D) (None, 128, 128, 64) 1792 unet_input[0][0]

__________________________________________________________________________________________________

leaky_re_lu_1 (LeakyReLU) (None, 128, 128, 64) 0 unet_conv2D_1[0][0]

__________________________________________________________________________________________________

unet_conv2D_2 (Conv2D) (None, 64, 64, 128) 73856 leaky_re_lu_1[0][0]

__________________________________________________________________________________________________

batch_normalization_1 (BatchNor (None, 64, 64, 128) 512 unet_conv2D_2[0][0]

__________________________________________________________________________________________________

leaky_re_lu_2 (LeakyReLU) (None, 64, 64, 128) 0 batch_normalization_1[0][0]

__________________________________________________________________________________________________

unet_conv2D_3 (Conv2D) (None, 32, 32, 256) 295168 leaky_re_lu_2[0][0]

__________________________________________________________________________________________________

batch_normalization_2 (BatchNor (None, 32, 32, 256) 1024 unet_conv2D_3[0][0]

__________________________________________________________________________________________________

leaky_re_lu_3 (LeakyReLU) (None, 32, 32, 256) 0 batch_normalization_2[0][0]

__________________________________________________________________________________________________

unet_conv2D_4 (Conv2D) (None, 16, 16, 512) 1180160 leaky_re_lu_3[0][0]

__________________________________________________________________________________________________

batch_normalization_3 (BatchNor (None, 16, 16, 512) 2048 unet_conv2D_4[0][0]

__________________________________________________________________________________________________

leaky_re_lu_4 (LeakyReLU) (None, 16, 16, 512) 0 batch_normalization_3[0][0]

__________________________________________________________________________________________________

unet_conv2D_5 (Conv2D) (None, 8, 8, 512) 2359808 leaky_re_lu_4[0][0]

__________________________________________________________________________________________________

batch_normalization_4 (BatchNor (None, 8, 8, 512) 2048 unet_conv2D_5[0][0]

__________________________________________________________________________________________________

leaky_re_lu_5 (LeakyReLU) (None, 8, 8, 512) 0 batch_normalization_4[0][0]

__________________________________________________________________________________________________

unet_conv2D_6 (Conv2D) (None, 4, 4, 512) 2359808 leaky_re_lu_5[0][0]

__________________________________________________________________________________________________

batch_normalization_5 (BatchNor (None, 4, 4, 512) 2048 unet_conv2D_6[0][0]

__________________________________________________________________________________________________

leaky_re_lu_6 (LeakyReLU) (None, 4, 4, 512) 0 batch_normalization_5[0][0]

__________________________________________________________________________________________________

unet_conv2D_7 (Conv2D) (None, 2, 2, 512) 2359808 leaky_re_lu_6[0][0]

__________________________________________________________________________________________________

batch_normalization_6 (BatchNor (None, 2, 2, 512) 2048 unet_conv2D_7[0][0]

__________________________________________________________________________________________________

leaky_re_lu_7 (LeakyReLU) (None, 2, 2, 512) 0 batch_normalization_6[0][0]

__________________________________________________________________________________________________

unet_conv2D_8 (Conv2D) (None, 1, 1, 512) 2359808 leaky_re_lu_7[0][0]

__________________________________________________________________________________________________

batch_normalization_7 (BatchNor (None, 1, 1, 512) 2048 unet_conv2D_8[0][0]

__________________________________________________________________________________________________

activation_1 (Activation) (None, 1, 1, 512) 0 batch_normalization_7[0][0]

__________________________________________________________________________________________________

up_sampling2d_1 (UpSampling2D) (None, 2, 2, 512) 0 activation_1[0][0]

__________________________________________________________________________________________________

unet_upconv2D_1 (Conv2D) (None, 2, 2, 512) 2359808 up_sampling2d_1[0][0]

__________________________________________________________________________________________________

batch_normalization_8 (BatchNor (None, 2, 2, 512) 2048 unet_upconv2D_1[0][0]

__________________________________________________________________________________________________

dropout_1 (Dropout) (None, 2, 2, 512) 0 batch_normalization_8[0][0]

__________________________________________________________________________________________________

concatenate_1 (Concatenate) (None, 2, 2, 1024) 0 dropout_1[0][0]

batch_normalization_6[0][0]

__________________________________________________________________________________________________

activation_2 (Activation) (None, 2, 2, 1024) 0 concatenate_1[0][0]

__________________________________________________________________________________________________

up_sampling2d_2 (UpSampling2D) (None, 4, 4, 1024) 0 activation_2[0][0]

__________________________________________________________________________________________________

unet_upconv2D_2 (Conv2D) (None, 4, 4, 512) 4719104 up_sampling2d_2[0][0]

__________________________________________________________________________________________________

batch_normalization_9 (BatchNor (None, 4, 4, 512) 2048 unet_upconv2D_2[0][0]

__________________________________________________________________________________________________

dropout_2 (Dropout) (None, 4, 4, 512) 0 batch_normalization_9[0][0]

__________________________________________________________________________________________________

concatenate_2 (Concatenate) (None, 4, 4, 1024) 0 dropout_2[0][0]

batch_normalization_5[0][0]

__________________________________________________________________________________________________

activation_3 (Activation) (None, 4, 4, 1024) 0 concatenate_2[0][0]

__________________________________________________________________________________________________

up_sampling2d_3 (UpSampling2D) (None, 8, 8, 1024) 0 activation_3[0][0]

__________________________________________________________________________________________________

unet_upconv2D_3 (Conv2D) (None, 8, 8, 512) 4719104 up_sampling2d_3[0][0]

__________________________________________________________________________________________________

batch_normalization_10 (BatchNo (None, 8, 8, 512) 2048 unet_upconv2D_3[0][0]

__________________________________________________________________________________________________

dropout_3 (Dropout) (None, 8, 8, 512) 0 batch_normalization_10[0][0]

__________________________________________________________________________________________________

concatenate_3 (Concatenate) (None, 8, 8, 1024) 0 dropout_3[0][0]

batch_normalization_4[0][0]

__________________________________________________________________________________________________

activation_4 (Activation) (None, 8, 8, 1024) 0 concatenate_3[0][0]

__________________________________________________________________________________________________

up_sampling2d_4 (UpSampling2D) (None, 16, 16, 1024) 0 activation_4[0][0]

__________________________________________________________________________________________________

unet_upconv2D_4 (Conv2D) (None, 16, 16, 256) 2359552 up_sampling2d_4[0][0]

__________________________________________________________________________________________________

batch_normalization_11 (BatchNo (None, 16, 16, 256) 1024 unet_upconv2D_4[0][0]

__________________________________________________________________________________________________

concatenate_4 (Concatenate) (None, 16, 16, 768) 0 batch_normalization_11[0][0]

batch_normalization_3[0][0]

__________________________________________________________________________________________________

activation_5 (Activation) (None, 16, 16, 768) 0 concatenate_4[0][0]

__________________________________________________________________________________________________

up_sampling2d_5 (UpSampling2D) (None, 32, 32, 768) 0 activation_5[0][0]

__________________________________________________________________________________________________

unet_upconv2D_5 (Conv2D) (None, 32, 32, 128) 884864 up_sampling2d_5[0][0]

__________________________________________________________________________________________________

batch_normalization_12 (BatchNo (None, 32, 32, 128) 512 unet_upconv2D_5[0][0]

__________________________________________________________________________________________________

concatenate_5 (Concatenate) (None, 32, 32, 384) 0 batch_normalization_12[0][0]

batch_normalization_2[0][0]

__________________________________________________________________________________________________

activation_6 (Activation) (None, 32, 32, 384) 0 concatenate_5[0][0]

__________________________________________________________________________________________________

up_sampling2d_6 (UpSampling2D) (None, 64, 64, 384) 0 activation_6[0][0]

__________________________________________________________________________________________________

unet_upconv2D_6 (Conv2D) (None, 64, 64, 64) 221248 up_sampling2d_6[0][0]

__________________________________________________________________________________________________

batch_normalization_13 (BatchNo (None, 64, 64, 64) 256 unet_upconv2D_6[0][0]

__________________________________________________________________________________________________

concatenate_6 (Concatenate) (None, 64, 64, 192) 0 batch_normalization_13[0][0]

batch_normalization_1[0][0]

__________________________________________________________________________________________________

activation_7 (Activation) (None, 64, 64, 192) 0 concatenate_6[0][0]

__________________________________________________________________________________________________

up_sampling2d_7 (UpSampling2D) (None, 128, 128, 192 0 activation_7[0][0]

__________________________________________________________________________________________________

unet_upconv2D_7 (Conv2D) (None, 128, 128, 64) 110656 up_sampling2d_7[0][0]

__________________________________________________________________________________________________

batch_normalization_14 (BatchNo (None, 128, 128, 64) 256 unet_upconv2D_7[0][0]

__________________________________________________________________________________________________

concatenate_7 (Concatenate) (None, 128, 128, 128 0 batch_normalization_14[0][0]

unet_conv2D_1[0][0]

__________________________________________________________________________________________________

activation_8 (Activation) (None, 128, 128, 128 0 concatenate_7[0][0]

__________________________________________________________________________________________________

up_sampling2d_8 (UpSampling2D) (None, 256, 256, 128 0 activation_8[0][0]

__________________________________________________________________________________________________

last_conv (Conv2D) (None, 256, 256, 3) 3459 up_sampling2d_8[0][0]

__________________________________________________________________________________________________

activation_9 (Activation) (None, 256, 256, 3) 0 last_conv[0][0]

==================================================================================================

Total params: 26,387,971

Trainable params: 26,377,987

Non-trainable params: 9,984

__________________________________________________________________________________________________

PatchGAN summary

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

discriminator_input (InputLa (None, 64, 64, 3) 0

_________________________________________________________________

disc_conv2d_1 (Conv2D) (None, 32, 32, 64) 1792

_________________________________________________________________

batch_normalization_15 (Batc (None, 32, 32, 64) 256

_________________________________________________________________

leaky_re_lu_8 (LeakyReLU) (None, 32, 32, 64) 0

_________________________________________________________________

disc_conv2d_2 (Conv2D) (None, 16, 16, 128) 73856

_________________________________________________________________

batch_normalization_16 (Batc (None, 16, 16, 128) 512

_________________________________________________________________

leaky_re_lu_9 (LeakyReLU) (None, 16, 16, 128) 0

_________________________________________________________________

disc_conv2d_3 (Conv2D) (None, 8, 8, 256) 295168

_________________________________________________________________

batch_normalization_17 (Batc (None, 8, 8, 256) 1024

_________________________________________________________________

leaky_re_lu_10 (LeakyReLU) (None, 8, 8, 256) 0

_________________________________________________________________

disc_conv2d_4 (Conv2D) (None, 4, 4, 512) 1180160

_________________________________________________________________

batch_normalization_18 (Batc (None, 4, 4, 512) 2048

_________________________________________________________________

leaky_re_lu_11 (LeakyReLU) (None, 4, 4, 512) 0

_________________________________________________________________

disc_conv2d_5 (Conv2D) (None, 2, 2, 512) 2359808

_________________________________________________________________

batch_normalization_19 (Batc (None, 2, 2, 512) 2048

_________________________________________________________________

leaky_re_lu_12 (LeakyReLU) (None, 2, 2, 512) 0

_________________________________________________________________

disc_conv2d_6 (Conv2D) (None, 1, 1, 512) 2359808

_________________________________________________________________

batch_normalization_20 (Batc (None, 1, 1, 512) 2048

_________________________________________________________________

leaky_re_lu_13 (LeakyReLU) (None, 1, 1, 512) 0

_________________________________________________________________

flatten_1 (Flatten) (None, 512) 0

_________________________________________________________________

disc_dense (Dense) (None, 2) 1026

=================================================================

Total params: 6,279,554

Trainable params: 6,275,586

Non-trainable params: 3,968

_________________________________________________________________

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

disc_input_0 (InputLayer) (None, 64, 64, 3) 0

__________________________________________________________________________________________________

disc_input_1 (InputLayer) (None, 64, 64, 3) 0

__________________________________________________________________________________________________

disc_input_2 (InputLayer) (None, 64, 64, 3) 0

__________________________________________________________________________________________________

disc_input_3 (InputLayer) (None, 64, 64, 3) 0

__________________________________________________________________________________________________

disc_input_4 (InputLayer) (None, 64, 64, 3) 0

__________________________________________________________________________________________________

disc_input_5 (InputLayer) (None, 64, 64, 3) 0

__________________________________________________________________________________________________

disc_input_6 (InputLayer) (None, 64, 64, 3) 0

__________________________________________________________________________________________________

disc_input_7 (InputLayer) (None, 64, 64, 3) 0

__________________________________________________________________________________________________

disc_input_8 (InputLayer) (None, 64, 64, 3) 0

__________________________________________________________________________________________________

disc_input_9 (InputLayer) (None, 64, 64, 3) 0

__________________________________________________________________________________________________

disc_input_10 (InputLayer) (None, 64, 64, 3) 0

__________________________________________________________________________________________________

disc_input_11 (InputLayer) (None, 64, 64, 3) 0

__________________________________________________________________________________________________

disc_input_12 (InputLayer) (None, 64, 64, 3) 0

__________________________________________________________________________________________________

disc_input_13 (InputLayer) (None, 64, 64, 3) 0

__________________________________________________________________________________________________

disc_input_14 (InputLayer) (None, 64, 64, 3) 0

__________________________________________________________________________________________________

disc_input_15 (InputLayer) (None, 64, 64, 3) 0

__________________________________________________________________________________________________

PatchGAN (Model) [(None, 2), (None, 5 6279554 disc_input_0[0][0]

disc_input_1[0][0]

disc_input_2[0][0]

disc_input_3[0][0]

disc_input_4[0][0]

disc_input_5[0][0]

disc_input_6[0][0]

disc_input_7[0][0]

disc_input_8[0][0]

disc_input_9[0][0]

disc_input_10[0][0]

disc_input_11[0][0]

disc_input_12[0][0]

disc_input_13[0][0]

disc_input_14[0][0]

disc_input_15[0][0]

__________________________________________________________________________________________________

concatenate_8 (Concatenate) (None, 32) 0 PatchGAN[17][0]

PatchGAN[18][0]

PatchGAN[19][0]

PatchGAN[20][0]

PatchGAN[21][0]

PatchGAN[22][0]

PatchGAN[23][0]

PatchGAN[24][0]

PatchGAN[25][0]

PatchGAN[26][0]

PatchGAN[27][0]

PatchGAN[28][0]

PatchGAN[29][0]

PatchGAN[30][0]

PatchGAN[31][0]

PatchGAN[32][0]

__________________________________________________________________________________________________

disc_output (Dense) (None, 2) 66 concatenate_8[0][0]

==================================================================================================

Total params: 6,279,620

Trainable params: 6,275,652

Non-trainable params: 3,968

__________________________________________________________________________________________________
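Some of the numbers in the summaries above can be checked by hand. The sketch below assumes 3x3 convolution kernels (inferred only because the counts then match the summary) and assumes the 256x256 image is cut into non-overlapping 64x64 patches, which would explain the 16 `disc_input_*` layers. The function names are made up for this example.

```python
def conv2d_params(kernel, in_channels, out_channels):
    """Weights plus one bias per output channel for a square-kernel Conv2D."""
    return kernel * kernel * in_channels * out_channels + out_channels


def dense_params(in_features, out_features):
    """Weights plus one bias per output unit for a Dense layer."""
    return in_features * out_features + out_features


def patch_count(img_size, patch_size):
    """Number of non-overlapping square patches in a square image."""
    return (img_size // patch_size) ** 2


print(conv2d_params(3, 3, 64))      # summary reports 1792 for unet_conv2D_1
print(conv2d_params(3, 64, 128))    # summary reports 73856 for unet_conv2D_2
print(conv2d_params(3, 128, 3))     # summary reports 3459 for last_conv
print(dense_params(512, 2))         # summary reports 1026 for disc_dense
print(dense_params(32, 2))          # summary reports 66 for disc_output
print(patch_count(256, 64))         # 16 inputs, disc_input_0 to disc_input_15
```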

Now leave it running for a while.

Start training

396/400 [============================>.] - ETA: 1s - D logloss: 0.7930 - G tot: 12.9405 - G L1: 1.2084 - G logloss: 0.8563

Epoch 1/10, Time: 113.86415982246399

396/400 [============================>.] - ETA: 0s - D logloss: 0.7527 - G tot: 12.0374 - G L1: 1.1294 - G logloss: 0.7433

Epoch 2/10, Time: 69.81179237365723

396/400 [============================>.] - ETA: 0s - D logloss: 0.7399 - G tot: 11.9709 - G L1: 1.1166 - G logloss: 0.8051

Epoch 3/10, Time: 69.62254095077515

96/400 [======>.......................] - ETA: 52s - D logloss: 0.7626 - G tot: 11.4249 - G L1: 1.0630 - G logloss: 0.7946

396/400 [============================>.] - ETA: 0s - D logloss: 0.7396 - G tot: 11.4491 - G L1: 1.0694 - G logloss: 0.7550

Epoch 4/10, Time: 69.69684600830078

396/400 [============================>.] - ETA: 0s - D logloss: 0.7545 - G tot: 11.1620 - G L1: 1.0439 - G logloss: 0.7231

Epoch 5/10, Time: 69.6496753692627

396/400 [============================>.] - ETA: 0s - D logloss: 0.7141 - G tot: 11.2265 - G L1: 1.0580 - G logloss: 0.6469

Epoch 6/10, Time: 69.64432549476624

112/400 [=======>......................] - ETA: 50s - D logloss: 0.7090 - G tot: 11.6766 - G L1: 1.0990 - G logloss: 0.6865

396/400 [============================>.] - ETA: 0s - D logloss: 0.6971 - G tot: 11.2397 - G L1: 1.0525 - G logloss: 0.7151

Epoch 7/10, Time: 69.55629849433899

396/400 [============================>.] - ETA: 0s - D logloss: 0.6883 - G tot: 11.2827 - G L1: 1.0555 - G logloss: 0.7281

Epoch 8/10, Time: 69.65778112411499

396/400 [============================>.] - ETA: 0s - D logloss: 0.6923 - G tot: 11.1760 - G L1: 1.0490 - G logloss: 0.6862

Epoch 9/10, Time: 69.43872261047363

116/400 [=======>......................] - ETA: 49s - D logloss: 0.6928 - G tot: 11.0454 - G L1: 1.0365 - G logloss: 0.6802

396/400 [============================>.] - ETA: 0s - D logloss: 0.6940 - G tot: 11.0641 - G L1: 1.0375 - G logloss: 0.6891

Epoch 10/10, Time: 69.7152578830719

(Some epochs appear to stop partway through. I can't really tell whether this is just a display issue in Colaboratory.)
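The per-epoch times in the log give a rough idea of what a longer run would cost. The sketch below parses the "Epoch N/10, Time: ..." lines copied from the log and extrapolates to the default 400 epochs, assuming the time per epoch stays flat after the slower first epoch (an assumption, not something measured here).

```python
import re

# Epoch lines copied from the training log above.
LOG = """\
Epoch 1/10, Time: 113.86415982246399
Epoch 2/10, Time: 69.81179237365723
Epoch 3/10, Time: 69.62254095077515
Epoch 4/10, Time: 69.69684600830078
Epoch 5/10, Time: 69.6496753692627
Epoch 6/10, Time: 69.64432549476624
Epoch 7/10, Time: 69.55629849433899
Epoch 8/10, Time: 69.65778112411499
Epoch 9/10, Time: 69.43872261047363
Epoch 10/10, Time: 69.7152578830719
"""

EPOCH_RE = re.compile(r"Epoch (\d+)/(\d+), Time: ([0-9.]+)")


def epoch_times(log_text):
    """Extract the per-epoch durations in seconds from the log text."""
    return [float(match.group(3)) for match in EPOCH_RE.finditer(log_text)]


times = epoch_times(LOG)
total = sum(times)
# The first epoch includes warm-up, so average only the later ones.
steady = sum(times[1:]) / len(times[1:])
estimate_400 = times[0] + 399 * steady

print("total seconds for 10 epochs:", round(total, 1))
print("mean seconds per epoch after the first:", round(steady, 1))
print("estimated hours for 400 epochs:", round(estimate_400 / 3600, 1))
```

The 10 epochs took roughly 12 minutes in total, so the default 400 epochs would be on the order of seven to eight hours at this pace.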

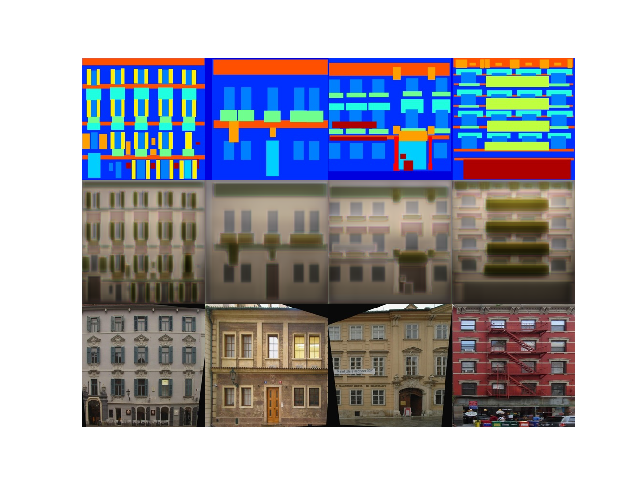

Images such as the inference results for the current epoch are written under pix2pix/figures/, so let's take a quick look.

ls ../../figures/

current_batch_training.png DCGAN_discriminator.png img_pix2pix.png

current_batch_validation.png generator_unet_upsampling.png

from PIL import Image

img = Image.open('../../figures/current_batch_validation.png')

img

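The top row is the input image, the middle row is the inference result, and the bottom row is the actual building image (ground truth).

With only 10 epochs the results still look strongly synthetic, but for now it seems the pix2pix GAN model runs fine on Colaboratory as well!

To generate images that people cannot tell apart from real ones, Turing-test style, my past experience is that training for more than 1000 epochs made the results stabilize. A serious setup also needs quite a few other things thought through.

For example, Colaboratory has a time limit, so you would split the epochs into chunks and load the trained weights in the next session to accumulate epochs, set things up so that files on Colaboratory are not lost, and so on.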
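The chunking idea can be sketched as follows. This is only an outline under assumptions: whether main.py can resume from saved weights is not checked here, the `.h5` suffix for weight files is assumed, and the backup destination (for example a mounted Google Drive folder) is hypothetical. The function names are made up for this example.

```python
import os
import shutil


def plan_sessions(total_epochs, epochs_per_session):
    """Split a long run into (start, end) epoch ranges, with end exclusive."""
    return [
        (start, min(start + epochs_per_session, total_epochs))
        for start in range(0, total_epochs, epochs_per_session)
    ]


def backup_files(src_dir, dst_dir, suffixes=(".h5",)):
    """Copy files with the given suffixes to a location that outlives the session."""
    os.makedirs(dst_dir, exist_ok=True)
    copied = []
    for name in sorted(os.listdir(src_dir)):
        if name.endswith(suffixes):
            shutil.copy2(os.path.join(src_dir, name), os.path.join(dst_dir, name))
            copied.append(name)
    return copied


# Example: 400 epochs split into sessions of 100 epochs each.
print(plan_sessions(400, 100))

# Hypothetical usage after each session (both paths are assumptions):
# backup_files("../../models", "/content/drive/My Drive/pix2pix_backup")
```

In Keras, saved weights are restored with `model.load_weights(path)` before continuing training, so each session would start by loading the most recent backup.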

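That said, if you annotate thoroughly and carefully and narrow the domain (building the model on a dataset restricted to the genre you want to infer), a dataset of only a few thousand images can already produce quite good results. So there is plenty that looks fun to try: a line-art colorization model, a model that generates illustrations from photos, clean image upscaling, a model that infers color variations, colorizing monochrome images, or playing around with Adobe Sensei-like features.

(The importance of annotation is well summarized in the article "より良い機械学習のためのアノテーションの機械学習", so please refer to it.)

This experiment showed that pix2pix can run on Colaboratory.

Above all, Colaboratory is free, and I did not get badly stuck building an environment around the dependencies between Ubuntu, the GPU, Python, TensorFlow and so on. That is what you would expect from a Google service: the barrier to running TensorFlow, Keras and the like is very low, which is a big help.

Sometime soon, once I have collected a reasonable dataset and finished the annotation work, I plan to actually play with pix2pix.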