Running Elasticsearch and Kibana in Docker containers

Install Docker (and Docker Compose) first; the installation pages are listed under References at the end.

Create the file that starts Elasticsearch and Kibana (docker-compose.yaml):

```shell
mkdir ~/test
cd ~/test
vim docker-compose.yaml
```

```yaml
version: "3"
services:
  elasticsearch:
    image: docker.elastic.co/elasticsearch/elasticsearch:7.6.2
    environment:
      - discovery.type=single-node
      - cluster.name=docker-cluster
      - bootstrap.memory_lock=true
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
    ulimits:
      memlock:
        soft: -1
        hard: -1
    ports:
      - 9200:9200
    volumes:
      - es-data:/usr/share/elasticsearch/data
  kibana:
    image: docker.elastic.co/kibana/kibana:7.6.2
    ports:
      - 5601:5601
volumes:
  es-data:
```

Start the containers:

```shell
docker-compose up -d
```

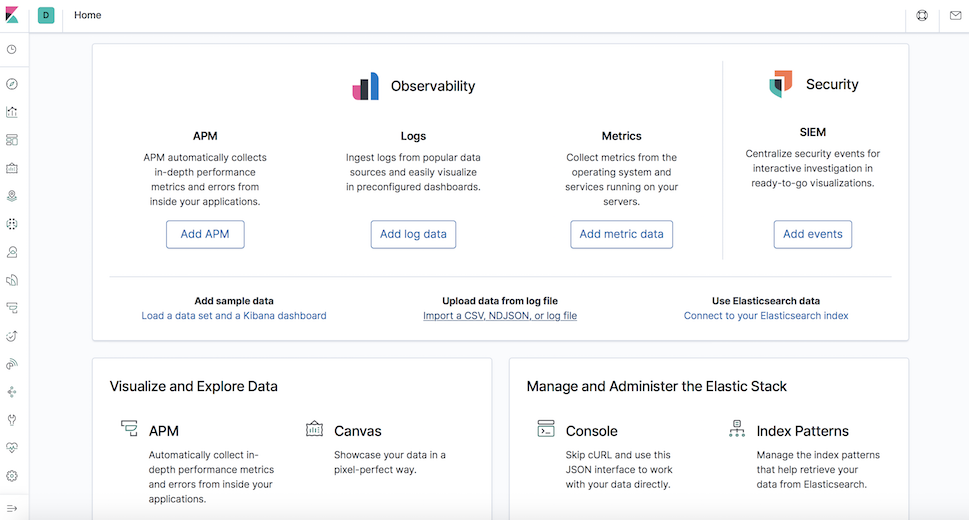

Open http://localhost:5601 in a browser. If the Kibana home page appears, the setup is working.
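Instead of checking in the browser, you can poll both services from a script. The following is a minimal Python sketch using only the standard library. It assumes the ports published in docker-compose.yaml and the Kibana 7.x `/api/status` response shape, and the helper names (`es_ready`, `kibana_ready`, `wait_until`) are made up for illustration.

```python
import http.client
import json
import time
import urllib.request


def es_ready(body: str) -> bool:
    """True if a GET /_cluster/health response reports "green" or "yellow"."""
    # On a single node, indices that request replicas keep the cluster "yellow".
    return json.loads(body).get("status") in ("green", "yellow")


def kibana_ready(body: str) -> bool:
    """True if a GET /api/status response reports an overall "green" state.

    Assumes the Kibana 7.x shape {"status": {"overall": {"state": ...}}}.
    """
    status = json.loads(body).get("status", {})
    return status.get("overall", {}).get("state") == "green"


def wait_until(url: str, check, timeout_s: float = 120.0, interval_s: float = 5.0) -> bool:
    """Poll url until check(response body) returns True, or give up after timeout_s."""
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        try:
            with urllib.request.urlopen(url, timeout=5) as resp:
                if check(resp.read().decode("utf-8")):
                    return True
        except (OSError, http.client.HTTPException, ValueError):
            pass  # not up yet (connection refused, HTTP 503, partial JSON); retry
        time.sleep(interval_s)
    return False
```

For example, `wait_until("http://localhost:9200/_cluster/health", es_ready)` should return `True` once Elasticsearch answers, and `wait_until("http://localhost:5601/api/status", kibana_ready)` does the same for Kibana. Accepting "yellow" for Elasticsearch is a deliberate choice for a single-node setup.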

Trying it out

Following the articles below, I tried Kibana out.

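Elasticsearch 6.1から追加されたMachine Learningの新機能を使ってみた。予測機能が面白いっ! (Trying the new Machine Learning features added in Elasticsearch 6.1: the forecasting feature is fun!)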

ニューヨーク市のタクシー乗降データでMachine Learningを体験する (Trying Machine Learning with New York City taxi trip data)

Download the data

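```shell
# Trip records for November 2016
wget https://s3.amazonaws.com/nyc-tlc/trip+data/yellow_tripdata_2016-11.csv

# Taxi zone lookup: keep LocationID and Zone (columns 1 and 3) and drop the header line
curl https://s3.amazonaws.com/nyc-tlc/misc/taxi+_zone_lookup.csv | cut -d, -f 1,3 | tail -n +2 > taxi.csv
```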
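taxi.csv ends up as a two-column `LocationID,Zone` list, which the `translate` filters in the Logstash config read through `dictionary_path`. The Python sketch below performs the same transformation as the `cut | tail` pipeline, to make its effect concrete. The function name is hypothetical. Like `cut`, it splits on every comma and keeps any quotes around the zone name unchanged.

```python
def zone_lookup_to_dictionary(lines):
    """Mimic `cut -d, -f 1,3 | tail -n +2` on taxi+_zone_lookup.csv lines.

    Keeps the 1st (LocationID) and 3rd (Zone) comma-separated fields and skips
    the header. Like cut, it does not parse CSV quoting.
    """
    out = []
    for i, line in enumerate(lines):
        if i == 0:
            continue  # tail -n +2: output starts at the second line
        fields = line.rstrip("\r\n").split(",")
        # fields 1 and 3 (1-based); a line without commas passes through whole, as with cut
        out.append(",".join(fields[0:1] + fields[2:3]))
    return out
```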

Install Logstash (via Homebrew) to convert the CSV into JSON documents that Elasticsearch can ingest:

```shell
brew install logstash
```

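Prepare the Logstash config and save it as nyc-taxi-yellow-translate-logstash.conf (the file name used in the run step):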

```
input {
  # Read the CSV rows piped in on standard input
  stdin { type => "tripdata" }
}

filter {
  # Split each row into named fields and convert the numeric ones
  csv {
    columns => ["VendorID","tpep_pickup_datetime","tpep_dropoff_datetime","passenger_count","trip_distance","RatecodeID","store_and_fwd_flag","PULocationID","DOLocationID","payment_type","fare_amount","extra","mta_tax","tip_amount","tolls_amount","improvement_surcharge","total_amount"]
    convert => {
      "extra" => "float"
      "fare_amount" => "float"
      "improvement_surcharge" => "float"
      "mta_tax" => "float"
      "tip_amount" => "float"
      "tolls_amount" => "float"
      "total_amount" => "float"
      "trip_distance" => "float"
      "passenger_count" => "integer"
    }
  }

  # Use the pickup time as the event's @timestamp.
  # The data is New York local time. "America/New_York" also applies daylight
  # saving time (in effect until 2016-11-06), which the fixed "EST" offset does not.
  date {
    match => ["tpep_pickup_datetime", "yyyy-MM-dd HH:mm:ss", "ISO8601"]
    timezone => "America/New_York"
  }

  # Keep the pickup and dropoff times as date fields of their own
  date {
    match => ["tpep_pickup_datetime", "yyyy-MM-dd HH:mm:ss", "ISO8601"]
    target => "@tpep_pickup_datetime"
    remove_field => ["tpep_pickup_datetime"]
    timezone => "America/New_York"
  }

  date {
    match => ["tpep_dropoff_datetime", "yyyy-MM-dd HH:mm:ss", "ISO8601"]
    target => "@tpep_dropoff_datetime"
    remove_field => ["tpep_dropoff_datetime"]
    timezone => "America/New_York"
  }

  # Turn numeric codes into readable labels in new *_t fields
  translate {
    field => "RatecodeID"
    # Writing back to "RatecodeID" itself would be skipped (the field already
    # exists) and then dropped by the mutate below, so use a separate field
    destination => "RatecodeID_t"
    dictionary => {
      "1" => "Standard rate"
      "2" => "JFK"
      "3" => "Newark"
      "4" => "Nassau or Westchester"
      "5" => "Negotiated fare"
      "6" => "Group ride"
    }
  }

  translate {
    field => "VendorID"
    destination => "VendorID_t"
    dictionary => {
      "1" => "Creative Mobile Technologies"
      "2" => "VeriFone Inc"
    }
  }

  translate {
    field => "payment_type"
    destination => "payment_type_t"
    dictionary => {
      "1" => "Credit card"
      "2" => "Cash"
      "3" => "No charge"
      "4" => "Dispute"
      "5" => "Unknown"
      "6" => "Voided trip"
    }
  }

  # Zone names come from taxi.csv (LocationID,Zone) created in the download step
  translate {
    field => "PULocationID"
    destination => "PULocationID_t"
    dictionary_path => "taxi.csv"
  }

  translate {
    field => "DOLocationID"
    destination => "DOLocationID_t"
    dictionary_path => "taxi.csv"
  }

  # Drop the raw line, the empty trailing columns and the code fields replaced above
  mutate {
    remove_field => ["message", "column18", "column19", "RatecodeID", "VendorID", "payment_type", "PULocationID", "DOLocationID"]
  }
}

output {
  # Write one JSON document per line
  file {
    path => "./output.log"
    codec => json_lines
  }
}
```
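The Python function below approximates what the filter section does to a single record, for illustration only. It is not how Logstash works internally. It skips the `date` filters, so the timestamps stay plain strings. It does not add the metadata fields Logstash attaches itself (such as `@timestamp` and `type`). It also assumes the numeric columns are never empty. The function name and sample values are hypothetical. Each translated code, including the rate code, is written to a separate `*_t` field so that the field removal at the end does not discard it.

```python
import csv
import io
import json

# Column names from the csv filter's `columns` setting
COLUMNS = ["VendorID", "tpep_pickup_datetime", "tpep_dropoff_datetime",
           "passenger_count", "trip_distance", "RatecodeID", "store_and_fwd_flag",
           "PULocationID", "DOLocationID", "payment_type", "fare_amount", "extra",
           "mta_tax", "tip_amount", "tolls_amount", "improvement_surcharge",
           "total_amount"]
FLOATS = ["extra", "fare_amount", "improvement_surcharge", "mta_tax",
          "tip_amount", "tolls_amount", "total_amount", "trip_distance"]
RATECODE = {"1": "Standard rate", "2": "JFK", "3": "Newark",
            "4": "Nassau or Westchester", "5": "Negotiated fare", "6": "Group ride"}
VENDOR = {"1": "Creative Mobile Technologies", "2": "VeriFone Inc"}
PAYMENT = {"1": "Credit card", "2": "Cash", "3": "No charge",
           "4": "Dispute", "5": "Unknown", "6": "Voided trip"}
# Fields removed by the mutate filter
DROP = {"message", "column18", "column19", "RatecodeID", "VendorID",
        "payment_type", "PULocationID", "DOLocationID"}


def transform(line: str, zones: dict) -> str:
    """Turn one trip CSV row into a JSON line, roughly like the filter section."""
    values = next(csv.reader(io.StringIO(line)))
    # csv: name the columns; extra unnamed ones become column18, column19, ...
    event = {(COLUMNS[i] if i < len(COLUMNS) else f"column{i + 1}"): v
             for i, v in enumerate(values)}
    # csv convert: numeric strings to float / integer
    for name in FLOATS:
        event[name] = float(event[name])
    event["passenger_count"] = int(event["passenger_count"])
    # translate: readable label in a separate *_t field when the code is known
    for src, dst, table in (("RatecodeID", "RatecodeID_t", RATECODE),
                            ("VendorID", "VendorID_t", VENDOR),
                            ("payment_type", "payment_type_t", PAYMENT),
                            ("PULocationID", "PULocationID_t", zones),
                            ("DOLocationID", "DOLocationID_t", zones)):
        if event.get(src) in table:
            event[dst] = table[event[src]]
    # mutate remove_field
    for name in DROP:
        event.pop(name, None)
    return json.dumps(event)
```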

Run it:

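```shell
# Skip the CSV header line and pipe the trip rows into Logstash
tail -n +2 yellow_tripdata_2016-11.csv | logstash -f nyc-taxi-yellow-translate-logstash.conf

# Keep only the first 100,000 events (a smaller file to upload to Kibana)
head -n 100000 output.log > output2.log
```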
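`head` copies lines without looking at them. To also confirm that every line Logstash wrote is valid JSON before uploading, a small Python equivalent could look like the sketch below. The function names are made up for illustration.

```python
import itertools
import json


def first_json_lines(lines, n=100_000):
    """Return the first n lines (like `head -n`), raising ValueError on a non-JSON line."""
    taken = []
    for line in itertools.islice(lines, n):
        json.loads(line)  # json_lines output should hold one JSON document per line
        taken.append(line)
    return taken


def copy_first_json_lines(src_path="output.log", dst_path="output2.log", n=100_000):
    """File version: copy the first n validated lines and return how many were written."""
    with open(src_path, encoding="utf-8") as src:
        taken = first_json_lines(src, n)
    with open(dst_path, "w", encoding="utf-8") as dst:
        dst.writelines(taken)
    return len(taken)
```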

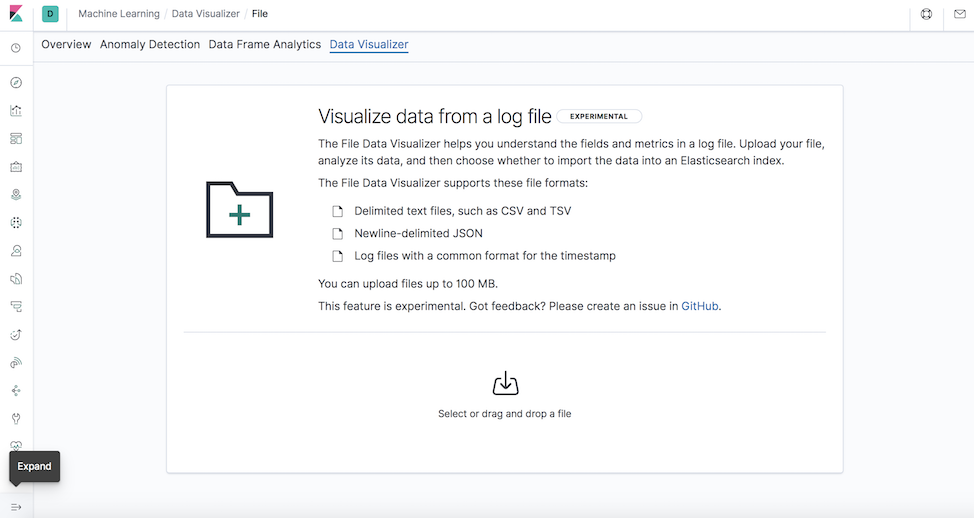

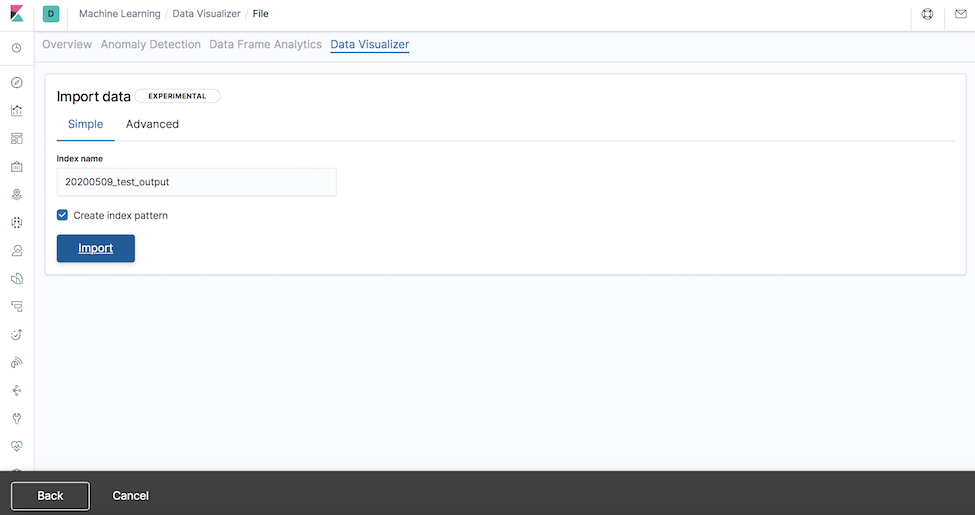

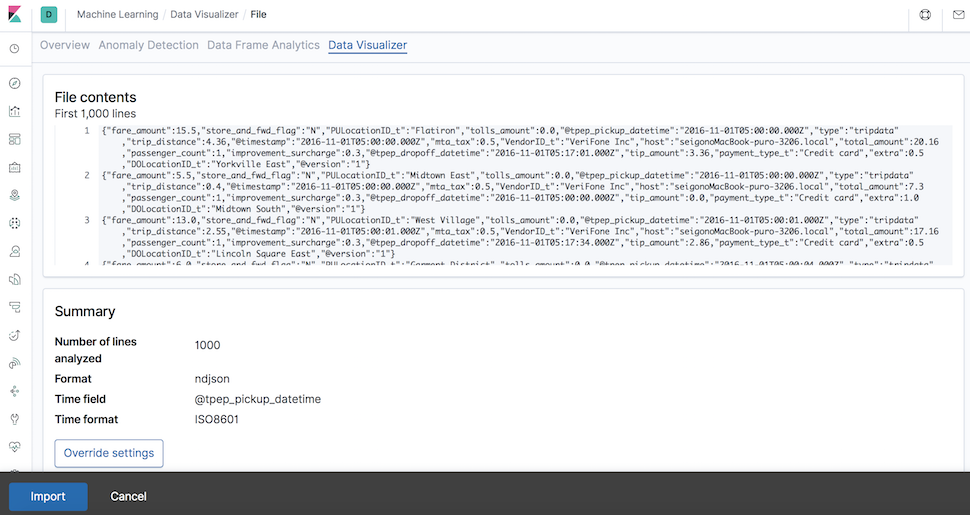

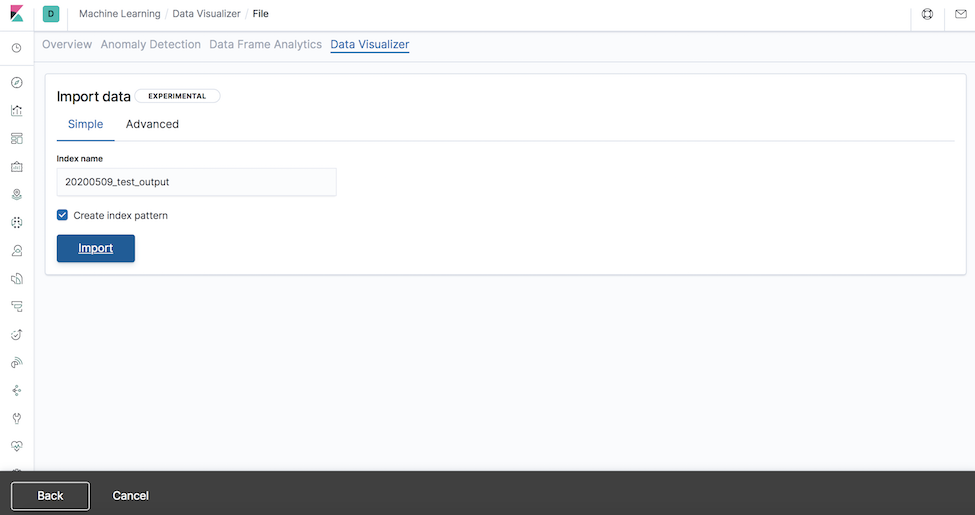

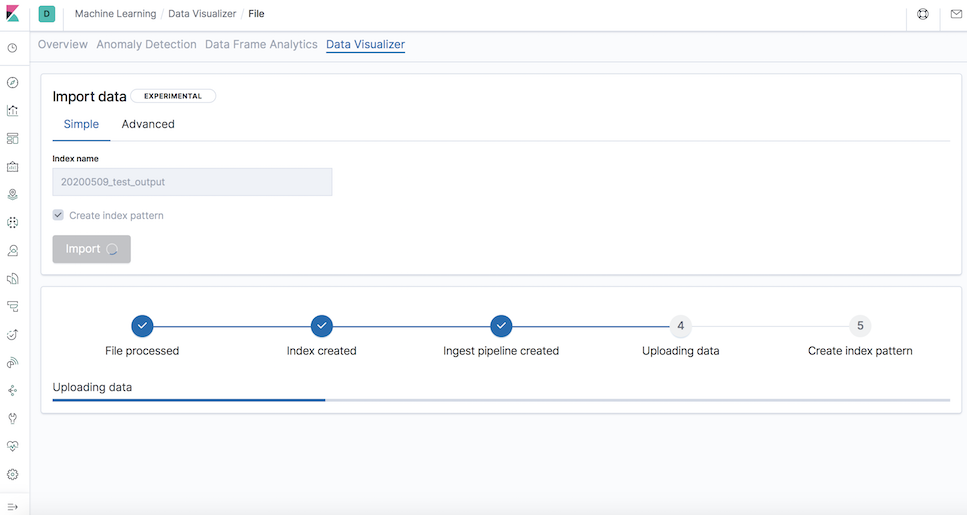

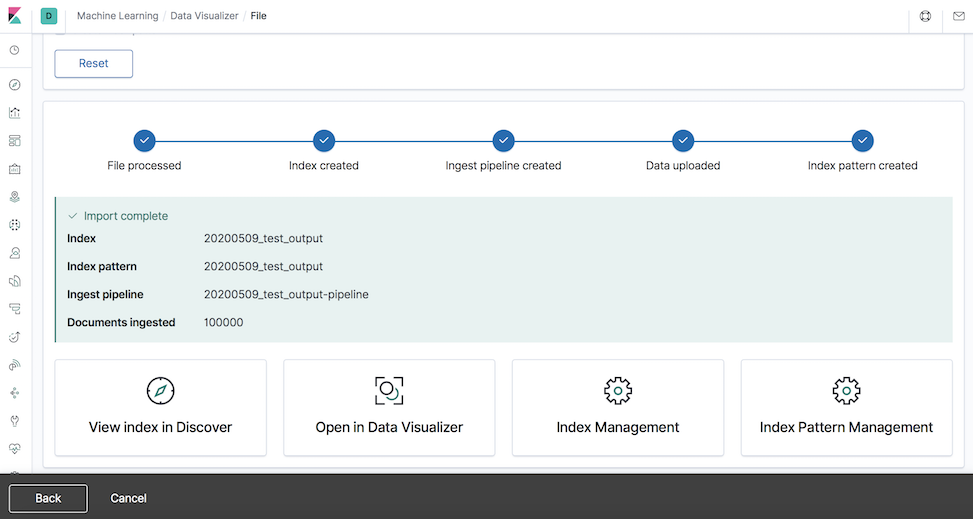

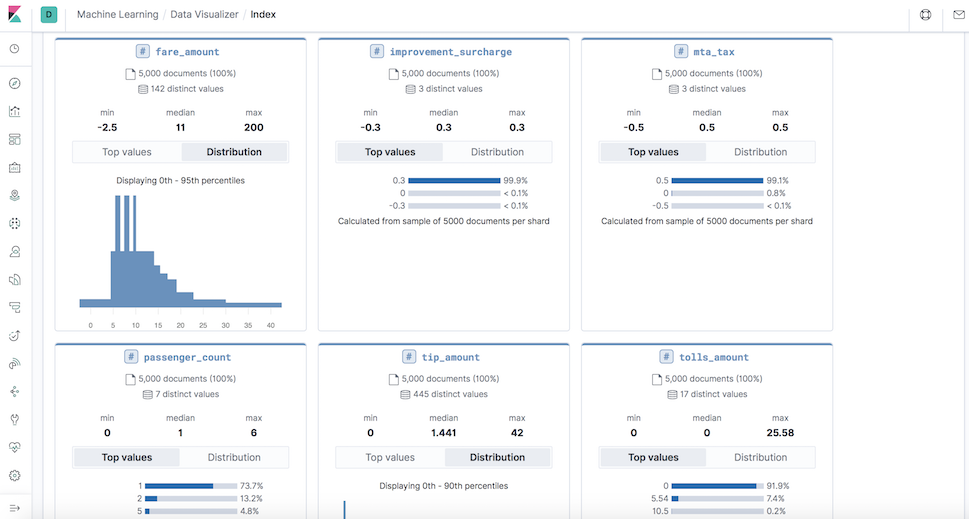

Upload output2.log to Kibana.
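Here the upload goes through Kibana's UI. To index the file from a script instead, the Elasticsearch `_bulk` API accepts newline-delimited JSON in which each document is preceded by an action line. The Python sketch below builds such a body and prepares the request without sending it. The index name `nyc-taxi-yellow` and the helper names are hypothetical.

```python
import json
import urllib.request

INDEX = "nyc-taxi-yellow"  # hypothetical index name; choose your own


def bulk_body(lines, index=INDEX):
    """Build an NDJSON _bulk body: an "index" action line before each document."""
    parts = []
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        parts.append(json.dumps({"index": {"_index": index}}))
        parts.append(line)
    return "\n".join(parts) + "\n"  # _bulk requires a trailing newline


def bulk_request(body, es_url="http://localhost:9200"):
    """Prepare (but do not send) POST /_bulk; send it with urllib.request.urlopen."""
    return urllib.request.Request(
        es_url + "/_bulk",
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/x-ndjson"},
        method="POST",
    )
```

For 100,000 documents you would normally send several smaller batches (for example a few thousand lines each) rather than one large request.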

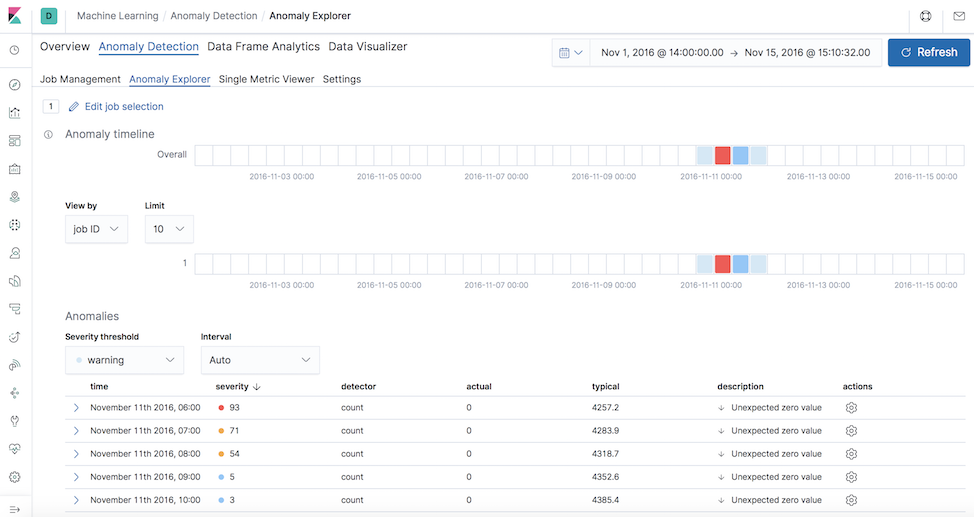

Anomaly Detection

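Anomaly detection seems to be available from Machine Learning → Anomaly Detection.

As a first try, I gave it the same data (output2.log).

It appears to rate how anomalous each data point is with a score.

Detecting anomalies properly will probably take more care in how the data is prepared and fed in.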
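Creating the job in the UI is the easiest route. The same kind of job can also be described as a configuration for the anomaly detection API (`PUT _ml/anomaly_detectors/<job_id>`). The Python sketch below only builds that request body and picks high-scoring results out of a `GET _ml/anomaly_detectors/<job_id>/results/records` response. Several choices are illustrative assumptions, not necessarily what the Kibana wizard generates: the detectors (a `count` of trips plus `high_mean` of `total_amount`), the `1h` bucket span, the `@timestamp` time field, the threshold of 75 and the function names. `record_score` is the 0 to 100 score Elasticsearch assigns to each anomaly record.

```python
import json


def anomaly_job_body(time_field="@timestamp", bucket_span="1h"):
    """Body for PUT _ml/anomaly_detectors/<job_id> (illustrative detector choice)."""
    return {
        "description": "NYC taxi trips (example)",
        "analysis_config": {
            "bucket_span": bucket_span,
            "detectors": [
                {"function": "count"},  # unusual number of trips per bucket
                {"function": "high_mean", "field_name": "total_amount"},  # unusually high fares
            ],
        },
        "data_description": {"time_field": time_field},
    }


def high_score_records(response_body, min_score=75.0):
    """Records from a results/records response with record_score >= min_score, highest first."""
    records = json.loads(response_body).get("records", [])
    hits = [r for r in records if r.get("record_score", 0) >= min_score]
    return sorted(hits, key=lambda r: r["record_score"], reverse=True)
```

A job created this way also needs a datafeed pointing at the uploaded index before it can analyze anything.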

Machine Learning shown as unavailable


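Whether Machine Learning is available depends on the license; the default Basic license does not include anomaly detection.

Switching to a Trial license made it available for the time being.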
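The trial can also be started through the Elasticsearch license API instead of the UI. This Python sketch only prepares two requests: `POST /_license/start_trial?acknowledge=true` starts the trial, and `GET /_license` checks the active license type. The helper names are illustrative, and the sketch assumes Elasticsearch on localhost:9200 as configured in docker-compose.yaml.

```python
import urllib.request

ES_URL = "http://localhost:9200"  # port published in docker-compose.yaml


def start_trial_request(es_url=ES_URL):
    """Prepare POST /_license/start_trial?acknowledge=true (starts the trial license)."""
    return urllib.request.Request(
        es_url + "/_license/start_trial?acknowledge=true", method="POST"
    )


def license_request(es_url=ES_URL):
    """Prepare GET /_license to check which license is active."""
    return urllib.request.Request(es_url + "/_license")
```

`urllib.request.urlopen(start_trial_request())` sends the request. The trial license lasts 30 days.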

References

Elasticsearch + Kibana を docker-compose でさくっと動かす (Quickly running Elasticsearch + Kibana with docker-compose)

Running Kibana on Docker

Install Elasticsearch with Docker

Install Compose on Linux

MacにELK(Elasticsearch, Logstash, Kibana)インストールしてみた (Installing ELK on a Mac)