This article is the day 10 entry of the QualiArts Advent Calendar 2022.

What is object motion blur?

As the name suggests, object motion blur is blur applied to objects that move or change quickly on screen.

| Object motion blur OFF | Object motion blur ON |
|---|---|
| (image) | (image) |

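The camera motion blur built into URP

URP ships with a Motion Blur Volume override.

However, this is camera motion blur. The blur is driven by the camera's movement, so an object spinning quickly in front of a still camera, like the one above, gets no blur.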

| URP Motion Blur OFF | URP Motion Blur ON |
|---|---|
| (image) | (image) |
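The following toy reprojection in plain Python (not Unity code) makes the difference concrete. It assumes, based on my understanding of URP's camera motion blur, that velocity comes from reprojecting the depth-reconstructed position with the previous frame's camera. That approach implicitly treats every surface as static. `project`, `rim_point` and the spinning-disc setup are hypothetical simplifications.

```python
import math

def project(point, cam_x):
    """Toy pinhole camera at (cam_x, 0, 0) looking down +z; returns screen (x, y)."""
    x, y, z = point
    return ((x - cam_x) / z, y / z)

def delta(a, b):
    return (a[0] - b[0], a[1] - b[1])

def camera_only_velocity(point_now, cam_now, cam_prev):
    # Camera motion blur (assumed model): treat the surface as static and
    # reproject the current position with last frame's camera.
    return delta(project(point_now, cam_now), project(point_now, cam_prev))

def object_velocity(point_now, point_prev, cam_now, cam_prev):
    # Per-object motion vectors: use where the point really was last frame.
    return delta(project(point_now, cam_now), project(point_prev, cam_prev))

def rim_point(angle):
    # A point on the rim of a disc spinning in place around (0, 0, 5).
    return (math.cos(angle), math.sin(angle), 5.0)

prev, now = rim_point(0.0), rim_point(math.pi / 2)  # a quarter turn in one frame
print(camera_only_velocity(now, 0.0, 0.0))  # (0.0, 0.0): static camera, no blur
print(object_velocity(now, prev, 0.0, 0.0))
```

With a static camera, the camera-only velocity is exactly zero. The per-object vector is not zero, and that per-object vector is what an object motion blur needs as input.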

The object motion blur in Post Processing Stack v2

Post Processing Stack v2 (PostProcessing below), on the other hand, does implement object motion blur.

So in this article I port PostProcessing's object motion blur to URP so that object motion blur can be used in URP.

Sample project

The project built for this article is published here.

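Walkthrough

This article assumes some familiarity with how URP's Renderer Features and Volumes work.

I don't fully understand how object motion blur works internally myself, so I can't explain it in detail here. If you are interested, read the paper A Fast and Stable Feature-Aware Motion Blur Filter.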

Environment

- Unity2021.3.13f1

- Universal Render Pipeline 12.1.7

Porting the implementation

Porting the VolumeComponent

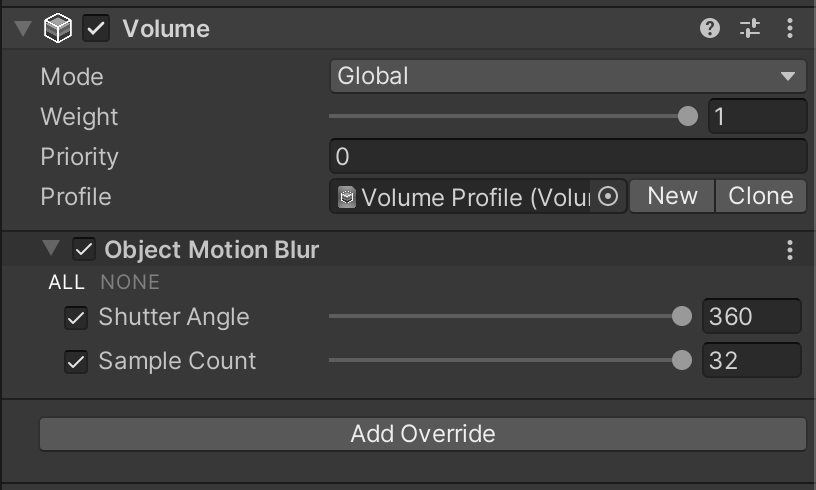

First, implement the object motion blur parameters for URP.

In PostProcessing, they are implemented here.

This lets you set the object motion blur parameters from a VolumeComponent.

```csharp
using UnityEngine.Rendering;

[System.Serializable, VolumeComponentMenu("Custom/Object Motion Blur")]
public class ObjectMotionBlur : VolumeComponent
{
    // Shutter angle in degrees (0-360). 0 disables the effect.
    public ClampedFloatParameter shutterAngle = new ClampedFloatParameter(0, 0, 360);

    // Sample count for the reconstruction filter (4-32). Higher is smoother but more expensive.
    public ClampedIntParameter sampleCount = new ClampedIntParameter(8, 4, 32);

    public bool IsActive()
    {
        return active && shutterAngle.overrideState && shutterAngle.value > 0 && sampleCount.value > 0;
    }
}
```
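The Volume values are not passed to the shader as-is. The plain-Python sketch below restates `IsActive()` together with the two conversions that PostProcessingMotionBlur performs later: `_VelocityScale = shutterAngle / 360` and `_LoopCount = Mathf.Clamp(sampleCount / 2, 1, 64)`. The function name and the flag arguments standing in for `active` and `overrideState` are hypothetical.

```python
def motion_blur_uniforms(shutter_angle, sample_count, active=True, angle_overridden=True):
    """Hypothetical helper: Volume values -> (IsActive, _VelocityScale, _LoopCount)."""
    # Same condition as ObjectMotionBlur.IsActive()
    is_active = active and angle_overridden and shutter_angle > 0 and sample_count > 0
    # Fraction of one frame during which the virtual shutter is open
    velocity_scale = shutter_angle / 360.0
    # C# int division (sampleCount is positive, so // matches), then Mathf.Clamp(.., 1, 64)
    loop_count = min(max(sample_count // 2, 1), 64)
    return is_active, velocity_scale, loop_count

print(motion_blur_uniforms(180, 8))  # (True, 0.5, 4)
```

A 180° shutter, the classic film setting, therefore blurs over half of each frame's motion. Because the default shutter angle is 0, the effect stays inactive until the parameter is overridden.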

Porting the shader

Next, port the shader that performs the object motion blur from PostProcessing.

In PostProcessing, this processing is implemented here.
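The first shader pass (`FragVelocitySetup`) and `SampleVelocity` form an encode/decode pair. Below is a plain-Python sketch of that arithmetic for a single pixel. It assumes the motion vector is stored in UV units per frame and ignores the depth channel. The vector is scaled to pixels, clamped to the maximum blur radius, and mapped into [0, 1] so it fits the unsigned 10-bit channels of ARGB2101010. The function names and `quantize_10bit` are hypothetical, and the 0.5 factor is copied from the shader unchanged.

```python
import math

def encode_velocity(mv_uv, velocity_scale, resolution, max_blur_radius):
    """Sketch of FragVelocitySetup's velocity math (depth channel omitted)."""
    # Apply the exposure time and convert UV-space motion to pixels.
    vx = mv_uv[0] * velocity_scale * 0.5 * resolution[0]
    vy = mv_uv[1] * velocity_scale * 0.5 * resolution[1]
    # Clamp the vector length to the maximum blur radius.
    scale = max(1.0, math.hypot(vx, vy) / max_blur_radius)
    vx, vy = vx / scale, vy / scale
    # Map [-max, max] pixels to [0, 1] so it fits an unsigned normalized channel.
    return ((vx / max_blur_radius + 1.0) * 0.5, (vy / max_blur_radius + 1.0) * 0.5)

def quantize_10bit(encoded):
    # Simulate storing each component in a 10-bit UNorm channel.
    return tuple(round(c * 1023) / 1023 for c in encoded)

def decode_velocity(encoded, max_blur_radius):
    """Sketch of SampleVelocity: back from [0, 1] to pixels."""
    return tuple((c * 2.0 - 1.0) * max_blur_radius for c in encoded)

encoded = encode_velocity((0.02, 0.0), 0.75, (1920, 1080), 54.0)
print(decode_velocity(quantize_10bit(encoded), 54.0))
```

With a 1920x1080 target, a 54-pixel radius and a 270° shutter, a motion of 0.02 UV per frame becomes a 14.4-pixel vector. After 10-bit quantization it decodes to within 54 / 1023 (about 0.05) pixels of that value. Anything longer than the radius is clamped to it.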

The ported shader

```hlsl
Shader "Hidden/PostEffect/ObjectMotionBlur"
{
    HLSLINCLUDE
    #pragma target 3.0

    #include "Packages/com.unity.render-pipelines.universal/Shaders/PostProcessing/Common.hlsl"
    #include "Packages/com.unity.render-pipelines.universal/ShaderLibrary/Core.hlsl"

    // Source texture (bound explicitly by the C# side)
    TEXTURE2D(_SourceTex); float4 _SourceTex_TexelSize;

    // Camera depth texture
    TEXTURE2D_X_FLOAT(_CameraDepthTexture); SAMPLER(sampler_CameraDepthTexture);

    // Camera motion vectors texture
    TEXTURE2D(_MotionVectorTexture); SAMPLER(sampler_MotionVectorTexture);
    float4 _MotionVectorTexture_TexelSize;

    // Packed velocity texture (2/10/10/10)
    TEXTURE2D(_VelocityTex); SAMPLER(sampler_VelocityTex);
    float2 _VelocityTex_TexelSize;

    // NeighborMax texture
    TEXTURE2D(_NeighborMaxTex); SAMPLER(sampler_NeighborMaxTex);
    float2 _NeighborMaxTex_TexelSize;

    // Velocity scale factor
    float _VelocityScale;

    // TileMax filter parameters
    int _TileMaxLoop;
    float2 _TileMaxOffs;

    // Maximum blur radius (in pixels)
    half _MaxBlurRadius;
    float _RcpMaxBlurRadius;

    // Filter parameters/coefficients
    half _LoopCount;

    // -----------------------------------------------------------------------------
    // Prefilter

    // PostProcessing's orthographic-aware Linear01Depth.
    // Not used below: FragVelocitySetup calls URP's Linear01Depth instead.
    float Linear01DepthPPV2(float z)
    {
        float isOrtho = unity_OrthoParams.w;
        float isPers = 1.0 - unity_OrthoParams.w;
        z *= _ZBufferParams.x;
        return (1.0 - isOrtho * z) / (isPers * z + _ZBufferParams.y);
    }

    // Velocity texture setup
    half4 FragVelocitySetup(Varyings i) : SV_Target
    {
        // Sample the motion vector.
        float2 v = SAMPLE_TEXTURE2D(_MotionVectorTexture, sampler_MotionVectorTexture, i.uv).rg;

        // Apply the exposure time and convert to the pixel space.
        v *= (_VelocityScale * 0.5) * _MotionVectorTexture_TexelSize.zw;

        // Clamp the vector with the maximum blur radius.
        v /= max(1.0, length(v) * _RcpMaxBlurRadius);

        // Sample the depth of the pixel.
        half d = Linear01Depth(SAMPLE_DEPTH_TEXTURE(_CameraDepthTexture, sampler_CameraDepthTexture, i.uv), _ZBufferParams);

        // Pack into 10/10/10/2 format.
        return half4((v * _RcpMaxBlurRadius + 1.0) * 0.5, d, 0.0);
    }

    half2 MaxV(half2 v1, half2 v2)
    {
        return dot(v1, v1) < dot(v2, v2) ? v2 : v1;
    }

    // TileMax filter (2 pixel width with normalization)
    half4 FragTileMax1(Varyings i) : SV_Target
    {
        float4 d = _SourceTex_TexelSize.xyxy * float4(-0.5, -0.5, 0.5, 0.5);

        half2 v1 = SAMPLE_TEXTURE2D(_SourceTex, sampler_LinearClamp, i.uv + d.xy).rg;
        half2 v2 = SAMPLE_TEXTURE2D(_SourceTex, sampler_LinearClamp, i.uv + d.zy).rg;
        half2 v3 = SAMPLE_TEXTURE2D(_SourceTex, sampler_LinearClamp, i.uv + d.xw).rg;
        half2 v4 = SAMPLE_TEXTURE2D(_SourceTex, sampler_LinearClamp, i.uv + d.zw).rg;

        v1 = (v1 * 2.0 - 1.0) * _MaxBlurRadius;
        v2 = (v2 * 2.0 - 1.0) * _MaxBlurRadius;
        v3 = (v3 * 2.0 - 1.0) * _MaxBlurRadius;
        v4 = (v4 * 2.0 - 1.0) * _MaxBlurRadius;

        return half4(MaxV(MaxV(MaxV(v1, v2), v3), v4), 0.0, 0.0);
    }

    // TileMax filter (2 pixel width)
    half4 FragTileMax2(Varyings i) : SV_Target
    {
        float4 d = _SourceTex_TexelSize.xyxy * float4(-0.5, -0.5, 0.5, 0.5);

        half2 v1 = SAMPLE_TEXTURE2D(_SourceTex, sampler_LinearClamp, i.uv + d.xy).rg;
        half2 v2 = SAMPLE_TEXTURE2D(_SourceTex, sampler_LinearClamp, i.uv + d.zy).rg;
        half2 v3 = SAMPLE_TEXTURE2D(_SourceTex, sampler_LinearClamp, i.uv + d.xw).rg;
        half2 v4 = SAMPLE_TEXTURE2D(_SourceTex, sampler_LinearClamp, i.uv + d.zw).rg;

        return half4(MaxV(MaxV(MaxV(v1, v2), v3), v4), 0.0, 0.0);
    }

    // TileMax filter (variable width)
    half4 FragTileMaxV(Varyings i) : SV_Target
    {
        float2 uv0 = i.uv + _SourceTex_TexelSize.xy * _TileMaxOffs.xy;

        float2 du = float2(_SourceTex_TexelSize.x, 0.0);
        float2 dv = float2(0.0, _SourceTex_TexelSize.y);

        half2 vo = 0.0;

        UNITY_LOOP
        for (int ix = 0; ix < _TileMaxLoop; ix++)
        {
            UNITY_LOOP
            for (int iy = 0; iy < _TileMaxLoop; iy++)
            {
                float2 uv = uv0 + du * ix + dv * iy;
                vo = MaxV(vo, SAMPLE_TEXTURE2D(_SourceTex, sampler_LinearClamp, uv).rg);
            }
        }

        return half4(vo, 0.0, 0.0);
    }

    // NeighborMax filter
    half4 FragNeighborMax(Varyings i) : SV_Target
    {
        const half cw = 1.01; // Center weight tweak

        float4 d = _SourceTex_TexelSize.xyxy * float4(1.0, 1.0, -1.0, 0.0);

        half2 v1 = SAMPLE_TEXTURE2D(_SourceTex, sampler_LinearClamp, i.uv - d.xy).rg;
        half2 v2 = SAMPLE_TEXTURE2D(_SourceTex, sampler_LinearClamp, i.uv - d.wy).rg;
        half2 v3 = SAMPLE_TEXTURE2D(_SourceTex, sampler_LinearClamp, i.uv - d.zy).rg;

        half2 v4 = SAMPLE_TEXTURE2D(_SourceTex, sampler_LinearClamp, i.uv - d.xw).rg;
        half2 v5 = SAMPLE_TEXTURE2D(_SourceTex, sampler_LinearClamp, i.uv).rg * cw;
        half2 v6 = SAMPLE_TEXTURE2D(_SourceTex, sampler_LinearClamp, i.uv + d.xw).rg;

        half2 v7 = SAMPLE_TEXTURE2D(_SourceTex, sampler_LinearClamp, i.uv + d.zy).rg;
        half2 v8 = SAMPLE_TEXTURE2D(_SourceTex, sampler_LinearClamp, i.uv + d.wy).rg;
        half2 v9 = SAMPLE_TEXTURE2D(_SourceTex, sampler_LinearClamp, i.uv + d.xy).rg;

        half2 va = MaxV(v1, MaxV(v2, v3));
        half2 vb = MaxV(v4, MaxV(v5, v6));
        half2 vc = MaxV(v7, MaxV(v8, v9));

        return half4(MaxV(va, MaxV(vb, vc)) * (1.0 / cw), 0.0, 0.0);
    }

    // -----------------------------------------------------------------------------
    // Reconstruction

    // Interleaved gradient function from Jimenez 2014
    // http://www.iryoku.com/next-generation-post-processing-in-call-of-duty-advanced-warfare
    float GradientNoise(float2 uv)
    {
        uv = floor(uv * _ScreenParams.xy);
        float f = dot(float2(0.06711056, 0.00583715), uv);
        return frac(52.9829189 * frac(f));
    }

    // Returns true or false with a given interval.
    bool Interval(half phase, half interval)
    {
        return frac(phase / interval) > 0.499;
    }

    // Jitter function for tile lookup
    float2 JitterTile(float2 uv)
    {
        float rx, ry;
        sincos(GradientNoise(uv + float2(2.0, 0.0)) * TWO_PI, ry, rx);
        return float2(rx, ry) * _NeighborMaxTex_TexelSize.xy * 0.25;
    }

    // Velocity sampling function
    half3 SampleVelocity(float2 uv)
    {
        half3 v = SAMPLE_TEXTURE2D_LOD(_VelocityTex, sampler_VelocityTex, uv, 0.0).xyz;
        return half3((v.xy * 2.0 - 1.0) * _MaxBlurRadius, v.z);
    }

    // Reconstruction filter
    half4 FragReconstruction(Varyings i) : SV_Target
    {
        // Color sample at the center point
        const float4 c_p = SAMPLE_TEXTURE2D(_SourceTex, sampler_LinearClamp, i.uv);

        // Velocity/Depth sample at the center point
        const float3 vd_p = SampleVelocity(i.uv);
        const float l_v_p = max(length(vd_p.xy), 0.5);
        const float rcp_d_p = 1.0 / vd_p.z;

        // NeighborMax vector sample at the center point
        const float2 v_max = SAMPLE_TEXTURE2D(_NeighborMaxTex, sampler_NeighborMaxTex, i.uv + JitterTile(i.uv)).xy;
        const float l_v_max = length(v_max);
        const float rcp_l_v_max = 1.0 / l_v_max;

        // Escape early if the NeighborMax vector is small enough.
        if (l_v_max < 2.0) return c_p;

        // Use V_p as a secondary sampling direction except when it's too small
        // compared to V_max. This vector is rescaled to be the length of V_max.
        const half2 v_alt = (l_v_p * 2.0 > l_v_max) ? vd_p.xy * (l_v_max / l_v_p) : v_max;

        // Determine the sample count.
        const half sc = floor(min(_LoopCount, l_v_max * 0.5));

        // Loop variables (starts from the outermost sample)
        const half dt = 1.0 / sc;
        const half t_offs = (GradientNoise(i.uv) - 0.5) * dt;
        float t = 1.0 - dt * 0.5;
        float count = 0.0;

        // Background velocity
        // This is used for tracking the maximum velocity in the background layer.
        float l_v_bg = max(l_v_p, 1.0);

        // Color accumulation
        float4 acc = 0.0;

        UNITY_LOOP
        while (t > dt * 0.25)
        {
            // Sampling direction (switched per every two samples)
            const float2 v_s = Interval(count, 4.0) ? v_alt : v_max;

            // Sample position (inverted per every sample)
            const float t_s = (Interval(count, 2.0) ? -t : t) + t_offs;

            // Distance to the sample position
            const float l_t = l_v_max * abs(t_s);

            // UVs for the sample position
            const float2 uv0 = i.uv + v_s * t_s * _SourceTex_TexelSize.xy;
            const float2 uv1 = i.uv + v_s * t_s * _VelocityTex_TexelSize.xy;

            // Color sample
            const float3 c = SAMPLE_TEXTURE2D_LOD(_SourceTex, sampler_LinearClamp, uv0, 0.0).rgb;

            // Velocity/Depth sample
            const float3 vd = SampleVelocity(uv1);

            // Background/Foreground separation
            const float fg = saturate((vd_p.z - vd.z) * 20.0 * rcp_d_p);

            // Length of the velocity vector
            const float l_v = lerp(l_v_bg, length(vd.xy), fg);

            // Sample weight
            // (Distance test) * (Spreading out by motion) * (Triangular window)
            const float w = saturate(l_v - l_t) / l_v * (1.2 - t);

            // Color accumulation
            acc += half4(c, 1.0) * w;

            // Update the background velocity.
            l_v_bg = max(l_v_bg, l_v);

            // Advance to the next sample.
            t = Interval(count, 2.0) ? t - dt : t;
            count += 1.0;
        }

        // Add the center sample.
        acc += float4(c_p.rgb, 1.0) * (1.2 / (l_v_bg * sc * 2.0));

        return half4(acc.rgb / acc.a, c_p.a);
    }
    ENDHLSL

    SubShader
    {
        Cull Off ZWrite Off ZTest Always

        // (0) Velocity texture setup
        Pass
        {
            HLSLPROGRAM
            #pragma vertex Vert
            #pragma fragment FragVelocitySetup
            ENDHLSL
        }

        // (1) TileMax filter (2 pixel width with normalization)
        Pass
        {
            HLSLPROGRAM
            #pragma vertex Vert
            #pragma fragment FragTileMax1
            ENDHLSL
        }

        // (2) TileMax filter (2 pixel width)
        Pass
        {
            HLSLPROGRAM
            #pragma vertex Vert
            #pragma fragment FragTileMax2
            ENDHLSL
        }

        // (3) TileMax filter (variable width)
        Pass
        {
            HLSLPROGRAM
            #pragma vertex Vert
            #pragma fragment FragTileMaxV
            ENDHLSL
        }

        // (4) NeighborMax filter
        Pass
        {
            HLSLPROGRAM
            #pragma vertex Vert
            #pragma fragment FragNeighborMax
            ENDHLSL
        }

        // (5) Reconstruction filter
        Pass
        {
            HLSLPROGRAM
            #pragma vertex Vert
            #pragma fragment FragReconstruction
            ENDHLSL
        }
    }
}
```
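The sampling order inside `FragReconstruction`'s loop is easy to misread. The plain-Python sketch below reproduces only two things per iteration: which direction is used (`v_max` or `v_alt`) and which signed position `t_s` is sampled. It drops the per-pixel jitter `t_offs`, the texture fetches and the weights. The function names are hypothetical, and `interval` mirrors the shader's `Interval()`.

```python
import math

def interval(phase, period):
    # Mirrors Interval(): true during the second half of each period.
    x = phase / period
    return x - math.floor(x) > 0.499

def sample_schedule(loop_count, l_v_max):
    """(direction, t_s) per loop iteration of FragReconstruction, without jitter."""
    if l_v_max < 2.0:
        return []  # the shader returns the center color early
    sc = math.floor(min(loop_count, l_v_max * 0.5))
    dt = 1.0 / sc
    t = 1.0 - dt * 0.5  # start from the outermost sample
    count = 0
    schedule = []
    while t > dt * 0.25:
        direction = "v_alt" if interval(count, 4.0) else "v_max"
        t_s = -t if interval(count, 2.0) else t
        schedule.append((direction, t_s))
        if interval(count, 2.0):
            t -= dt  # move inward after each +t/-t pair
        count += 1
    return schedule

for step in sample_schedule(4, 100.0):
    print(step)
```

Each `t` is visited twice, once on each side of the pixel, and the direction switches every two samples. A pixel therefore takes at most `2 * _LoopCount` taps plus the center sample. In slowly moving regions, `l_v_max * 0.5` lowers the count further.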

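Porting the passes

Next, port the CPU-side code.

This code invokes the passes defined in the shader above and sets their parameters.

It could also go inside the Execute method of the ScriptableRenderPass subclass described later, but for clarity I split it out into a separate class called PostProcessingMotionBlur.

In PostProcessing, this processing is implemented here.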
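Passes 2 to 6 below never touch colors. They first shrink the velocity buffer to one vector per tile, keeping the longest one via `MaxV`. They then take the longest vector in each tile's 3x3 neighborhood, so the reconstruction pass knows the dominant motion that can reach a pixel. The plain-Python sketch below simplifies this reduction. It computes exact block maxima instead of the shader's chain of 2x2 passes and omits NeighborMax's 1.01 center-weight tweak. All names are hypothetical.

```python
def max_v(a, b):
    # Keep the longer vector (ties keep the first), like MaxV in the shader.
    return b if a[0] ** 2 + a[1] ** 2 < b[0] ** 2 + b[1] ** 2 else a

def tile_max(field, tile):
    """Longest vector in each tile x tile block of a 2D velocity field."""
    h, w = len(field), len(field[0])
    out = []
    for ty in range(h // tile):
        row = []
        for tx in range(w // tile):
            best = (0.0, 0.0)
            for y in range(ty * tile, (ty + 1) * tile):
                for x in range(tx * tile, (tx + 1) * tile):
                    best = max_v(best, field[y][x])
            row.append(best)
        out.append(row)
    return out

def neighbor_max(tiles):
    """Longest vector among each tile and its 8 neighbors (clamped at the edges)."""
    h, w = len(tiles), len(tiles[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            best = (0.0, 0.0)
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy = min(max(y + dy, 0), h - 1)
                    xx = min(max(x + dx, 0), w - 1)
                    best = max_v(best, tiles[yy][xx])
            row.append(best)
        out.append(row)
    return out

# One fast pixel in an otherwise still 2x6 field, reduced with 2x2 tiles.
field = [[(0.0, 0.0)] * 6 for _ in range(2)]
field[0][0] = (3.0, 0.0)
print(neighbor_max(tile_max(field, 2)))
```

The fast pixel's vector spreads to the adjacent tile but not to the tile two steps away. That spread is what lets a moving edge blur into neighboring still pixels.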

Full code

```csharp
using UnityEngine;
using UnityEngine.Rendering;

public class PostProcessingMotionBlur
{
    // Pass indices; must match the Pass order in Hidden/PostEffect/ObjectMotionBlur
    enum Pass
    {
        VelocitySetup,
        TileMax1,
        TileMax2,
        TileMaxV,
        NeighborMax,
        Reconstruction
    }

    static class ShaderIDs
    {
        internal static readonly int SourceTex = Shader.PropertyToID("_SourceTex");
        internal static readonly int VelocityScale = Shader.PropertyToID("_VelocityScale");
        internal static readonly int MaxBlurRadius = Shader.PropertyToID("_MaxBlurRadius");
        internal static readonly int RcpMaxBlurRadius = Shader.PropertyToID("_RcpMaxBlurRadius");
        internal static readonly int VelocityTex = Shader.PropertyToID("_VelocityTex");
        internal static readonly int Tile2RT = Shader.PropertyToID("_Tile2RT");
        internal static readonly int Tile4RT = Shader.PropertyToID("_Tile4RT");
        internal static readonly int Tile8RT = Shader.PropertyToID("_Tile8RT");
        internal static readonly int TileMaxOffs = Shader.PropertyToID("_TileMaxOffs");
        internal static readonly int TileMaxLoop = Shader.PropertyToID("_TileMaxLoop");
        internal static readonly int TileVRT = Shader.PropertyToID("_TileVRT");
        internal static readonly int NeighborMaxTex = Shader.PropertyToID("_NeighborMaxTex");
        internal static readonly int LoopCount = Shader.PropertyToID("_LoopCount");
    }

    private void CreateTemporaryRT(CommandBuffer cmd, RenderTextureDescriptor rtDesc, int nameID,
        int width, int height, RenderTextureFormat rtFormat)
    {
        rtDesc.width = width;
        rtDesc.height = height;
        // Velocity data is not color: keep it linear (matters for the ARGB32 fallback)
        rtDesc.sRGB = false;
        rtDesc.colorFormat = rtFormat;
        // Intermediate buffers need neither a depth buffer nor MSAA
        rtDesc.depthBufferBits = 0;
        rtDesc.msaaSamples = 1;
        cmd.GetTemporaryRT(nameID, rtDesc, FilterMode.Point);
    }

    public void ObjectMotionBlur(
        CommandBuffer cmd,
        Material material,
        RenderTargetIdentifier source,
        RenderTargetIdentifier destination,
        RenderTextureDescriptor desc)
    {
        var objectMotionBlur = VolumeManager.instance.stack.GetComponent<ObjectMotionBlur>();

        const float kMaxBlurRadius = 5f;
        var vectorRTFormat = RenderTextureFormat.RGHalf;
        var packedRTFormat = SystemInfo.SupportsRenderTextureFormat(RenderTextureFormat.ARGB2101010)
            ? RenderTextureFormat.ARGB2101010
            : RenderTextureFormat.ARGB32;

        var width = desc.width;
        var height = desc.height;
        desc.colorFormat = packedRTFormat;

        // Calculate the maximum blur radius in pixels (5% of the screen height).
        int maxBlurPixels = (int)(kMaxBlurRadius * height / 100);

        // Calculate the TileMax size.
        // It should be a multiple of 8 and larger than maxBlur.
        int tileSize = ((maxBlurPixels - 1) / 8 + 1) * 8;

        // Pass 1 - Velocity/depth packing
        var velocityScale = objectMotionBlur.shutterAngle.value / 360f;
        material.SetFloat(ShaderIDs.VelocityScale, velocityScale);
        material.SetFloat(ShaderIDs.MaxBlurRadius, maxBlurPixels);
        material.SetFloat(ShaderIDs.RcpMaxBlurRadius, 1f / maxBlurPixels);

        int vbuffer = ShaderIDs.VelocityTex;
        CreateTemporaryRT(cmd, desc, vbuffer, width, height, packedRTFormat);
        Blit(cmd, BuiltinRenderTextureType.None, vbuffer, material, (int)Pass.VelocitySetup);

        // Pass 2 - First TileMax filter (1/2 downsize)
        int tile2 = ShaderIDs.Tile2RT;
        CreateTemporaryRT(cmd, desc, tile2, width / 2, height / 2, vectorRTFormat);
        Blit(cmd, vbuffer, tile2, material, (int)Pass.TileMax1);

        // Pass 3 - Second TileMax filter (1/2 downsize)
        int tile4 = ShaderIDs.Tile4RT;
        CreateTemporaryRT(cmd, desc, tile4, width / 4, height / 4, vectorRTFormat);
        Blit(cmd, tile2, tile4, material, (int)Pass.TileMax2);
        cmd.ReleaseTemporaryRT(tile2);

        // Pass 4 - Third TileMax filter (1/2 downsize)
        int tile8 = ShaderIDs.Tile8RT;
        CreateTemporaryRT(cmd, desc, tile8, width / 8, height / 8, vectorRTFormat);
        Blit(cmd, tile4, tile8, material, (int)Pass.TileMax2);
        cmd.ReleaseTemporaryRT(tile4);

        // Pass 5 - Fourth TileMax filter (reduce to tileSize)
        var tileMaxOffs = Vector2.one * (tileSize / 8f - 1f) * -0.5f;
        material.SetVector(ShaderIDs.TileMaxOffs, tileMaxOffs);
        material.SetFloat(ShaderIDs.TileMaxLoop, (int)(tileSize / 8f));

        int tile = ShaderIDs.TileVRT;
        CreateTemporaryRT(cmd, desc, tile, width / tileSize, height / tileSize, vectorRTFormat);
        Blit(cmd, tile8, tile, material, (int)Pass.TileMaxV);
        cmd.ReleaseTemporaryRT(tile8);

        // Pass 6 - NeighborMax filter
        int neighborMax = ShaderIDs.NeighborMaxTex;
        CreateTemporaryRT(cmd, desc, neighborMax, width / tileSize, height / tileSize, vectorRTFormat);
        Blit(cmd, tile, neighborMax, material, (int)Pass.NeighborMax);
        cmd.ReleaseTemporaryRT(tile);

        // Pass 7 - Reconstruction pass
        material.SetFloat(ShaderIDs.LoopCount, Mathf.Clamp(objectMotionBlur.sampleCount.value / 2, 1, 64));
        Blit(cmd, source, destination, material, (int)Pass.Reconstruction);

        cmd.ReleaseTemporaryRT(vbuffer);
        cmd.ReleaseTemporaryRT(neighborMax);
    }

    private void Blit(CommandBuffer cmd, RenderTargetIdentifier source, RenderTargetIdentifier destination,
        Material material, int passIndex = 0)
    {
        // The shader reads its input from _SourceTex, so bind it explicitly before blitting
        cmd.SetGlobalTexture(ShaderIDs.SourceTex, source);
        cmd.Blit(source, destination, material, passIndex);
    }
}
```
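All the sizes used in `ObjectMotionBlur` depend only on the screen resolution. The plain-Python sketch below mirrors that arithmetic so you can check the numbers for your own resolution: the maximum blur radius (5% of screen height), the tile size rounded up to a multiple of 8, the 1/2, 1/4, 1/8 TileMax chain, and the final tile grid. `tile_plan` and `trunc_div` are hypothetical helpers. `trunc_div` exists because C# integer division truncates toward zero, whereas Python's `//` floors.

```python
import math

def trunc_div(a, b):
    # C# integer division truncates toward zero; Python's // floors.
    return math.trunc(a / b)

def tile_plan(width, height, k_max_blur_radius=5.0):
    """Mirror of the size calculations in PostProcessingMotionBlur.ObjectMotionBlur."""
    max_blur_pixels = int(k_max_blur_radius * height / 100)
    # Multiple of 8 that is >= max_blur_pixels: three 1/2 passes give 1/8 size,
    # then TileMaxV merges tile_size / 8 texels per axis.
    tile_size = (trunc_div(max_blur_pixels - 1, 8) + 1) * 8
    return {
        "max_blur_pixels": max_blur_pixels,
        "tile_size": tile_size,
        "tile_max_loop": int(tile_size / 8),           # _TileMaxLoop
        "tile_max_offs": (tile_size / 8 - 1) * -0.5,   # _TileMaxOffs (both axes)
        "chain": [(trunc_div(width, d), trunc_div(height, d)) for d in (2, 4, 8)],
        "tile_rt": (trunc_div(width, tile_size), trunc_div(height, tile_size)),
    }

print(tile_plan(1920, 1080))
```

At 1920x1080 this gives a 54-pixel blur radius and 56-pixel tiles. The TileMaxV pass runs a 7x7 loop covering offsets of -3 to +3 texels, and the NeighborMax texture is 34x19.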

Implementing the ScriptableRenderPass

Next, implement a pass that inherits from ScriptableRenderPass.

Object motion blur needs motion vectors, so the pass calls ConfigureInput(ScriptableRenderPassInput.Motion) to ask URP to render the motion vector pass.

```csharp
using UnityEngine;
using UnityEngine.Rendering;
using UnityEngine.Rendering.Universal;

public class ObjectMotionBlurPass : ScriptableRenderPass
{
    private readonly ProfilingSampler _objectMotionBlurSampler = new("Object Motion Blur");
    private readonly PostProcessingMotionBlur _postProcessingMotionBlur;
    private readonly RenderTargetHandle _tmpColorBuffer;
    private Material _material;

    public ObjectMotionBlurPass(Shader shader)
    {
        // Ask URP to render motion vectors
        ConfigureInput(ScriptableRenderPassInput.Motion);

        _postProcessingMotionBlur = new PostProcessingMotionBlur();
        _tmpColorBuffer.Init("_TempColorBuffer");
        _material = CoreUtils.CreateEngineMaterial(shader);
    }

    public override void Execute(ScriptableRenderContext context, ref RenderingData renderingData)
    {
        ref var cameraData = ref renderingData.cameraData;

        // Don't apply the blur in the Scene view
        if (cameraData.cameraType == CameraType.SceneView) return;

        // Skip the pass entirely while the Volume leaves the effect disabled
        var objectMotionBlur = VolumeManager.instance.stack.GetComponent<ObjectMotionBlur>();
        if (objectMotionBlur == null || !objectMotionBlur.IsActive()) return;

        CommandBuffer cmd = CommandBufferPool.Get();
        using (new ProfilingScope(cmd, _objectMotionBlurSampler))
        {
            var descriptor = cameraData.cameraTargetDescriptor;
            // The copy only needs color, not depth
            descriptor.depthBufferBits = 0;
            var colorTarget = cameraData.renderer.cameraColorTarget;

            // Copy the camera image into _TempColorBuffer
            cmd.GetTemporaryRT(_tmpColorBuffer.id, descriptor);
            Blit(cmd, colorTarget, _tmpColorBuffer.id);

            // Object motion blur: read from the copy, write back to the camera target
            _postProcessingMotionBlur.ObjectMotionBlur(cmd, _material, _tmpColorBuffer.id, colorTarget, descriptor);

            cmd.ReleaseTemporaryRT(_tmpColorBuffer.id);
        }

        context.ExecuteCommandBuffer(cmd);
        CommandBufferPool.Release(cmd);
    }
}
```

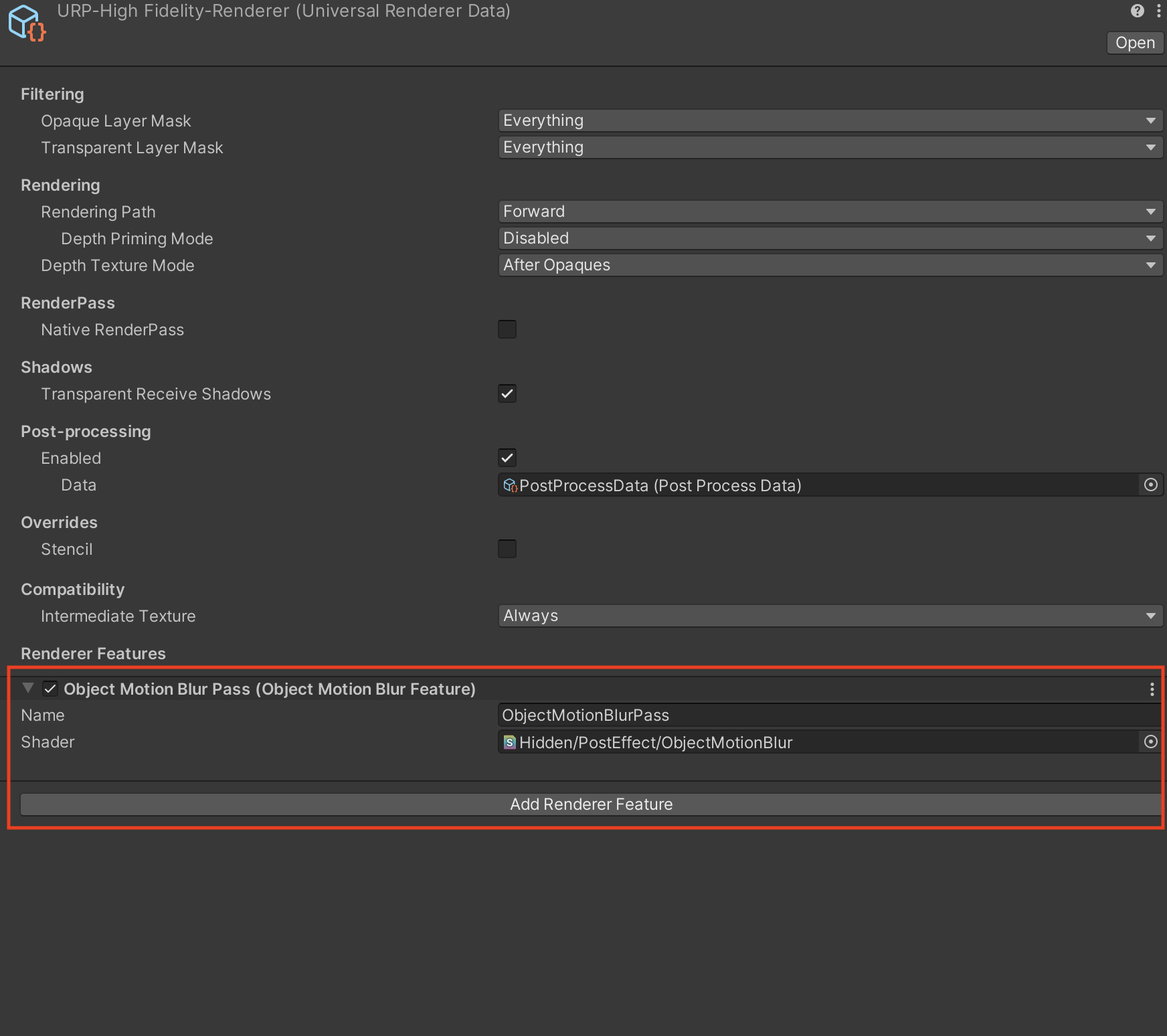

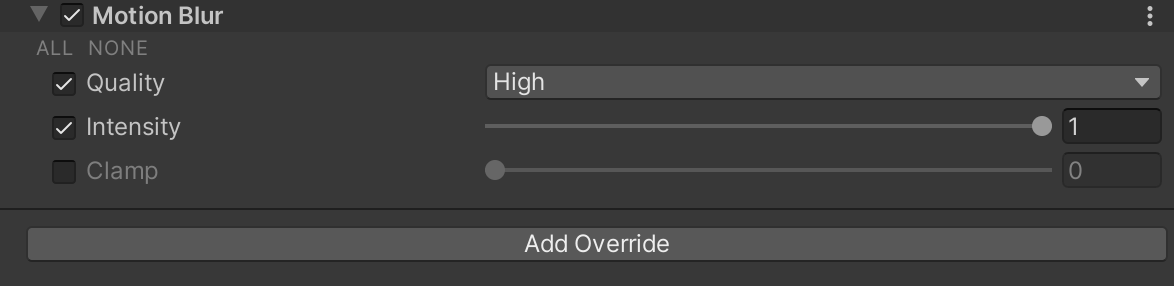

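Setting up the RenderFeature

Finally, add the RenderFeature to the Renderer and assign the shader you created. That's it.

Shutter Angle controls how strongly the blur is applied, and Sample Count controls the blur quality (and therefore the processing cost).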