I tried out Cilium, a CNI plugin.

Caveat

- The steps below follow the quick-start way of installing Cilium, so look up the appropriate procedure separately for production use.

Preparing the cluster

I used EKS.

$ eksctl create cluster --name=cluster-1 --nodes=3 --node-type=t3.medium --vpc-cidr=10.10.0.0/16

Clone the Cilium repository

$ git clone https://github.com/cilium/cilium

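Install Cilium

Some resources are reported as unchanged, but that is not a problem (applying the whole directory presumably processes several manifests that define the same resources).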

$ kubectl apply -f cilium/examples/kubernetes/1.10

configmap/cilium-config created

daemonset.apps/cilium created

configmap/cilium-config unchanged

daemonset.apps/cilium unchanged

clusterrole.rbac.authorization.k8s.io/cilium-etcd-operator created

clusterrolebinding.rbac.authorization.k8s.io/cilium-etcd-operator created

clusterrole.rbac.authorization.k8s.io/etcd-operator created

clusterrolebinding.rbac.authorization.k8s.io/etcd-operator created

serviceaccount/cilium-etcd-operator created

serviceaccount/cilium-etcd-sa created

deployment.apps/cilium-etcd-operator created

deployment.apps/cilium-operator created

serviceaccount/cilium-operator created

clusterrole.rbac.authorization.k8s.io/cilium-operator created

clusterrolebinding.rbac.authorization.k8s.io/cilium-operator created

clusterrolebinding.rbac.authorization.k8s.io/cilium created

clusterrole.rbac.authorization.k8s.io/cilium created

serviceaccount/cilium created

daemonset.apps/cilium configured

clusterrole.rbac.authorization.k8s.io/cilium-etcd-operator unchanged

clusterrolebinding.rbac.authorization.k8s.io/cilium-etcd-operator unchanged

clusterrole.rbac.authorization.k8s.io/etcd-operator unchanged

clusterrolebinding.rbac.authorization.k8s.io/etcd-operator unchanged

serviceaccount/cilium-etcd-operator unchanged

serviceaccount/cilium-etcd-sa unchanged

deployment.apps/cilium-etcd-operator unchanged

deployment.apps/cilium-operator unchanged

serviceaccount/cilium-operator unchanged

clusterrole.rbac.authorization.k8s.io/cilium-operator unchanged

clusterrolebinding.rbac.authorization.k8s.io/cilium-operator unchanged

daemonset.apps/cilium-pre-flight-check created

clusterrolebinding.rbac.authorization.k8s.io/cilium unchanged

clusterrole.rbac.authorization.k8s.io/cilium unchanged

serviceaccount/cilium unchanged

configmap/cilium-config unchanged

daemonset.apps/cilium unchanged

clusterrole.rbac.authorization.k8s.io/cilium-etcd-operator unchanged

clusterrolebinding.rbac.authorization.k8s.io/cilium-etcd-operator unchanged

clusterrole.rbac.authorization.k8s.io/etcd-operator unchanged

clusterrolebinding.rbac.authorization.k8s.io/etcd-operator unchanged

serviceaccount/cilium-etcd-operator unchanged

serviceaccount/cilium-etcd-sa unchanged

deployment.apps/cilium-etcd-operator unchanged

deployment.apps/cilium-operator unchanged

serviceaccount/cilium-operator unchanged

clusterrole.rbac.authorization.k8s.io/cilium-operator unchanged

clusterrolebinding.rbac.authorization.k8s.io/cilium-operator unchanged

clusterrolebinding.rbac.authorization.k8s.io/cilium unchanged

clusterrole.rbac.authorization.k8s.io/cilium unchanged

serviceaccount/cilium unchanged

Use the following command to check that everything has reached the READY state

$ kubectl -n kube-system get all

NAME                                       READY   STATUS    RESTARTS   AGE
pod/aws-node-g9cvv                         1/1     Running   0          12m
pod/aws-node-rwtjw                         1/1     Running   1          12m
pod/cilium-etcd-ddrzks7bnl                 1/1     Running   0          14s
pod/cilium-etcd-kjdd2r52fr                 1/1     Running   0          3m
pod/cilium-etcd-operator-9fbc5f54d-879fl   1/1     Running   0          4m
pod/cilium-etcd-zxlzx4tm5j                 1/1     Running   0          3m
pod/cilium-operator-7d75f5fcc-sgzzt        1/1     Running   1          4m
pod/cilium-ppwxl                           1/1     Running   0          4m
pod/cilium-pre-flight-check-2p4c2          1/1     Running   0          4m
pod/cilium-pre-flight-check-wrcsm          1/1     Running   0          4m
pod/cilium-xqzhn                           1/1     Running   0          4m
pod/etcd-operator-58cf6d756d-5jjd7         1/1     Running   0          4m
pod/kube-dns-64b69465b4-2fbsz              3/3     Running   0          18m
pod/kube-proxy-lkd4t                       1/1     Running   0          12m
pod/kube-proxy-qxzlh                       1/1     Running   0          12m

NAME                         TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)             AGE
service/cilium-etcd          ClusterIP   None             <none>        2379/TCP,2380/TCP   4m
service/cilium-etcd-client   ClusterIP   172.20.138.230   <none>        2379/TCP            4m
service/kube-dns             ClusterIP   172.20.0.10      <none>        53/UDP,53/TCP       18m

NAME                                     DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR   AGE
daemonset.apps/aws-node                  2         2         2       2            2           <none>          18m
daemonset.apps/cilium                    2         2         2       2            2           <none>          4m
daemonset.apps/cilium-pre-flight-check   2         2         2       2            2           <none>          4m
daemonset.apps/kube-proxy                2         2         2       2            2           <none>          18m

NAME                                   DESIRED   CURRENT   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/cilium-etcd-operator   1         1         1            1           4m
deployment.apps/cilium-operator        1         1         1            1           4m
deployment.apps/etcd-operator          1         1         1            1           4m
deployment.apps/kube-dns               1         1         1            1           18m

NAME                                             DESIRED   CURRENT   READY   AGE
replicaset.apps/cilium-etcd-operator-9fbc5f54d   1         1         1       4m
replicaset.apps/cilium-operator-7d75f5fcc        1         1         1       4m
replicaset.apps/etcd-operator-58cf6d756d         1         1         1       4m
replicaset.apps/kube-dns-64b69465b4              1         1         1       18m
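Scanning that output by eye gets tedious on larger clusters. As a rough sketch, the check can be narrowed to "which pods are not ready yet" with a small filter. The function name `not_ready_pods` is my own invention, and it assumes the plain column layout of `kubectl get pods` (NAME, READY, STATUS first), not the multi-table output of `get all`. The sample data is made up for the demo.

```shell
#!/bin/sh
# List pods whose READY count is incomplete or whose STATUS is not Running.
# Reads `kubectl get pods` style output (header line first) on stdin.
# Hypothetical helper, not part of kubectl or Cilium.
not_ready_pods() {
  awk 'NR > 1 && NF >= 3 {
    split($2, ready, "/")
    if (ready[1] != ready[2] || $3 != "Running") print $1
  }'
}

# Demo with made-up sample data (not taken from the cluster above).
sample='NAME READY STATUS RESTARTS AGE
cilium-aaaaa 1/1 Running 0 4m
cilium-bbbbb 0/1 Init:0/1 0 10s
kube-dns-ccccc 2/3 Running 0 18m'

printf '%s\n' "$sample" | not_ready_pods
```

Against a real cluster this would be used as `kubectl -n kube-system get pods | not_ready_pods`; empty output means every pod is Running with all containers ready.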

Creating a NetworkPolicy

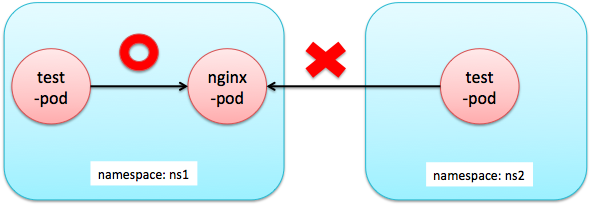

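- I wrote the policies with reference to the official documentation

- This time I create NetworkPolicies at namespace granularity

- Apparently this is planned to be merged into the Kubernetes NetworkPolicy resource in the future, but for now a Cilium custom resource is required

First, create the namespaces to use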

$ kubectl create ns ns1

namespace/ns1 created

$ kubectl create ns ns2

namespace/ns2 created

Next, apply the following network policy file

ciliumNetworkPolicy.yaml

apiVersion: "cilium.io/v2"
kind: CiliumNetworkPolicy
metadata:
  name: "isolate-ns1"
  namespace: ns1
spec:
  endpointSelector:
    matchLabels:
      {}
  ingress:
  - fromEndpoints:
    - matchLabels:
        {}
---
apiVersion: "cilium.io/v2"
kind: CiliumNetworkPolicy
metadata:
  name: "isolate-ns1"
  namespace: ns2
spec:
  endpointSelector:
    matchLabels:
      {}
  ingress:
  - fromEndpoints:
    - matchLabels:
        {}

$ kubectl apply -f ciliumNetworkPolicy.yaml

ciliumnetworkpolicy.cilium.io/isolate-ns1 created

ciliumnetworkpolicy.cilium.io/isolate-ns1 created
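The two policies differ only in their namespace, so the file could also be generated instead of written by hand. Below is a minimal sketch of that idea. The function name `render_isolation_policy` and the `isolate-<namespace>` naming scheme are assumptions of mine; the file above uses the name `isolate-ns1` in both namespaces.

```shell
#!/bin/sh
# Render a CiliumNetworkPolicy that only admits ingress from endpoints
# in the same namespace. The function name and the "isolate-<namespace>"
# naming scheme are assumptions made for this sketch.
render_isolation_policy() {
  ns="$1"
  cat <<EOF
apiVersion: "cilium.io/v2"
kind: CiliumNetworkPolicy
metadata:
  name: "isolate-${ns}"
  namespace: ${ns}
spec:
  endpointSelector:
    matchLabels: {}
  ingress:
  - fromEndpoints:
    - matchLabels: {}
EOF
}

# Print one YAML document per namespace, separated by "---".
for ns in ns1 ns2; do
  echo "---"
  render_isolation_policy "$ns"
done
```

Piping the loop's output into `kubectl apply -f -` should have the same effect as applying the hand-written file, apart from the policy names.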

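With these policies in place, pods in each namespace should accept traffic only from pods in the same namespace. If the checks below behave that way, it worked.

Trying it out

Create nginx in ns1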

$ kubectl run -n ns1 nginx --image=nginx

kubectl run --generator=deployment/apps.v1 is DEPRECATED and will be removed in a future version. Use kubectl run --generator=run-pod/v1 or kubectl create instead.

deployment.apps/nginx created

$ kubectl expose -n ns1 deployment nginx --port 80

service/nginx exposed

Start a testpod inside ns1 and confirm that it can reach nginx

$ kubectl run -n ns1 --image=centos:7 --restart=Never --rm -ti testpod

If you don't see a command prompt, try pressing enter.

[root@testpod /]# curl nginx.ns1.svc

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

body {

width: 35em;

margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif;

}

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

[root@testpod /]# exit

exit

pod "testpod" deleted

Confirm that nginx cannot be reached from inside ns2

$ kubectl run -n ns2 --image=centos:7 --restart=Never --rm -ti testpod

If you don't see a command prompt, try pressing enter.

[root@testpod /]# curl nginx.ns1.svc

This confirms that nginx cannot be reached from ns2.

Remove the network policies and access nginx from ns2

Let's try this as well, just to be sure.
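The transcript does not show how the blocked curl ended; presumably it just hung. To make the result explicit, curl's exit status can be inspected. The sketch below maps a few of curl's documented exit codes to a verdict. The function name `describe_curl_status` and the wording of the verdicts are my own, and the demo runs on fixed values instead of a real request.

```shell
#!/bin/sh
# Map a curl exit status to a short verdict.
# Exit codes follow curl's documented list: 0 success, 6 could not
# resolve host, 7 failed to connect, 28 operation timed out.
# Hypothetical helper for illustration only.
describe_curl_status() {
  case "$1" in
    0)  echo "reachable" ;;
    6)  echo "dns failure" ;;
    7)  echo "connection refused or unreachable" ;;
    28) echo "timed out" ;;
    *)  echo "other error ($1)" ;;
  esac
}

# Demo on fixed status values; no network access is needed here.
for status in 0 28; do
  echo "$status: $(describe_curl_status "$status")"
done
```

Inside the test pod this could be used as `curl -s -o /dev/null --max-time 5 nginx.ns1.svc; describe_curl_status $?`. I would expect "timed out" from ns2 while the policy is applied, assuming blocked packets are dropped silently, and "reachable" from ns1.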

$ kubectl delete -f ciliumNetworkPolicy.yaml

ciliumnetworkpolicy.cilium.io "isolate-ns1" deleted

ciliumnetworkpolicy.cilium.io "isolate-ns1" deleted

$ kubectl run -n ns2 --image=centos:7 --restart=Never --rm -ti testpod

If you don't see a command prompt, try pressing enter.

[root@testpod /]#

[root@testpod /]# curl nginx.ns1.svc

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

body {

width: 35em;

margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif;

}

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

Now nginx is reachable.

Summary

I used Cilium to try out network policies.