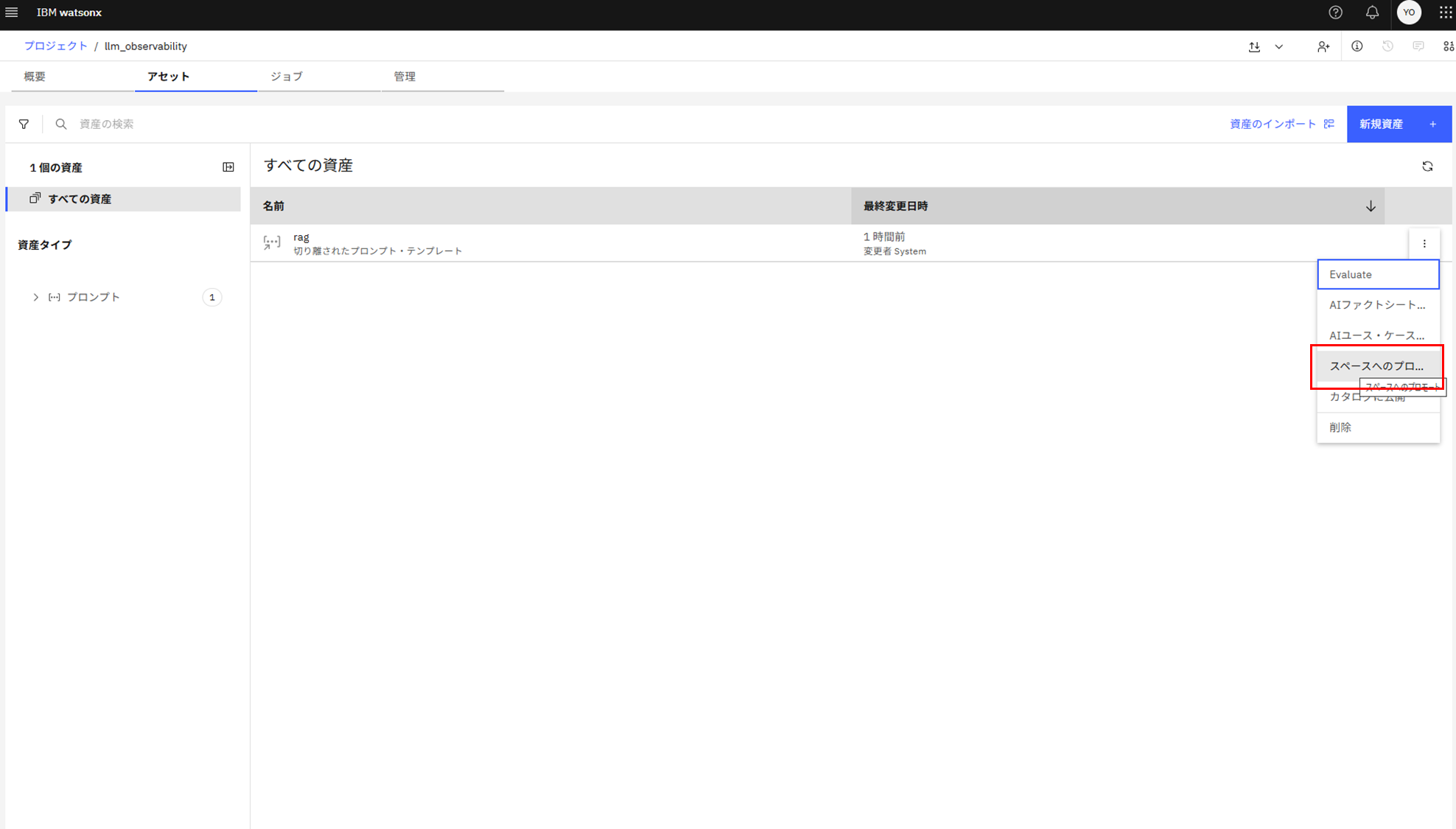

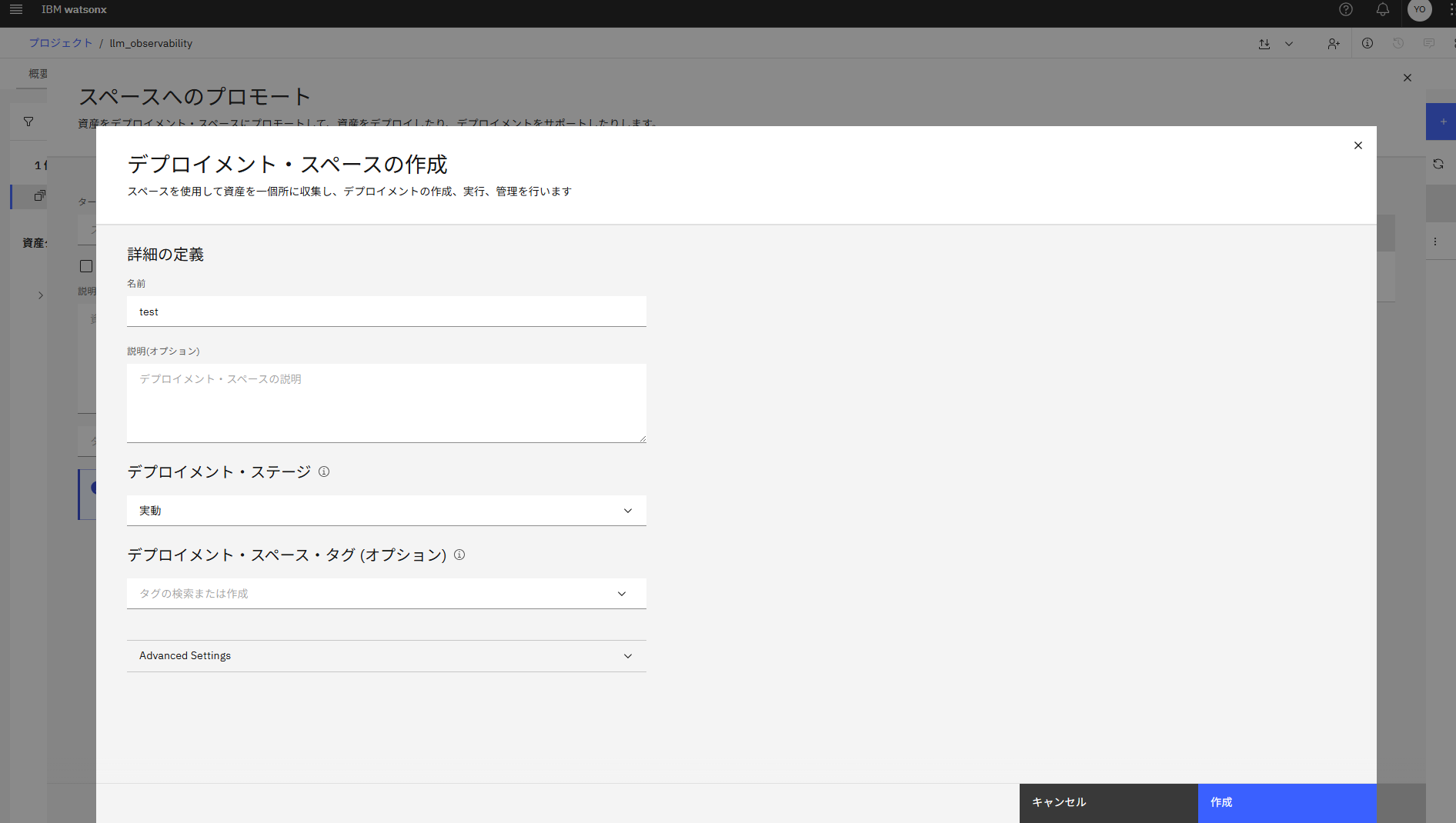

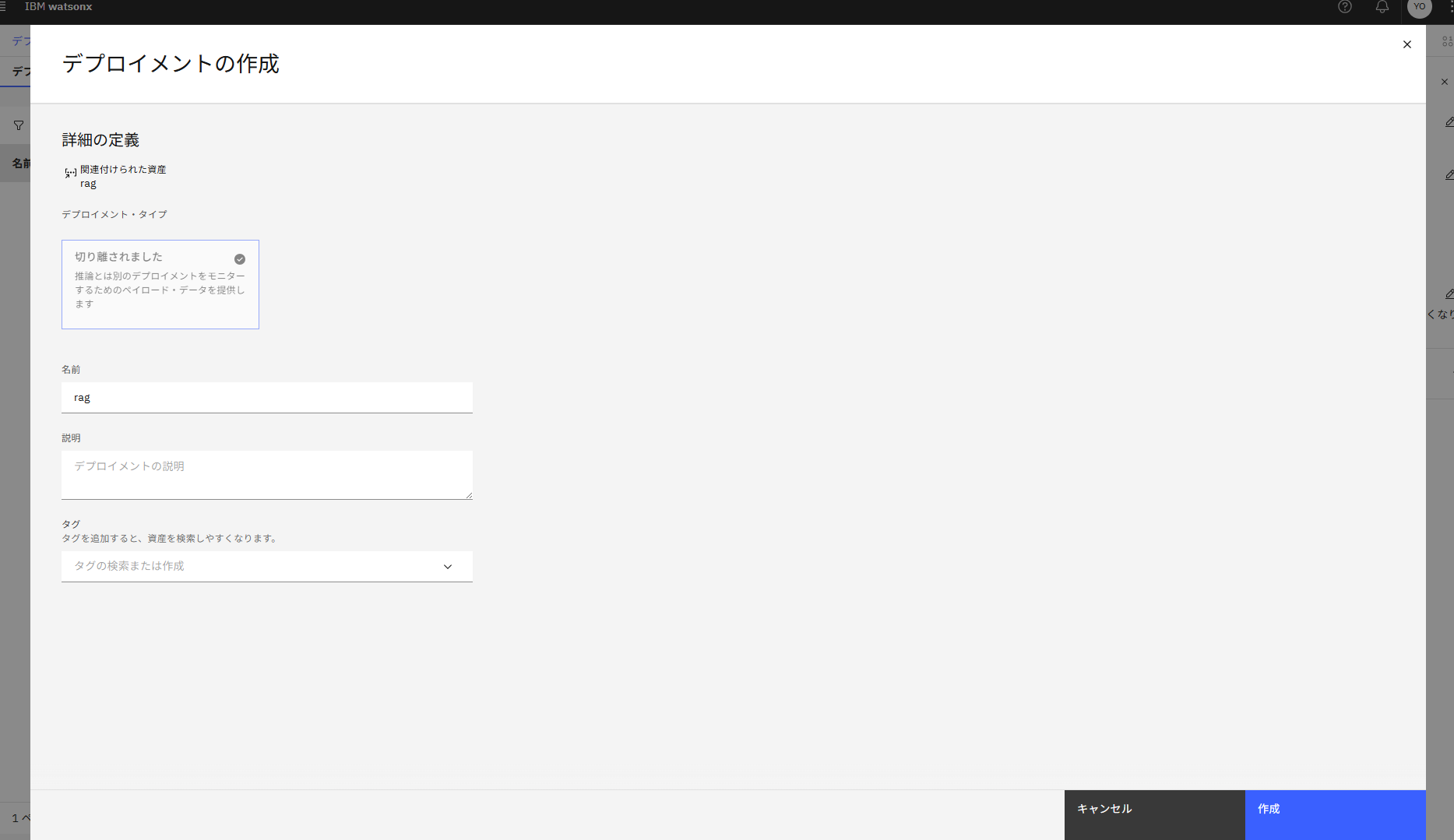

Promote and deploy the Detached Prompt Template to the production space

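Make a note of SPACE_ID, PROMPTE_TEMPLATE_ASSET_ID, and DEPLOYMENT_ID. The scripts below read them from environment variables loaded with python-dotenv.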
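Because every script below depends on these three IDs, it can help to check that they are actually set before calling any API. The following is a minimal sketch, not part of the original scripts: the helper name `missing_env_vars` is hypothetical, and it only assumes that the values are exposed as environment variables under the same names the scripts use.

```python
import os

# Names the scripts in this article read from the environment.
REQUIRED_VARS = ["SPACE_ID", "PROMPTE_TEMPLATE_ASSET_ID", "DEPLOYMENT_ID"]


def missing_env_vars(names, environ=None):
    """Return the names that are unset or empty in the given mapping.

    `environ` defaults to os.environ; passing a plain dict makes testing easy.
    """
    env = os.environ if environ is None else environ
    return [name for name in names if not env.get(name)]


if __name__ == "__main__":
    missing = missing_env_vars(REQUIRED_VARS)
    if missing:
        print("Missing values:", ", ".join(missing))
    else:
        print("All required IDs are set.")
```

Running this after `load_dotenv(override=True)` would report which of the noted IDs still need to be added to the `.env` file.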

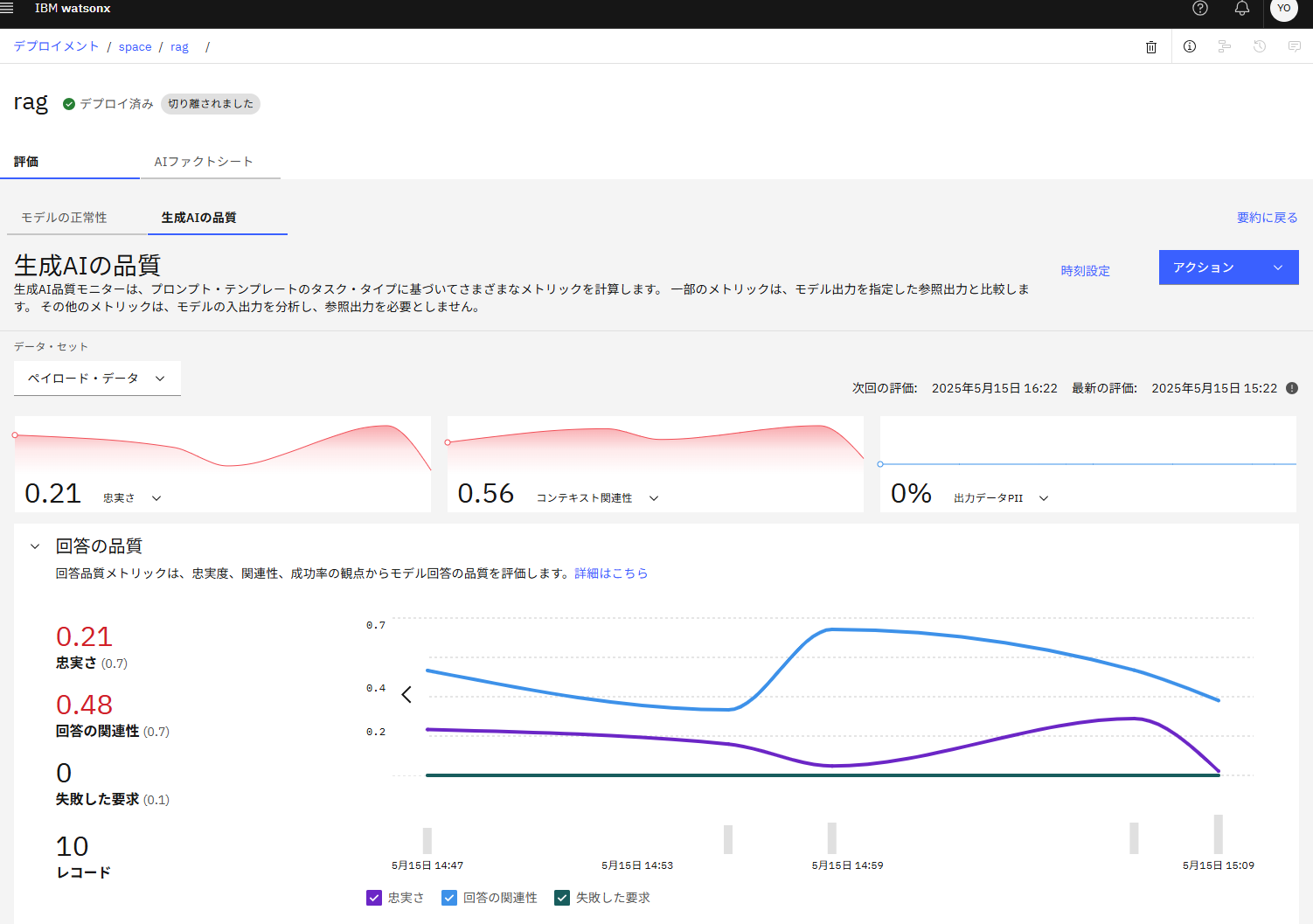

Configuring the evaluation metrics

get_prompt_setup.py

# %%

#!pip install spacy

#!pip install python-dotenv

#!pip install ibm_watson_openscale

#!pip install "pandas<=2.1.9"

# %%

#import spacy

#spacy.cli.download("en_core_web_sm")

#spacy.cli.download("ja_core_news_sm")

#!python -m nltk.downloader punkt

# %%

from dotenv import load_dotenv

load_dotenv(override=True)

# %%

import os

CPD_URL = os.environ.get("CPD_URL")

CPD_USERNAME = os.environ.get("CPD_USERNAME")

CPD_PASSWORD = os.environ.get("CPD_PASSWORD")

CPD_API_KEY = os.environ.get("CPD_API_KEY")

PROJECT_ID = os.environ.get("PROJECT_ID")

SPACE_ID = os.environ.get("SPACE_ID")

PROMPTE_TEMPLATE_ASSET_ID = os.environ.get("PROMPTE_TEMPLATE_ASSET_ID")

print(PROMPTE_TEMPLATE_ASSET_ID)

DEPLOYMENT_ID = os.environ.get("DEPLOYMENT_ID")

# %%

from ibm_cloud_sdk_core.authenticators import CloudPakForDataAuthenticator
from ibm_watson_openscale import *
from ibm_watson_openscale.supporting_classes.enums import *
from ibm_watson_openscale.supporting_classes import *

authenticator = CloudPakForDataAuthenticator(
    url=CPD_URL,
    username=CPD_USERNAME,
    password=CPD_PASSWORD,
    disable_ssl_verification=True
)

wos_client = APIClient(
    service_url=CPD_URL,
    authenticator=authenticator,
)

data_mart_id = wos_client.service_instance_id
print(data_mart_id)
print(wos_client.version)

# %%

from ibm_watson_openscale.base_classes import ApiRequestFailure

try:
    wos_client.wos.add_instance_mapping(
        service_instance_id=data_mart_id,
        space_id=SPACE_ID  # <- this is the important part!
    )
except ApiRequestFailure as arf:
    if arf.response.status_code == 409:
        # Instance mapping already exists
        pass
    else:
        raise

# %%

language_code = "ja"

supporting_monitors = {
    "generative_ai_quality": {
        "parameters": {
            "min_sample_size": 10,
            "metrics_configuration": {
                "faithfulness": {},
                "answer_relevance": {},
                "rouge_score": {},
                "exact_match": {},
                "bleu": {},
                "unsuccessful_requests": {},
                "hap_input_score": {},
                "hap_score": {},
                "pii": {
                    "language_code": language_code
                },
                "pii_input": {
                    "language_code": language_code
                },
                "retrieval_quality": {},
            }
        }
    }
}

# %%

language_code = "ja"

response = wos_client.wos.execute_prompt_setup(
    prompt_template_asset_id=PROMPTE_TEMPLATE_ASSET_ID,
    space_id=SPACE_ID,
    deployment_id=DEPLOYMENT_ID,
    label_column="reference_text",
    operational_space_id="production",
    problem_type="retrieval_augmented_generation",
    input_data_type="unstructured_text",
    data_input_locale=[language_code],
    generated_output_locale=[language_code],
    context_fields=["context"],
    question_field="question",
    supporting_monitors=supporting_monitors,
    background_mode=True)

response.result.to_dict()

# %%

response = wos_client.monitor_instances.mrm.get_prompt_setup(
    prompt_template_asset_id=PROMPTE_TEMPLATE_ASSET_ID,
    deployment_id=DEPLOYMENT_ID,
    space_id=SPACE_ID)

response.result.to_dict()

# %%
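store_records.py below reads a SUBSCRIPTION_ID from the environment, so the prompt setup result is the natural place to look for it. The sketch below shows one way to pull the setup state and the subscription ID out of the dictionary returned by `response.result.to_dict()`. The layout it expects (`status.state` and `subscription_id` keys) is an assumption that should be checked against the actual output, the helper name `summarize_prompt_setup` is hypothetical, and the example data is made up.

```python
def summarize_prompt_setup(result):
    """Pull the state and subscription ID out of a prompt-setup result dict.

    Assumed layout (not confirmed by the article):
    {"status": {"state": "..."}, "subscription_id": "..."}
    Missing keys yield None instead of raising.
    """
    state = (result.get("status") or {}).get("state")
    subscription_id = result.get("subscription_id")
    return state, subscription_id


# Hypothetical example data, not real output from the service.
example = {"status": {"state": "FINISHED"}, "subscription_id": "hypothetical-id"}
state, subscription_id = summarize_prompt_setup(example)
print(state, subscription_id)
```

Since the setup runs with `background_mode=True`, the state may not be final on the first call; repeating `get_prompt_setup` until the state stops changing is one option.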

Implementing the RAG application

rag.py

# %%

#!pip install python-dotenv

#!pip install azure.identity

#!pip install langchain_openai

# %%

from dotenv import load_dotenv

load_dotenv(override=True)

# %%

import os

AZURE_OPENAI_ENDPOINT = os.environ.get('AZURE_OPENAI_ENDPOINT')

API_VERSION = os.environ.get("API_VERSION")

AZURE_OPENAI_DEPLOYMENT_NAME = os.environ.get("AZURE_OPENAI_DEPLOYMENT_NAME")

AZURE_CLIENT_ID = os.environ.get("AZURE_CLIENT_ID")

AZURE_CLIENT_SECRET = os.environ.get("AZURE_CLIENT_SECRET")

AZURE_TENANT_ID = os.environ.get("AZURE_TENANT_ID")

# %%

from azure.identity import ClientSecretCredential, get_bearer_token_provider

credential = ClientSecretCredential(
    tenant_id=AZURE_TENANT_ID,
    client_id=AZURE_CLIENT_ID,
    client_secret=AZURE_CLIENT_SECRET
)

# %%

scopes = "https://cognitiveservices.azure.com/.default"

azure_ad_token_provider = get_bearer_token_provider(credential, scopes)

# %%

from langchain_openai import AzureChatOpenAI

temperature = 0
max_tokens = 4096

llm = AzureChatOpenAI(
    azure_endpoint=AZURE_OPENAI_ENDPOINT,
    api_version=API_VERSION,
    azure_deployment=AZURE_OPENAI_DEPLOYMENT_NAME,
    azure_ad_token_provider=azure_ad_token_provider,
    temperature=temperature,
    max_tokens=max_tokens,
)

# %%

from langchain_core.prompts import PromptTemplate

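# The prompt stays in Japanese because the evaluation locale is "ja".
# English gloss: "Instruction: Answer the question based on the given context. / Context: / Question: / Answer:"
template = """# 指示: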

与えられた文脈にもとづいて質問に回答してください。

# 文脈:

{context}

# 質問:

{question}

# 回答:

"""

prompt_template = PromptTemplate.from_template(template=template)

# %%

#question = "私の名前は?"

#context = "はじめまして。私の名前はonoyu1012です。"

#response = llm.invoke(input=prompt_template.format(context=context, question=question))

#print(response)

# %%
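To see exactly what text is sent to the model, the template can be filled without any LLM call. The sketch below uses plain `str.format` instead of LangChain; for a template like this one, with only simple `{context}` and `{question}` placeholders, it should produce the same text as `prompt_template.format(...)`. The helper name `render_prompt` is hypothetical.

```python
# Same template text as in rag.py.
template = """# 指示:
与えられた文脈にもとづいて質問に回答してください。
# 文脈:
{context}
# 質問:
{question}
# 回答:
"""


def render_prompt(context, question):
    """Fill the two placeholders with plain str.format."""
    return template.format(context=context, question=question)


print(render_prompt(
    context="はじめまして。私の名前はonoyu1012です。",
    question="私の名前は?",
))
```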

store_records.py

# %%

from dotenv import load_dotenv

load_dotenv(override=True)

# %%

import os

CPD_URL = os.environ.get("CPD_URL")

CPD_USERNAME = os.environ.get("CPD_USERNAME")

CPD_API_KEY = os.environ.get("CPD_API_KEY")

PROJECT_ID = os.environ.get("PROJECT_ID")

SPACE_ID = os.environ.get("SPACE_ID")

SUBSCRIPTION_ID = os.environ.get("SUBSCRIPTION_ID")

# %%

from ibm_cloud_sdk_core.authenticators import CloudPakForDataAuthenticator
from ibm_watson_openscale import *
from ibm_watson_openscale.supporting_classes.enums import *
from ibm_watson_openscale.supporting_classes import *

authenticator = CloudPakForDataAuthenticator(
    url=CPD_URL,
    username=CPD_USERNAME,
    apikey=CPD_API_KEY,
    disable_ssl_verification=True
)

wos_client = APIClient(
    service_url=CPD_URL,
    authenticator=authenticator,
)

data_mart_id = wos_client.service_instance_id
data_mart_id

# %%

wos_client.version

# %%

from ibm_watson_openscale.supporting_classes.enums import *

data_set_id = None

response = wos_client.data_sets.list(
    type=DataSetTypes.PAYLOAD_LOGGING,
    target_target_id=SUBSCRIPTION_ID,
    target_target_type=TargetTypes.SUBSCRIPTION)

response.result.to_dict()

# %%

data_set_id = response.result.to_dict()['data_sets'][0]['metadata']['id']

data_set_id

# %%

#import pandas as pd

#df = pd.read_csv("../evaluate.csv")

#df

# %%

#i = 1

# %%

#request_body = []
#question = df.iloc[i]["question"]
#context = df.iloc[i]["context"]
#generated_text = df.iloc[i]["generated_text"]
#tmp = {
#    "request": {
#        "parameters": {
#            "template_variables": {
#                "context": context,
#                "question": question
#            }
#        }
#    },
#    "response": {
#        "results": [
#            {
#                "generated_text": generated_text
#            }
#        ]
#    }
#}
#request_body.append(tmp)

# %%

#import json
#print(json.dumps(request_body, indent=2, ensure_ascii=False))

# %%

#response = wos_client.data_sets.store_records(
#    data_set_id=data_set_id,
#    request_body=request_body,
#    background_mode=True)
#response.result.to_dict()

# %%

#wos_client.data_sets.get_records_count(data_set_id=data_set_id)

# %%
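The record shape used above (template variables under `request`, generated text under `response`) appears both in the commented-out test code and in app.py, so it can be factored into a small helper. This is a sketch only: `build_payload_record` is a hypothetical name, and the field layout is copied from the listings in this article rather than from any API reference.

```python
import json


def build_payload_record(question, context, generated_text):
    """Build one payload-logging record in the shape used in this article."""
    return {
        "request": {
            "parameters": {
                "template_variables": {
                    "context": context,
                    "question": question,
                }
            }
        },
        "response": {
            "results": [
                {"generated_text": generated_text}
            ]
        },
    }


# store_records expects a list of records, so wrap the single record.
request_body = [
    build_payload_record(
        question="私の名前は?",
        context="はじめまして。私の名前はonoyu1012です。",
        generated_text="onoyu1012です。",
    )
]
print(json.dumps(request_body, indent=2, ensure_ascii=False))
```

Printing the JSON before sending makes it easy to compare the record against the `context_fields` and `question_field` names passed to `execute_prompt_setup`.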

app.py

# %%

#!pip install gradio

# %%

import gradio as gr

from rag import prompt_template, llm

from store_records import wos_client, data_set_id

# %%

def rag(question, context):
    try:
        # Generate the answer from the filled-in prompt.
        response = llm.invoke(input=prompt_template.format(context=context, question=question))
        generated_text = response.content

        # Build one payload-logging record in the shape used in store_records.py.
        request_body = [
            {
                "request": {
                    "parameters": {
                        "template_variables": {
                            "context": context,
                            "question": question
                        }
                    }
                },
                "response": {
                    "results": [
                        {
                            "generated_text": generated_text
                        }
                    ]
                }
            }
        ]

        # Send the record to the payload logging data set.
        wos_client.data_sets.store_records(
            data_set_id=data_set_id,
            request_body=request_body,
            background_mode=True)
    except Exception as e:
        # Any failure (generation or logging) results in an empty answer.
        print(e)
        generated_text = ""
    return generated_text

# %%

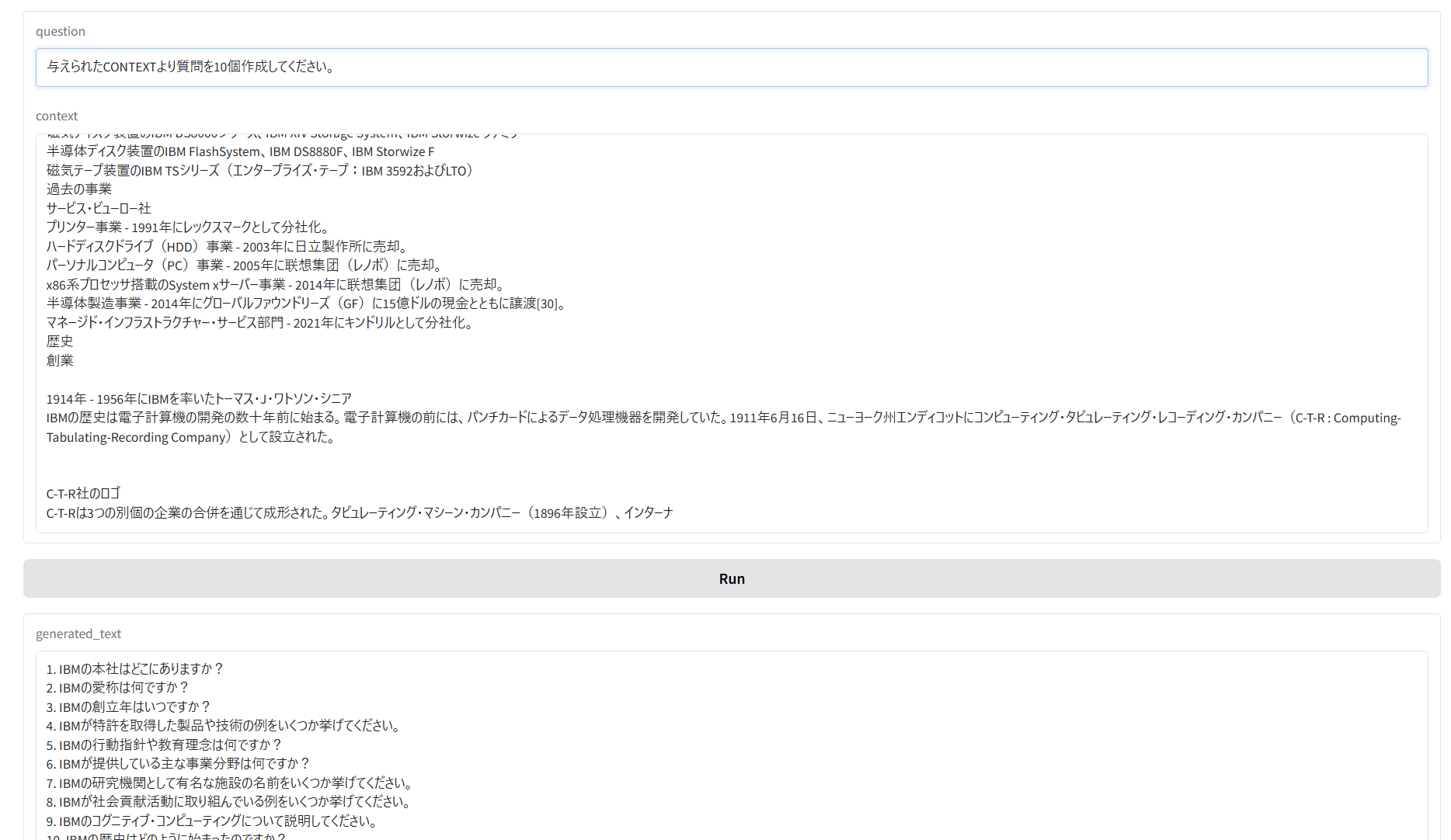

with gr.Blocks() as app:

question = gr.Textbox(label="question", value="")

context = gr.Textbox(label="context", value="")

button = gr.Button()

generated_text = gr.Textbox(label="generated_text")

button.click(fn=rag, inputs=[question, context], outputs=[generated_text])

question.submit(fn=rag, inputs=[question, context], outputs=[generated_text])

# %%

app.launch(server_name="0.0.0.0")

Running the RAG application

python app.py

Given a question and a context (external information), the app displays the answer and, behind the scenes, sends the log to watsonx.governance.

The evaluation metrics are computed at the regular scheduled interval, or right away if you click Evaluate now.
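In app.py, generation and logging share one `try` block, so a failure while sending the log also blanks the answer shown to the user. If that is not desired, the two steps can be separated. The sketch below illustrates the idea with stand-in callables; `answer_and_log`, `generate`, and `log` are hypothetical names, and in the real app they would wrap `llm.invoke(...)` and `wos_client.data_sets.store_records(...)`.

```python
def answer_and_log(question, context, generate, log):
    """Generate an answer, then try to log it.

    A logging failure is reported but does not discard the answer.
    Errors raised by `generate` itself still propagate to the caller.
    """
    generated_text = generate(question, context)
    try:
        log(question, context, generated_text)
    except Exception as exc:
        print(f"payload logging failed: {exc}")
    return generated_text


# Stand-ins for the LLM call and the payload logging call.
def fake_generate(question, context):
    return f"answer to: {question}"


def failing_log(question, context, generated_text):
    raise RuntimeError("logging backend unavailable")


print(answer_and_log("q1", "some context", fake_generate, failing_log))
```

Whether to hide or surface logging errors is a design choice: silently dropping records keeps the UI responsive but means some interactions never reach the evaluation.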