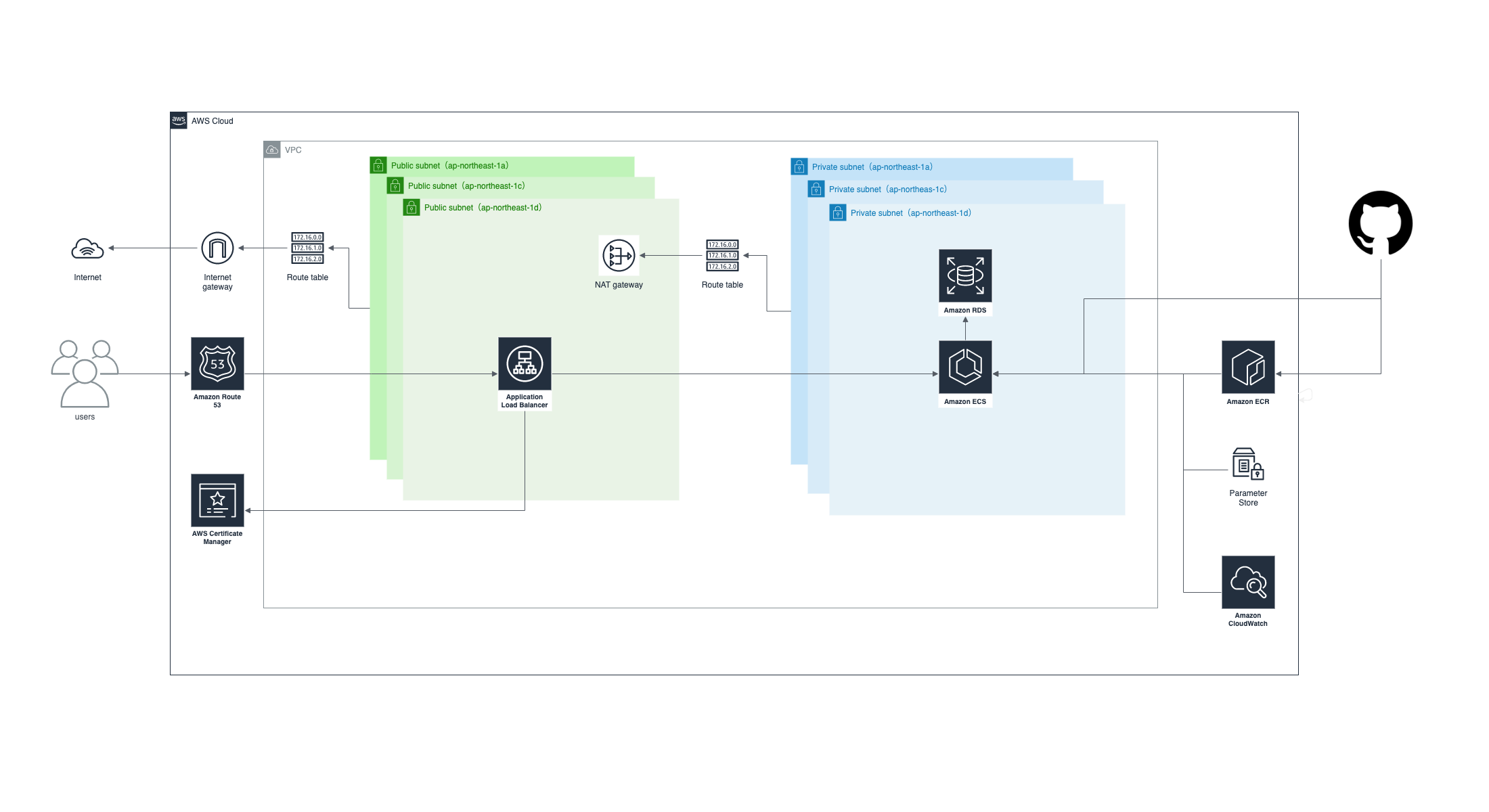

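The basic setup is as follows:

- Infrastructure that rarely changes is updated with Terraform
- Source code and task definitions that change frequently are updated from GitHub Actions

Directories are also split per environment, with real-world operation in mind. I hope it serves as a useful reference.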

Sample code

https://github.com/okdyy75/dev-laravel-ecs-terraform-sample

Directory structure

.
├── docker                  ... Docker files for local development
├── docker-compose.yml      ... Docker Compose for local development
├── docker-compose.yml.dev  ... reference Docker Compose for building the ECR Dockerfiles
├── ecs                     ... ECS-related files (ECR, task definition)
│   └── dev
│       ├── container       ... Dockerfiles for ECR
│       └── task_definition ... task definition files
├── system.drawio           ... system architecture diagram
├── terraform               ... Terraform files (infrastructure)
│   ├── environments
│   │   └── dev             ... Terraform for the dev environment
│   ├── example.tfvars      ... variables file
│   └── modules
│       ├── ecs             ... ECS-related configuration
│       └── rds             ... RDS-related configuration
└── web
    └── laravel             ... the Laravel application

Environment and required tools

- terraform

- aws cli

- session-manager-plugin

$ terraform -v

Terraform v1.4.6

on darwin_amd64

$ aws --version

aws-cli/2.11.16 Python/3.11.3 Darwin/22.4.0 exe/x86_64 prompt/off

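Initial setup

# Create an S3 bucket to store the tfstate
aws s3 mb s3://y-oka-ecs-dev

# Create the ECR repositories
aws ecr create-repository --repository-name y-oka-ecs/dev/nginx
aws ecr create-repository --repository-name y-oka-ecs/dev/php-fpm

# Copy the tfvars file (run from the terraform/ directory)
cp example.tfvars ./environments/dev/dev.tfvars

# Initialize Terraform (run in terraform/environments/dev, where main.tf lives)
terraform init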

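Creating the ECS task definition

The ECS resources themselves are managed by Terraform, while the ECR images and the task definition are managed from GitHub Actions. Role names and log group paths therefore have to match between Terraform and the task definition JSON in advance. Make sure the following values agree:

- family (e.g. y-oka-ecs-dev)
- taskRoleArn (e.g. y-oka-ecs-dev-task-execution)
- awslogs-group in logConfiguration (e.g. /y-oka-ecs/ecs)
- valueFrom in secrets (e.g. /y-oka-ecs/dev/APP_KEY)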
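As a rough aid for checking those values, the following sketch rebuilds the names from app_name and env in the same way the Terraform code later in this article concatenates them. The two input values are assumptions taken from this article's examples; replace them with your own.

```shell
#!/bin/bash
# Sketch: derive the names that Terraform builds from app_name and env,
# so they can be compared with the task definition JSON.
# Both values below are assumptions matching this article's examples.
app_name="y-oka-ecs"
env="dev"

family="${app_name}-${env}"                    # "family" in the task definition
role_name="${app_name}-${env}-task-execution"  # last segment of taskRoleArn / executionRoleArn
log_group="/${app_name}/ecs"                   # "awslogs-group"
app_key_param="/${app_name}/${env}/APP_KEY"    # "valueFrom" of the APP_KEY secret

printf 'family:        %s\n' "$family"
printf 'role name:     %s\n' "$role_name"
printf 'awslogs-group: %s\n' "$log_group"
printf 'valueFrom:     %s\n' "$app_key_param"
```

If any printed value differs from what is written in ecs/dev/task_definition/y-oka-ecs.json, the task will most likely fail to start or fail to write logs.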

ecs/dev/task_definition/y-oka-ecs.json

"family": "y-oka-ecs-dev",

"taskRoleArn": "arn:aws:iam::<awsのアカウントID>:role/y-oka-ecs-dev-task-execution",

"executionRoleArn": "arn:aws:iam::<awsのアカウントID>:role/y-oka-ecs-dev-task-execution",

...

"secrets": [

{

"name": "APP_KEY",

"valueFrom": "/y-oka-ecs/dev/APP_KEY"

},

...

"logConfiguration": {

"logDriver": "awslogs",

"options": {

"awslogs-region": "ap-northeast-1",

"awslogs-group": "/y-oka-ecs/ecs",

"awslogs-stream-prefix": "dev"

}

}

The cluster and service have not been created by Terraform yet, so comment them out for the first run.

- name: Deploy to ECS TaskDefinition
  uses: aws-actions/amazon-ecs-deploy-task-definition@v1
  with:
    task-definition: ${{ steps.render-nginx-container.outputs.task-definition }}
    # cluster: ${{ env.ECS_CLUSTER }}
    # service: ${{ env.ECS_SERVICE }}

Running the task definition CI from GitHub Actions

Set the GitHub Actions Secrets before running the CI. Once the Secrets are in place, push to run the ECS deploy CI.

git push origin develop

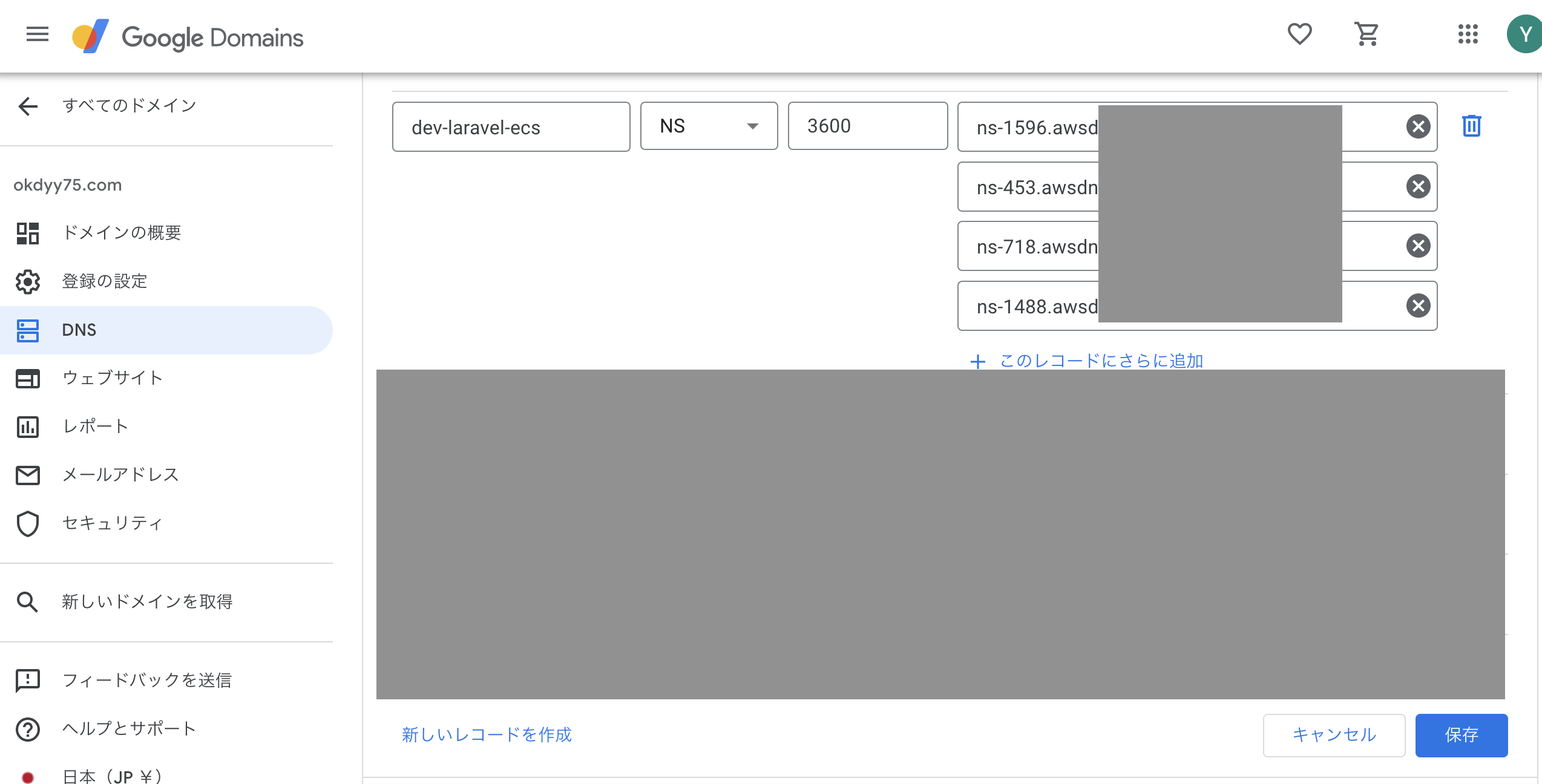

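Domain setup

This time a domain is also configured so that the site can be reached over HTTPS.

I own the domain "okdyy75.com" at Google Domains, so I create records for a new domain, "dev-laravel-ecs.okdyy75.com", in Route53 and set up a certificate for it.

Creating the domain in Route53

Create a hosted zone in Route53 with the domain name "dev-laravel-ecs.okdyy75.com".

Creating the hosted zone generates an NS record and an SOA record. Register the name servers from that NS record at Google Domains.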

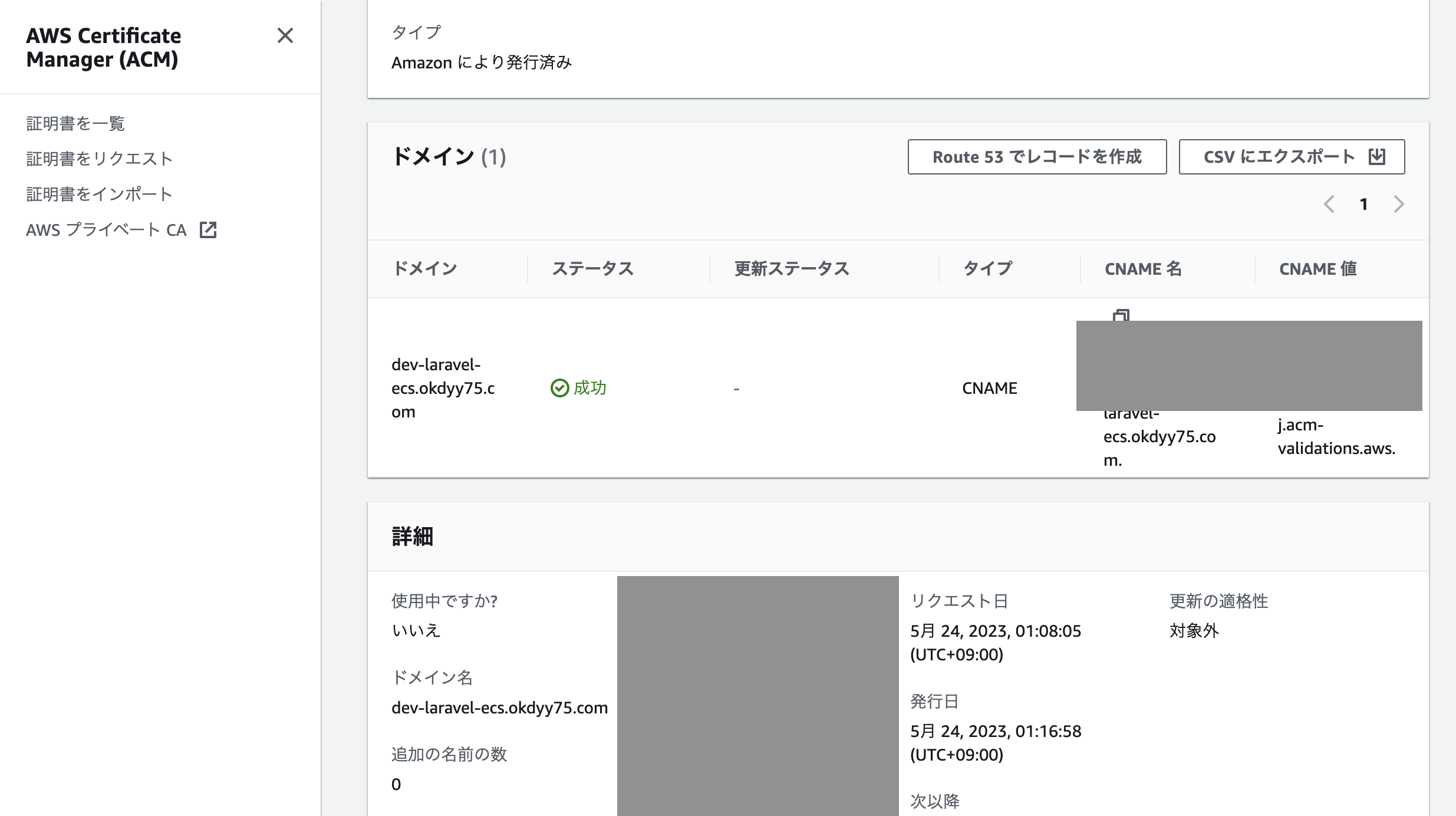

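Creating a certificate for the domain

Request a certificate for the "dev-laravel-ecs.okdyy75.com" domain from AWS Certificate Manager (ACM).

After it is created, run "Create records in Route53" from the certificate detail page.

That completes the preparation!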

Development workflow

- Create a topic branch from the develop branch
  - To update infrastructure, create a branch that modifies files under terraform/
  - To update the task definition or the source, create a branch that modifies files under ecs/ or web/
- For a branch with Terraform changes, manually run the Terraform plan CI (terraform_plan_dev.yml) from GitHub Actions and check the result before merging
- Merge the topic branch into the develop branch. The merge triggers the corresponding GitHub Actions workflows
  - If files under terraform/ were modified, terraform apply runs
  - If files under ecs/ or web/ were modified, the task definition is updated and a new task is deployed
- To run an artisan command after a release, run the ECS Exec command CI (ecs_exec_cmd_dev.yml) from GitHub Actions
  - For example, to run a Seeder, pass "php","/var/www/web/laravel/artisan","db:seed","--class=UserSeeder","--force"
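The mapping from changed directories to workflows can be pictured with a small sketch. The function name workflow_for_path is hypothetical, and the shell patterns only approximate GitHub's paths filter ('terraform/**', 'ecs/**', 'web/**'); the workflow file names are the ones used in this article.

```shell
#!/bin/bash
# Sketch: which workflow a changed file would trigger after a merge into develop.
# workflow_for_path is a hypothetical helper, not part of the sample repository.
workflow_for_path() {
  case "$1" in
    terraform/*) echo "deploy_terraform_dev.yml" ;;  # infrastructure change -> terraform apply
    ecs/*|web/*) echo "deploy_ecs_dev.yml" ;;        # task definition or source change -> ECS deploy
    *)           echo "none" ;;                      # anything else triggers neither workflow
  esac
}

workflow_for_path "terraform/modules/ecs/main.tf"
workflow_for_path "web/laravel/routes/api.php"
workflow_for_path "README.md"
```

A branch that touches both terraform/ and web/ triggers both workflows, which is one reason to keep infrastructure changes and application changes in separate branches.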

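Terraform walkthrough

Basically, each environment gets its own directory under terraform/environments/, and terraform apply is run there.

Main tf file

The network resources are deliberately not turned into a module and are defined per environment.

Note that the tfstate file name is hard-coded.

Register the domain in Route53 and create the certificate beforehand.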

terraform/environments/dev/main.tf

terraform {

required_version = "~> 1.4.6"

required_providers {

aws = {

source = "hashicorp/aws"

version = "~> 4.65.0"

}

}

backend "s3" {

bucket = "y-oka-ecs-dev"

region = "ap-northeast-1"

key = "y-oka-ecs-dev.tfstate"

encrypt = true

}

}

provider "aws" {

region = "ap-northeast-1"

default_tags {

tags = {

env = var.env

service = var.app_name

Name = var.app_name

}

}

}

variable "env" {

type = string

}

variable "app_domain" {

type = string

}

variable "app_name" {

type = string

}

variable "app_key" {

type = string

}

variable "db_name" {

type = string

}

variable "db_username" {

type = string

}

variable "db_password" {

type = string

}

output "variable_env" {

value = var.env

}

output "variable_app_name" {

value = var.app_name

}

###########################################################

### Network

############################################################

### VPC ####################

resource "aws_vpc" "main" {

cidr_block = "10.0.0.0/16"

tags = {

Name = "${var.app_name}-${var.env}-vpc"

}

}

### Public ####################

## Subnet

resource "aws_subnet" "public_1a" {

vpc_id = aws_vpc.main.id

availability_zone = "ap-northeast-1a"

cidr_block = "10.0.1.0/24"

tags = {

Name = "${var.app_name}-${var.env}-subnet-public-1a"

}

}

resource "aws_subnet" "public_1c" {

vpc_id = aws_vpc.main.id

availability_zone = "ap-northeast-1c"

cidr_block = "10.0.2.0/24"

tags = {

Name = "${var.app_name}-${var.env}-subnet-public-1c"

}

}

resource "aws_subnet" "public_1d" {

vpc_id = aws_vpc.main.id

availability_zone = "ap-northeast-1d"

cidr_block = "10.0.3.0/24"

tags = {

Name = "${var.app_name}-${var.env}-subnet-public-1d"

}

}

## IGW

resource "aws_internet_gateway" "main" {

tags = {

Name = "${var.app_name}-${var.env}-igw"

}

}

resource "aws_internet_gateway_attachment" "igw_main_attach" {

vpc_id = aws_vpc.main.id

internet_gateway_id = aws_internet_gateway.main.id

}

## RTB

resource "aws_route_table" "public" {

vpc_id = aws_vpc.main.id

tags = {

Name = "${var.app_name}-${var.env}-rtb-public"

}

}

resource "aws_route" "public" {

destination_cidr_block = "0.0.0.0/0"

route_table_id = aws_route_table.public.id

gateway_id = aws_internet_gateway.main.id

}

resource "aws_route_table_association" "public_1a" {

subnet_id = aws_subnet.public_1a.id

route_table_id = aws_route_table.public.id

}

resource "aws_route_table_association" "public_1c" {

subnet_id = aws_subnet.public_1c.id

route_table_id = aws_route_table.public.id

}

resource "aws_route_table_association" "public_1d" {

subnet_id = aws_subnet.public_1d.id

route_table_id = aws_route_table.public.id

}

### Private ####################

## Subnet

resource "aws_subnet" "private_1a" {

vpc_id = aws_vpc.main.id

availability_zone = "ap-northeast-1a"

cidr_block = "10.0.10.0/24"

tags = {

Name = "${var.app_name}-${var.env}-subnet-private-1a"

}

}

resource "aws_subnet" "private_1c" {

vpc_id = aws_vpc.main.id

availability_zone = "ap-northeast-1c"

cidr_block = "10.0.20.0/24"

tags = {

Name = "${var.app_name}-${var.env}-subnet-private-1c"

}

}

resource "aws_subnet" "private_1d" {

vpc_id = aws_vpc.main.id

availability_zone = "ap-northeast-1d"

cidr_block = "10.0.30.0/24"

tags = {

Name = "${var.app_name}-${var.env}-subnet-private-1d"

}

}

## NGW

resource "aws_eip" "ngw_1a" {

vpc = true

tags = {

Name = "${var.app_name}-${var.env}-eip-ngw-1a"

}

}

resource "aws_eip" "ngw_1c" {

vpc = true

tags = {

Name = "${var.app_name}-${var.env}-eip-ngw-1c"

}

}

resource "aws_eip" "ngw_1d" {

vpc = true

tags = {

Name = "${var.app_name}-${var.env}-eip-ngw-1d"

}

}

resource "aws_nat_gateway" "ngw_1a" {

subnet_id = aws_subnet.public_1a.id

allocation_id = aws_eip.ngw_1a.id

tags = {

Name = "${var.app_name}-${var.env}-ngw-1a"

}

}

resource "aws_nat_gateway" "ngw_1c" {

subnet_id = aws_subnet.public_1c.id

allocation_id = aws_eip.ngw_1c.id

tags = {

Name = "${var.app_name}-${var.env}-ngw-1c"

}

}

resource "aws_nat_gateway" "ngw_1d" {

subnet_id = aws_subnet.public_1d.id

allocation_id = aws_eip.ngw_1d.id

tags = {

Name = "${var.app_name}-${var.env}-ngw-1d"

}

}

## RTB

resource "aws_route_table" "private_1a" {

vpc_id = aws_vpc.main.id

tags = {

Name = "${var.app_name}-${var.env}-rtb-private-1a"

}

}

resource "aws_route_table" "private_1c" {

vpc_id = aws_vpc.main.id

tags = {

Name = "${var.app_name}-${var.env}-rtb-private-1c"

}

}

resource "aws_route_table" "private_1d" {

vpc_id = aws_vpc.main.id

tags = {

Name = "${var.app_name}-${var.env}-rtb-private-1d"

}

}

resource "aws_route" "private_1a" {

destination_cidr_block = "0.0.0.0/0"

route_table_id = aws_route_table.private_1a.id

nat_gateway_id = aws_nat_gateway.ngw_1a.id

}

resource "aws_route" "private_1c" {

destination_cidr_block = "0.0.0.0/0"

route_table_id = aws_route_table.private_1c.id

nat_gateway_id = aws_nat_gateway.ngw_1c.id

}

resource "aws_route" "private_1d" {

destination_cidr_block = "0.0.0.0/0"

route_table_id = aws_route_table.private_1d.id

nat_gateway_id = aws_nat_gateway.ngw_1d.id

}

resource "aws_route_table_association" "private_1a" {

subnet_id = aws_subnet.private_1a.id

route_table_id = aws_route_table.private_1a.id

}

resource "aws_route_table_association" "private_1c" {

subnet_id = aws_subnet.private_1c.id

route_table_id = aws_route_table.private_1c.id

}

resource "aws_route_table_association" "private_1d" {

subnet_id = aws_subnet.private_1d.id

route_table_id = aws_route_table.private_1d.id

}

############################################################

### RDS

############################################################

module "rds" {

source = "../../modules/rds"

env = var.env

app_name = var.app_name

db_name = var.db_name

db_username = var.db_username

db_password = var.db_password

vpc_id = aws_vpc.main.id

vpc_cidr_block = aws_vpc.main.cidr_block

private_subnet_ids = [

aws_subnet.private_1a.id,

aws_subnet.private_1c.id,

aws_subnet.private_1d.id

]

}

############################################################

### ECS

############################################################

module "ecs" {

source = "../../modules/ecs"

env = var.env

app_name = var.app_name

app_key = var.app_key

db_host = module.rds.endpoint

db_name = var.db_name

db_username = var.db_username

db_password = var.db_password

vpc_id = aws_vpc.main.id

vpc_cidr_block = aws_vpc.main.cidr_block

acm_cert_app_domain_arn = data.aws_acm_certificate.app_domain.arn

public_subnet_ids = [

aws_subnet.public_1a.id,

aws_subnet.public_1c.id,

aws_subnet.public_1d.id

]

private_subnet_ids = [

aws_subnet.private_1a.id,

aws_subnet.private_1c.id,

aws_subnet.private_1d.id

]

}

############################################################

### Route 53

############################################################

data "aws_route53_zone" "app_domain" {

name = var.app_domain

}

resource "aws_route53_record" "app_domain_a" {

zone_id = data.aws_route53_zone.app_domain.zone_id

name = var.app_domain

type = "A"

alias {

name = module.ecs.lb_dns_name

zone_id = module.ecs.lb_zone_id

evaluate_target_health = true

}

}

data "aws_acm_certificate" "app_domain" {

domain = var.app_domain

}

output "app_domain_nameserver" {

value = join(", ", data.aws_route53_zone.app_domain.name_servers)

}

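tf file for RDS

Set engine_version of aws_rds_cluster by referring to the AWS console and the documentation:

Aurora MySQL version numbers and special versions

Note that for instance_class of aws_rds_cluster_instance, the smallest instance type, db.t3.small, can no longer be used from MySQL 8 onward; the smallest available one is db.t3.medium.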

terraform/modules/rds/main.tf

variable "env" {

type = string

}

variable "app_name" {

type = string

}

variable "db_name" {

type = string

}

variable "db_username" {

type = string

}

variable "db_password" {

type = string

}

variable "vpc_id" {

type = string

}

variable "vpc_cidr_block" {

type = string

}

variable "private_subnet_ids" {

type = list(string)

}

### DB subnet group ####################

resource "aws_db_subnet_group" "this" {

name = "${var.app_name}-${var.env}-db-subnet-group"

subnet_ids = var.private_subnet_ids

}

# SG

resource "aws_security_group" "rds" {

name = "${var.app_name}-${var.env}-rds-sg"

vpc_id = var.vpc_id

}

# Outbound (egress) rule

resource "aws_security_group_rule" "rds_out_all" {

type = "egress"

from_port = 0

to_port = 0

protocol = "-1"

cidr_blocks = ["0.0.0.0/0"]

security_group_id = aws_security_group.rds.id

}

# Inbound (ingress) rule

resource "aws_security_group_rule" "rds_in_mysql" {

type = "ingress"

from_port = 3306

to_port = 3306

protocol = "tcp"

cidr_blocks = [

var.vpc_cidr_block

]

security_group_id = aws_security_group.rds.id

}

resource "aws_db_parameter_group" "this" {

name = "${var.app_name}-${var.env}-db-parameter-group"

family = "aurora-mysql8.0"

}

resource "aws_rds_cluster_parameter_group" "this" {

name = "${var.app_name}-${var.env}-db-cluster-parameter-group"

family = "aurora-mysql8.0"

parameter {

name = "character_set_server"

value = "utf8mb4"

}

parameter {

name = "collation_server"

value = "utf8mb4_bin"

}

parameter {

name = "time_zone"

value = "Asia/Tokyo"

apply_method = "immediate"

}

}

resource "aws_rds_cluster" "this" {

cluster_identifier = "${var.app_name}-${var.env}"

database_name = var.db_name

master_username = var.db_username

master_password = var.db_password

port = 3306

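apply_immediately = false # whether to apply changes immediately instead of in the next maintenance window

skip_final_snapshot = true # whether to skip the final snapshot when the cluster is deleted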

engine = "aurora-mysql"

engine_version = "8.0.mysql_aurora.3.03.1"

vpc_security_group_ids = [

aws_security_group.rds.id

]

db_subnet_group_name = aws_db_subnet_group.this.name

db_cluster_parameter_group_name = aws_rds_cluster_parameter_group.this.name

}

resource "aws_rds_cluster_instance" "this" {

identifier = "${var.app_name}-${var.env}"

cluster_identifier = aws_rds_cluster.this.id

instance_class = "db.t3.medium"

apply_immediately = false # whether to apply changes immediately instead of in the next maintenance window

engine = "aurora-mysql"

engine_version = "8.0.mysql_aurora.3.03.1"

db_subnet_group_name = aws_db_subnet_group.this.name

db_parameter_group_name = aws_db_parameter_group.this.name

}

output "endpoint" {

value = aws_rds_cluster.this.endpoint

}

output "reader_endpoint" {

value = aws_rds_cluster.this.reader_endpoint

}

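tf file for ECS

The default_action of aws_lb_listener runs last, only when no listener_rule applies. Seeing the default fixed response therefore means something unexpected happened, so it returns a 503 error.

stop-tasks.sh is run via local-exec when the ECS cluster is destroyed because, unless the task count of the cluster is set to 0 beforehand, the cluster cannot be deleted and "aws_ecs_service.service: Still destroying..." repeats endlessly. The script sets the task count to 0 directly from bash.

See this issue for details: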

Destroy aws_ecs_service.service on Fargate gets stuck #3414

https://github.com/hashicorp/terraform-provider-aws/issues/3414

Create the task definition from GitHub Actions beforehand.

terraform/modules/ecs/main.tf

variable "env" {

type = string

}

variable "app_name" {

type = string

}

variable "app_key" {

type = string

}

variable "db_host" {

type = string

}

variable "db_name" {

type = string

}

variable "db_username" {

type = string

}

variable "db_password" {

type = string

}

variable "vpc_id" {

type = string

}

variable "vpc_cidr_block" {

type = string

}

variable "public_subnet_ids" {

type = list(string)

}

variable "private_subnet_ids" {

type = list(string)

}

variable "acm_cert_app_domain_arn" {

type = string

}

### ALB ####################

### SG

resource "aws_security_group" "alb" {

name = "${var.app_name}-${var.env}-alb-sg"

vpc_id = var.vpc_id

}

# Outbound (egress) rule

resource "aws_security_group_rule" "alb_out_all" {

security_group_id = aws_security_group.alb.id

type = "egress"

from_port = 0

to_port = 0

protocol = "-1"

cidr_blocks = ["0.0.0.0/0"]

}

# Inbound (ingress) rule

resource "aws_security_group_rule" "alb_in_http" {

security_group_id = aws_security_group.alb.id

type = "ingress"

from_port = 80

to_port = 80

protocol = "tcp"

cidr_blocks = ["0.0.0.0/0"]

}

resource "aws_security_group_rule" "alb_in_https" {

security_group_id = aws_security_group.alb.id

type = "ingress"

from_port = 443

to_port = 443

protocol = "tcp"

cidr_blocks = ["0.0.0.0/0"]

}

resource "aws_lb" "this" {

name = "${var.app_name}-${var.env}-lb"

load_balancer_type = "application"

security_groups = [aws_security_group.alb.id]

subnets = var.public_subnet_ids

}

resource "aws_lb_listener" "http" {

port = "80"

protocol = "HTTP"

load_balancer_arn = aws_lb.this.arn

default_action {

type = "fixed-response"

fixed_response {

content_type = "text/plain"

message_body = "503 Service Unavailable"

status_code = "503"

}

}

}

resource "aws_lb_listener" "https" {

port = "443"

protocol = "HTTPS"

load_balancer_arn = aws_lb.this.arn

certificate_arn = var.acm_cert_app_domain_arn

default_action {

type = "fixed-response"

fixed_response {

content_type = "text/plain"

message_body = "503 Service Unavailable"

status_code = "503"

}

}

}

resource "aws_lb_listener_rule" "http" {

listener_arn = aws_lb_listener.http.arn

action {

type = "forward"

target_group_arn = aws_lb_target_group.this.arn

}

condition {

path_pattern {

values = ["*"]

}

}

tags = {

Name = "${var.app_name}-${var.env}-lb-listener-rule-http"

}

}

resource "aws_lb_listener_rule" "https" {

listener_arn = aws_lb_listener.https.arn

action {

type = "forward"

target_group_arn = aws_lb_target_group.this.arn

}

condition {

path_pattern {

values = ["*"]

}

}

tags = {

Name = "${var.app_name}-${var.env}-lb-listener-rule-https"

}

}

resource "aws_lb_target_group" "this" {

name = "${var.app_name}-${var.env}-lb-target-group"

vpc_id = var.vpc_id

port = 80

protocol = "HTTP"

target_type = "ip"

health_check {

port = 80

path = "/api/health_check"

}

}

### ECS ####################

## SG

resource "aws_security_group" "ecs" {

name = "${var.app_name}-${var.env}-sg"

vpc_id = var.vpc_id

}

# Outbound (egress) rule

resource "aws_security_group_rule" "ecs_out_all" {

security_group_id = aws_security_group.ecs.id

type = "egress"

from_port = 0

to_port = 0

protocol = "-1"

cidr_blocks = ["0.0.0.0/0"]

}

# Inbound (ingress) rule

resource "aws_security_group_rule" "ecs_in_http" {

security_group_id = aws_security_group.ecs.id

type = "ingress"

from_port = 80

to_port = 80

protocol = "tcp"

cidr_blocks = [

var.vpc_cidr_block

]

}

# The ECS role is referenced from the task definition

resource "aws_iam_role" "ecs_task_execution" {

name = "${var.app_name}-${var.env}-task-execution"

assume_role_policy = jsonencode({

Version = "2012-10-17"

Statement = [

{

Effect = "Allow"

Action = "sts:AssumeRole"

Principal = {

Service = "ecs-tasks.amazonaws.com"

}

}

]

})

}

resource "aws_iam_role_policy_attachment" "ecs_task_execution" {

role = aws_iam_role.ecs_task_execution.name

policy_arn = "arn:aws:iam::aws:policy/service-role/AmazonECSTaskExecutionRolePolicy"

}

resource "aws_iam_role_policy_attachment" "ecs_task_execution_ssm" {

role = aws_iam_role.ecs_task_execution.name

policy_arn = "arn:aws:iam::aws:policy/AmazonSSMReadOnlyAccess"

}

# The task definition is created and updated by the GitHub Actions CI

data "aws_ecs_task_definition" "this" {

task_definition = "${var.app_name}-${var.env}"

}

resource "aws_ecs_cluster" "this" {

name = "${var.app_name}-${var.env}"

provisioner "local-exec" {

when = destroy

command = "${path.module}/scripts/stop-tasks.sh"

environment = {

CLUSTER = self.name

}

}

}

resource "aws_ecs_service" "this" {

name = "${var.app_name}-${var.env}"

depends_on = [

aws_lb_listener_rule.http,

aws_lb_listener_rule.https,

]

cluster = aws_ecs_cluster.this.name

launch_type = "FARGATE"

desired_count = "1"

task_definition = data.aws_ecs_task_definition.this.arn

network_configuration {

subnets = var.private_subnet_ids

security_groups = [

aws_security_group.ecs.id

]

}

load_balancer {

target_group_arn = aws_lb_target_group.this.arn

container_name = "nginx"

container_port = "80"

}

}

### Cloudwatch Log ####################

resource "aws_cloudwatch_log_group" "this" {

name = "/${var.app_name}/ecs"

retention_in_days = 30

}

### Parameter Store ####################

resource "aws_ssm_parameter" "app_key" {

name = "/${var.app_name}/${var.env}/APP_KEY"

type = "SecureString"

value = var.app_key

}

resource "aws_ssm_parameter" "db_host" {

name = "/${var.app_name}/${var.env}/DB_HOST"

type = "SecureString"

value = var.db_host

}

resource "aws_ssm_parameter" "db_username" {

name = "/${var.app_name}/${var.env}/DB_USERNAME"

type = "SecureString"

value = var.db_username

}

resource "aws_ssm_parameter" "db_password" {

name = "/${var.app_name}/${var.env}/DB_PASSWORD"

type = "SecureString"

value = var.db_password

}

output "lb_dns_name" {

value = aws_lb.this.dns_name

}

output "lb_zone_id" {

value = aws_lb.this.zone_id

}

terraform/modules/ecs/scripts/stop-tasks.sh

#!/bin/bash

# "grep || true" is grouped so that sed always post-processes the grep output
SERVICES="$(aws ecs list-services --cluster "${CLUSTER}" | { grep "${CLUSTER}" || true; } | sed -e 's/"//g' -e 's/,//')"

for SERVICE in $SERVICES ; do
  # Idle the service that spawns tasks
  aws ecs update-service --cluster "${CLUSTER}" --service "${SERVICE}" --desired-count 0

  # Stop running tasks
  TASKS="$(aws ecs list-tasks --cluster "${CLUSTER}" --service-name "${SERVICE}" | { grep "${CLUSTER}" || true; } | sed -e 's/"//g' -e 's/,//')"
  for TASK in $TASKS; do
    aws ecs stop-task --cluster "${CLUSTER}" --task "$TASK"
  done

  # Delete the service, then wait until it becomes inactive
  aws ecs delete-service --cluster "${CLUSTER}" --service "${SERVICE}"
  aws ecs wait services-inactive --cluster "${CLUSTER}" --services "${SERVICE}"
done
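One detail worth knowing about a pipeline of the form `a | grep ... || true | sed ...`: the shell parses it as `(a | grep ...) || (true | sed ...)`, so sed only runs when grep fails, and the quotes and commas of the JSON output stay in place. The sketch below shows the difference on a made-up line shaped like `aws ecs list-services` output; the ARN and account ID are placeholders, not real values.

```shell
#!/bin/bash
# Sketch: why "grep || true" needs grouping before piping into sed.
# The ARN below is a made-up placeholder shaped like list-services JSON output.
raw='    "arn:aws:ecs:ap-northeast-1:123456789012:service/y-oka-ecs-dev/y-oka-ecs-dev",'

# Ungrouped: parsed as (echo | grep) || (true | sed); sed never sees the grep output
ungrouped="$(echo "$raw" | grep "y-oka-ecs-dev" || true | sed -e 's/"//g' -e 's/,//')"

# Grouped: the braces make "grep || true" a single pipeline stage, so sed always runs
grouped="$(echo "$raw" | { grep "y-oka-ecs-dev" || true; } | sed -e 's/"//g' -e 's/,//')"

echo "ungrouped: ${ungrouped}"
echo "grouped:   ${grouped}"
```

With the grouped form the quotes and the trailing comma are removed, so the value can be passed to `--service` as a plain ARN.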

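GitHub Actions CI walkthrough

ECS deploy CI

The base was written by Suzuki-san at my previous company. Thanks, Suzuki-san!

ECS is deployed whenever the Dockerfiles for the task definition or the application source change ('ecs/**', 'web/**').

Note that job outputs cannot be used to pass the ECR URL between jobs (see below), so artifacts are used instead.

The reason is that the ECR URL contains the AWS account ID (e.g. ************.dkr.ecr.ap-northeast-1.amazonaws.com), which is masked as sensitive information, so the value cannot be handed over through outputs. To use outputs anyway, set mask-aws-account-id: 'false' in the arguments of uses: aws-actions/configure-aws-credentials; outputs then work between jobs. Be aware that the AWS account ID is no longer masked in that case.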

.github/workflows/deploy_ecs_dev.yml

name: Deploy ECS to Develop

on:
  push:
    paths:
      - 'ecs/**'
      - 'web/**'
    branches:
      - develop

env:
  APP_ENV: dev
  AWS_ACCESS_KEY_ID: ${{ secrets.AWS_ACCESS_KEY_ID }}
  AWS_SECRET_ACCESS_KEY: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
  AWS_REGION: ap-northeast-1
  ECR_PHP_REPOSITORY: y-oka-ecs/dev/php-fpm
  ECR_NGINX_REPOSITORY: y-oka-ecs/dev/nginx
  ECS_TASK_DEFINITION: ecs/dev/task_definition/y-oka-ecs.json
  ECS_CLUSTER: y-oka-ecs-dev
  ECS_SERVICE: y-oka-ecs-dev

jobs:
  #
  # Build PHP
  #
  build-php:
    runs-on: ubuntu-latest
    timeout-minutes: 30
    steps:
      #
      # Setup Application
      #
      - name: Checkout Project
        uses: actions/checkout@v2

      #
      # Build Image & Push to ECR
      #
      - name: Configure AWS Credentials
        uses: aws-actions/configure-aws-credentials@v1 # https://github.com/aws-actions/configure-aws-credentials
        with:
          aws-access-key-id: ${{ env.AWS_ACCESS_KEY_ID }}
          aws-secret-access-key: ${{ env.AWS_SECRET_ACCESS_KEY }}
          aws-region: ${{ env.AWS_REGION }}

      - name: Login to Amazon ECR
        id: login-ecr
        uses: aws-actions/amazon-ecr-login@v1 # https://github.com/aws-actions/amazon-ecr-login

      - name: Build, tag, and push image to Amazon ECR
        id: build-image
        env:
          ECR_REGISTRY: ${{ steps.login-ecr.outputs.registry }}
          IMAGE_TAG: ${{ github.sha }}
        run: |
          docker build -t $ECR_REGISTRY/${{ env.ECR_PHP_REPOSITORY }}:$IMAGE_TAG -f ecs/dev/container/php-fpm/Dockerfile .
          docker push $ECR_REGISTRY/${{ env.ECR_PHP_REPOSITORY }}:$IMAGE_TAG
          # artifact for render task definition
          echo $ECR_REGISTRY/${{ env.ECR_PHP_REPOSITORY }}:$IMAGE_TAG > php_image_path.txt

      - uses: actions/upload-artifact@v1
        with:
          name: artifact_php
          path: php_image_path.txt

      - name: Logout of Amazon ECR
        if: always()
        run: docker logout ${{ steps.login-ecr.outputs.registry }}

  #
  # Build Nginx
  #
  build-nginx:
    runs-on: ubuntu-latest
    timeout-minutes: 30
    steps:
      - name: Checkout Project
        uses: actions/checkout@v2

      #
      # Build Image & Push to ECR
      #
      - name: Configure AWS Credentials
        uses: aws-actions/configure-aws-credentials@v1 # https://github.com/aws-actions/configure-aws-credentials
        with:
          aws-access-key-id: ${{ env.AWS_ACCESS_KEY_ID }}
          aws-secret-access-key: ${{ env.AWS_SECRET_ACCESS_KEY }}
          aws-region: ${{ env.AWS_REGION }}

      - name: Login to Amazon ECR
        id: login-ecr
        uses: aws-actions/amazon-ecr-login@v1 # https://github.com/aws-actions/amazon-ecr-login

      - name: Build, tag, and push image to Amazon ECR
        id: build-image
        env:
          ECR_REGISTRY: ${{ steps.login-ecr.outputs.registry }}
          IMAGE_TAG: ${{ github.sha }}
        run: |
          docker build -t $ECR_REGISTRY/${{ env.ECR_NGINX_REPOSITORY }}:$IMAGE_TAG -f ecs/dev/container/nginx/Dockerfile .
          docker push $ECR_REGISTRY/${{ env.ECR_NGINX_REPOSITORY }}:$IMAGE_TAG
          # artifact for render task definition
          echo $ECR_REGISTRY/${{ env.ECR_NGINX_REPOSITORY }}:$IMAGE_TAG > nginx_image_path.txt

      - uses: actions/upload-artifact@v1
        with:
          name: artifact_nginx
          path: nginx_image_path.txt

      - name: Logout of Amazon ECR
        if: always()
        run: docker logout ${{ steps.login-ecr.outputs.registry }}

  #
  # Deploy to ECS
  #
  deploy-ecs:
    needs: [build-php, build-nginx]
    runs-on: ubuntu-latest
    timeout-minutes: 30
    steps:
      - name: Checkout Project
        uses: actions/checkout@v2

      # download artifacts
      - uses: actions/download-artifact@v1
        with:
          name: artifact_php

      - uses: actions/download-artifact@v1
        with:
          name: artifact_nginx

      - name: Set Output from Artifacts
        id: artifact-image
        run: |
          echo "php-image=`cat artifact_php/php_image_path.txt`" >> "$GITHUB_OUTPUT"
          echo "nginx-image=`cat artifact_nginx/nginx_image_path.txt`" >> "$GITHUB_OUTPUT"

      - name: Configure AWS Credentials
        uses: aws-actions/configure-aws-credentials@v1 # https://github.com/aws-actions/configure-aws-credentials
        with:
          aws-access-key-id: ${{ env.AWS_ACCESS_KEY_ID }}
          aws-secret-access-key: ${{ env.AWS_SECRET_ACCESS_KEY }}
          aws-region: ${{ env.AWS_REGION }}

      - name: Render TaskDefinition for php-image
        id: render-php-container
        uses: aws-actions/amazon-ecs-render-task-definition@v1
        with:
          task-definition: ${{ env.ECS_TASK_DEFINITION }}
          container-name: php-fpm
          image: ${{ steps.artifact-image.outputs.php-image }}

      - name: Render TaskDefinition for nginx-image
        id: render-nginx-container
        uses: aws-actions/amazon-ecs-render-task-definition@v1
        with:
          task-definition: ${{ steps.render-php-container.outputs.task-definition }}
          container-name: nginx
          image: ${{ steps.artifact-image.outputs.nginx-image }}

      - name: Deploy to ECS TaskDefinition
        uses: aws-actions/amazon-ecs-deploy-task-definition@v1
        with:
          task-definition: ${{ steps.render-nginx-container.outputs.task-definition }}
          cluster: ${{ env.ECS_CLUSTER }}
          service: ${{ env.ECS_SERVICE }}

ECS Exec command CI

Run it manually from the GitHub Actions GUI.

For command, specify the Docker CMD to pass to the container.

https://docs.docker.jp/engine/reference/builder.html#cmd

For example, to run a Seeder, pass "php","/var/www/web/laravel/artisan","db:seed","--class=UserSeeder","--force".

After the CI run, click the URL shown in the "Open Run Task URL" step, open the task details, and check the execution status in the logs.
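To make the input format concrete, here is a minimal sketch of how the comma-separated, double-quoted command ends up inside the containerOverrides JSON that the workflow passes to `aws ecs run-task --overrides`. The helper name build_overrides is hypothetical; the workflow itself inlines the JSON rather than calling a function.

```shell
#!/bin/bash
# Sketch: assemble the --overrides JSON from a container name and a command list.
# build_overrides is a hypothetical helper used only for illustration.
build_overrides() {
  # $1: container name
  # $2: command as a comma-separated list of double-quoted strings
  printf '{"containerOverrides":[{"name":"%s","command":[%s]}]}' "$1" "$2"
}

overrides="$(build_overrides "php-fpm" '"php","/var/www/web/laravel/artisan","db:seed","--class=UserSeeder","--force"')"
echo "$overrides"
```

Because the input is pasted into a JSON array as-is, every argument has to be wrapped in double quotes and separated by commas; a plain string such as php artisan db:seed would produce invalid JSON.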

.github/workflows/ecs_exec_cmd_dev.yml

name: ECS Execute Command to Develop

on:
  workflow_dispatch:
    inputs:
      command:
        description: 'execute command(ex: "php","/var/www/web/laravel/artisan","xxxx")'
        required: true

env:
  AWS_ACCESS_KEY_ID: ${{ secrets.AWS_ACCESS_KEY_ID }}
  AWS_SECRET_ACCESS_KEY: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
  AWS_REGION: ap-northeast-1
  ECS_CLUSTER: y-oka-ecs-dev
  ECS_SERVICE: y-oka-ecs-dev
  ECS_TASK_FAMILY: y-oka-ecs-dev

jobs:
  #
  # ECS Execute Command
  #
  ecs-execute-cmd:
    runs-on: ubuntu-latest
    timeout-minutes: 30
    steps:
      - name: Configure AWS Credentials
        uses: aws-actions/configure-aws-credentials@v1 # https://github.com/aws-actions/configure-aws-credentials
        with:
          aws-access-key-id: ${{ env.AWS_ACCESS_KEY_ID }}
          aws-secret-access-key: ${{ env.AWS_SECRET_ACCESS_KEY }}
          aws-region: ${{ env.AWS_REGION }}

      - name: ECS Run Task for Exec Command
        id: run-task-for-exec-command
        run: |
          # Reuse the network configuration of the running service
          network_config=$(
            aws ecs describe-services \
              --cluster ${{ env.ECS_CLUSTER }} \
              --services ${{ env.ECS_SERVICE }} | jq '.services[0].networkConfiguration'
          )
          # Start a one-off task that overrides the php-fpm command
          task_arn=$(
            aws ecs run-task \
              --cluster ${{ env.ECS_CLUSTER }} \
              --launch-type "FARGATE" \
              --network-configuration "${network_config}" \
              --overrides '{
                "containerOverrides": [
                  {
                    "name": "php-fpm",
                    "command": [${{ github.event.inputs.command }}]
                  }
                ]
              }' \
              --task-definition ${{ env.ECS_TASK_FAMILY }} | jq -r '.tasks[0].taskArn'
          )
          task_id=$(echo $task_arn | cut -d "/" -f 3)
          task_url="https://${{ env.AWS_REGION }}.console.aws.amazon.com/ecs/v2/clusters/${{ env.ECS_CLUSTER }}/tasks/${task_id}/configuration"
          echo "task_url=${task_url}" >> "$GITHUB_OUTPUT"

      - name: Open Run Task URL
        run: echo ${{ steps.run-task-for-exec-command.outputs.task_url }}
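The step that builds the console URL relies on the shape of the task ARN. The sketch below walks through that extraction with a made-up ARN (the account ID and task ID are placeholders), assuming the ARN format that includes the cluster name, i.e. task/<cluster>/<task id>.

```shell
#!/bin/bash
# Sketch: extract the task ID from a task ARN and build the console URL.
# The ARN below is a made-up placeholder, not a real task.
region="ap-northeast-1"
cluster="y-oka-ecs-dev"
task_arn="arn:aws:ecs:${region}:123456789012:task/${cluster}/0123456789abcdef0123456789abcdef"

# Split on "/": field 1 ends with ":task", field 2 is the cluster, field 3 is the task ID
task_id="$(echo "$task_arn" | cut -d "/" -f 3)"
task_url="https://${region}.console.aws.amazon.com/ecs/v2/clusters/${cluster}/tasks/${task_id}/configuration"

echo "$task_id"
echo "$task_url"
```

If run-task fails and returns no task, the ARN is empty or "null" and the third field is empty, so an odd-looking URL in the "Open Run Task URL" step is a hint that the task was never started.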

Terraform deploy CI

When Terraform files are updated ('terraform/**'), terraform apply runs from CI.

When more tfvars variables are needed for apply, add them to GitHub Actions Secrets and update this CI file as well.

.github/workflows/deploy_terraform_dev.yml

name: Deploy Terraform to Develop

on:
  push:
    paths:
      - 'terraform/**'
    branches:
      - develop

env:
  APP_ENV: dev
  TF_VERSION: 1.4.6
  AWS_ACCESS_KEY_ID: ${{ secrets.AWS_ACCESS_KEY_ID }}
  AWS_SECRET_ACCESS_KEY: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
  AWS_REGION: ap-northeast-1
  APP_KEY: ${{ secrets.DEV_APP_KEY }}
  DB_USERNAME: ${{ secrets.DEV_DB_USERNAME }}
  DB_PASSWORD: ${{ secrets.DEV_DB_PASSWORD }}

jobs:
  #
  # Terraform Apply
  #
  terraform-apply:
    runs-on: ubuntu-latest
    timeout-minutes: 30
    steps:
      - name: Checkout Project
        uses: actions/checkout@v2

      - uses: hashicorp/setup-terraform@v2
        with:
          terraform_version: ${{ env.TF_VERSION }}

      - name: Configure AWS Credentials
        uses: aws-actions/configure-aws-credentials@v1 # https://github.com/aws-actions/configure-aws-credentials
        with:
          aws-access-key-id: ${{ env.AWS_ACCESS_KEY_ID }}
          aws-secret-access-key: ${{ env.AWS_SECRET_ACCESS_KEY }}
          aws-region: ${{ env.AWS_REGION }}

      - name: Terraform setup
        run: |
          cp terraform/example.tfvars terraform/environments/dev/dev.tfvars
          cd terraform/environments/dev/
          # "-i -e" edits in place; "-ie" would leave a backup file named dev.tfvarse
          sed -i -e 's|app_key=".*"|app_key="${{ env.APP_KEY }}"|' dev.tfvars
          sed -i -e 's|db_username=".*"|db_username="${{ env.DB_USERNAME }}"|' dev.tfvars
          sed -i -e 's|db_password=".*"|db_password="${{ env.DB_PASSWORD }}"|' dev.tfvars

      - name: Terraform init
        working-directory: terraform/environments/dev
        run: |
          terraform init

      - name: Terraform apply
        working-directory: terraform/environments/dev
        run: |
          terraform apply -var-file=dev.tfvars -auto-approve -no-color
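The "Terraform setup" step fills secrets into a copy of example.tfvars with sed. The following sketch reproduces that substitution on a temporary file so it can be tried locally. The contents of example.tfvars and the dummy key are assumptions for illustration (the real file is in the sample repository), and the sketch writes to a new file instead of editing in place so that it behaves the same with GNU and BSD sed.

```shell
#!/bin/bash
# Sketch: substitute a secret into a tfvars file the way the CI step does.
# The tfvars contents and the APP_KEY value are made-up placeholders.
tmpdir="$(mktemp -d)"
cat > "$tmpdir/example.tfvars" <<'EOF'
env="dev"
app_key=""
db_username=""
db_password=""
EOF

APP_KEY='base64:dummy-key'

# "|" is used as the sed delimiter so that "/" inside the value is harmless
sed -e "s|app_key=\".*\"|app_key=\"${APP_KEY}\"|" "$tmpdir/example.tfvars" > "$tmpdir/dev.tfvars"

result="$(grep '^app_key=' "$tmpdir/dev.tfvars")"
echo "$result"
rm -rf "$tmpdir"
```

The pattern only matches when the line is written without spaces around the equals sign, and a secret containing "|" or "&" would break the substitution, so keep both points in mind when adding new variables.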

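Terraform plan CI

Before merging into the develop branch, run this manually from the topic branch and check the result.

.github/workflows/terraform_plan_dev.yml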

name: Terraform Plan to Develop

on: workflow_dispatch

env:
  APP_ENV: dev
  TF_VERSION: 1.4.6
  AWS_ACCESS_KEY_ID: ${{ secrets.AWS_ACCESS_KEY_ID }}
  AWS_SECRET_ACCESS_KEY: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
  AWS_REGION: ap-northeast-1
  APP_KEY: ${{ secrets.DEV_APP_KEY }}
  DB_USERNAME: ${{ secrets.DEV_DB_USERNAME }}
  DB_PASSWORD: ${{ secrets.DEV_DB_PASSWORD }}

jobs:
  #
  # Terraform Plan
  #
  terraform-plan:
    runs-on: ubuntu-latest
    timeout-minutes: 30
    steps:
      - name: Checkout Project
        uses: actions/checkout@v2

      - uses: hashicorp/setup-terraform@v2
        with:
          terraform_version: ${{ env.TF_VERSION }}

      - name: Configure AWS Credentials
        uses: aws-actions/configure-aws-credentials@v1 # https://github.com/aws-actions/configure-aws-credentials
        with:
          aws-access-key-id: ${{ env.AWS_ACCESS_KEY_ID }}
          aws-secret-access-key: ${{ env.AWS_SECRET_ACCESS_KEY }}
          aws-region: ${{ env.AWS_REGION }}

      - name: Terraform setup
        run: |
          cp terraform/example.tfvars terraform/environments/dev/dev.tfvars
          cd terraform/environments/dev
          # "-i -e" edits in place; "-ie" would leave a backup file named dev.tfvarse
          sed -i -e 's|app_key=".*"|app_key="${{ env.APP_KEY }}"|' dev.tfvars
          sed -i -e 's|db_username=".*"|db_username="${{ env.DB_USERNAME }}"|' dev.tfvars
          sed -i -e 's|db_password=".*"|db_password="${{ env.DB_PASSWORD }}"|' dev.tfvars

      - name: Terraform init
        working-directory: terraform/environments/dev
        run: |
          terraform init

      - name: Terraform plan
        working-directory: terraform/environments/dev
        run: |
          terraform plan -var-file=dev.tfvars -no-color

How to debug by entering a container directly with ECS Exec