TL;DR

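- The goal of this article is to use the OpenAI API to compare and evaluate prompt engineering against fine-tuning

- model: gpt-3.5-turbo

- Environment: Colaboratory

- The dataset comes from a past Kaggle competition (LLM Science Exam)

(Note) A paid OpenAI account is required, and API usage and fine-tuning fees will be charged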

Common setup

Imports, authentication, and so on

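# The code in this article uses the pre-1.0 openai interface
# (openai.ChatCompletion, openai.File, openai.FineTuningJob), so pin the version.
!pip install "openai>=0.28,<1"

from google.colab import auth
auth.authenticate_user()

from google.colab import drive
drive.mount('/content/drive')

import os

# Set the key before importing openai so the library can pick it up
# from the environment. Replace the placeholder with your own key.
os.environ["OPENAI_API_KEY"] = 'sk-XXXXXXXXXXXXXXX'

import pandas as pd
import openai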

Loading and preparing the data

%cd drive/MyDrive/コンペ_Kaggle/'LLM Science Exam'

from sklearn.model_selection import train_test_split

df_sample = pd.read_csv("data/sample_submission.csv")
df_data = pd.read_csv("data/train.csv")
df_train, df_valid = train_test_split(df_data, test_size=0.25, random_state=24)
df_test = pd.read_csv("data/test.csv")

print(df_sample.shape)
display(df_sample.head(1))

print(df_train.shape)
display(df_train.head(1))

print(df_valid.shape)
display(df_valid.head(1))

print(df_test.shape)
display(df_test.head(1))

prompt engineering

Inference

The prompt engineering techniques used are:

- few-shot

- Chain-of-Thought (CoT)

def get_fewshot_by_train(num):
    # Build `num` few-shot examples from the first rows of df_train.
    prompt_concat = []
    for i in range(num):
        # Positional access: after train_test_split the index labels are
        # shuffled, so df_train["prompt"][i] can raise a KeyError.
        row = df_train.iloc[i]
        prompt = f"""
Q: Select the answer to this question from the options. : {row["prompt"]}
options:
A: {row["A"]}
B: {row["B"]}
C: {row["C"]}
D: {row["D"]}
E: {row["E"]}
A: Let's think step by step. {row["answer"]}
"""
        prompt_concat.append(prompt)
    return prompt_concat

def get_answer(values):
    prompt = values["prompt"]
    answerA = values["A"]
    answerB = values["B"]
    answerC = values["C"]
    answerD = values["D"]
    answerE = values["E"]

    # Join the few-shot examples into one string. Interpolating the list
    # directly would embed its Python repr (brackets, quotes, escaped newlines).
    few_shot = "".join(get_fewshot_by_train(5))

    res = openai.ChatCompletion.create(
        model="gpt-3.5-turbo",
        messages=[
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": f"""
{few_shot}
Q: Select the answer to this question from the options. Let's think step by step : {prompt}
options:
A: {answerA}
B: {answerB}
C: {answerC}
D: {answerD}
E: {answerE}
A: Let's think step by step.
"""},
        ]
    )
    # Return the text of the first (and only) choice.
    output = res["choices"][0]["message"]["content"]
    return output

# Work on an independent copy so that adding columns does not trigger
# pandas' SettingWithCopyWarning.
df_valid = df_valid.copy()

df_valid["prediction"] = df_valid.apply(get_answer, axis=1)
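The few-shot plus Chain-of-Thought prompt above is assembled from a handful of solved examples followed by the unanswered target question. A minimal sketch of that assembly on plain dictionaries is shown here, so the final string can be inspected without calling the API. The helper names `format_example` and `build_prompt` and the toy rows are illustrative assumptions, not part of the original notebook; rows in this shape could be obtained with something like `df_train.head(5).to_dict("records")`.

```python
OPTION_KEYS = ("A", "B", "C", "D", "E")


def format_example(row, include_answer=True):
    """Render one question in the same layout as the prompt used in this article."""
    lines = [
        f"Q: Select the answer to this question from the options. : {row['prompt']}",
        "options:",
    ]
    lines += [f"{key}: {row[key]}" for key in OPTION_KEYS]
    # Solved examples end with the label; the target question leaves it open.
    answer = f" {row['answer']}" if include_answer else ""
    lines.append(f"A: Let's think step by step.{answer}")
    return "\n".join(lines)


def build_prompt(shots, question):
    """Concatenate solved examples, then the open question, into one string."""
    parts = [format_example(row) for row in shots]
    parts.append(format_example(question, include_answer=False))
    return "\n\n".join(parts)


# Toy rows (hypothetical content) standing in for dataset records.
shots = [
    {"prompt": "Which planet is closest to the Sun?",
     "A": "Venus", "B": "Mercury", "C": "Earth", "D": "Mars", "E": "Jupiter",
     "answer": "B"},
]
question = {"prompt": "Which gas do plants absorb?",
            "A": "Oxygen", "B": "Nitrogen", "C": "Carbon dioxide",
            "D": "Helium", "E": "Argon"}

prompt_text = build_prompt(shots, question)
print(prompt_text)
```

Printing the assembled string before sending it is a cheap way to confirm that the examples are plain text and that the prompt ends with the open "A:" line.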

Evaluation

base_cnt = df_valid[df_valid["prediction"].notna()].shape[0]

acc_cnt = df_valid[df_valid["answer"] == df_valid["prediction"]].shape[0]

print("Accuracy: ", acc_cnt / base_cnt * 100)

Accuracy: 26.0
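The number above is an exact-match accuracy: a row counts as correct only when the prediction string is identical to the answer label, and rows without a prediction are excluded from the denominator. A minimal sketch of the same calculation on plain lists is given here; the function name `exact_match_accuracy` and the toy data are illustrative assumptions.

```python
from typing import Optional, Sequence


def exact_match_accuracy(answers: Sequence[str],
                         predictions: Sequence[Optional[str]]) -> float:
    """Percentage of non-missing predictions that equal the answer exactly."""
    scored = [(a, p) for a, p in zip(answers, predictions) if p is not None]
    if not scored:
        raise ValueError("no predictions to score")
    correct = sum(1 for a, p in scored if a == p)
    return correct / len(scored) * 100


# Toy data: one missing prediction is skipped, one of the remaining three is wrong.
answers = ["B", "C", "A", "E"]
predictions = ["B", "D", "A", None]
score = exact_match_accuracy(answers, predictions)
print("Accuracy: ", score)
```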

That looks pretty bad. But on closer inspection...

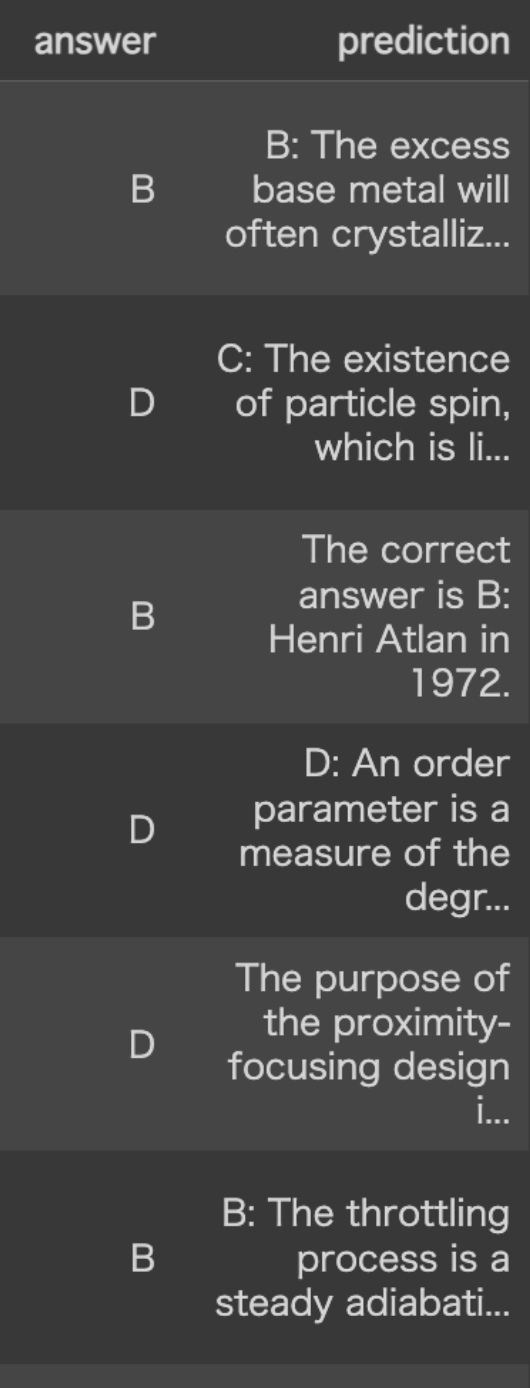

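- For the correct answer B: "B: The excess base metal will often crystallixxxxx"

- For the correct answer B: "The correct answer is B: Henri Atlan in 1972xxxxx"

Many responses chose the right option but phrased it inconsistently like this, so the exact string comparison counted them as wrong!

Let's dig further and add an output-correction step.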
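As a point of comparison, responses shaped like the two examples above can also be normalized with a rule-based pass instead of a second model call. This is a swapped-in alternative for illustration, not the approach this article takes; `extract_label` and its two patterns are hypothetical and only cover a label at the start of the response or after "answer is".

```python
import re
from typing import Optional

# Pattern 1: the response starts with the label, e.g. "B: ...", "(B) ...", or just "B".
_LEADING_LABEL = re.compile(r"^\s*\(?([A-E])\)?\s*(?:[:.)]|$)")
# Pattern 2: the label follows "answer is", e.g. "The correct answer is B: ...".
_ANSWER_IS = re.compile(r"[Aa]nswer\s+is\s*:?\s*\(?([A-E])\b")


def extract_label(response: str) -> Optional[str]:
    """Return the option letter found in a response, or None if no rule applies."""
    for pattern in (_LEADING_LABEL, _ANSWER_IS):
        match = pattern.search(response)
        if match:
            return match.group(1)
    return None


samples = [
    "B: The excess base metal will often crystallize",
    "The correct answer is B: Henri Atlan in 1972",
    "C",
    "A metal is usually a good conductor.",  # starts with the article "A", not a label
]
labels = [extract_label(s) for s in samples]
print(labels)
```

Responses that match neither rule come back as None, so they can still be handed to a model-based cleanup or checked by hand.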

Inference (output correction)

The prompt engineering technique used is:

- few-shot

def get_answer(values):
    # The raw model response from the previous step is the text to clean up.
    prompt = values["prediction"]

    res = openai.ChatCompletion.create(
        model="gpt-3.5-turbo",
        messages=[
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": f"""
The following sentence is a response to a certain question.
You need to answer with either A, B, C, D, or E.
However, there are unnecessary parts mixed in, so please format the sentence to be expressed only with A, B, C, D, or E.
BEFORE: C: This is xxxxx.
AFTER: C
BEFORE: The correct answer is B. xxxxx
AFTER: B
BEFORE: {prompt}
AFTER
"""},
        ]
    )
    output = res["choices"][0]["message"]["content"]
    return output

df_valid["prediction_trans"] = df_valid.apply(get_answer, axis=1)

base_cnt = df_valid[df_valid["prediction_trans"].notna()].shape[0]

acc_cnt = df_valid[df_valid["answer"] == df_valid["prediction_trans"]].shape[0]

print("Accuracy: ", acc_cnt / base_cnt * 100)

Accuracy: 74.0

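Oh! It turns out quite a few of them had actually been correct.

There are only 50 rows, so I checked all of them by eye, and the selected label had been extracted correctly in each case.

If you want to see all of the output-correction results, they are here: https://docs.google.com/spreadsheets/d/1LZY1j1pTXHPRyx-XJefOuGo_ugWc54BqgTBZmnvUOm8/edit#gid=957253354

fine-tuning

Creating and converting the fine-tuning data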

import json

# Convert each training row into one chat-format example:
# the question and options as the user turn, the label as the assistant turn.
train_data = []
for _, row in df_train.iterrows():
    user_content = f"""
Question: {row["prompt"]}
Choises:
A: {row["A"]}
B: {row["B"]}
C: {row["C"]}
D: {row["D"]}
E: {row["E"]}
"""
    assistant_content = row['answer']
    messages = [
        {"role": "user", "content": user_content},
        {"role": "assistant", "content": assistant_content}
    ]
    train_data.append({"messages": messages})

print(train_data)

# Write one JSON object per line (JSONL).
with open('train.jsonl', 'w') as f:
    for item in train_data:
        f.write(json.dumps(item) + '\n')
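Because a fine-tuning job costs money and time, it can be worth checking the JSONL locally before uploading it. The sketch below checks the structure that the conversion code above produces: each line is a JSON object with a non-empty `messages` list of role/content pairs. The function `check_chat_jsonl` is a hypothetical helper, and the rule that the last message should come from the assistant is an assumption based on how the examples are built here, not a statement of the service's full validation rules.

```python
import json

ALLOWED_ROLES = {"system", "user", "assistant"}


def check_chat_jsonl(lines):
    """Return a list of (line_number, problem) tuples; an empty list means no problems found."""
    problems = []
    for n, line in enumerate(lines, start=1):
        try:
            record = json.loads(line)
        except json.JSONDecodeError as exc:
            problems.append((n, f"invalid JSON: {exc.msg}"))
            continue
        messages = record.get("messages") if isinstance(record, dict) else None
        if not isinstance(messages, list) or not messages:
            problems.append((n, "missing or empty 'messages' list"))
            continue
        for message in messages:
            if (not isinstance(message, dict)
                    or message.get("role") not in ALLOWED_ROLES
                    or not isinstance(message.get("content"), str)):
                problems.append((n, "each message needs a known 'role' and a string 'content'"))
                break
        else:
            # Assumption: every training example ends with the assistant's answer.
            if messages[-1]["role"] != "assistant":
                problems.append((n, "last message should be from the assistant"))
    return problems


good = json.dumps({"messages": [{"role": "user", "content": "Question: ..."},
                                {"role": "assistant", "content": "B"}]})
no_answer = json.dumps({"messages": [{"role": "user", "content": "Question: ..."}]})
problems = check_chat_jsonl([good, no_answer, "not json"])
for line_number, problem in problems:
    print(line_number, problem)
```

For the file written above, the same check could be run with `check_chat_jsonl(open('train.jsonl'))`, since iterating over a file yields its lines.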

Fine-tuning

Uploading the data file

# Upload the JSONL file and keep the returned file ID for the fine-tuning job.
response = openai.File.create(
    file=open("train.jsonl", "rb"),
    purpose='fine-tune'
)
file_id = response.id
file_id

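Creating the model

When training finishes, a completion email is sent to the address of your OpenAI account, so you can sit back and wait.

(This time it felt like it finished in roughly 10 to 20 minutes.)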

# Start the fine-tuning job on the uploaded file.
response_job = openai.FineTuningJob.create(
    training_file=file_id,
    model="gpt-3.5-turbo"
)
job_id = response_job.id
job_id

The job status can be checked as follows.

Wait until status becomes succeeded; fine_tuned_model stays empty until then.

response_retrieve = openai.FineTuningJob.retrieve(job_id)

response_retrieve
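Instead of re-running the cell by hand, the status check can be wrapped in a small polling loop. The sketch below takes the status lookup as a function argument so it can be tried without network access. `wait_for_job`, its parameters, and the fake status sequence are hypothetical, and the terminal status names (`succeeded`, `failed`, `cancelled`) are assumed from the fine-tuning API's documented job states.

```python
import time

# Assumed terminal job states; anything else is treated as "still in progress".
TERMINAL_STATUSES = {"succeeded", "failed", "cancelled"}


def wait_for_job(fetch_status, poll_seconds=30.0, max_polls=120, sleep=time.sleep):
    """Call fetch_status() repeatedly until it returns a terminal status."""
    for attempt in range(max_polls):
        status = fetch_status()
        if status in TERMINAL_STATUSES:
            return status
        if attempt < max_polls - 1:
            sleep(poll_seconds)
    raise TimeoutError(f"job did not finish after {max_polls} polls")


# Offline demonstration with a fake status sequence and a no-op sleep.
fake_statuses = iter(["validating_files", "running", "running", "succeeded"])
final_status = wait_for_job(lambda: next(fake_statuses), sleep=lambda seconds: None)
print(final_status)

# In the notebook, the lookup could be (assuming the same pre-1.0 client as above):
# wait_for_job(lambda: openai.FineTuningJob.retrieve(job_id).status)
```

The loop returns on failure states as well, so the caller should still check that the returned value is `succeeded` before reading `fine_tuned_model`.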

Inference

Inference uses the same format as the training data created above.

To evaluate the model, inference is run on the validation data.

fine_tuned_model = response_retrieve.fine_tuned_model

def get_answer(values):
    prompt = values["prompt"]
    answerA = values["A"]
    answerB = values["B"]
    answerC = values["C"]
    answerD = values["D"]
    answerE = values["E"]

    model_name = fine_tuned_model

    # Same user-message layout as the training examples.
    completion = openai.ChatCompletion.create(
        model=model_name,
        messages=[
            {"role": "user", "content": f"""
Question: {prompt}
Choises:
A: {answerA}
B: {answerB}
C: {answerC}
D: {answerD}
E: {answerE}
"""
            }
        ]
    )
    return completion.choices[0].message["content"]

df_valid["prediction"] = df_valid.apply(get_answer, axis=1)

df_valid

Results

Evaluation

base_cnt = df_valid[df_valid["prediction"].notna()].shape[0]

acc_cnt = df_valid[df_valid["answer"] == df_valid["prediction"]].shape[0]

print("Accuracy: ", acc_cnt / base_cnt * 100)

Accuracy: 82.0

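Summary

- Prompt engineering vs. fine-tuning: accuracy 74% vs. 82% (on the 50-row validation split)

Pitfalls

How to specify the OpenAI key

Setting the key as shown below caused a key error during fine-tuning; setting the OPENAI_API_KEY environment variable, as in the setup section, worked.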

openai.api_key = 'sk-XXXXXXXXXXXXXXX'
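Since the environment variable is the route that worked in this article, one option is to set it at the top of the notebook without pasting the key into a cell. The sketch below is a hypothetical helper (`ensure_api_key` is not part of any library): it leaves an existing `OPENAI_API_KEY` untouched and otherwise asks for one through a prompt function passed in by the caller. It is meant to run before the first `import openai`, on the assumption that the library reads the variable when it is imported.

```python
import os


def ensure_api_key(environ, ask):
    """Make sure OPENAI_API_KEY is set in `environ`, prompting only when it is absent."""
    key = environ.get("OPENAI_API_KEY", "").strip()
    if not key:
        key = ask("OpenAI API key: ").strip()
        if not key:
            raise ValueError("an API key is required")
        environ["OPENAI_API_KEY"] = key
    return key


# Offline demonstration with a plain dict and a fake prompt function.
fake_environ = {}
ensure_api_key(fake_environ, lambda message: "sk-XXXXXXXXXXXXXXX")
print("OPENAI_API_KEY" in fake_environ)

# In the notebook this could be called as:
# import getpass
# ensure_api_key(os.environ, getpass.getpass)
```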

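Closing thoughts

- Compared with when I tried fine-tuning a little while ago, the documentation has been updated considerably and training was stable (I was happy to be able to relax until the training-complete email arrived)

- Getting decent accuracy in a specialized domain without any additional training is all thanks to GPT

- When the data is well prepared, fine-tuning does give better accuracy

- I felt there is still a lot more that could be tried on the prompt engineering side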