まえおき

先日投稿した「実践コンピュータビジョン」の全演習問題をやってみたの詳細です。なお、あくまで「やってみた」であって、模範解答ではありません。「やってみた」だけなので、うまくいかなかった、という結果もあります。

この記事は「実践コンピュータビジョン」(オライリージャパン、Jan Erik Solem著、相川愛三訳)の演習問題に関する記事です。この記事を書いた人間と書籍との間には何の関係もありません。著者のJan氏と訳者の相川氏にはコンピュータビジョンについて学ぶ機会を与えて頂いたことに感謝します。

実践コンピュータビジョンの原書("Programming Computer Vision with Python")の校正前の原稿は著者のJan Erik Solem氏がCreative Commons Licenseの元で公開されています。。

回答中で使用する画像の多く、また、sfm.py, sift.py, stereo.pyなどの教科書中で作られるファイルはこれらのページからダウンロードできます。

オライリージャパンのサポートページ

Jan Erik Solem氏のホームページ

相川愛三氏のホームページ

ここで扱うプログラムはすべてPython2.7上で動作を確認しています。一部の例外を除きほとんどがJupyter上で開発されました。また必要なライブラリーを適宜インストールしておく必要があります。Jupyter以外の点は、上記の書籍で説明されています。

結構な分量なので章毎に記事をアップしています。今回は第5章です。

5章 多視点幾何

ステレオ画像などの多視点画像から情報を得る、エピポーラを始めとした多視点幾何です

5.5.1 Use the techniques introduced in this chapter to verify matches in the White house example on page 66 (or even better, an example of your own) and see if you can improve on the results.

この章で習ったことをつかって、2.3.2の局所記述子の対応付けを改善しろ

私の回答

from PIL import Image

from numpy import *

from pylab import *

import numpy as np

import camera

import homography

import sfm

import sift

# Read features

im1 = array(Image.open('sf_view1.jpg'))

sift.process_image('sf_view1.jpg', 'im1.sift')

l1, d1 = sift.read_features_from_file('im1.sift')

im2 = array(Image.open('sf_view2.jpg'))

sift.process_image('sf_view2.jpg', 'im2.sift')

l2, d2 = sift.read_features_from_file('im2.sift')

matches = sift.match_twosided(d1, d2)

ndx = matches.nonzero()[0]

x1 = homography.make_homog(l1[ndx, :2].T)

ndx2 = [int(matches[i]) for i in ndx]

x2 = homography.make_homog(l2[ndx2, :2].T)

x1n = x1.copy()

x2n = x2.copy()

figure(figsize=(16,16))

sift.plot_matches(im1, im2, l1, l2, matches, True)

show()

def F_from_ransac(x1, x2, model, maxiter=5000, match_threshold=1e-6):

""" Robust estimation of a fundamental matrix F from point

correspondences using RANSAC (ransac.py from

http://www.scipy.org/Cookbook/RANSAC).

input: x1, x2 (3*n arrays) points in hom. coordinates. """

import ransac

data = np.vstack((x1, x2))

d = 20 # 20 is the original

# compute F and return with inlier index

F, ransac_data = ransac.ransac(data.T, model,

8, maxiter, match_threshold, d, return_all=True)

return F, ransac_data['inliers']

# find E through RANSAC

model = sfm.RansacModel()

F, inliers = F_from_ransac(x1n, x2n, model, maxiter=5000, match_threshold=1e-3)

P1 = array([[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0]])

P2 = sfm.compute_P_from_fundamental(F)

# triangulate inliers and remove points not in front of both cameras

X = sfm.triangulate(x1n[:, inliers], x2n[:, inliers], P1, P2)

# plot the projection of X

cam1 = camera.Camera(P1)

cam2 = camera.Camera(P2)

x1p = cam1.project(X)

x2p = cam2.project(X)

figure(figsize=(16, 16))

im3 = sift.appendimages(im1, im2)

im3 = vstack((im3, im3))

imshow(im3)

cols1 = im1.shape[1]

rows1 = im1.shape[0]

for i in range(len(x1p[0])):

if (0<= x1p[0][i]<cols1) and (0<= x2p[0][i]<cols1) and (0<=x1p[1][i]<rows1) and (0<=x2p[1][i]<rows1):

plot([x1p[0][i], x2p[0][i]+cols1],[x1p[1][i], x2p[1][i]],'c')

axis('off')

show()

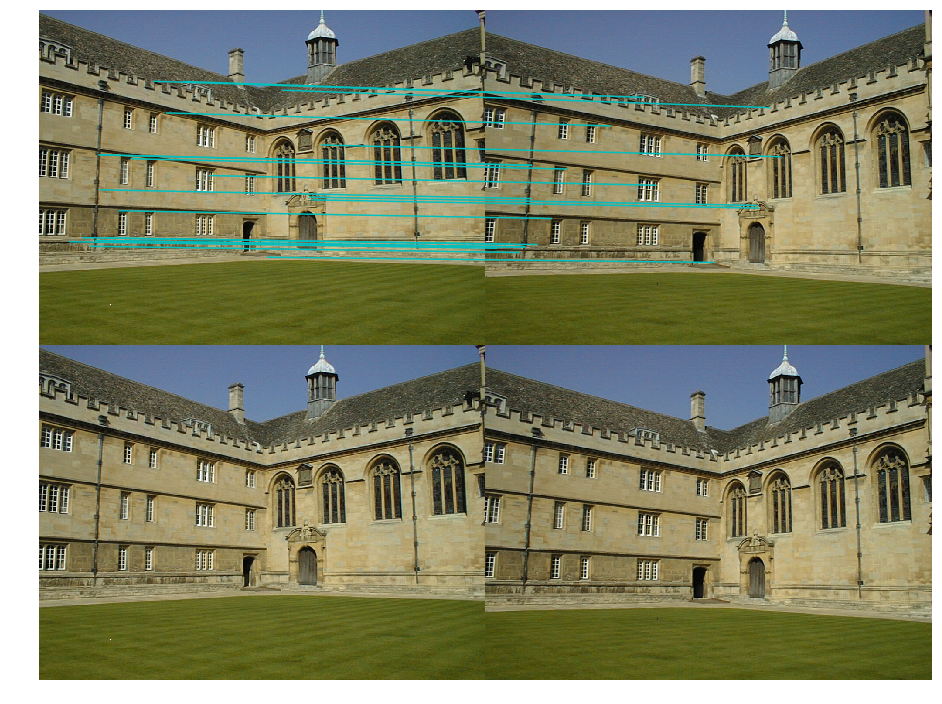

カメラ行列によって上手く移される点の組だけを残すようにしました。対応点の数は減ってしまいましたが、残っているものの精度は高そうです。2つほど例外がありますが、理由はわかりません。

5.5.2 Compute feature matches for an image pair and estimate the fundamental matrix. Use the epipolar lines to do a second pass to find more matches by searching for the best match along the epipolar line for each feature.

特徴点から基礎行列を計算しろ。つぎにエピポーラ線にマッチする頂点を探せ

私の回答

オックスフォードの多視点画像(Merton College I)を使います1

from PIL import Image

from numpy import *

from pylab import *

import numpy as np

import camera

import homography

import sfm

import sift

# Read features

im1 = array(Image.open('images/001.jpg'))

sift.process_image('images/001.jpg', 'im1.sift')

l1, d1 = sift.read_features_from_file('im1.sift')

im2 = array(Image.open('images/003.jpg'))

sift.process_image('images/003.jpg', 'im2.sift')

l2, d2 = sift.read_features_from_file('im2.sift')

matches = sift.match_twosided(d1, d2)

ndx = matches.nonzero()[0]

x1 = homography.make_homog(l1[ndx, :2].T)

ndx2 = [int(matches[i]) for i in ndx]

x2 = homography.make_homog(l2[ndx2, :2].T)

x1n = x1.copy()

x2n = x2.copy()

figure(figsize=(16,16))

sift.plot_matches(im1, im2, l1, l2, matches, True)

show()

# Don't use K1, and K2

def F_from_ransac(x1, x2, model, maxiter=5000, match_threshold=1e-6):

""" Robust estimation of a fundamental matrix F from point

correspondences using RANSAC (ransac.py from

http://www.scipy.org/Cookbook/RANSAC).

input: x1, x2 (3*n arrays) points in hom. coordinates. """

import ransac

data = np.vstack((x1, x2))

d = 20 # 20 is the original

# compute F and return with inlier index

F, ransac_data = ransac.ransac(data.T, model,

8, maxiter, match_threshold, d, return_all=True)

return F, ransac_data['inliers']

# find E through RANSAC

model = sfm.RansacModel()

F, inliers = F_from_ransac(x1n, x2n, model, maxiter=5000, match_threshold=1e-4)

P1 = array([[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0]])

P2 = sfm.compute_P_from_fundamental(F)

# triangulate inliers and remove points not in front of both cameras

X = sfm.triangulate(x1n[:, inliers], x2n[:, inliers], P1, P2)

# plot the projection of X

cam1 = camera.Camera(P1)

cam2 = camera.Camera(P2)

x1p = cam1.project(X)

x2p = cam2.project(X)

figure(figsize=(16, 16))

im3 = sift.appendimages(im1, im2)

im3 = vstack((im3, im3))

imshow(im3)

cols1 = im1.shape[1]

rows1 = im1.shape[0]

for i in range(len(x1p[0])):

if (0<= x1p[0][i]<cols1) and (0<= x2p[0][i]<cols1) and (0<=x1p[1][i]<rows1) and (0<=x2p[1][i]<rows1):

plot([x1p[0][i], x2p[0][i]+cols1],[x1p[1][i], x2p[1][i]],'c')

axis('off')

show()

# Chapter 5 Exercise 2

x1e = []

x2e = []

ers = []

for i,m in enumerate(matches):

if m>0: #plot([locs1[i][0],locs2[m][0]+cols1],[locs1[i][1],locs2[m][1]],'c')

p1 = array([l1[i][0], l1[i][1], 1])

p2 = array([l2[m][0], l2[m][1], 1])

# Use Sampson distance as error

Fx1 = dot(F, p1)

Fx2 = dot(F, p2)

denom = Fx1[0]**2 + Fx1[1]**2 + Fx2[0]**2 + Fx2[1]**2

e = (dot(p1.T, dot(F, p2)))**2 / denom

x1e.append([p1[0], p1[1]])

x2e.append([p2[0], p2[1]])

ers.append(e)

x1e = array(x1e)

x2e = array(x2e)

ers = array(ers)

indices = np.argsort(ers)

x1s = x1e[indices]

x2s = x2e[indices]

ers = ers[indices]

x1s = x1s[:20]

x2s = x2s[:20]

figure(figsize=(16, 16))

im3 = sift.appendimages(im1, im2)

im3 = vstack((im3, im3))

imshow(im3)

cols1 = im1.shape[1]

rows1 = im1.shape[0]

for i in range(len(x1s)):

if (0<= x1s[i][0]<cols1) and (0<= x2s[i][0]<cols1) and (0<=x1s[i][1]<rows1) and (0<=x2s[i][1]<rows1):

plot([x1s[i][0], x2s[i][0]+cols1],[x1s[i][1], x2s[i][1]],'c')

axis('off')

show()

精度は高いですが、残ったペアの数が少ないですね。

5.5.3 Take a set with three or more images. Pick one pair and compute 3D points and camera matrices. Match features to the remaining images to get correspondences. Then take the 3D points for the correspondences and compute camera matrices for the other images using resection. Plot the 3D points and the camera positions. Use a set of your own or one of the Oxford multi-view sets.

3枚以上の画像のうち、1組から3次元上の点とカメラ行列を計算せよ。残った他の画像に対し、特徴点を対応付けよ。

私の回答

またオックスフォードの画像を使います。

from PIL import Image

from numpy import *

from pylab import *

import numpy as np

import camera

import homography

import sfm

import sift

# Read features

im1 = array(Image.open('images2/001.jpg'))

sift.process_image('images2/001.jpg', 'im1.sift')

im2 = array(Image.open('images2/002.jpg'))

sift.process_image('images2/002.jpg', 'im2.sift')

l1, d1 = sift.read_features_from_file('im1.sift')

l2, d2 = sift.read_features_from_file('im2.sift')

matches = sift.match_twosided(d1, d2)

ndx = matches.nonzero()[0]

x1 = homography.make_homog(l1[ndx, :2].T)

ndx2 = [int(matches[i]) for i in ndx]

x2 = homography.make_homog(l2[ndx2, :2].T)

d1n = d1[ndx]

d2n = d2[ndx2]

x1n = x1.copy()

x2n = x2.copy()

figure(figsize=(16,16))

sift.plot_matches(im1, im2, l1, l2, matches, True)

show()

def F_from_ransac(x1, x2, model, maxiter=5000, match_threshold=1e-6):

""" Robust estimation of a fundamental matrix F from point

correspondences using RANSAC (ransac.py from

http://www.scipy.org/Cookbook/RANSAC).

input: x1, x2 (3*n arrays) points in hom. coordinates. """

import ransac

data = np.vstack((x1, x2))

d = 10 # 20 is the original

# compute F and return with inlier index

F, ransac_data = ransac.ransac(data.T, model,

8, maxiter, match_threshold, d, return_all=True)

return F, ransac_data['inliers']

# find F through RANSAC

model = sfm.RansacModel()

F, inliers = F_from_ransac(x1n, x2n, model, maxiter=5000, match_threshold=1e-5)

print F

[[ -1.20596150e-07 4.57524773e-06 -2.72747465e-03]

[ -1.25371232e-06 4.07586096e-07 2.16551968e-02]

[ 1.33001700e-03 -2.30377515e-02 1.00000000e+00]]

P1 = array([[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0]])

P2 = sfm.compute_P_from_fundamental(F)

print P2

print F

カメラ行列

[[ -1.58601475e+00 1.25923669e+01 5.81517049e+02 4.98349903e+03]

[ 1.35923671e+01 -1.07918654e+02 -4.98349770e+03 5.81494011e+02]

[ 2.28708685e-02 2.76023112e-03 -1.15582009e+02 1.00000000e+00]]

基礎行列

[[ -1.20596150e-07 4.57524773e-06 -2.72747465e-03]

[ -1.25371232e-06 4.07586096e-07 2.16551968e-02]

[ 1.33001700e-03 -2.30377515e-02 1.00000000e+00]]

# triangulate inliers and remove points not in front of both cameras

X = sfm.triangulate(x1n[:, inliers], x2n[:, inliers], P1, P2)

# plot the projection of X

cam1 = camera.Camera(P1)

cam2 = camera.Camera(P2)

x1p = cam1.project(X)

x2p = cam2.project(X)

figure(figsize=(16, 16))

imj = sift.appendimages(im1, im2)

imj = vstack((imj, imj))

imshow(imj)

cols1 = im1.shape[1]

rows1 = im1.shape[0]

for i in range(len(x1p[0])):

if (0<= x1p[0][i]<cols1) and (0<= x2p[0][i]<cols1) and (0<=x1p[1][i]<rows1) and (0<=x2p[1][i]<rows1):

plot([x1p[0][i], x2p[0][i]+cols1],[x1p[1][i], x2p[1][i]],'c')

axis('off')

show()

P3 = sfm.compute_P(x3_13, X[:, ndx_13])

print P1

print P2

print P3

カメラ行列

[[1 0 0 0]

[0 1 0 0]

[0 0 1 0]]

[[ -1.58601475e+00 1.25923669e+01 5.81517049e+02 4.98349903e+03]

[ 1.35923671e+01 -1.07918654e+02 -4.98349770e+03 5.81494011e+02]

[ 2.28708685e-02 2.76023112e-03 -1.15582009e+02 1.00000000e+00]]

[[ 3.62014810e-03 1.12572805e-04 2.03235270e-02 9.73656910e-02]

[ 2.27712894e-02 2.46572006e-03 -3.98419976e-01 9.11497397e-01]

[ 3.74477593e-05 2.32922416e-06 3.21441236e-04 1.49855279e-03]]

カメラ位置は、この画像に対応するカメラカリブレーション行列(K)がないのでわからないのでは無いかと思う

5.5.4 Implement a stereo version that uses sum of squared differences (SSD) instead of NCC using filtering the same way as in the NCC example.

NCCの代わりにSSD(自乗和)を使ってステレオ法を実装しろ

私の回答

from PIL import Image

from numpy import *

from pylab import *

import scipy.misc

from scipy import ndimage

import numpy as np

import stereo

im_l = array(Image.open('scene1.row3.col3.ppm').convert('L'), 'f')

im_r = array(Image.open('scene1.row3.col4.ppm').convert('L'), 'f')

def plane_sweep_sad(im_l, im_r, start, steps, wid):

""" Find disparity image using SAD (sum of absolute difference). """

m, n = im_l.shape

# arrays to hold the different sums

mean_l = np.zeros((m, n))

mean_r = np.zeros((m, n))

s = np.zeros((m, n))

s_l = np.zeros((m, n))

s_r = np.zeros((m, n))

# array to hold depth planes

dmaps = np.zeros((m, n, steps))

# compute mean of patch

ndimage.filters.uniform_filter(im_l, wid, mean_l)

ndimage.filters.uniform_filter(im_r, wid, mean_r)

# normalized images

#norm_l = im_l - mean_l

#norm_r = im_r - mean_r

norm_l = im_l

norm_r = im_r

# try different disparities

for displ in range(steps):

# move left image to the right, compute sums

# sum of nominator

ndimage.filters.uniform_filter(np.absolute(np.roll(norm_l, -displ-start)-norm_r), wid, s)

# sum of denominator

ndimage.filters.uniform_filter(

np.roll(norm_l, -displ-start)*np.roll(norm_l, -displ-start), wid, s_l)

# sum of denominator

ndimage.filters.uniform_filter(norm_r*norm_r, wid, s_r)

# store ncc scores

dmaps[:, :, displ] = s/np.sqrt(s_l*s_r)

# pick best depth for each pixel

return np.argmin(dmaps, axis=2)

def plane_sweep_sad_gauss(im_l, im_r, start, steps, wid):

""" Find disparity image using SAD

with Gaussian weighted neighborhoods. """

m, n = im_l.shape

# arrays to hold the different sums

mean_l = np.zeros((m, n))

mean_r = np.zeros((m, n))

s = np.zeros((m, n))

s_l = np.zeros((m, n))

s_r = np.zeros((m, n))

# array to hold depth planes

dmaps = np.zeros((m, n, steps))

# compute mean of patch

ndimage.filters.gaussian_filter(im_l, wid, 0, mean_l)

ndimage.filters.gaussian_filter(im_r, wid, 0, mean_r)

# normalized images

#norm_l = im_l - mean_l

#norm_r = im_r - mean_r

norm_l = im_l

norm_r = im_r

# try different disparities

for displ in range(steps):

# move left image to the right, compute sums

# sum of nominator

ndimage.filters.gaussian_filter(np.absolute(np.roll(norm_l, -displ-start)-norm_r), wid, 0, s)

# sum of denominator

ndimage.filters.gaussian_filter(

np.roll(norm_l, -displ-start)*np.roll(norm_l, -displ-start), wid, 0, s_l)

# sum of denominator

ndimage.filters.gaussian_filter(norm_r*norm_r, wid, 0, s_r)

# store ncc scores

dmaps[:, :, displ] = s/np.sqrt(s_l*s_r)

# pick best depth for each pixel

return np.argmin(dmaps, axis=2)

start = 4

steps = 12

wid = 9

res = stereo.plane_sweep_ncc(im_l, im_r, start, steps, wid)

start = 4

steps = 12

wid = 3

res2 = stereo.plane_sweep_gauss(im_l, im_r, start, steps, wid)

start = 4

steps = 12

wid = 9

res3 = plane_sweep_sad(im_l, im_r, start, steps, wid)

start = 4

steps = 12

wid = 3

res4 = plane_sweep_sad_gauss(im_l, im_r, start, steps, wid)

scipy.misc.imsave('depth.png', res)

figure(figsize=(16, 16))

gray()

subplot(3, 2, 1)

imshow(Image.open('scene1.row3.col3.ppm'))

axis('off')

subplot(3, 2, 2)

imshow(Image.open('scene1.row3.col4.ppm'))

axis('off')

subplot(3, 2, 3)

imshow(res)

axis('off')

subplot(3, 2, 4)

imshow(res2)

axis('off')

subplot(3, 2, 5)

imshow(res3)

axis('off')

subplot(3, 2, 6)

imshow(res4)

axis('off')

show()

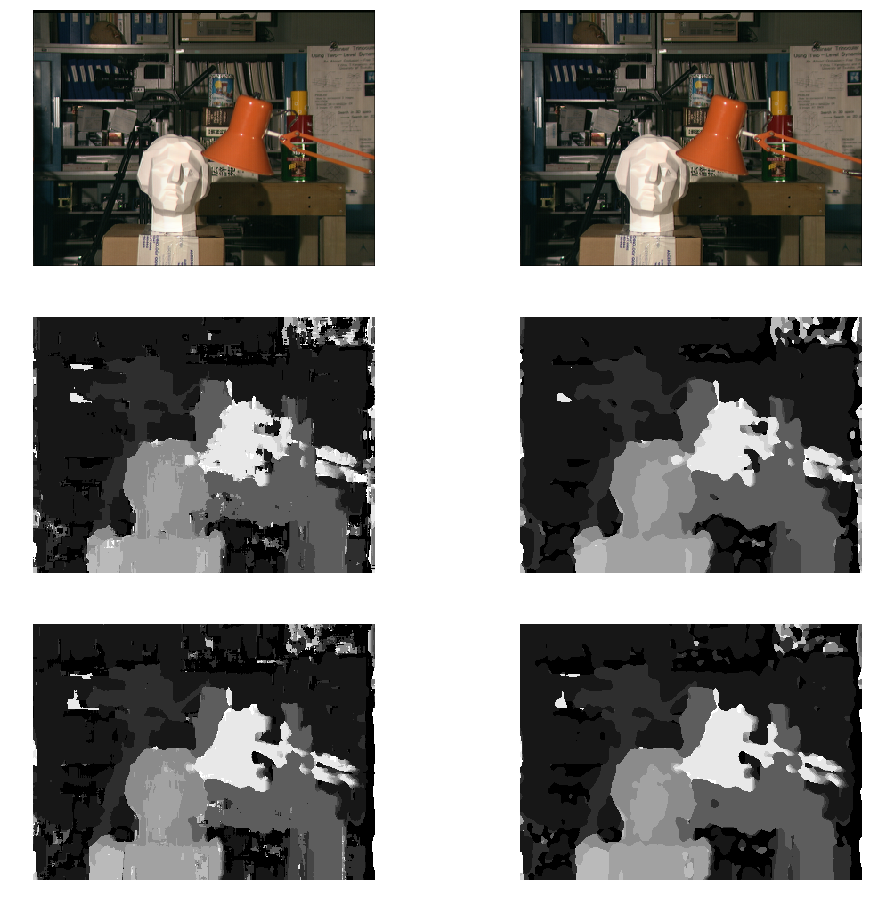

左がSSD(差の二乗和)、右がNCC(正規化相互相関)によるものですです。

SSDの方が、解像度が高く、代わりにひずみやノイズも多いようです。

5.5.5 Try smoothing the stereo depth maps using the ROF de-noising from Section 1.5. Experiment with the size of the cross-correlation patches to get sharp edges with noise levels that can be removed with smoothing.

1章で使ったROFで奥行きマップをなめらかにしろ。

私の回答

from PIL import Image

from numpy import *

from pylab import *

import scipy.misc

from scipy import ndimage

import numpy as np

import stereo

import rof

im_l = array(Image.open('scene1.row3.col3.ppm').convert('L'), 'f')

im_r = array(Image.open('scene1.row3.col4.ppm').convert('L'), 'f')

start = 4

steps = 12

wid = 9

res1 = stereo.plane_sweep_ncc(im_l, im_r, start, steps, wid)

res1_f, res1_t = rof.denoise(res1, res1)

start = 4

steps = 12

wid = 5

res2 = stereo.plane_sweep_ncc(im_l, im_r, start, steps, wid)

res2_f, res2_t = rof.denoise(res2, res2)

figure(figsize=(16, 16))

gray()

subplot(3, 2, 1)

imshow(Image.open('scene1.row3.col3.ppm'))

axis('off')

subplot(3, 2, 2)

imshow(Image.open('scene1.row3.col4.ppm'))

axis('off')

subplot(3, 2, 3)

imshow(res1)

axis('off')

subplot(3, 2, 4)

imshow(res1_f)

axis('off')

subplot(3, 2, 5)

imshow(res2)

axis('off')

subplot(3, 2, 6)

imshow(res2_f)

axis('off')

show()

左が平滑化前、右が平滑化後。中断はROFのウィンドウサイズ12,下段はウィンドウサイズ5。NCCの範囲を狭くしてノイズが増えても、ROFのウィンドウサイズを大きくすることで平滑化できた。

5.5.6 One way to improve the quality of the disparity maps is to compare the disparities from moving the left image to the right and the right image to the left and only keep the parts that are consistent. This will for example clean up the parts where there is occlusion. Implement this idea and compare the results to the one-directional plane sweeping.

左の画像を右に動かした時の視差と、右の画像を左に動かした時の視差が整合した時だけ保存する、という方法を試せ

私の回答

from PIL import Image

from numpy import *

from pylab import *

import scipy.misc

from scipy import ndimage

import numpy as np

import stereo

im_l = array(Image.open('scene1.row3.col3.ppm').convert('L'), 'f')

im_r = array(Image.open('scene1.row3.col4.ppm').convert('L'), 'f')

def plane_sweep_ncc_2dir(im_l, im_r, start, steps, wid):

""" Find disparity image using SAD (sum of absolute difference). """

m, n = im_l.shape

# arrays to hold the different sums

mean_l = np.zeros((m, n))

mean_r = np.zeros((m, n))

s1 = np.zeros((m, n))

s1_l = np.zeros((m, n))

s1_r = np.zeros((m, n))

s2 = np.zeros((m, n))

s2_l = np.zeros((m, n))

s2_r = np.zeros((m, n))

# array to hold depth planes

dmaps_lr = np.zeros((m, n, steps))

dmaps_rl = np.zeros((m, n, steps))

# compute mean of patch

ndimage.filters.uniform_filter(im_l, wid, mean_l)

ndimage.filters.uniform_filter(im_r, wid, mean_r)

# normalized images

norm_l = im_l - mean_l

norm_r = im_r - mean_r

# try different disparities

for displ in range(steps):

# move left image to the right, compute sums

# sum of nominator

ndimage.filters.uniform_filter(np.roll(norm_l, -displ-start)*norm_r, wid, s1)

ndimage.filters.uniform_filter(norm_l*np.roll(norm_r, +displ+start), wid, s2)

# sum of denominator

ndimage.filters.uniform_filter(

np.roll(norm_l, -displ-start)*np.roll(norm_l, -displ-start), wid, s1_l)

ndimage.filters.uniform_filter(

np.roll(norm_r, +displ+start)*np.roll(norm_r, +displ+start), wid, s2_r)

# sum of denominator

ndimage.filters.uniform_filter(norm_r*norm_r, wid, s1_r)

ndimage.filters.uniform_filter(norm_l*norm_l, wid, s2_l)

# store ncc scores

dmaps_lr[:, :, displ] = s1/np.sqrt(s1_l*s1_r)

dmaps_rl[:, :, displ] = s2/np.sqrt(s2_l*s2_r)

# pick best depth for each pixel

dl = np.argmax(dmaps_lr, axis=2)

dr = np.argmax(dmaps_rl, axis=2)

dd = np.absolute(dl - dr)

dl[dd>2] = 0 # if the difference is bigger than 2, ignore

return dl

def plane_sweep_ncc_gauss_2dir(im_l, im_r, start, steps, wid):

""" Find disparity image using SAD (sum of absolute difference). """

m, n = im_l.shape

# arrays to hold the different sums

mean_l = np.zeros((m, n))

mean_r = np.zeros((m, n))

s1 = np.zeros((m, n))

s1_l = np.zeros((m, n))

s1_r = np.zeros((m, n))

s2 = np.zeros((m, n))

s2_l = np.zeros((m, n))

s2_r = np.zeros((m, n))

# array to hold depth planes

dmaps_lr = np.zeros((m, n, steps))

dmaps_rl = np.zeros((m, n, steps))

# compute mean of patch

ndimage.filters.gaussian_filter(im_l, wid, 0, mean_l)

ndimage.filters.gaussian_filter(im_r, wid, 0, mean_r)

# normalized images

norm_l = im_l - mean_l

norm_r = im_r - mean_r

# try different disparities

for displ in range(steps):

# move left image to the right, compute sums

# sum of nominator

ndimage.filters.gaussian_filter(np.roll(norm_l, -displ-start)*norm_r, wid, 0, s1)

ndimage.filters.gaussian_filter(norm_l*np.roll(norm_r, +displ+start), wid, 0, s2)

# sum of denominator

ndimage.filters.gaussian_filter(

np.roll(norm_l, -displ-start)*np.roll(norm_l, -displ-start), wid, 0, s1_l)

ndimage.filters.gaussian_filter(

np.roll(norm_r, +displ+start)*np.roll(norm_r, +displ+start), wid, 0, s2_r)

# sum of denominator

ndimage.filters.gaussian_filter(norm_r*norm_r, wid, 0, s1_r)

ndimage.filters.gaussian_filter(norm_l*norm_l, wid, 0, s2_l)

# store ncc scores

dmaps_lr[:, :, displ] = s1/np.sqrt(s1_l*s1_r)

dmaps_rl[:, :, displ] = s2/np.sqrt(s2_l*s2_r)

# pick best depth for each pixel

dl = np.argmax(dmaps_lr, axis=2)

dr = np.argmax(dmaps_rl, axis=2)

dd = np.absolute(dl - dr)

dl[dd>2] = 0 # if the difference is bigger than 2, ignore

# you could put -1 here, as invalid value, and add post processing to fill this area

return dl

start = 4

steps = 12

wid = 9

res = stereo.plane_sweep_ncc(im_l, im_r, start, steps, wid)

start = 1

steps = 12

wid = 3

res2 = stereo.plane_sweep_gauss(im_l, im_r, start, steps, wid)

start = 4

steps = 12

wid = 9

res3 = plane_sweep_ncc_2dir(im_l, im_r, start, steps, wid)

start = 1

steps = 12

wid = 3

res4 = plane_sweep_ncc_gauss_2dir(im_l, im_r, start, steps, wid)

figure(figsize=(16, 16))

gray()

subplot(3, 2, 1)

imshow(Image.open('scene1.row3.col3.ppm'))

axis('off')

subplot(3, 2, 2)

imshow(Image.open('scene1.row3.col4.ppm'))

axis('off')

subplot(3, 2, 3)

imshow(res)

axis('off')

subplot(3, 2, 4)

imshow(res2)

axis('off')

subplot(3, 2, 5)

imshow(res3)

axis('off')

subplot(3, 2, 6)

imshow(res4)

axis('off')

show()

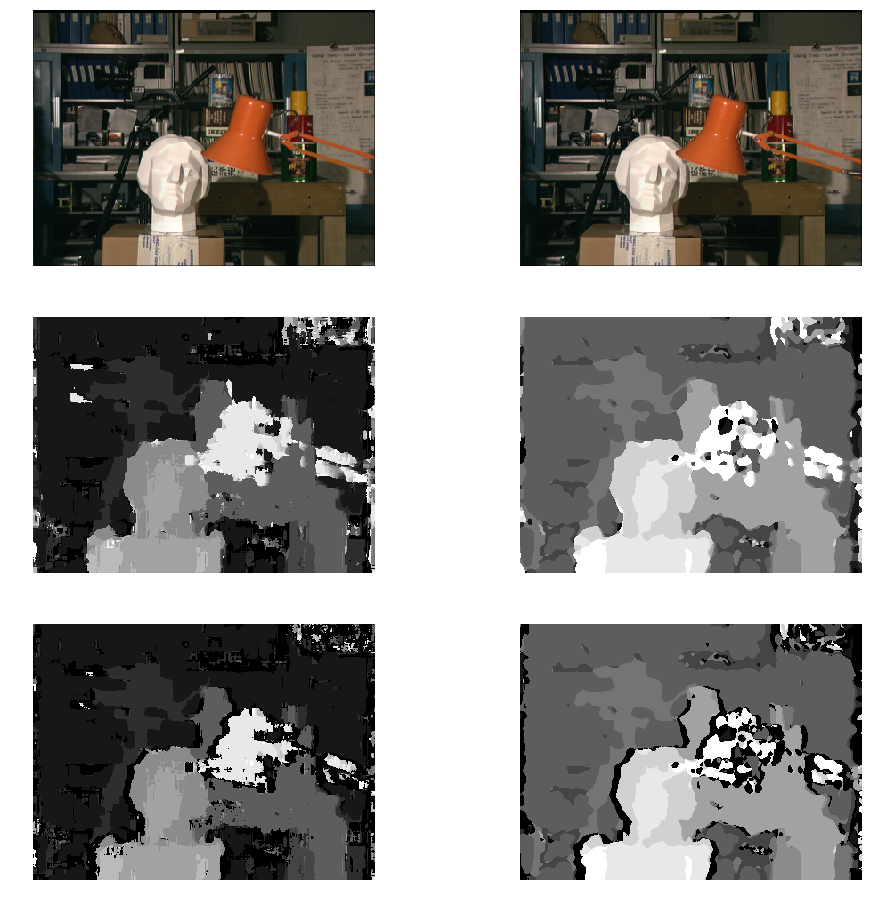

中断が一方向のみ、下段が双方向。左は平滑化なし、右は平滑化あり。

双方向では黒い部分(判定できなかった部分)が多いので、後処理が必要。

他はあまり性能があがったようには見えない。

両方向の結果が整合しない場合の処理を考える必要がありそうだ

5.5.7 The New York Public Library has many old historic stereo photographs. Browse the gallery at http://stereo.nypl.org/gallery and download some images you like (you can right click and save JPEGs). The images should be rectified already. Cut the image in two parts and try the dense depth reconstruction code.

ニューヨーク公共図書館の歴史的ステレオ写真から選び、奥行き判定せよ

私の回答

画像が標準的でないので苦労しました。画像を手作業で分割し、left/rightに分けてから処理を行いました。

from PIL import Image

from numpy import *

from pylab import *

import scipy.misc

from scipy import ndimage

import numpy as np

import stereo

# Images downloaded from http://stereo.nypl.org/view/91909, and cropped to left and right images

im_l = array(Image.open('NY_left.png').convert('L'), 'f')

im_r = array(Image.open('NY_right.png').convert('L'), 'f')

start = 10

steps = 80

wid = 21

res1 = stereo.plane_sweep_ncc(im_l, im_r, start, steps, wid)

start = 3

steps = 50

wid = 7

res2 = stereo.plane_sweep_gauss(im_l, im_r, start, steps, wid)

figure(figsize=(16, 16))

gray()

subplot(3, 2, 1)

imshow(Image.open('NY_left.png'))

axis('off')

subplot(3, 2, 2)

imshow(Image.open('NY_right.png'))

axis('off')

subplot(3, 2, 3)

imshow(res1)

axis('off')

subplot(3, 2, 4)

imshow(res2)

axis('off')

show()

下段が奥行き。左は平滑化なし。右は平滑化あり。

さすがに古い画像なのでいろいろむずかしかったです。位置の調整なども必要です。

5章終わり

これで5章終わりです。半分終わった!