Introduction

Hello, this is Medi from the streampack team.

https://cloudpack.jp/service/option/streampack.html

Copyrights

Copyleft illustration: poison-bottle-medicine-old-symbol-1481596

Goal

Learn how to use TensorFlow.js through a simple example.

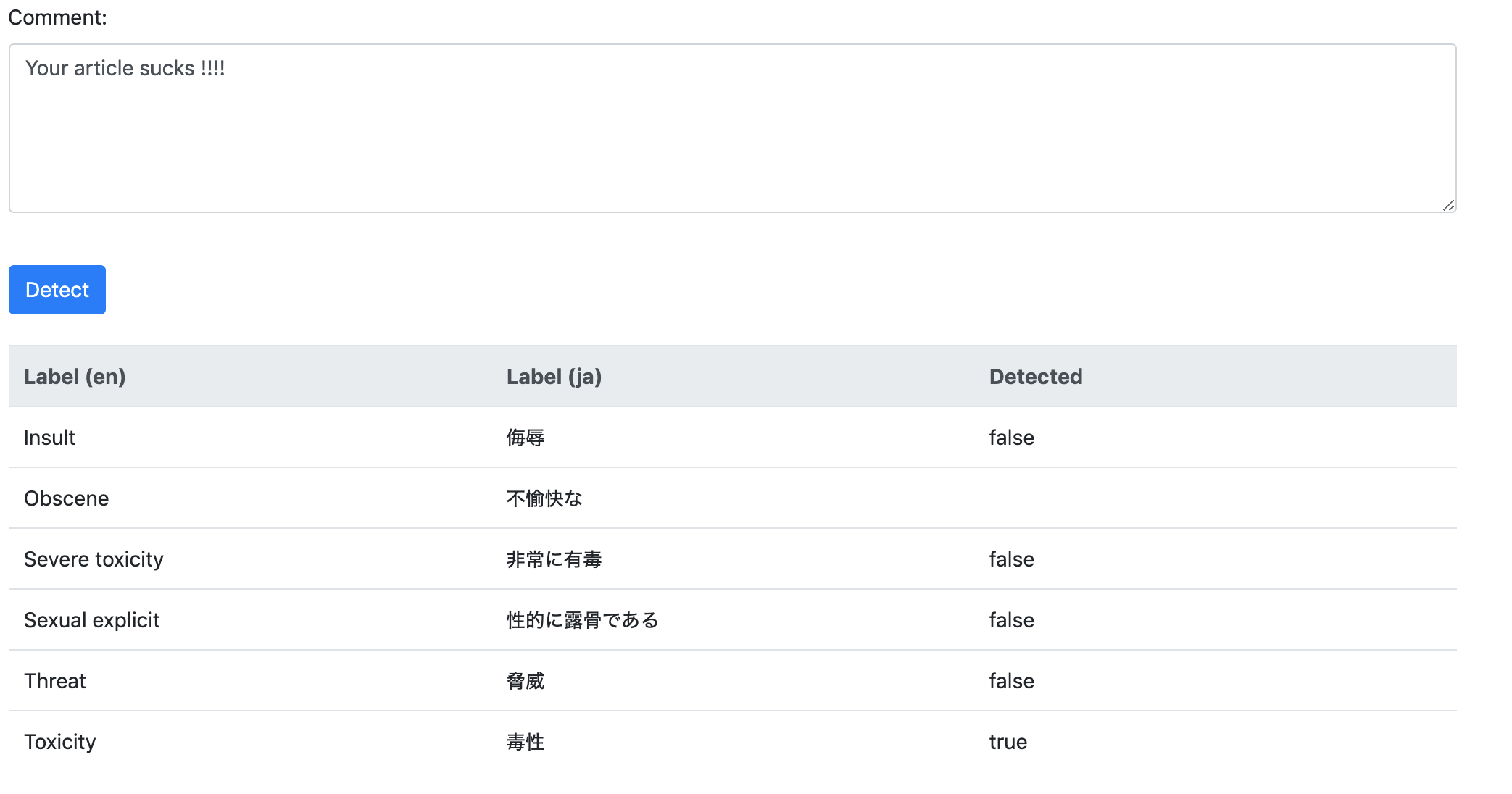

This model can detect seven types of toxic comments:

| English | Japanese |
|---|---|
| Identity attack | アイデンティティへの攻撃 |
| Insult | 侮辱 |
| Obscene | わいせつな |
| Severe toxicity | 非常に有毒 |
| Sexual explicit | 性的露骨 |
| Threat | 脅威 |
| Toxicity | 毒性 |
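In the model's output these seven labels appear as snake_case identifiers such as `identity_attack` (see the sample result below). A minimal sketch of a lookup from those identifiers to English display names; `LABELS` and `displayName` are hypothetical names of our own, not part of the `@tensorflow-models/toxicity` API.

```javascript
// Hypothetical lookup table (not part of @tensorflow-models/toxicity):
// maps the label ids that appear in the model's output to English names.
const LABELS = {
  identity_attack: "Identity attack",
  insult: "Insult",
  obscene: "Obscene",
  severe_toxicity: "Severe toxicity",
  sexual_explicit: "Sexual explicit",
  threat: "Threat",
  toxicity: "Toxicity",
};

// Returns the display name for a label id, or the raw id if it is unknown.
function displayName(label) {
  return Object.prototype.hasOwnProperty.call(LABELS, label) ? LABELS[label] : label;
}

console.log(Object.keys(LABELS).length); // 7
console.log(displayName("sexual_explicit")); // Sexual explicit
```

Keeping the ids as keys means a UI can be driven directly by the `label` field of each prediction instead of a hand-written `switch`.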

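Implementation

We will learn how to detect toxic comments with TensorFlow.js. For each label, the detector reports:

- the label
- the raw probabilities (values between 0 and 1)
- whether the label matched (`match`)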

Sample result

```
...
{
  "label": "identity_attack",
  "results": [{
    "probabilities": [0.9659664034843445, 0.03403361141681671],
    "match": false
  }]
},
...
```
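To make the two probabilities easier to read, a small formatter can turn one prediction into a single line. This is a sketch under an assumption: we take `probabilities` to be ordered [label does not apply, label applies], which is consistent with the sample (a high first value together with `match: false`). `describe` is a hypothetical helper, not a library function.

```javascript
// Hypothetical formatter for one element of the classify() output.
// Assumes probabilities = [p(label does not apply), p(label applies)].
function describe(prediction, inputIndex = 0) {
  const { probabilities, match } = prediction.results[inputIndex];
  const percent = (probabilities[1] * 100).toFixed(1);
  return `${prediction.label}: ${percent}% (match: ${match})`;
}

// The object from the sample result above.
const sample = {
  label: "identity_attack",
  results: [{ probabilities: [0.9659664034843445, 0.03403361141681671], match: false }],
};

console.log(describe(sample)); // identity_attack: 3.4% (match: false)
```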

From the model's homepage:

> `predictions` is an array of objects, one for each prediction head, that contains the raw probabilities for each input along with the final prediction in `match` (either `true` or `false`). If neither prediction exceeds the threshold, `match` is `null`.
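The rule quoted above can be restated as a small function. This is our own sketch of the described behaviour, not the library's source code; `matchFromProbabilities` is a hypothetical name, and we again assume the probabilities are ordered [does not apply, applies], as the sample result suggests.

```javascript
// Hypothetical restatement of the homepage's rule, not the library's code.
// probabilities = [p(label does not apply), p(label applies)] (assumed order).
function matchFromProbabilities([pNo, pYes], threshold) {
  if (pYes > threshold) return true;
  if (pNo > threshold) return false;
  return null; // neither prediction exceeds the threshold
}

const threshold = 0.8; // same value as the page's code uses
console.log(matchFromProbabilities([0.9659664034843445, 0.03403361141681671], threshold)); // false
console.log(matchFromProbabilities([0.15, 0.85], threshold)); // true
console.log(matchFromProbabilities([0.6, 0.4], threshold)); // null
```

Assuming the two values sum to 1 (as they do in the sample), a threshold of 0.5 or higher lets at most one of them exceed it, so the order of the two checks does not matter; a higher threshold simply produces more `null` results.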

Code

```html
<!DOCTYPE html>
<html lang="en">
<head>
  <meta charset="utf-8" />
  <title>Toxic comments detector</title>
  <!-- Bootstrap CSS -->
  <link rel="stylesheet" href="https://stackpath.bootstrapcdn.com/bootstrap/4.3.1/css/bootstrap.min.css" crossorigin="anonymous">
  <!-- jQuery & Bootstrap JS -->
  <script src="https://code.jquery.com/jquery-3.3.1.slim.min.js" crossorigin="anonymous"></script>
  <script src="https://cdnjs.cloudflare.com/ajax/libs/popper.js/1.14.7/umd/popper.min.js" crossorigin="anonymous"></script>
  <script src="https://stackpath.bootstrapcdn.com/bootstrap/4.3.1/js/bootstrap.min.js" crossorigin="anonymous"></script>
  <!-- Load TensorFlow.js -->
  <script src="https://cdn.jsdelivr.net/npm/@tensorflow/tfjs"></script>
  <!-- Load the pre-trained model. -->
  <script src="https://cdn.jsdelivr.net/npm/@tensorflow-models/toxicity"></script>
  <style>
    body {
      text-align: left;
    }
    #main_container {
      display: inline-block;
      margin: 0 auto;
      position: relative;
    }
    #top_div {
      padding: 1em;
    }
    #loader_div {
      display: inline-block;
    }
    #spinner_div {
      display: inline-block;
    }
  </style>
</head>
<body>
  <div>
    <div id="top_div">
      <h2>Toxic comments detector in the browser (English language)</h2>
      <div id="loader_div">
        <p id="loader_info">Loading model...</p>
      </div>
      <div id="spinner_div" class="spinner-border" role="status">
        <span class="sr-only">Loading...</span>
      </div>
      <br>
    </div>
    <br>
    <div class="container" id="main_container">
      <div class="form-group">
        <label for="comment">Comment:</label>
        <textarea class="form-control" rows="5" id="comment"></textarea>
      </div>
      <br>
      <div>
        <button id="detectionBtn" type="button" class="btn btn-primary" onClick="handleDetection()" disabled>Detect</button>
      </div>
      <br>
      <table class="table">
        <thead class="thead-light">
          <tr>
            <th scope="col" style="width: 30%">Label (en)</th>
            <th scope="col" style="width: 30%">Label (ja)</th>
            <th scope="col" style="width: 30%">Detected</th>
          </tr>
        </thead>
        <tbody>
          <!-- Each "Detected" cell id is the label name returned by the model. -->
          <tr>
            <td>Identity attack</td>
            <td>アイデンティティへの攻撃</td>
            <td id="identity_attack"></td>
          </tr>
          <tr>
            <td>Insult</td>
            <td>侮辱</td>
            <td id="insult"></td>
          </tr>
          <tr>
            <td>Obscene</td>
            <td>わいせつな</td>
            <td id="obscene"></td>
          </tr>
          <tr>
            <td>Severe toxicity</td>
            <td>非常に有毒</td>
            <td id="severe_toxicity"></td>
          </tr>
          <tr>
            <td>Sexual explicit</td>
            <td>性的露骨</td>
            <td id="sexual_explicit"></td>
          </tr>
          <tr>
            <td>Threat</td>
            <td>脅威</td>
            <td id="threat"></td>
          </tr>
          <tr>
            <td>Toxicity</td>
            <td>毒性</td>
            <td id="toxicity"></td>
          </tr>
        </tbody>
      </table>
    </div>
  </div>
  <script type="text/javascript">
    // Model instance, set once toxicity.load() resolves.
    var globalModel;
    var isModelLoaded = false;

    // Load the model. Users optionally pass in a threshold and an array of
    // labels to include.
    const threshold = 0.8;
    toxicity.load(threshold).then(model => {
      globalModel = model;
      isModelLoaded = true;
      $("#detectionBtn").prop('disabled', false);
      updateTitle();
    }).catch(err => {
      console.error(err);
      $("#loader_info").text("Failed to load the model");
      $("#spinner_div").removeClass("spinner-border");
    });

    function handleDetection() {
      var comment = $("#comment").val().trim();
      if (!isModelLoaded || comment === "") {
        return; // nothing to classify yet
      }
      $("#detectionBtn").prop('disabled', true);
      clearFields();
      // classify() takes an array of sentences; we send a single comment.
      globalModel.classify([comment]).then(predictions => {
        console.log("[DEBUG] predictions: " + JSON.stringify(predictions));
        // One prediction per label; write its match (true, false or null)
        // into the cell whose id is the label name.
        predictions.forEach(itm => {
          $("#" + itm.label).text(String(itm.results[0].match));
        });
      }).catch(err => {
        console.error(err);
      }).finally(() => {
        $("#detectionBtn").prop('disabled', false);
      });
    }

    function updateTitle() {
      var title = document.getElementById("loader_info");
      title.textContent = "Model loaded successfully";
      var element = document.getElementById("spinner_div");
      element.classList.remove("spinner-border");
    }

    function clearFields() {
      $("#identity_attack").text("");
      $("#insult").text("");
      $("#obscene").text("");
      $("#severe_toxicity").text("");
      $("#sexual_explicit").text("");
      $("#threat").text("");
      $("#toxicity").text("");
    }
  </script>
</body>
</html>
```
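The page sends a single comment, but `classify` accepts an array of sentences, and per the homepage quote each label's `results` holds the probabilities for each input. Assuming those results are in the same order as the input sentences, a hypothetical helper `perSentence` can regroup the output by sentence. It works on plain data, so the example uses hand-written objects in that shape rather than a real model call.

```javascript
// Hypothetical helper: regroups classify() output (one object per label,
// each holding one result per input sentence) into one object per sentence.
function perSentence(sentences, predictions) {
  return sentences.map((text, i) => {
    const matches = {};
    for (const { label, results } of predictions) {
      matches[label] = results[i].match;
    }
    return { text, matches };
  });
}

// Hand-written data in the classify() output shape (not real model output).
const sentences = ["you are great", "you are an idiot"];
const predictions = [
  { label: "insult", results: [{ match: false }, { match: true }] },
  { label: "threat", results: [{ match: false }, { match: null }] },
];

for (const { text, matches } of perSentence(sentences, predictions)) {
  console.log(text, matches);
}
```

In the page, the same helper could be applied to the result of `globalModel.classify(sentences)` when several comments are checked in one call.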

Demo

Sources

https://github.com/tensorflow/tfjs-models/tree/master/toxicity

https://pixabay.com/illustrations/poison-bottle-medicine-old-symbol-1481596/