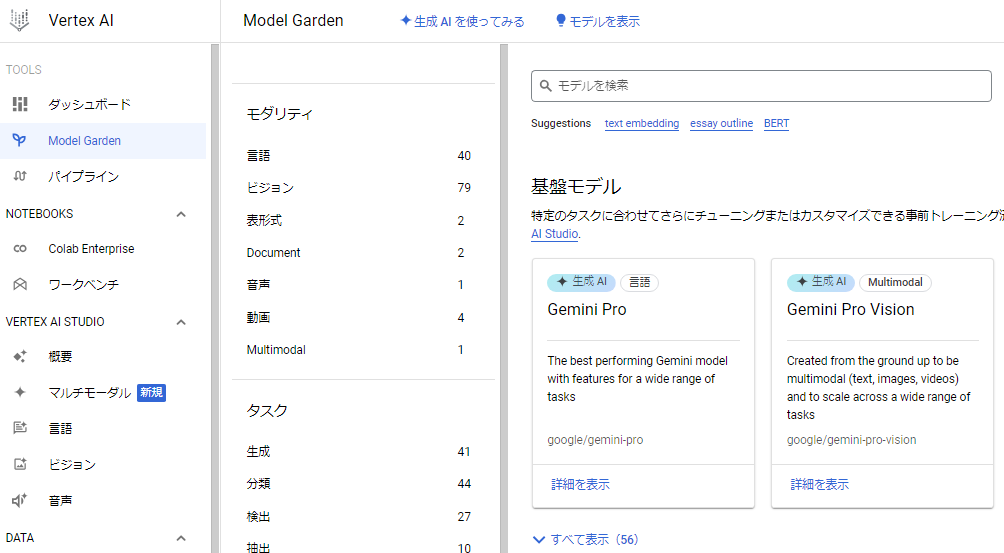

Gemini Pro has arrived on Vertex AI!

Gemini Pro's availability was announced on 12/13. Perhaps because of the time difference with Japan, it only became usable for me on 12/14, so I tried it out.

Trying the API

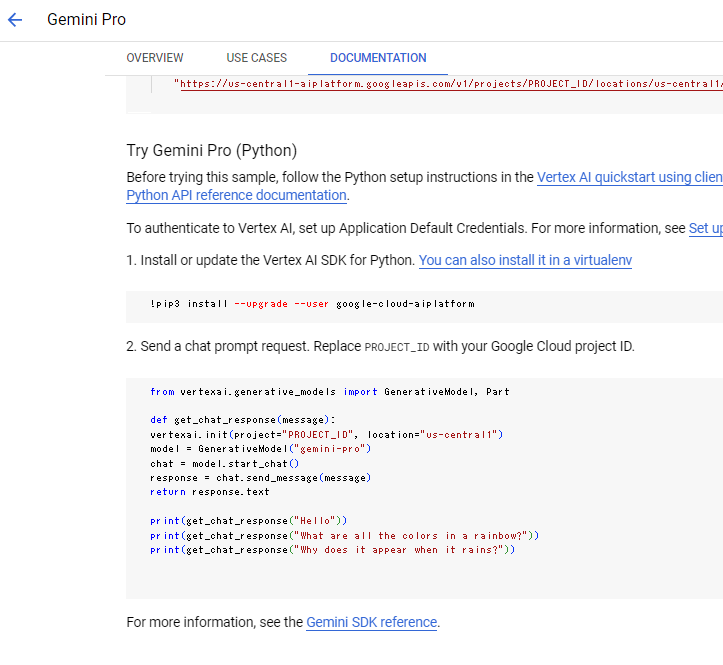

The Gemini Pro detail page includes sample code, but it didn't work as-is, so here is the code I verified.

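You need to install the google-cloud-aiplatform library, but the page doesn't say which version. It seems to require 1.38.0 or later. The latest release at the time was 1.38.1, so I installed that.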

```
!pip install google-cloud-aiplatform==1.38.1
```
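Since the page doesn't state a minimum version, checking what is actually installed can save you from a confusing ImportError later. This is a minimal sketch, assuming 1.38.0 is the minimum (my inference above, not an official figure). It only handles plain numeric versions like 1.38.1; pre-release strings such as 1.38.1rc0 would need `packaging.version` instead. The helper names are hypothetical.

```python
from importlib.metadata import PackageNotFoundError, version

# Assumed minimum version (inferred in this article, not documented officially)
MIN_AIPLATFORM = "1.38.0"


def parse_version(text):
    """Turn a plain numeric release string like "1.38.1" into (1, 38, 1)."""
    return tuple(int(part) for part in text.split("."))


def meets_minimum(installed, minimum=MIN_AIPLATFORM):
    """Compare versions as integer tuples, so "1.38.10" > "1.38.9" works correctly."""
    return parse_version(installed) >= parse_version(minimum)


def check_aiplatform():
    """Return True if google-cloud-aiplatform is installed and new enough."""
    try:
        installed = version("google-cloud-aiplatform")
    except PackageNotFoundError:
        return False
    return meets_minimum(installed)
```

Running `print(check_aiplatform())` in the notebook right after the install shows whether the environment should be able to import Gemini support.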

Set up the credentials.

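```python
import os

# Path to the service-account key file (placeholder)
os.environ['GOOGLE_APPLICATION_CREDENTIALS'] = './xxx.json'

import google.auth

# Load the credentials and the project ID associated with them
credentials, project_id = google.auth.default()
```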
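A relative path like `./xxx.json` is resolved against whatever the working directory is when the credentials are read, which is easy to get wrong in a notebook. Below is a small sketch that checks the key file exists and stores an absolute path. `configure_credentials` is a hypothetical helper, not part of google-auth.

```python
import os
from pathlib import Path


def configure_credentials(key_path):
    """Point GOOGLE_APPLICATION_CREDENTIALS at a service-account key file.

    Fails early with a clear message if the file is missing, rather than
    letting google.auth.default() fail later. Returns the absolute path set.
    """
    path = Path(key_path).expanduser().resolve()
    if not path.is_file():
        raise FileNotFoundError(f"credential file not found: {path}")
    # Store an absolute path so a later change of working directory doesn't break it
    os.environ["GOOGLE_APPLICATION_CREDENTIALS"] = str(path)
    return str(path)
```

Call `configure_credentials("./xxx.json")` before `google.auth.default()`. For a service-account key, the `project_id` returned by `google.auth.default()` should be the key's project. You can then probably pass it as `vertexai.init(project=project_id, ...)` instead of hard-coding the string.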

Importing from vertexai.generative_models, as the sample does, raises the following error:

```
ImportError                               Traceback (most recent call last)
Cell In[10], line 1
----> 1 from vertexai.generative_models import GenerativeModel, Part
      2 #from vertexai.preview.generative_models import GenerativeModel, Part
      4 def get_chat_response(message):

ImportError: cannot import name 'GenerativeModel' from 'vertexai.generative_models' (unknown location)
```

So I import from vertexai.preview.generative_models instead.

```python
import vertexai
#from vertexai.generative_models import GenerativeModel, Part
from vertexai.preview.generative_models import GenerativeModel, Part


def get_chat_response(message):
    # Replace PROJECT_ID with your own project ID
    vertexai.init(project="PROJECT_ID", location="us-central1")
    model = GenerativeModel("gemini-pro")
    # A new chat session is started on every call
    chat = model.start_chat()
    response = chat.send_message(message)
    return response.text
```
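If you'd rather not hard-code either path, one option is to try the non-preview module first and fall back to preview. Below is a generic sketch using `importlib`; `import_first` is a hypothetical helper. It catches `AttributeError` as well as `ImportError`. The "(unknown location)" in the error above suggests the module itself imports but doesn't expose `GenerativeModel`, and in that case `importlib.import_module` succeeds and only the attribute lookup fails.

```python
import importlib


def import_first(candidates):
    """Return the first attribute importable from (module_path, name) pairs, tried in order."""
    errors = []
    for module_path, name in candidates:
        try:
            module = importlib.import_module(module_path)
            return getattr(module, name)
        except (ImportError, AttributeError) as exc:
            # Remember why this candidate failed, then try the next one
            errors.append(f"{module_path}.{name}: {exc}")
    raise ImportError("none of the candidates could be imported:\n" + "\n".join(errors))
```

Usage would look like `GenerativeModel = import_first([("vertexai.generative_models", "GenerativeModel"), ("vertexai.preview.generative_models", "GenerativeModel")])`. Presumably the first path will start working in a later SDK release without any code change.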

Then run it.

```python
print(get_chat_response("Hello"))
print(get_chat_response("What are all the colors in a rainbow?"))
print(get_chat_response("Why does it appear when it rains?"))
```

Output (using the sample's queries):

```
Hello! How may I assist you?

1. Red
2. Orange
3. Yellow
4. Green
5. Blue
6. Indigo
7. Violet

Rainbows appear when sunlight strikes raindrops at a specific angle, causing the light to be refracted (bent), reflected, and refracted again. The light is separated into its component colors (red, orange, yellow, green, blue, indigo, and violet) due to the different wavelengths of light being refracted at different angles.

Here's a detailed explanation of the process:

1. Sunlight enters a raindrop.
2. The light is refracted, or bent, as it enters the raindrop due to the change in the speed of light as it passes from air to water.
3. The light then strikes the back of the raindrop and is reflected.
4. As the light exits the raindrop, it is refracted again, and the different colors of light are separated due to the different wavelengths of light being refracted at different angles.
5. The refracted light then reaches the observer's eye, who sees the separated colors as a rainbow.

The angle of the sun, the position of the observer, and the size and shape of the raindrops all play a role in the formation of a rainbow. Rainbows are typically seen opposite the sun, and the center of the rainbow is always directly opposite the sun. The larger the raindrops, the brighter and more distinct the rainbow will be.

Rainbows are a beautiful natural phenomenon that can be seen all over the world. They are a reminder of the beauty and wonder of the natural world and a symbol of hope and new beginnings.
```
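Note that `get_chat_response` calls `start_chat()` on every call, so each question goes to a fresh session. From the model's side, the "it" in the third question is not connected to the rainbow question, even though the answer turned out to be about rainbows. A minimal sketch that keeps one session for a multi-turn exchange is below. `Conversation` is a hypothetical wrapper, and it assumes only what the code above already uses: a `start_chat()` returning an object with `send_message()` whose result has `.text`.

```python
class Conversation:
    """Keep one chat session alive so follow-up questions share context."""

    def __init__(self, start_chat):
        # start_chat: a zero-argument callable, e.g. GenerativeModel("gemini-pro").start_chat
        self._chat = start_chat()  # created once and reused for every turn

    def ask(self, message):
        """Send one turn on the shared session and return the reply text."""
        return self._chat.send_message(message).text
```

With the real SDK this would be `conversation = Conversation(GenerativeModel("gemini-pro").start_chat)` after `vertexai.init(...)`, followed by `conversation.ask(...)` for each of the three questions. Passing the factory in, instead of creating the model inside the class, also makes it easy to test without calling the API.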

Multimodal (Gemini Pro Vision)

This sample doesn't have the mismatch described above. It already imports from preview, so it runs without any problems.

```python
import vertexai
from vertexai.preview.generative_models import GenerativeModel, Image

PROJECT_ID = "PROJECT_ID"  # replace with your project ID
REGION = "us-central1"

vertexai.init(project=PROJECT_ID, location=REGION)

# Load a local image to send along with the prompt
IMAGE_FILE = "./cat.jpg"
image = Image.load_from_file(IMAGE_FILE)

# The vision model accepts a list mixing text and images
generative_multimodal_model = GenerativeModel("gemini-pro-vision")
response = generative_multimodal_model.generate_content(["What is shown in this image?", image])

print(response)
```

I placed a cat image in the directory and passed it in, and the model correctly described it as a picture of a kitten.

```
candidates {
  content {
    role: "model"
    parts {
      text: " This is a picture of a kitten."
    }
  }
  finish_reason: STOP
  safety_ratings {
    category: HARM_CATEGORY_HARASSMENT
    probability: NEGLIGIBLE
  }
  safety_ratings {
    category: HARM_CATEGORY_HATE_SPEECH
    probability: NEGLIGIBLE
  }
  safety_ratings {
    category: HARM_CATEGORY_SEXUALLY_EXPLICIT
    probability: NEGLIGIBLE
  }
  safety_ratings {
    category: HARM_CATEGORY_DANGEROUS_CONTENT
    probability: NEGLIGIBLE
  }
}
usage_metadata {
  prompt_token_count: 265
  candidates_token_count: 8
  total_token_count: 273
}
```
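`print(response)` dumps the whole structure. If you only need the plain text, `response.text` (as in `get_chat_response`) is enough. To also detect a cut-off answer, the sketch below reads the first candidate directly. It joins the parts, strips the leading space visible in the text above, and flags truncation via `finish_reason`. It relies on the field names shown in the output (`candidates`, `content.parts`, `text`, `finish_reason`). Two points are assumptions about the SDK rather than confirmed behavior: that `finish_reason` is an enum whose `.name` is `"MAX_TOKENS"` when the output limit is hit, and the helper name itself. To be safe, the code also accepts a plain string.

```python
def candidate_summary(response):
    """Return (text, truncated) for the first candidate of a generate_content response."""
    candidate = response.candidates[0]
    # Concatenate the text of every part; non-text parts contribute nothing
    text = "".join(getattr(part, "text", "") for part in candidate.content.parts)
    reason = candidate.finish_reason
    # Enum members expose .name; fall back to str() for plain values
    reason_name = getattr(reason, "name", str(reason))
    return text.strip(), reason_name == "MAX_TOKENS"
```

For the response above, this should give `("This is a picture of a kitten.", False)`, because `finish_reason` is `STOP`.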

That's all. I mostly just ran the samples, but I got stuck in a couple of places, so I'm leaving these notes.

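Being able to try multimodal features through an API, and to use an LLM reportedly on par with GPT-4 on GCP, should start to pay off from here. I plan to update this once it can be used from LiteLLM or LangChain.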

Update 2023/12/17 (using Gemini from LangChain)

LangChain's Vertex AI page now documents a Gemini Pro setup, so I'm adding it here.

First, upgrade LangChain to the latest version (0.0.350 at the time of writing). Older versions raise a model error:

```
Unknown model publishers/google/models/gemini-pro; {'gs://google-cloud-aiplatform/schema/predict/instance/text_generation_1.0.0.yaml': <class 'vertexai.preview.language_models._PreviewTextGenerationModel'>} (type=value_error)
```

```
pip install langchain==0.0.350
```

Set up the credentials as described above.

After that, you can specify "gemini-pro" and use it like any other LangChain model:

```python
from langchain.llms import VertexAI

llm = VertexAI(model_name="gemini-pro")
result = llm.invoke("Write a ballad about LangChain")
print(result)
```

```
In a realm where code entwines, Where innovation brightly shines, A tale unfolds, a ballad grand, Of LangChain, a marvel in this land.

From humble seeds, it took its flight, A vision born, a beacon of light. With every line, a story grew, In LangChain's embrace, dreams came true.

Its syntax, elegant and refined, A symphony of logic, intertwined. Like notes on a staff, they dance and soar, Creating wonders never seen before.

Within its framework, worlds arise, Where possibilities touch the skies. From games
```

For multimodal input, pass text_message and image_message together as the content of a HumanMessage.

```python
from langchain.chat_models import ChatVertexAI
from langchain.schema.messages import HumanMessage

# Vision model; "gemini-ultra-vision" did not appear to be available on Vertex AI yet
llm = ChatVertexAI(model_name="gemini-pro-vision")

image_message = {
    "type": "image_url",
    "image_url": {"url": "image_example.jpg"},  # local image path
}
text_message = {
    "type": "text",
    "text": "What is shown in this image?",
}

# One HumanMessage whose content mixes a text part and an image part
message = HumanMessage(content=[text_message, image_message])

output = llm([message])
print(output.content)
```
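The example passes a local file path as the image URL. As far as I can tell, the LangChain integration at this version also accepts `gs://` URIs and base64 `data:image/...` URLs, but please check that against your installed version. If it does, the sketch below builds the same content list from a local image encoded as a data URL. Both `image_data_url` and `multimodal_content` are hypothetical helpers.

```python
import base64
import mimetypes
from pathlib import Path


def image_data_url(path):
    """Encode a local image file as a base64 data URL (data:image/...;base64,...)."""
    mime, _ = mimetypes.guess_type(path)
    if mime is None or not mime.startswith("image/"):
        raise ValueError(f"not a recognized image type: {path}")
    encoded = base64.b64encode(Path(path).read_bytes()).decode("ascii")
    return f"data:{mime};base64,{encoded}"


def multimodal_content(prompt, image_url):
    """Build a HumanMessage content list: one text part followed by one image part."""
    return [
        {"type": "text", "text": prompt},
        {"type": "image_url", "image_url": {"url": image_url}},
    ]
```

Usage would be `HumanMessage(content=multimodal_content("What is shown in this image?", image_data_url("./cat.jpg")))`.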

Gemini's token limits are:

- Gemini Pro: 32k (output 8k)
- Gemini Pro Vision: 6k (output 2k)

With LangChain, the output limit seems to default to 2k, which cuts answers off. Raising max_output_tokens lets the model produce the full answer without being truncated.

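```python
from langchain.llms import VertexAI

llm = VertexAI(
    model_name="gemini-pro",
    max_output_tokens=8192,  # raise the output limit so long answers aren't cut off
)
```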
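To keep max_output_tokens consistent per model, a small table-driven helper can fill it in. The limits below are just the output figures listed above, not values fetched from the API, and reading "2k" as 2048 is my assumption. `llm_kwargs` is a hypothetical helper name.

```python
from typing import Optional

# Output-token limits as listed in this article ("2k" assumed to mean 2048)
MAX_OUTPUT_TOKENS = {
    "gemini-pro": 8192,
    "gemini-pro-vision": 2048,
}


def llm_kwargs(model_name: str, requested: Optional[int] = None) -> dict:
    """Keyword arguments for VertexAI/ChatVertexAI with max_output_tokens clamped to the model's limit."""
    limit = MAX_OUTPUT_TOKENS[model_name]
    # Use the full limit unless a smaller value is explicitly requested
    tokens = limit if requested is None else min(requested, limit)
    return {"model_name": model_name, "max_output_tokens": tokens}
```

`VertexAI(**llm_kwargs("gemini-pro"))` builds the same model as the snippet above. `ChatVertexAI(**llm_kwargs("gemini-pro-vision"))` should do the same for the vision model.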