Camera usage permission

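Add NSCameraUsageDescription to Info.plist. Its value is the message shown to the user in the permission dialog. On macOS 10.14 and later the app is terminated if it accesses the camera without this key. If the app is sandboxed, also enable Camera under App Sandbox (entitlement com.apple.security.device.camera).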
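Besides the Info.plist entry, the app can check the authorization status before starting capture and ask the user when no decision has been made yet. The sketch below assumes macOS 10.14 or later and uses `AVCaptureDevice.authorizationStatus(for:)` and `AVCaptureDevice.requestAccess(for:completionHandler:)`. The function name `requestCameraAccess` is hypothetical.

```swift
import AVFoundation

/// Hypothetical helper: reports whether capture may start, asking the user if needed.
func requestCameraAccess(completion: @escaping (Bool) -> Void) {
    switch AVCaptureDevice.authorizationStatus(for: .video) {
    case .authorized:
        // Already allowed: completion runs synchronously on the caller's thread
        completion(true)
    case .notDetermined:
        // Shows the system dialog with the NSCameraUsageDescription text
        AVCaptureDevice.requestAccess(for: .video) { granted in
            // The handler may arrive on an arbitrary queue, so hop to main
            DispatchQueue.main.async { completion(granted) }
        }
    case .denied, .restricted:
        completion(false)
    @unknown default:
        completion(false)
    }
}
```

For example, `viewDidLoad` could start the session only inside the completion handler when `granted` is `true`, instead of starting it unconditionally.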

Basic code

The code is almost the same as for an iOS app.

CameraManager.swift

```swift
import Cocoa
import AVFoundation

/// Handles everything camera-related
class CameraManager {

    // Name of the camera to use, if any (the default camera is used when it is not found)
    private let targetDeviceName = ""
    // private let targetDeviceName = "FaceTime HDカメラ(ディスプレイ)"
    // private let targetDeviceName = "FaceTime HD Camera"

    // AVFoundation
    private let session = AVCaptureSession()
    private var captureDevice: AVCaptureDevice?
    private let videoOutput = AVCaptureVideoDataOutput()
    // Serial queue: sample buffers must be delivered to the delegate in order
    private let videoQueue = DispatchQueue(label: "videoOutput")

    /// Start the session
    func startSession(delegate: AVCaptureVideoDataOutputSampleBufferDelegate) {
        // Video devices only (AVCaptureDevice.devices() also returns microphones)
        let devices = AVCaptureDevice.devices(for: .video)
        guard !devices.isEmpty else {
            print("No camera is connected")
            return
        }

        // Start from the default camera; switch to the target camera if it is connected
        var device = AVCaptureDevice.default(for: .video) ?? devices[0]
        print("\n[Connected cameras]")
        for d in devices {
            if d.localizedName == targetDeviceName {
                device = d
            }
            print(d.localizedName)
        }
        captureDevice = device
        print("\n[Camera in use]\n\(device.localizedName)\n\n")

        guard let videoInput = try? AVCaptureDeviceInput(device: device) else {
            print("Could not open \(device.localizedName)")
            return
        }

        // Configure the session
        session.beginConfiguration()
        session.sessionPreset = .low
        if session.canAddInput(videoInput) { session.addInput(videoInput) }
        if session.canAddOutput(videoOutput) { session.addOutput(videoOutput) }

        // Settings for receiving image buffers (applied before the session starts)
        videoOutput.videoSettings = [kCVPixelBufferPixelFormatTypeKey as String: Int(kCVPixelFormatType_32BGRA)]
        videoOutput.setSampleBufferDelegate(delegate, queue: videoQueue)
        videoOutput.alwaysDiscardsLateVideoFrames = true
        session.commitConfiguration()

        // startRunning() blocks until the session is running, so call it off the main thread
        DispatchQueue.global(qos: .userInitiated).async { [session] in
            session.startRunning()
        }
    }
}

// Singleton
extension CameraManager {
    static let shared = CameraManager()
}
```
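The camera-selection rule in `startSession` (use the device whose `localizedName` equals `targetDeviceName`, otherwise the default camera) can be pulled out into a small generic function so it can be checked without any camera attached. This is a sketch; `selectDevice` and its parameters are hypothetical names. Unlike the loop above, which keeps the last match, it returns the first match; device names are normally unique, so this rarely matters.

```swift
/// Hypothetical helper: picks the device named `targetName`,
/// otherwise `fallback`, otherwise the first device in the list.
func selectDevice<Device>(from devices: [Device],
                          named targetName: String,
                          name: (Device) -> String,
                          fallback: Device?) -> Device? {
    // An empty target name means "no preference"
    if !targetName.isEmpty, let match = devices.first(where: { name($0) == targetName }) {
        return match
    }
    return fallback ?? devices.first
}
```

With AVFoundation it could be called as `selectDevice(from: AVCaptureDevice.devices(for: .video), named: targetDeviceName, name: { $0.localizedName }, fallback: AVCaptureDevice.default(for: .video))`.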

Getting camera frames in an NSViewController

Adopt AVCaptureVideoDataOutputSampleBufferDelegate; each camera frame is then delivered to captureOutput(_:didOutput:from:).

MainVC.swift

```swift
import Cocoa
import AVFoundation

/// View controller for the main screen
class MainVC: NSViewController {

    // Reused for every frame; creating a CIContext per frame is expensive
    private let ciContext = CIContext()

    override func viewDidLoad() {
        super.viewDidLoad()
        // Start the session
        CameraManager.shared.startSession(delegate: self)
    }

    override var representedObject: Any? {
        didSet {}
    }
}

/// Receives and processes camera frames
extension MainVC: AVCaptureVideoDataOutputSampleBufferDelegate {

    /// Called for each frame, on the queue passed to setSampleBufferDelegate
    func captureOutput(_ output: AVCaptureOutput, didOutput sampleBuffer: CMSampleBuffer, from connection: AVCaptureConnection) {
        DispatchQueue.main.sync {
            if connection.isVideoOrientationSupported {
                connection.videoOrientation = .portrait
            }
            guard let pixelBuffer = CMSampleBufferGetImageBuffer(sampleBuffer) else { return }

            // CIImage (var so that a processing step can replace it)
            var ciImage = CIImage(cvPixelBuffer: pixelBuffer)
            let w = CGFloat(CVPixelBufferGetWidth(pixelBuffer))
            let h = CGFloat(CVPixelBufferGetHeight(pixelBuffer))
            let rect = CGRect(x: 0, y: 0, width: w, height: h)

            // Process ciImage here (e.g. with a CIFilter)

            // CGImage
            guard let cgImage = ciContext.createCGImage(ciImage, from: rect) else { return }
            // NSImage
            let image = NSImage(cgImage: cgImage, size: NSSize(width: w, height: h))
            // Display the image in an NSImageView, etc.
        }
    }
}
```
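Creating a `CIContext` is relatively expensive, so a small converter that keeps one context and reuses it for every frame may be preferable to creating a new one inside `captureOutput`. This is a sketch; `FrameConverter` and `makeImage(from:)` are hypothetical names.

```swift
import AppKit
import CoreImage
import CoreVideo

/// Hypothetical helper: turns camera pixel buffers into NSImages with a shared CIContext.
final class FrameConverter {
    // One context for all frames
    private let context = CIContext()

    func makeImage(from pixelBuffer: CVPixelBuffer) -> NSImage? {
        let ciImage = CIImage(cvPixelBuffer: pixelBuffer)
        // The extent of an image made from a pixel buffer covers the whole buffer
        guard let cgImage = context.createCGImage(ciImage, from: ciImage.extent) else { return nil }
        return NSImage(cgImage: cgImage, size: NSSize(width: cgImage.width, height: cgImage.height))
    }
}
```

A view controller would hold one `FrameConverter` as a stored property and call `makeImage(from:)` with the buffer returned by `CMSampleBufferGetImageBuffer`.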

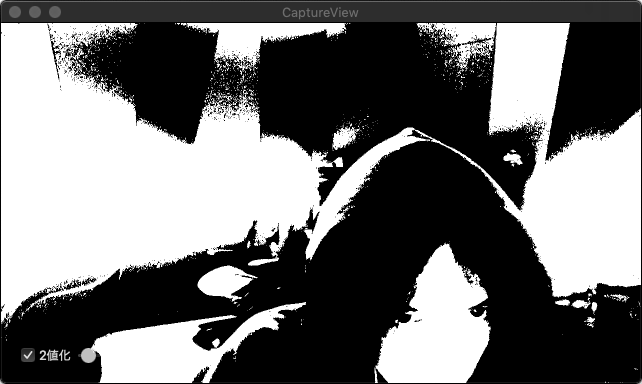

Processing example

This article uses CIFilter, but the frames can also be fed to the Vision framework or OpenCV for image analysis.
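Before writing a custom kernel, a built-in Core Image filter may already be enough. The sketch below applies the built-in `CIColorControls` filter with saturation 0 to turn a frame grayscale; the function name `desaturate` is hypothetical.

```swift
import CoreGraphics
import CoreImage

/// Hypothetical helper: desaturates an image with the built-in CIColorControls filter.
func desaturate(_ image: CIImage) -> CIImage? {
    guard let filter = CIFilter(name: "CIColorControls") else { return nil }
    filter.setValue(image, forKey: kCIInputImageKey)
    // Saturation 0 removes all color; 1 leaves the image unchanged
    filter.setValue(0.0, forKey: kCIInputSaturationKey)
    return filter.outputImage
}
```

The result is a `CIImage` with the same extent, so it can replace `ciImage` before the `CGImage` is created.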

MainVC.swift

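```swift
// Placed where ciImage is processed inside captureOutput.
// ciImage must be declared with var; filterThresholdSlider is assumed to be an NSSlider outlet on MainVC.
let filter = ThresouldFilter()
filter.inputImage = ciImage
filter.inputAmount = filterThresholdSlider.floatValue
if let filtered = filter.outputImage() {
    ciImage = filtered
}
```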

ThresouldFilter.swift

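```swift
import Cocoa

/// Binarizes an image: pixels at or above the brightness threshold become white, the rest black
class ThresouldFilter: CIFilter {

    // Core Image Kernel Language source.
    // bright is the average of R, G and B; step(threshold, bright) is 1.0 when bright >= threshold, else 0.0.
    private static let kernelStr = """
        kernel vec4 threshold(__sample image, float threshold) {
            vec3 col = image.rgb;
            float bright = 0.33333 * (col.r + col.g + col.b);
            float b = mix(0.0, 1.0, step(threshold, bright));
            return vec4(vec3(b), 1.0);
        }
        """

    private let kernel: CIColorKernel

    var inputImage: CIImage?
    // Brightness threshold in 0...1
    var inputAmount: Float = 0.5

    override init() {
        // Fails only if the kernel source above is invalid
        kernel = CIColorKernel(source: ThresouldFilter.kernelStr)!
        super.init()
    }

    required init?(coder aDecoder: NSCoder) {
        fatalError("init(coder:) has not been implemented")
    }

    func outputImage() -> CIImage? {
        guard let inputImage = inputImage else { return nil }
        // The color kernel runs once per pixel over the input's extent
        return kernel.apply(extent: inputImage.extent, arguments: [inputImage, inputAmount])
    }
}
```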
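To check what the kernel should produce for a given pixel without running Core Image, its arithmetic can be mirrored on the CPU. This is a sketch for reasoning about expected values, not part of the filter; the function name `thresholdPixel` is hypothetical.

```swift
/// Hypothetical CPU reference for the ThresouldFilter kernel (channels in 0...1).
func thresholdPixel(r: Float, g: Float, b: Float, threshold: Float) -> [Float] {
    // Same brightness formula as the kernel: average of R, G and B
    let bright: Float = 0.33333 * (r + g + b)
    // GLSL step(edge, x) returns 0.0 when x < edge, otherwise 1.0
    let v: Float = bright < threshold ? 0.0 : 1.0
    // Alpha is always 1.0, as in the kernel
    return [v, v, v, 1.0]
}
```

Because brightness is a plain average, pure red (1, 0, 0) has a brightness of about 0.333 and therefore turns black at the default threshold of 0.5, while a light gray such as (0.6, 0.6, 0.6) turns white.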