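Goal

Kubernetes has a feature called HPA (Horizontal Pod Autoscaler) that automatically scales the number of Pods based on CPU utilization.

Besides CPU utilization, HPA can also scale on custom metrics, so this time I'll try configuring an HPA that uses metrics from Sysdig Monitor.

Specifically, this post describes how to autoscale an application running on IBM Cloud Kubernetes Service (IKS) using IBM Cloud Monitoring with Sysdig, which is offered on IBM Cloud.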

Prerequisites

- A Kubernetes cluster running v1.11 or later. This post uses IKS v1.14.

- The Sysdig agent installed in the cluster. I explain how to do this in a separate post.

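Deploying the sample app

Deploy the app that the HPA will target. This time I use kuard, the sample app from Kubernetes Up and Running. Here it runs as three replicas behind a LoadBalancer Service: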

```
$ kubectl get pod -l app=kuard -o wide
NAME                     READY   STATUS    RESTARTS   AGE     IP               NODE            NOMINATED NODE   READINESS GATES
kuard-67789b8754-96mzw   1/1     Running   0          5m43s   172.30.206.9     10.129.177.58   <none>           <none>
kuard-67789b8754-p6rfz   1/1     Running   0          5m43s   172.30.33.210    10.192.27.25    <none>           <none>
kuard-67789b8754-px44p   1/1     Running   0          5m43s   172.30.208.198   10.193.37.162   <none>           <none>
```

```
$ kubectl get svc -l app=kuard -o wide
NAME    TYPE           CLUSTER-IP      EXTERNAL-IP      PORT(S)        AGE   SELECTOR
kuard   LoadBalancer   172.21.105.23   128.168.68.122   80:32644/TCP   33m   app=kuard
```

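Preparation

Get the set of scripts used in the following steps from here.

First, apply the RBAC settings that grant the required permissions. As the output shows, this manifest also creates the custom-metrics namespace and registers the custom metrics API (v1beta1.custom.metrics.k8s.io) as an APIService.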

```
$ kubectl apply -f deploy/01-sysdig-metrics-rbac.yml
namespace/custom-metrics created
serviceaccount/custom-metrics-apiserver created
clusterrolebinding.rbac.authorization.k8s.io/custom-metrics:system:auth-delegator created
rolebinding.rbac.authorization.k8s.io/custom-metrics-auth-reader created
clusterrole.rbac.authorization.k8s.io/custom-metrics-resource-reader created
clusterrolebinding.rbac.authorization.k8s.io/custom-metrics-apiserver-resource-reader created
clusterrole.rbac.authorization.k8s.io/custom-metrics-getter created
clusterrolebinding.rbac.authorization.k8s.io/hpa-custom-metrics-getter created
service/api created
apiservice.apiregistration.k8s.io/v1beta1.custom.metrics.k8s.io created
```

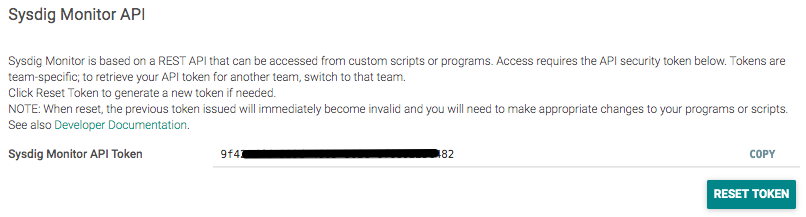

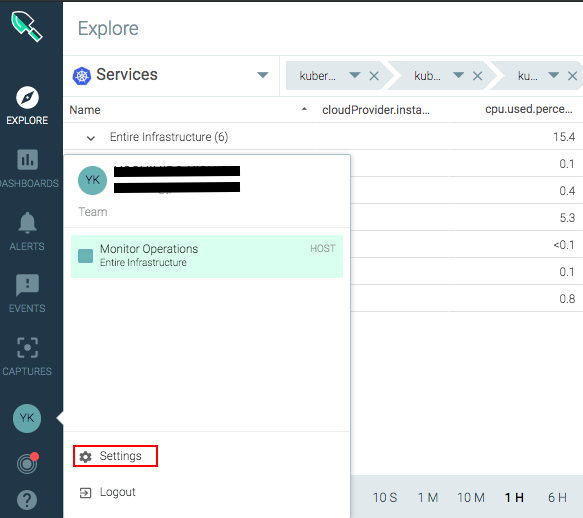

Next, look up your Sysdig Monitor API token.

In the Sysdig Monitor dashboard, you can find it under "Settings" > "User Profile" > "Sysdig Monitor API".

Create a secret from the API token in the custom-metrics namespace.

```
$ kubectl create secret generic --from-literal access-key=<Sysdig API Token> -n custom-metrics sysdig-api
secret/sysdig-api created
```
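`kubectl create secret generic --from-literal` stores each literal value base64-encoded under the Secret's `data` field, keyed by the name before `=`. If you prefer to keep the Secret as a manifest, a minimal Python sketch that builds the equivalent object is shown below. The function name `sysdig_api_secret` is hypothetical, and the token is a placeholder; `kubectl apply -f` accepts the printed JSON as well as YAML.

```python
import base64
import json


def sysdig_api_secret(token: str, namespace: str = "custom-metrics") -> dict:
    """Build a Secret equivalent to:
    kubectl create secret generic --from-literal access-key=<token> -n custom-metrics sysdig-api
    (hypothetical helper, for illustration only)
    """
    # Secret "data" values must be base64-encoded strings.
    encoded = base64.b64encode(token.encode("utf-8")).decode("ascii")
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": "sysdig-api", "namespace": namespace},
        "type": "Opaque",
        "data": {"access-key": encoded},
    }


if __name__ == "__main__":
    # Placeholder token; substitute the value from "Sysdig Monitor API".
    print(json.dumps(sysdig_api_secret("<Sysdig API Token>"), indent=2))
```

Keeping the token out of version control still applies; the sketch only shows the shape of the object the command creates.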

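If you are using a Sysdig instance created on IBM Cloud, the endpoint URL is different, so change 02-sysdig-metrics-server.yml as follows. The URL below is for the Tokyo region (jp-tok); use the endpoint of the region where your instance was created.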

```yaml
- name: SDC_ENDPOINT
  value: "https://app.sysdigcloud.com/api/"
```

↓

```yaml
- name: SDC_ENDPOINT
  value: "https://jp-tok.monitoring.cloud.ibm.com/api/"
```
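If you'd rather script this edit than make it by hand, here is a minimal Python sketch. It assumes the manifest contains the line `value: "https://app.sysdigcloud.com/api/"` exactly once, as in the snippet above, and refuses to change anything otherwise so that an already-edited file is not silently rewritten. `switch_endpoint` is a hypothetical helper, not part of the provided scripts.

```python
DEFAULT_ENDPOINT = "https://app.sysdigcloud.com/api/"
IBM_TOKYO_ENDPOINT = "https://jp-tok.monitoring.cloud.ibm.com/api/"


def switch_endpoint(manifest_text: str, new_endpoint: str = IBM_TOKYO_ENDPOINT) -> str:
    """Replace the SDC_ENDPOINT value in the manifest text (hypothetical helper).

    Raises ValueError unless the default endpoint appears exactly once.
    """
    old = f'value: "{DEFAULT_ENDPOINT}"'
    count = manifest_text.count(old)
    if count != 1:
        raise ValueError(f"expected exactly one default endpoint, found {count}")
    return manifest_text.replace(old, f'value: "{new_endpoint}"')
```

Usage: with `from pathlib import Path` and `p = Path("deploy/02-sysdig-metrics-server.yml")`, run `p.write_text(switch_endpoint(p.read_text()))`. Pass a different `new_endpoint` for other regions.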

Then apply this file, and the preparation is complete.

```
$ kubectl apply -f deploy/02-sysdig-metrics-server.yml
deployment.apps/custom-metrics-apiserver created
```

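Configuring the HPA

Create a YAML file like the following (this is deploy/03-kuard-hpa.yml, which is applied in the verification step).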

```yaml
apiVersion: autoscaling/v2beta1
kind: HorizontalPodAutoscaler
metadata:
  name: kuard-autoscaler
  namespace: default
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: kuard
  minReplicas: 3
  maxReplicas: 10
  metrics:
  - type: Object
    object:
      target:
        kind: Service
        name: kuard
      metricName: net.http.request.count
      targetValue: 50
```

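In this configuration, the metric the HPA acts on is net.http.request.count for the Service kuard, with a target value of 50.

So when traffic to Service kuard exceeds 50 requests per minute, the HPA scales the number of replicas within the range of 3 to 10.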
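For reference, the Kubernetes documentation describes the HPA algorithm as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no change when the ratio is within a tolerance of 1.0 (0.1 by default), and the result kept between minReplicas and maxReplicas. The Python sketch below applies only that arithmetic to this HPA (target 50, 3 to 10 replicas); it deliberately ignores the controller's stabilization window, Pod readiness handling, and other details, and `desired_replicas` is a name chosen for illustration.

```python
import math


def desired_replicas(current: int, metric: float, target: float,
                     min_replicas: int = 3, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Approximate the HPA replica calculation for a single Object metric."""
    ratio = metric / target
    # Close enough to the target: the HPA skips scaling.
    if abs(ratio - 1.0) <= tolerance:
        return current
    desired = math.ceil(current * ratio)
    # Clamp to the minReplicas..maxReplicas range from the HPA spec.
    return max(min_replicas, min(max_replicas, desired))
```

Plugging in the values from the verification section reproduces the observed steps: 3 replicas at 82.567 gives 5, 5 replicas at 79.734 gives 8, and 8 replicas at 75.667 gives 13, which is capped at 10.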

Besides this, you can specify many other metrics that Sysdig collects.

You can list the available metrics as follows:

```
$ kubectl get --raw "/apis/custom.metrics.k8s.io/v1beta1" | jq -r ".resources[].name"
services/redis.cpu.user
services/nginx.net.conn_opened_per_s
services/net.request.time.net.percent
services/redis.mem.fragmentation_ratio
services/redis.perf.latest_fork_usec
services/command.loginshell.distance
services/memcache.rusage_user_rate
services/cpu.shares.count
services/net.request.time.local.percent
services/kubernetes.pod.resourceRequests.memBytes
services/kubernetes.pod.status.ready
services/container.count
services/memcache.delete_misses_rate
services/swap.limit.bytes
services/nginx.net.connections
services/redis.mem.maxmemory
services/net.request.time.processing
services/command.cwd
services/command.comm
services/memcache.cas_hits_rate
services/memcache.connection_structures
services/uptime
services/net.request.time.nextTiers.percent
services/redis.pubsub.patterns
services/kubernetes.pod.resourceLimits.cpuCores
services/cpu.cpuset.used.percent
services/redis.expires.percent
services/fs.root.used.percent
services/redis.can_connect
services/compliance.k8s-bench.2.1.kubelet.tests_fail
services/net.request.time.net
services/compliance.k8s-bench.2.1.kubelet.tests_pass
services/redis.clients.blocked
services/memory.used.percent
services/memcache.cmd_flush_rate
services/cpu.cores.used.percent
services/nginx.net.waiting
services/redis.rdb.changes_since_last
services/net.request.time.file.percent
services/command.count
services/redis.persist.percent
services/redis.replication.backlog_histlen
services/memcache.threads
services/cpu.cores.cgroup.limit
services/redis.net.clients
services/file.bytes.total
services/net.request.time.out
services/memcache.evictions_rate
services/net.request.time.worst.in
services/file.bytes.out
services/apache.can_connect
services/net.request.time.nextTiers
services/kubernetes.pod.resourceLimits.memBytes
services/fs.inodes.total.count
services/redis.cpu.user_children
services/policyEvent.severity
services/net.request.time.in
services/redis.cpu.sys_children
services/dragent.analyzer.n_drops
services/memcache.fill_percent
services/memcache.can_connect
services/file.iops.out
services/net.request.count.out
services/redis.stats.keyspace_misses
services/cpu.cores.cpuset.limit
services/memory.limit.bytes
services/redis.slowlog.micros.max
services/net.request.count
services/file.time.out
services/redis.keys.evicted
services/compliance.k8s-bench.tests_fail
services/command.id
services/redis.net.rejected
services/memcache.bytes
services/net.http.request.time.worst
services/dragent.analyzer.n_drops_buffer
services/policyEvent.id
services/net.connection.count.in
services/nginx.net.conn_dropped_per_s
services/memcache.rusage_system_rate
services/cpu.cgroup.used.percent
services/redis.slowlog.micros.avg
services/command.timestamp
services/fs.free.percent
services/compliance.k8s-bench.2.2.configuration-files.tests_total
services/memcache.get_misses_rate
services/net.request.count.in
services/net.request.time
services/redis.net.commands
services/redis.replication.master_repl_offset
services/redis.mem.lua
services/redis.info.latency_ms
services/redis.mem.used
services/redis.slowlog.micros.count
services/net.mongodb.request.time
services/redis.slowlog.micros.median
services/file.error.open.count
services/net.http.request.count
services/redis.stats.keyspace_hits
services/memcache.total_connections_rate
services/fs.bytes.used
services/compliance.k8s-bench.2.2.configuration-files.tests_warn
services/fs.largest.used.percent
services/memcache.cmd_get_rate
services/compliance.k8s-bench.tests_warn
services/net.bytes.in
services/redis.keys
services/memcache.curr_connections
services/swap.limit.used.percent
services/net.request.time.processing.percent
services/fs.bytes.total
services/net.request.time.worst.out
services/memcache.pointer_size
services/redis.rdb.bgsave
services/net.sql.request.time
services/net.mongodb.request.count
services/net.bytes.out
services/command.loginshell.id
services/dragent.analyzer.n_evts
services/timestamp
services/memcache.get_hits_rate
services/memcache.listen_disabled_num_rate
services/net.request.time.file
services/redis.aof.rewrite
services/net.mongodb.error.count
services/compliance.k8s-bench.tests_pass
services/net.error.count
services/redis.persist
services/compliance.k8s-bench.2.2.configuration-files.pass_pct
services/file.bytes.in
services/fs.inodes.used.count
services/cpu.quota.used.percent
services/memcache.cas_badval_rate
services/file.time.total
services/memcache.limit_maxbytes
services/compliance.k8s-bench.2.1.kubelet.pass_pct
services/command.uid
services/syscall.count
services/command.all
services/policyEvent.policyId
services/memcache.uptime
services/nginx.net.reading
services/redis.net.slaves
services/net.sql.request.time.worst
services/compliance.k8s-bench.2.2.configuration-files.tests_fail
services/redis.expires
services/redis.rdb.last_bgsave_time
services/net.sql.error.count
services/memcache.delete_hits_rate
services/net.tcp.queue.len
services/fs.bytes.free
services/command.ppid
services/compliance.k8s-bench.2.2.configuration-files.tests_pass
services/file.time.in
services/net.http.error.count
services/nginx.net.writing
services/kubernetes.pod.restart.count
services/compliance.k8s-bench.2.1.kubelet.tests_total
services/file.iops.total
services/memory.pageFault.major
services/memcache.total_items
services/net.connection.count.total
services/net.bytes.total
services/redis.net.instantaneous_ops_per_sec
services/net.mongodb.request.time.worst
services/file.error.total.count
services/memcache.bytes_read_rate
services/memcache.bytes_written_rate
services/fs.inodes.used.percent
services/command.pid
services/cpu.shares.used.percent
services/redis.mem.rss
services/redis.cpu.sys
services/nginx.net.request_per_s
services/dragent.analyzer.sr
services/kubernetes.pod.resourceRequests.cpuCores
services/memory.pageFault.minor
services/dragent.subproc.cointerface.memory.kb
services/kubernetes.pod.restart.rate
services/net.request.time.local
services/net.http.request.time
services/redis.aof.last_rewrite_time
services/memcache.cmd_set_rate
services/file.iops.in
services/dragent.analyzer.fl.ms
services/thread.count
services/compliance.k8s-bench.tests_total
services/redis.pubsub.channels
services/command.cmdline
services/proc.count
services/memcache.cas_misses_rate
services/cpu.cores.used
services/memory.limit.used.percent
services/fd.used.percent
services/compliance.k8s-bench.2.1.kubelet.tests_warn
services/kubernetes.pod.containers.waiting
services/memcache.curr_items
services/file.open.count
services/redis.keys.expired
services/redis.mem.peak
services/net.sql.request.count
services/compliance.k8s-bench.pass_pct
services/net.connection.count.out
services/fs.used.percent
services/cpu.cores.quota.limit
services/host.error.count
services/nginx.can_connect
services/memory.bytes.used
services/cpu.used.percent
```
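The jq filter `.resources[].name` prints the `name` of every entry in the returned APIResourceList. With over 200 names, narrowing the list by keyword helps when looking for a metric to scale on. The Python sketch below performs the same extraction and adds a keyword filter; it assumes the output of `kubectl get --raw "/apis/custom.metrics.k8s.io/v1beta1"` has been saved to a file, and `metric_names` is a name chosen for illustration.

```python
import json


def metric_names(api_resource_list_json: str, resource: str = "services",
                 keyword: str = "") -> list[str]:
    """Return metric names for one resource type, optionally filtered by keyword."""
    doc = json.loads(api_resource_list_json)
    prefix = resource + "/"
    names = []
    for item in doc.get("resources", []):
        name = item.get("name", "")
        # Entries look like "services/net.http.request.count".
        if name.startswith(prefix) and keyword in name:
            names.append(name[len(prefix):])
    return sorted(names)
```

For example, `metric_names(open("resources.json").read(), keyword="http")` would narrow the list above to the HTTP-related metrics such as net.http.request.count.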

To see the current value of a specific metric, query it with --raw as follows:

```
$ kubectl get --raw "/apis/custom.metrics.k8s.io/v1beta1/namespaces/default/services/kuard/net.http.request.count" | jq .
```

Result:

```json
{
  "kind": "MetricValueList",
  "apiVersion": "custom.metrics.k8s.io/v1beta1",
  "metadata": {
    "selfLink": "/apis/custom.metrics.k8s.io/v1beta1/namespaces/default/services/kuard/net.http.request.count"
  },
  "items": [
    {
      "describedObject": {
        "kind": "Service",
        "namespace": "default",
        "name": "kuard",
        "apiVersion": "/__internal"
      },
      "metricName": "net.http.request.count",
      "timestamp": "2019-10-31T06:19:27Z",
      "value": "0"
    }
  ]
}
```
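The `--raw` path follows the pattern `/apis/custom.metrics.k8s.io/v1beta1/namespaces/<namespace>/<resource>/<name>/<metric>`, and the `value` field is a Kubernetes quantity string: here it is `"0"`, while fractional values use the `m` (milli) suffix, as in the `82567m` that `kubectl get hpa` shows later. The Python sketch below builds the path and reads the value from the saved JSON. It is a simplification under stated assumptions: the quantity parser handles only plain numbers and the `m` suffix (not `k`, `Mi`, and so on), and `metric_path`, `parse_quantity`, and `metric_values` are hypothetical helper names.

```python
import json
from decimal import Decimal

API_PREFIX = "/apis/custom.metrics.k8s.io/v1beta1"


def metric_path(namespace: str, resource: str, name: str, metric: str) -> str:
    """Build the --raw path for one object's custom metric."""
    return f"{API_PREFIX}/namespaces/{namespace}/{resource}/{name}/{metric}"


def parse_quantity(q: str) -> Decimal:
    """Parse a quantity limited to plain numbers and the milli ("m") suffix."""
    if q.endswith("m"):
        return Decimal(q[:-1]) / 1000
    return Decimal(q)


def metric_values(metric_value_list_json: str) -> dict[str, Decimal]:
    """Map describedObject name -> metric value from a MetricValueList."""
    doc = json.loads(metric_value_list_json)
    if doc.get("kind") != "MetricValueList":
        raise ValueError("not a MetricValueList")
    return {
        item["describedObject"]["name"]: parse_quantity(item["value"])
        for item in doc.get("items", [])
    }
```

Using `Decimal` keeps values such as 82567m exact (82.567) rather than introducing binary floating-point error.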

Verifying the behavior

Let's send a large number of requests and check that autoscaling works correctly. First, apply the HPA.

```
$ kubectl apply -f deploy/03-kuard-hpa.yml
horizontalpodautoscaler.autoscaling/kuard-autoscaler created
```

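I used hey as the load generator. Here, -c 5 runs 5 concurrent workers and -q 85 limits each worker to 85 requests per second.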

```
$ hey -c 5 -q 85 http://128.168.68.122
```

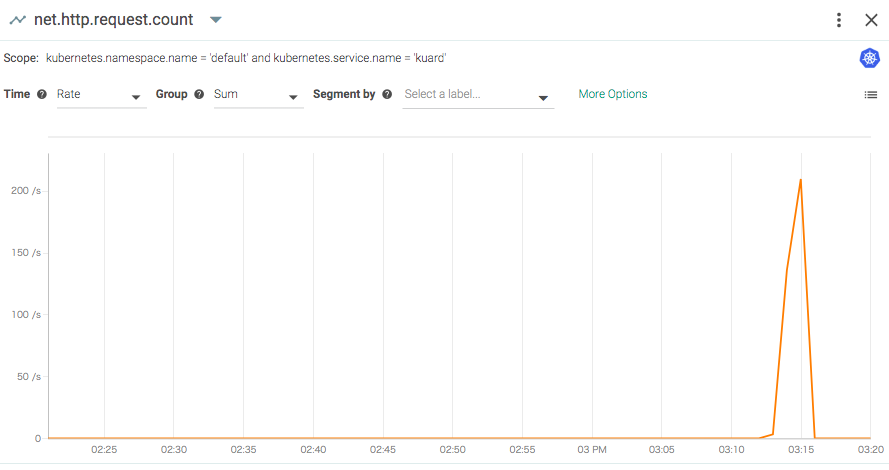

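Looking at the Sysdig Monitor dashboard, you can see that a large number of requests are coming in.

The Pods then scaled out as expected in response to this metric: as the value rose above the target of 50, the replica count went from 3 to 5, then 8, and finally reached the maximum of 10.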

```
$ kubectl get hpa -w
NAME               REFERENCE          TARGETS     MINPODS   MAXPODS   REPLICAS   AGE
kuard-autoscaler   Deployment/kuard   0/50        3         10        3          41d
kuard-autoscaler   Deployment/kuard   19478m/50   3         10        3          41d
kuard-autoscaler   Deployment/kuard   82567m/50   3         10        3          41d
kuard-autoscaler   Deployment/kuard   79734m/50   3         10        5          41d
kuard-autoscaler   Deployment/kuard   75667m/50   3         10        8          41d
kuard-autoscaler   Deployment/kuard   84200m/50   3         10        10         41d
kuard-autoscaler   Deployment/kuard   82834m/50   3         10        10         41d
```