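About this article

In this series I work through 101 implementation exercises, with explanations, using Hugging Face transformers. The goal is to consolidate my own learning, and it would be great if it also helps someone out there.

This article covers setting up a transformers environment and running inference for sentiment analysis and question answering.

Introduction

In recent years, pretrained Transformer models have achieved remarkable results in natural language processing, image recognition, and speech recognition. In Japan, however, there are still few examples of teams making full use of publicly available Japanese pretrained models in their work. For that reason, my book listed at the end of this article presents a total of 101 exercises with example solutions showing how to implement pretrained models with Hugging Face, including an environment setup that readers can try on their own.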

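Target readers

- Readers who know the basics of artificial intelligence, machine learning, and deep learning

- Readers who care more about implementation than theory

- Readers who have written some Python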

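Contents

1. Warm-up

For Python syntax, download the textbooks published by Japan's two leading universities (the University of Tokyo and Kyoto University) so you can review them at any time. For now, downloading them is enough; refer to them as you work through the course.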

2. Environment setup

Question: Set up an environment where Python code can run on Google Colaboratory (Colab), and install version 4.6.1 of Hugging Face transformers.

Answer:

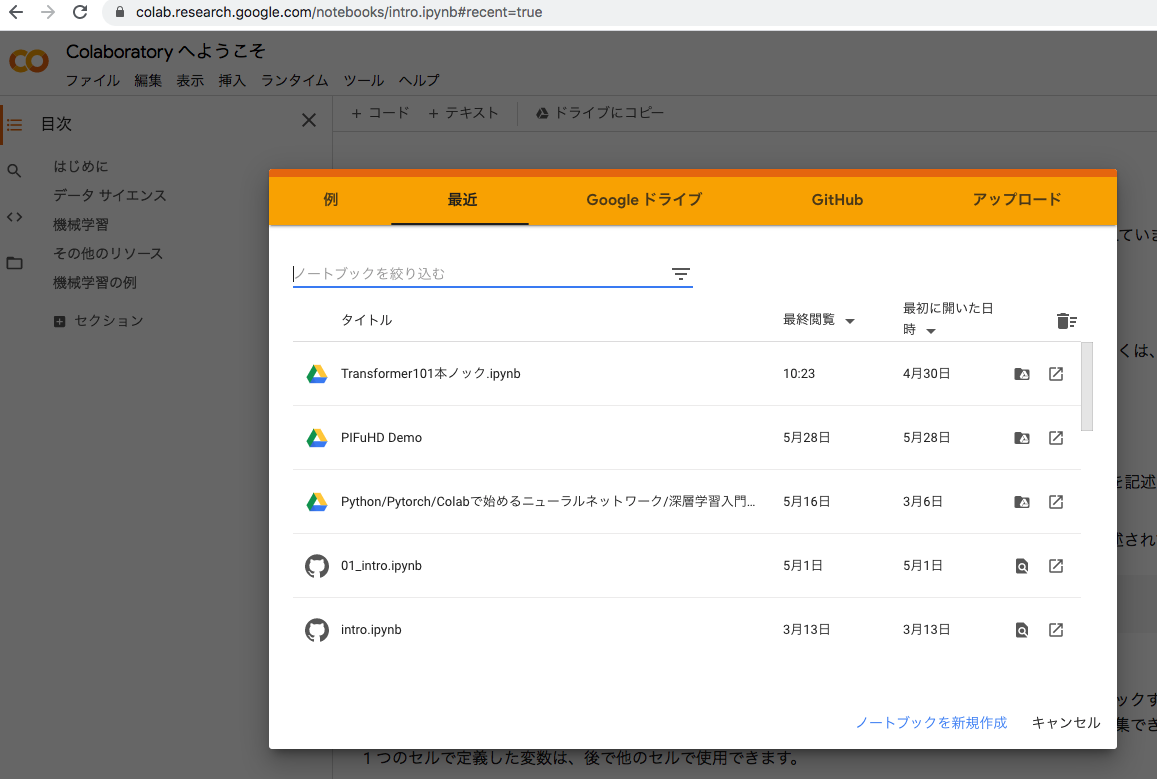

- 以下、環境にGoogle Chromeでアクセスする。

- 以下のようなモーダルが表示されるので、ノートブック新規作成をクリックする。

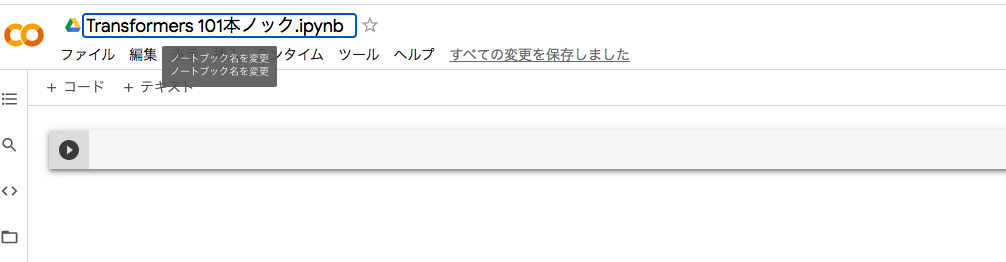

- 画面上部のUntitled0.ipynbの箇所をクリックし、タイトルをわかりやすいタイトルに変更する。

(例では、Transformers 101本ノック.ipynb)

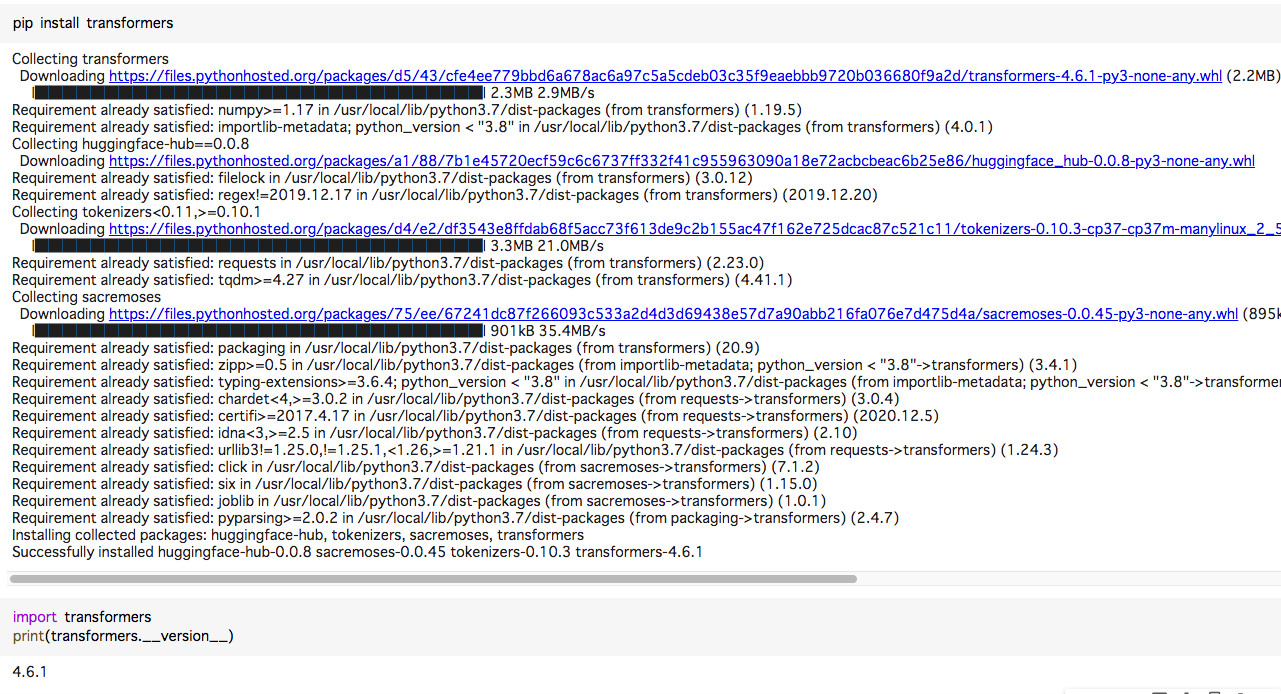

4) huggingfaceのtransformersをインストールしver.確認を行う。

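```python
# Install Hugging Face transformers (the leading "!" runs a shell command from a Colab cell)
!pip install transformers==4.6.1

# Also check the installed version.
import transformers
print(transformers.__version__)
```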
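As an extra check, the pinned version can also be confirmed without importing transformers at all, using only the standard library's `importlib.metadata` (Python 3.8+). This is a minimal sketch; the helper names `installed_version` and `check_pinned` are hypothetical and made up for this example.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def installed_version(package: str) -> Optional[str]:
    """Return the installed version string of `package`, or None if it is not installed."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


def check_pinned(package: str, expected: str) -> bool:
    """True only if `package` is installed at exactly the `expected` version."""
    return installed_version(package) == expected


found = installed_version("transformers")
if found is None:
    print("transformers is not installed")
elif not check_pinned("transformers", "4.6.1"):
    print(f"transformers {found} is installed, but 4.6.1 was expected")
else:
    print("transformers 4.6.1 is installed")
```

Reading the package metadata this way also reports a missing install cleanly instead of raising an `ImportError`.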

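3. Sentiment analysis

Exercise 2: Use DistilBERT to classify the sentiment (positive or negative) of each of the sentences "I love you" and "I ignore you".

Code

```python
from transformers import pipeline

# Load a sentiment-analysis pipeline (no model is specified, so the task's default model is used)
sentiment = pipeline('sentiment-analysis')

print(sentiment(['I love you']))
print(sentiment(['I ignore you']))
```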

Result

```
[{'label': 'POSITIVE', 'score': 0.9998656511306763}]
[{'label': 'NEGATIVE', 'score': 0.999695897102356}]
```

Both predictions are correct.
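Each result has exactly one label and one score because the classification head of DistilBertForSequenceClassification outputs two logits (its final `classifier` layer has `out_features=2`). A simplified pure-Python sketch of how two logits can be turned into such a result: apply softmax, then report the label with the larger probability as `score`. The mapping 0 = NEGATIVE, 1 = POSITIVE is an assumption about the default SST-2 model (in the notebook it can be checked with `sentiment.model.config.id2label`), and the logits below are made-up values, not the model's real outputs.

```python
import math
from typing import Dict, List

# Assumed label mapping for the default SST-2 DistilBERT model
ID2LABEL: Dict[int, str] = {0: "NEGATIVE", 1: "POSITIVE"}


def softmax(logits: List[float]) -> List[float]:
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def to_prediction(logits: List[float]) -> Dict[str, object]:
    """Turn raw classifier logits into a {'label', 'score'} dict shaped like the pipeline output."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=lambda i: probs[i])
    return {"label": ID2LABEL[best], "score": probs[best]}


# Hypothetical logits for a clearly positive sentence
print(to_prediction([-4.0, 4.5]))
```

With two classes, a score close to 1 simply means the two logits are far apart; it is a confidence, not an accuracy.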

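Note that although the code does not specify DistilBERT, in transformers 4.6.1 a pipeline created with the sentiment-analysis task loads a DistilBERT model by default (distilbert-base-uncased-finetuned-sst-2-english).

You can confirm this as follows.

Code

```python
# Evaluating the model in a notebook cell displays its module structure
sentiment.model
```

Result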

```
DistilBertForSequenceClassification(
  (distilbert): DistilBertModel(
    (embeddings): Embeddings(
      (word_embeddings): Embedding(30522, 768, padding_idx=0)
      (position_embeddings): Embedding(512, 768)
      (LayerNorm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)
      (dropout): Dropout(p=0.1, inplace=False)
    )
    (transformer): Transformer(
      (layer): ModuleList(
        (0): TransformerBlock(
          (attention): MultiHeadSelfAttention(
            (dropout): Dropout(p=0.1, inplace=False)
            (q_lin): Linear(in_features=768, out_features=768, bias=True)
            (k_lin): Linear(in_features=768, out_features=768, bias=True)
            (v_lin): Linear(in_features=768, out_features=768, bias=True)
            (out_lin): Linear(in_features=768, out_features=768, bias=True)
          )
          (sa_layer_norm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)
          (ffn): FFN(
            (dropout): Dropout(p=0.1, inplace=False)
            (lin1): Linear(in_features=768, out_features=3072, bias=True)
            (lin2): Linear(in_features=3072, out_features=768, bias=True)
          )
          (output_layer_norm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)
        )
        (1): TransformerBlock(
          (attention): MultiHeadSelfAttention(
            (dropout): Dropout(p=0.1, inplace=False)
            (q_lin): Linear(in_features=768, out_features=768, bias=True)
            (k_lin): Linear(in_features=768, out_features=768, bias=True)
            (v_lin): Linear(in_features=768, out_features=768, bias=True)
            (out_lin): Linear(in_features=768, out_features=768, bias=True)
          )
          (sa_layer_norm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)
          (ffn): FFN(
            (dropout): Dropout(p=0.1, inplace=False)
            (lin1): Linear(in_features=768, out_features=3072, bias=True)
            (lin2): Linear(in_features=3072, out_features=768, bias=True)
          )
          (output_layer_norm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)
        )
        (2): TransformerBlock(
          (attention): MultiHeadSelfAttention(
            (dropout): Dropout(p=0.1, inplace=False)
            (q_lin): Linear(in_features=768, out_features=768, bias=True)
            (k_lin): Linear(in_features=768, out_features=768, bias=True)
            (v_lin): Linear(in_features=768, out_features=768, bias=True)
            (out_lin): Linear(in_features=768, out_features=768, bias=True)
          )
          (sa_layer_norm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)
          (ffn): FFN(
            (dropout): Dropout(p=0.1, inplace=False)
            (lin1): Linear(in_features=768, out_features=3072, bias=True)
            (lin2): Linear(in_features=3072, out_features=768, bias=True)
          )
          (output_layer_norm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)
        )
        (3): TransformerBlock(
          (attention): MultiHeadSelfAttention(
            (dropout): Dropout(p=0.1, inplace=False)
            (q_lin): Linear(in_features=768, out_features=768, bias=True)
            (k_lin): Linear(in_features=768, out_features=768, bias=True)
            (v_lin): Linear(in_features=768, out_features=768, bias=True)
            (out_lin): Linear(in_features=768, out_features=768, bias=True)
          )
          (sa_layer_norm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)
          (ffn): FFN(
            (dropout): Dropout(p=0.1, inplace=False)
            (lin1): Linear(in_features=768, out_features=3072, bias=True)
            (lin2): Linear(in_features=3072, out_features=768, bias=True)
          )
          (output_layer_norm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)
        )
        (4): TransformerBlock(
          (attention): MultiHeadSelfAttention(
            (dropout): Dropout(p=0.1, inplace=False)
            (q_lin): Linear(in_features=768, out_features=768, bias=True)
            (k_lin): Linear(in_features=768, out_features=768, bias=True)
            (v_lin): Linear(in_features=768, out_features=768, bias=True)
            (out_lin): Linear(in_features=768, out_features=768, bias=True)
          )
          (sa_layer_norm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)
          (ffn): FFN(
            (dropout): Dropout(p=0.1, inplace=False)
            (lin1): Linear(in_features=768, out_features=3072, bias=True)
            (lin2): Linear(in_features=3072, out_features=768, bias=True)
          )
          (output_layer_norm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)
        )
        (5): TransformerBlock(
          (attention): MultiHeadSelfAttention(
            (dropout): Dropout(p=0.1, inplace=False)
            (q_lin): Linear(in_features=768, out_features=768, bias=True)
            (k_lin): Linear(in_features=768, out_features=768, bias=True)
            (v_lin): Linear(in_features=768, out_features=768, bias=True)
            (out_lin): Linear(in_features=768, out_features=768, bias=True)
          )
          (sa_layer_norm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)
          (ffn): FFN(
            (dropout): Dropout(p=0.1, inplace=False)
            (lin1): Linear(in_features=768, out_features=3072, bias=True)
            (lin2): Linear(in_features=3072, out_features=768, bias=True)
          )
          (output_layer_norm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)
        )
      )
    )
  )
  (pre_classifier): Linear(in_features=768, out_features=768, bias=True)
  (classifier): Linear(in_features=768, out_features=2, bias=True)
  (dropout): Dropout(p=0.2, inplace=False)
)
```
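The printed structure is enough to work out how many parameters the model has. The arithmetic below is a sketch derived only from the layer shapes printed above: each `Linear(n_in, n_out, bias=True)` holds `n_in * n_out + n_out` values, each `LayerNorm` with `elementwise_affine=True` holds a weight and a bias, and `Dropout` has no parameters. The helper names are my own, chosen for this example.

```python
# Parameter count of DistilBertForSequenceClassification, from the printed layer shapes
H, FFN_DIM, VOCAB, MAX_POS, N_LAYERS, N_LABELS = 768, 3072, 30522, 512, 6, 2


def linear(n_in: int, n_out: int) -> int:
    """Weight matrix plus bias vector of Linear(n_in, n_out, bias=True)."""
    return n_in * n_out + n_out


def layer_norm(dim: int) -> int:
    """Weight and bias of LayerNorm((dim,), elementwise_affine=True)."""
    return 2 * dim


embeddings = VOCAB * H + MAX_POS * H + layer_norm(H)  # word + position embeddings + LayerNorm
block = (
    4 * linear(H, H)        # q_lin, k_lin, v_lin, out_lin
    + layer_norm(H)         # sa_layer_norm
    + linear(H, FFN_DIM)    # ffn.lin1
    + linear(FFN_DIM, H)    # ffn.lin2
    + layer_norm(H)         # output_layer_norm
)
encoder = embeddings + N_LAYERS * block               # DistilBertModel
head = linear(H, H) + linear(H, N_LABELS)             # pre_classifier + classifier
total = encoder + head

print(f"embeddings:           {embeddings:,}")
print(f"per TransformerBlock: {block:,}")
print(f"DistilBertModel:      {encoder:,}")
print(f"total:                {total:,}")
```

In the notebook, `sum(p.numel() for p in sentiment.model.parameters())` should give the same total if the loaded model matches this printout. Note that the word embeddings alone account for roughly a third of all parameters.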

4. Question answering

Using a DistilBertForQuestionAnswering model, feed in a context taken from the Wikipedia article on the Tokyo 2020 Olympics together with the question "What caused Tokyo Olympic postponed?", and output the answer.
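An extractive QA model like this one does not generate text. It scores every token of the context as a possible start and a possible end of the answer, and the answer is the span between the chosen start and end. A simplified sketch of picking that span: choose the pair (start, end) with start <= end and a bounded length that maximizes the product of the start and end probabilities. As far as I understand, this is the idea behind the pipeline's answer selection, but the function `best_span`, the word-level toy tokens, the probabilities, and the length limit of 15 are all assumptions for illustration; the real pipeline also masks question and special tokens and maps token positions back to characters.

```python
from typing import List, Tuple


def best_span(start_probs: List[float], end_probs: List[float],
              max_answer_len: int = 15) -> Tuple[int, int, float]:
    """Return (start, end, score) maximizing start_probs[s] * end_probs[e],
    subject to s <= e < s + max_answer_len (token indices, end inclusive)."""
    best = (0, 0, -1.0)
    for s, p_s in enumerate(start_probs):
        for e in range(s, min(s + max_answer_len, len(end_probs))):
            score = p_s * end_probs[e]
            if score > best[2]:
                best = (s, e, score)
    return best


# Hypothetical word-level tokens and probabilities (each list sums to 1)
tokens = ["the", "event", "was", "postponed", "due", "to", "the", "COVID-19", "pandemic"]
start_probs = [0.01, 0.02, 0.01, 0.01, 0.02, 0.02, 0.11, 0.75, 0.05]
end_probs = [0.01, 0.01, 0.01, 0.02, 0.01, 0.01, 0.01, 0.12, 0.80]

s, e, score = best_span(start_probs, end_probs)
print(" ".join(tokens[s:e + 1]), round(score, 3))
```

Because the score is a product of two probabilities, even a correct answer can end up with a fairly modest score.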

Code

```python
from transformers import pipeline

# No model is specified, so the task's default model is loaded
# (in transformers 4.6.1: distilbert-base-cased-distilled-squad, a DistilBertForQuestionAnswering model)
qa = pipeline("question-answering")

olympic_wiki_text = """
The 2020 Summer Olympics (Japanese: 2020年夏季オリンピック, Hepburn: Nisen Nijū-nen Kaki Orinpikku), officially the Games of the XXXII Olympiad (Japanese: 第32回オリンピック競技大会, Hepburn: Dai Sanjūni-kai Orinpikku Kyōgi Taikai), and also known as Tokyo 2020 (東京2020, Tōkyō ni-zero-ni-zero[2]), is an upcoming international multi-sport event scheduled to be held from 23 July to 8 August 2021 in Tokyo, Japan. Formerly scheduled to take place from 24 July to 9 August 2020, the event was postponed in March 2020 as a result of the COVID-19 pandemic, and will not allow international spectators.[3][4] Despite being rescheduled for 2021, the event retains the Tokyo 2020 name for marketing and branding purposes.[5] This is the first time that the Olympic Games have been postponed and rescheduled, rather than cancelled.[6]

Tokyo was selected as the host city during the 125th IOC Session in Buenos Aires, Argentina, on 7 September 2013.[7] The 2020 Games will mark the second time that Japan has hosted the Summer Olympic Games, the first being also in Tokyo in 1964, making this the first city in Asia to host the Summer Games twice. Overall, these will be the fourth Olympic Games to be held in Japan, which also hosted the Winter Olympics in 1972 (Sapporo) and 1998 (Nagano). Tokyo was also scheduled to host the 1940 Summer Olympics but pulled out in 1938. The 2020 Games will also be the second of three consecutive Olympics to be held in East Asia, the first being in Pyeongchang County, South Korea in 2018, and the next in Beijing, China in 2022.

The 2020 Games will see the introduction of new competitions including 3x3 basketball, freestyle BMX, and madison cycling, as well as further mixed events. Under new IOC policies, which allow the host organizing committee to add new sports to the Olympic program to augment the permanent core events, these Games will see karate, sport climbing, surfing, and skateboarding make their Olympic debuts, as well as the return of baseball and softball for the first time since 2008.[8]
"""

print(qa(question="What caused Tokyo Olympic postponed?", context=olympic_wiki_text))
```

Result

```
{'score': 0.5628985166549683, 'start': 514, 'end': 531, 'answer': 'COVID-19 pandemic'}
```
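The `start` and `end` fields appear to be character offsets into the context string, with `end` exclusive, so slicing the context with them should reproduce `answer`. The sketch below checks this using only the first paragraph of the context (with the same leading newline that the triple-quoted string in the exercise starts with) and the result dict copied from the output above; the variable names are my own.

```python
# First paragraph of the exercise's context, including the leading newline
context = """
The 2020 Summer Olympics (Japanese: 2020年夏季オリンピック, Hepburn: Nisen Nijū-nen Kaki Orinpikku), officially the Games of the XXXII Olympiad (Japanese: 第32回オリンピック競技大会, Hepburn: Dai Sanjūni-kai Orinpikku Kyōgi Taikai), and also known as Tokyo 2020 (東京2020, Tōkyō ni-zero-ni-zero[2]), is an upcoming international multi-sport event scheduled to be held from 23 July to 8 August 2021 in Tokyo, Japan. Formerly scheduled to take place from 24 July to 9 August 2020, the event was postponed in March 2020 as a result of the COVID-19 pandemic, and will not allow international spectators.[3][4] Despite being rescheduled for 2021, the event retains the Tokyo 2020 name for marketing and branding purposes.[5] This is the first time that the Olympic Games have been postponed and rescheduled, rather than cancelled.[6]
"""

# Result copied from the pipeline output above
result = {'score': 0.5628985166549683, 'start': 514, 'end': 531, 'answer': 'COVID-19 pandemic'}

# Python counts characters (code points), so the Japanese text and
# letters such as "ū" each count as one position
span = context[result["start"]:result["end"]]
print(repr(span))
print(span == result["answer"])
```

This also shows why the leading newline matters: removing it would shift every offset by one.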

Even I was surprised at how accurate DistilBERT's answer is; it almost looks as if the model understands the question.

See you again in the fourth article!

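References

Author

I'm also on Twitter.

@keiji_dl

Reference video

I've released a video that explains, in 7 steps over 2 hours, how to build a web app that performs image recognition with ViT (Vision Transformer), using a full stack of Python, PyTorch Lightning, Flask, Jinja2, Bootstrap, jQuery, CSS, HTML, and more.

All 101 exercises are available on Kindle Unlimited.

The book also includes links to the source code, so please give it a read.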