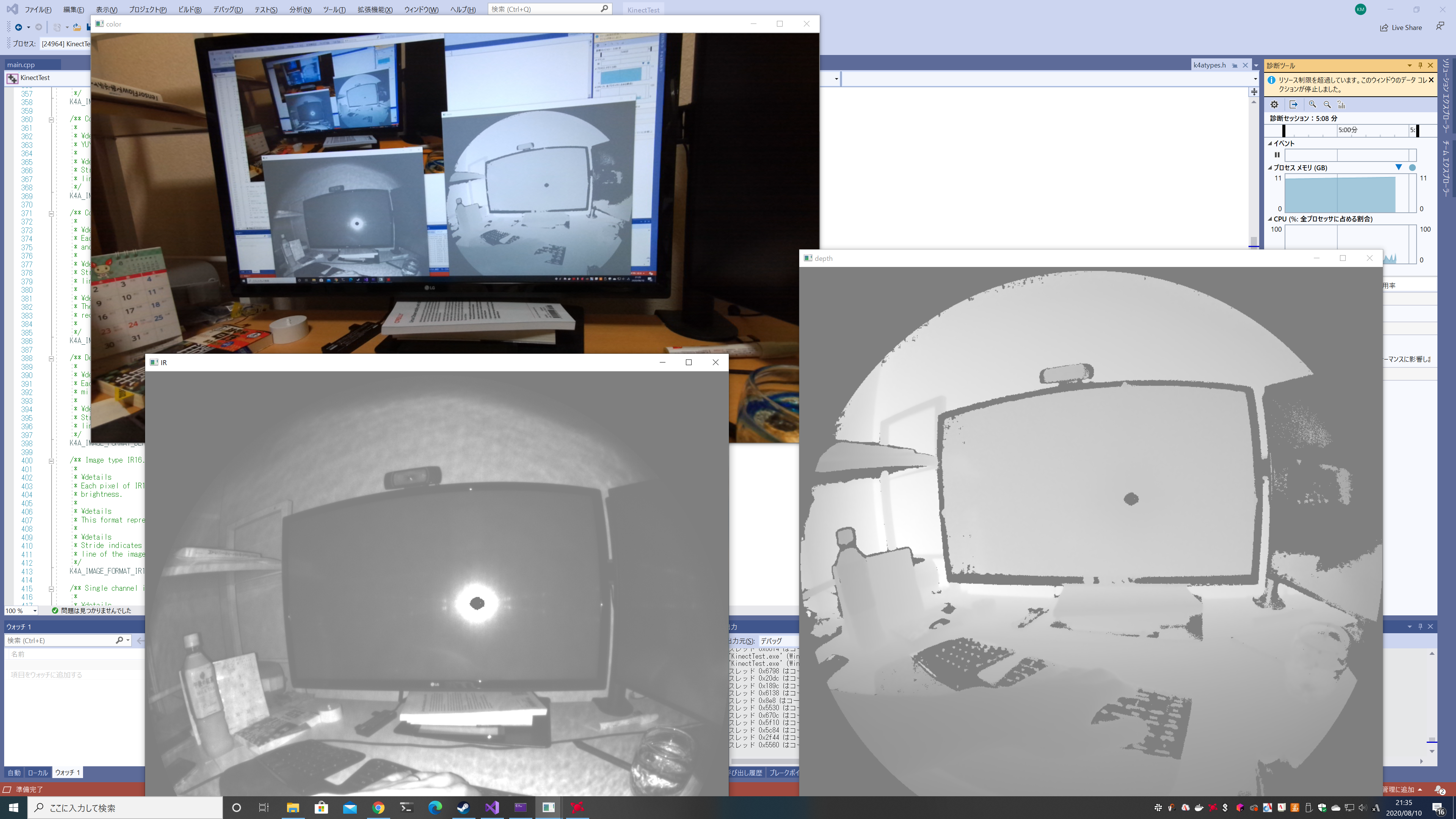

This covers the bare minimum: getting Azure Kinect capture images on screen with OpenCV.

There isn't anything particularly difficult here.

Memo

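- Unless you set `K4A_IMAGE_FORMAT_COLOR_BGRA32`, the color image is not delivered as BGRA; it arrives still JPEG-compressed (`K4A_DEVICE_CONFIG_INIT_DISABLE_ALL` defaults to `K4A_IMAGE_FORMAT_COLOR_MJPG`).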
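With `K4A_IMAGE_FORMAT_COLOR_BGRA32`, each pixel is four bytes stored in B, G, R, A order, and each row begins `k4a_image_get_stride_bytes()` bytes after the previous one. That is why the example below passes the stride to the `cv::Mat_<cv::Vec4b>` constructor. Here is a minimal sketch of the same addressing in plain C++, assuming exactly that layout. `Bgra` and `read_bgra_pixel` are hypothetical names, not part of the SDK:

```cpp
#include <cstddef>
#include <cstdint>

// One BGRA32 pixel; the byte order in memory is B, G, R, A.
struct Bgra {
    std::uint8_t b, g, r, a;
};

// Hypothetical helper (not part of the Azure Kinect SDK): reads pixel (x, y)
// from a BGRA32 buffer whose rows are stride_bytes apart, e.g. a buffer from
// k4a_image_get_buffer() with the stride from k4a_image_get_stride_bytes().
// No bounds checking: the caller must keep x and y inside the image.
Bgra read_bgra_pixel(const std::uint8_t* buffer, int stride_bytes, int x, int y) {
    const std::uint8_t* p = buffer
                          + static_cast<std::size_t>(y) * stride_bytes  // start of row y
                          + static_cast<std::size_t>(x) * 4;            // 4 bytes per pixel
    return Bgra{p[0], p[1], p[2], p[3]};
}
```

Because the rows may be padded, stepping by `width * 4` instead of the stride would drift off the correct row whenever the stride is larger than the packed row size.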

Example

Code

```cpp
#include <cstdint>
#include <cstdio>
#include <iostream>

#include <k4a/k4a.h>
#include <k4arecord/record.h>
#include <k4arecord/playback.h>

#include <opencv2/core/core.hpp>
#include <opencv2/highgui.hpp>

// MSVC only: link OpenCV 4.3.0 (debug or release build).
#ifdef _DEBUG
#pragma comment(lib, "opencv_world430d.lib")
#else
#pragma comment(lib, "opencv_world430.lib")
#endif // _DEBUG

int main() {
    uint32_t count = k4a_device_get_installed_count();
    if (count == 0) {
        printf("No k4a devices attached!\n");
        return 1;
    }

    // Open the first plugged-in Kinect device
    k4a_device_t device = NULL;
    if (K4A_FAILED(k4a_device_open(K4A_DEVICE_DEFAULT, &device))) {
        printf("Failed to open k4a device!\n");
        return 1;
    }

    // Configure 720p BGRA color plus WFOV unbinned depth (this depth mode runs at up to 15 fps)
    k4a_device_configuration_t config = K4A_DEVICE_CONFIG_INIT_DISABLE_ALL;
    config.camera_fps = K4A_FRAMES_PER_SECOND_15;
    config.color_format = K4A_IMAGE_FORMAT_COLOR_BGRA32;  // the default is MJPG
    config.color_resolution = K4A_COLOR_RESOLUTION_720P;
    config.depth_mode = K4A_DEPTH_MODE_WFOV_UNBINNED;

    // Start the cameras with the given configuration
    if (K4A_FAILED(k4a_device_start_cameras(device, &config))) {
        printf("Failed to start cameras!\n");
        k4a_device_close(device);
        return 1;
    }

    int exit_code = 0;

    // Poll the keyboard once per frame; press 'q' to quit
    while (cv::waitKey(1) != 'q') {
        // Wait up to 1000 ms for the next capture
        k4a_capture_t capture = NULL;
        const k4a_wait_result_t result = k4a_device_get_capture(device, &capture, 1000);
        if (result == K4A_WAIT_RESULT_TIMEOUT) {
            printf("Timed out waiting for a capture\n");
            continue;
        }
        if (result != K4A_WAIT_RESULT_SUCCEEDED) {
            printf("Failed to read a capture\n");
            exit_code = 1;
            break;  // leave the loop so the cameras are still stopped and closed
        }

        // Azure Kinect color, IR and depth images (any of them may be NULL)
        k4a_image_t k4a_color = k4a_capture_get_color_image(capture);
        k4a_image_t k4a_ir = k4a_capture_get_ir_image(capture);
        k4a_image_t k4a_depth = k4a_capture_get_depth_image(capture);

        if (k4a_color != NULL && k4a_ir != NULL && k4a_depth != NULL) {
            // Print IR image details (width x height, stride)
            printf(" | IR16 res:%4dx%4d stride:%5d\n",
                   k4a_image_get_width_pixels(k4a_ir),
                   k4a_image_get_height_pixels(k4a_ir),
                   k4a_image_get_stride_bytes(k4a_ir));

            // Wrap the SDK buffers as cv::Mat without copying (stride-aware)
            const cv::Mat_<cv::Vec4b> cv_color(
                k4a_image_get_height_pixels(k4a_color),
                k4a_image_get_width_pixels(k4a_color),
                reinterpret_cast<cv::Vec4b*>(k4a_image_get_buffer(k4a_color)),
                k4a_image_get_stride_bytes(k4a_color));
            cv::imshow("color", cv_color);

            // IR16 and DEPTH16 pixels are unsigned 16-bit, so wrap them as CV_16U
            const cv::Mat_<uint16_t> cv_ir(
                k4a_image_get_height_pixels(k4a_ir),
                k4a_image_get_width_pixels(k4a_ir),
                reinterpret_cast<uint16_t*>(k4a_image_get_buffer(k4a_ir)),
                k4a_image_get_stride_bytes(k4a_ir));
            cv::imshow("IR", cv_ir * 20);  // display gain only

            const cv::Mat_<uint16_t> cv_depth(
                k4a_image_get_height_pixels(k4a_depth),
                k4a_image_get_width_pixels(k4a_depth),
                reinterpret_cast<uint16_t*>(k4a_image_get_buffer(k4a_depth)),
                k4a_image_get_stride_bytes(k4a_depth));
            cv::imshow("depth", cv_depth * 40);  // display gain only
        }

        // Release whichever images were returned, then the capture
        if (k4a_color != NULL) k4a_image_release(k4a_color);
        if (k4a_ir != NULL) k4a_image_release(k4a_ir);
        if (k4a_depth != NULL) k4a_image_release(k4a_depth);
        k4a_capture_release(capture);
    }

    // Shut down the cameras when finished with application logic
    k4a_device_stop_cameras(device);
    k4a_device_close(device);
    return exit_code;
}
```
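The `* 20` and `* 40` factors are display gains only. Depth (`DEPTH16`, in millimeters) and IR (`IR16`) pixels are unsigned 16-bit values, so they belong in a `CV_16U` matrix. Read as `short`, anything above 32767 would turn negative. For a `CV_16U` image, `cv::imshow` divides by 256, and the multiplication saturates at 65535. With a gain of 40, every depth beyond about 65535 / 40 ≈ 1638 mm therefore shows as white. Below is a sketch of an explicit range mapping you could use instead. `depth_to_gray`, `depth_image_to_gray` and the chosen maximum range `max_mm` are hypothetical. A depth of 0, which the SDK uses for invalid pixels, stays black:

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical helper (not part of the Azure Kinect SDK or OpenCV): maps a
// DEPTH16 value in millimeters linearly onto 0..255 for display.
// Values at or beyond max_mm clamp to 255; 0 (invalid depth) maps to 0.
std::uint8_t depth_to_gray(std::uint16_t depth_mm, std::uint16_t max_mm) {
    if (max_mm == 0) {
        return 0;  // avoid division by zero for a degenerate range
    }
    const std::uint32_t clamped = std::min<std::uint32_t>(depth_mm, max_mm);
    return static_cast<std::uint8_t>(clamped * 255u / max_mm);
}

// Hypothetical helper: converts a whole DEPTH16 image, row by row using the
// stride, into a tightly packed 8-bit buffer of width * height bytes.
std::vector<std::uint8_t> depth_image_to_gray(const std::uint8_t* buffer, int width, int height,
                                              int stride_bytes, std::uint16_t max_mm) {
    std::vector<std::uint8_t> out(static_cast<std::size_t>(width) * height);
    for (int y = 0; y < height; ++y) {
        const std::uint8_t* row = buffer + static_cast<std::size_t>(y) * stride_bytes;
        for (int x = 0; x < width; ++x) {
            std::uint16_t v;
            std::memcpy(&v, row + 2 * x, sizeof v);  // host byte order, no aliasing issues
            out[static_cast<std::size_t>(y) * width + x] = depth_to_gray(v, max_mm);
        }
    }
    return out;
}
```

For example, `depth_to_gray(2000, 4000)` gives 127, and anything at or beyond 4000 mm gives 255. In OpenCV itself, `cv::Mat::convertTo` with type `CV_8U` and `alpha = 255.0 / max_mm` performs a comparable saturating scale in one call.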