Overview

This article explains how to configure S3 API access over SSL for Swift and Ceph, two object storage backends commonly used with OpenStack, using Mirantis OpenStack 9.0 (hereafter MOS9.0) as the base. radosgw is integrated with Keystone.

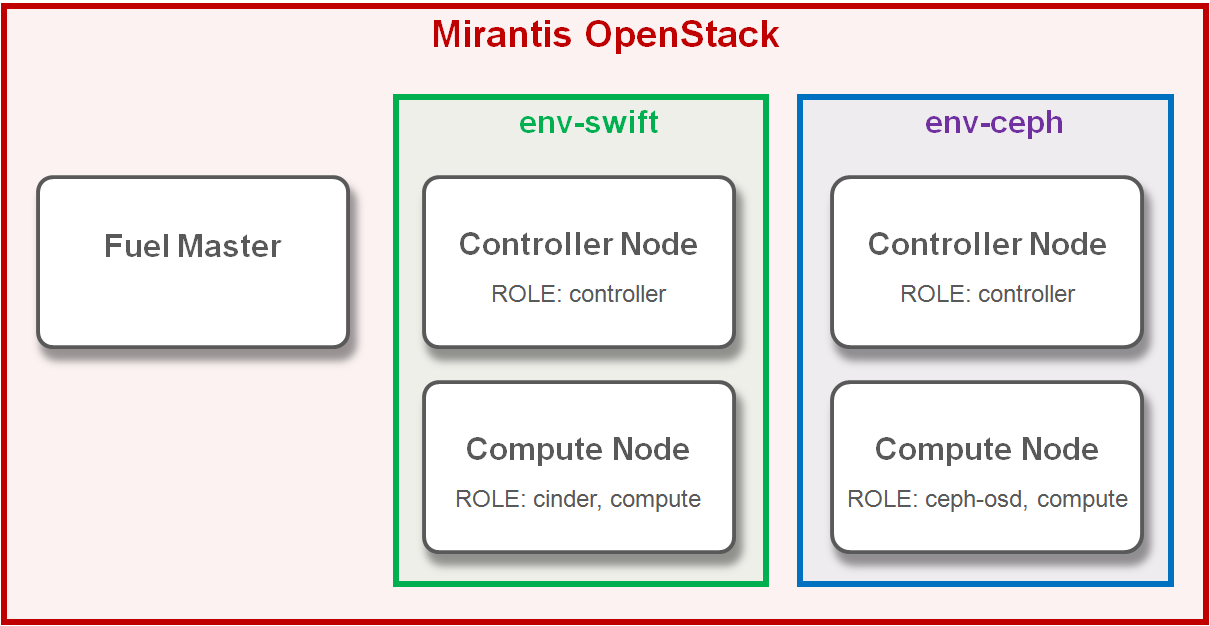

System configuration

For this article, two OpenStack environments were built: one with Swift storage (env-swift) and one with Ceph storage (env-ceph).

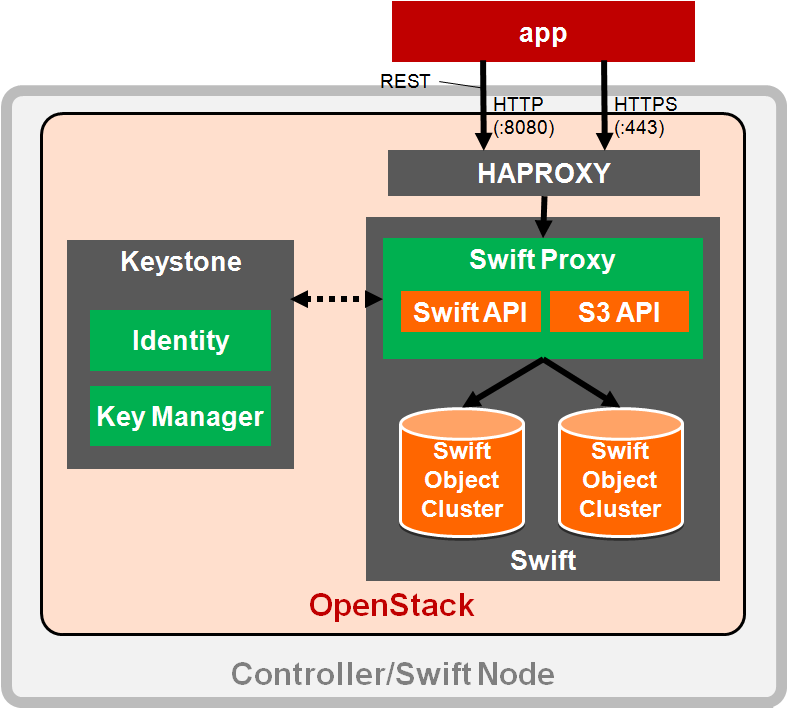

Swift API internal architecture

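- The application sends an API request to the haproxy VIP

- haproxy forwards the request to a Swift proxy according to its configuration

- The Swift proxy authenticates the request through Keystone

- If authentication succeeds, the request is passed on to the Swift object cluster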

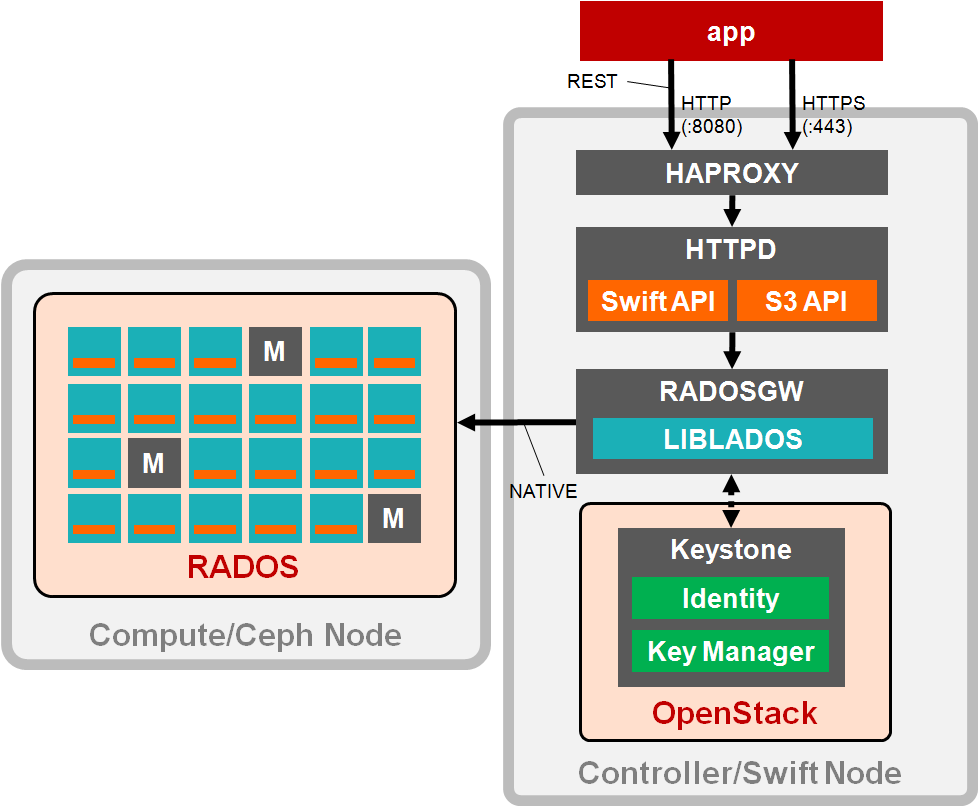

Ceph API internal architecture

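- The application sends an API request to the haproxy VIP

- haproxy forwards the request to Apache according to its configuration

- Apache forwards the request to radosgw according to its configuration

- radosgw authenticates the request through Keystone

- If authentication succeeds, radosgw accesses RADOS over its native protocol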

Configuration steps

Swift

S3-compatible API

Registering the access user

Register the EC2 credentials used for S3 API access in Keystone.

# openstack ec2 credentials create --project admin --user admin

+------------+----------------------------------+
| Field | Value |
+------------+----------------------------------+
| access | <access key> |
| project_id | 3e56084d91a24e09af421a51db75ad49 |
| secret | <secret key> |
| trust_id | None |
| user_id | 9a13c4bcf29243a5aaefe9deca7ebaf6 |
+------------+----------------------------------+
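The access key and secret key above are what the S3 client signs its requests with; the S3 layer (the Swift proxy on env-swift, radosgw on env-ceph) then has the signature checked via Keystone. As an illustration only, the sketch below computes the header-based AWS Signature Version 2 that boto 2 typically uses for S3 requests. It is deliberately simplified (assumption): no x-amz-* headers, no subresources, and dummy credentials; the helper names string_to_sign and sign_v2 are hypothetical.

```python
import base64
import hashlib
import hmac


def string_to_sign(method, date, resource, content_md5="", content_type=""):
    """Build a Signature V2 StringToSign (simplified: no x-amz-* headers)."""
    return "\n".join([method, content_md5, content_type, date, resource])


def sign_v2(access_key, secret_key, method, date, resource):
    """Return an Authorization header value of the form 'AWS <access>:<signature>'."""
    sts = string_to_sign(method, date, resource)
    # HMAC-SHA1 over the StringToSign, keyed with the secret key, then Base64.
    digest = hmac.new(secret_key.encode("utf-8"), sts.encode("utf-8"), hashlib.sha1).digest()
    signature = base64.b64encode(digest).decode("ascii")
    return "AWS {0}:{1}".format(access_key, signature)


if __name__ == "__main__":
    # Dummy values; substitute the output of `openstack ec2 credentials create`.
    print(sign_v2("ACCESS", "SECRET", "GET", "Tue, 13 Sep 2016 05:43:07 GMT", "/hoge/"))
```

Because only the signature travels over the wire, the secret key itself is never sent; the server side recomputes the same HMAC to check it.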

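Allowing connections through the Apache proxy

Change the Apache proxy access control so that requests are accepted from any host rather than only from 10.20.0.2, then restart Apache.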

@@ -35,7 +35,7 @@
RequestReadTimeout header=0,MinRate=500 body=0,MinRate=500
<Proxy *>
Order Deny,Allow
- Allow from 10.20.0.2
+ Allow from all
Deny from all
</Proxy>

root@swift-controller01:/etc# /etc/init.d/apache2 restart
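Having both `Allow from all` and `Deny from all` may look contradictory. With the legacy `Order Deny,Allow` directive (mod_access_compat on Apache 2.4), Deny rules are evaluated first, a matching Allow rule overrides them, and a client matching neither is allowed. The sketch below models just that rule to show why the change opens the proxy to every client. It only understands `all`, single IPs, and CIDR ranges (hostnames, environment variables, and Satisfy are ignored), and `deny_allow` is a hypothetical helper name.

```python
import ipaddress


def _matches(client_ip, specs):
    """Return True if client_ip matches any 'all', single-IP, or CIDR spec."""
    addr = ipaddress.ip_address(client_ip)
    for spec in specs:
        if spec == "all":
            return True
        # strict=False lets a bare address such as "10.20.0.2" act as a /32.
        if addr in ipaddress.ip_network(spec, strict=False):
            return True
    return False


def deny_allow(client_ip, allow, deny):
    """Model of 'Order Deny,Allow': allowed by default, and Allow overrides Deny."""
    if _matches(client_ip, allow):
        return True
    return not _matches(client_ip, deny)


if __name__ == "__main__":
    for ip in ["10.20.0.2", "192.168.75.10"]:
        before = deny_allow(ip, allow=["10.20.0.2"], deny=["all"])
        after = deny_allow(ip, allow=["all"], deny=["all"])
        print(ip, "before:", before, "after:", after)
```

Before the change only 10.20.0.2 gets through; after it, every client does, because `Allow from all` overrides `Deny from all` under this ordering.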

SSL support

haproxy configuration

Add the OS user 'haproxy' to the 'ssl-cert' group.

# adduser haproxy ssl-cert

# id haproxy

uid=109(haproxy) gid=116(haproxy) groups=116(haproxy),110(ssl-cert)

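Registering the SSL certificate

Next, place the SSL certificate on the server under '/etc/ssl/private/' and set its permissions.

If necessary, concatenate the SSL certificate, the chain certificate, and the private key into a single file as shown below, name it 'haproxy.pem', and place it under '/etc/ssl/private/'.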

-----BEGIN CERTIFICATE-----
<SSL certificate>
-----END CERTIFICATE-----
-----BEGIN CERTIFICATE-----
<chain certificate>
-----END CERTIFICATE-----
-----BEGIN PRIVATE KEY-----
<private key>
-----END PRIVATE KEY-----

# chown -R root:haproxy /etc/ssl/private/

# chmod g+x /etc/ssl/private/

# ls -la /etc/ssl/private/

-rw-r----- 1 root haproxy 1679 Sep 13 05:08 /etc/ssl/private/haproxy.key

-rw-r----- 1 root haproxy 5168 Sep 13 05:08 /etc/ssl/private/haproxy.pem
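The concatenation and permission steps above could also be scripted. A minimal sketch, assuming the certificate, chain, and key are available as separate PEM files (the input paths in the usage line below are hypothetical): it joins them in the order shown above and sets mode 0640 on the result. Changing the group to haproxy still needs root, so that is left to the `chown` command.

```python
import os


def build_haproxy_pem(cert_path, chain_path, key_path, out_path, mode=0o640):
    """Concatenate cert, chain, and key (in that order) into one PEM file."""
    parts = []
    for path in (cert_path, chain_path, key_path):
        with open(path) as f:
            # Normalize trailing whitespace so each PEM block ends with one newline.
            parts.append(f.read().strip() + "\n")
    # Create the file with restrictive permissions from the start so the key
    # is never briefly world-readable.
    fd = os.open(out_path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, mode)
    with os.fdopen(fd, "w") as out:
        out.write("".join(parts))
    # os.open's mode is filtered by the umask and ignored for existing files;
    # enforce it explicitly.
    os.chmod(out_path, mode)
    return out_path
```

For example, `build_haproxy_pem("server.crt", "chain.crt", "server.key", "/etc/ssl/private/haproxy.pem")`, run as root and followed by the `chown` above.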

Set the SSL port to '443' and specify the SSL certificate.

In this setup, plain HTTP access is left available as before, and traffic between haproxy and the Swift proxy remains HTTP.

@@ -2,7 +2,9 @@
listen object-storage
bind 10.16.11.2:8080
bind 192.168.75.3:8080
+ bind *:443 ssl crt /etc/ssl/private/haproxy.pem no-sslv3
http-request set-header X-Forwarded-Proto https if { ssl_fc }
+ mode http
option httpchk HEAD /healthcheck HTTP/1.0
option httplog
option httpclose

Finally, restart the service to apply the configuration.

# crm resource restart clone_p_haproxy

# service haproxy restart
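Once haproxy is back up, a quick client-side check that the 443 listener completes a TLS handshake can be written with Python's standard `ssl` module. This is a sketch, not part of the original procedure: `probe_tls` and `make_client_context` are hypothetical names, and certificate verification is left on, so pass `cafile` for a private CA and use the host name the certificate was issued for as `server_name` (an IP address only passes hostname verification if it appears in the certificate's subjectAltName).

```python
import socket
import ssl


def make_client_context(cafile=None):
    """Client context that verifies the server certificate and host name.

    ssl.create_default_context() already disables SSLv2/SSLv3, in line with
    the 'no-sslv3' option on the haproxy bind line.
    """
    return ssl.create_default_context(cafile=cafile)


def probe_tls(host, port=443, server_name=None, cafile=None, timeout=5.0):
    """Connect, complete the TLS handshake, and return the negotiated protocol."""
    ctx = make_client_context(cafile)
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with ctx.wrap_socket(sock, server_hostname=server_name or host) as tls:
            return tls.version()
```

`probe_tls("192.168.75.3", 443, server_name="<certificate host name>")` returns the negotiated protocol string (for example 'TLSv1.2'), or raises `ssl.SSLError` if the handshake or verification fails.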

Ceph

S3-compatible API (Keystone integration)

Keystone integration

To integrate radosgw with Keystone, first open ceph.conf and confirm that the rgw_keystone_* options are set.

[client.radosgw.gateway]
rgw_keystone_accepted_roles = _member_, Member, admin, swiftoperator
keyring = /etc/ceph/keyring.radosgw.gateway
rgw_frontends = fastcgi socket_port=9000 socket_host=127.0.0.1
rgw_socket_path = /tmp/radosgw.sock
rgw_keystone_revocation_interval = 1000000
rgw_keystone_url = http://<keystone haproxy vip>:35357
rgw_keystone_admin_token = <Keystone admin token>
host = ceph-controller01
rgw_dns_name = *.domain.tld
rgw_print_continue = True
rgw_keystone_token_cache_size = 10
rgw_data = /var/lib/ceph/radosgw
user = www-data
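Checking these options by eye is fine for one node; across several controllers a small script can do it. A sketch, assuming ceph.conf parses as INI with Python's configparser, and treating rgw_keystone_url, rgw_keystone_admin_token, and rgw_keystone_accepted_roles as the required minimum (this list is our assumption, not an official one). Option names written with spaces instead of underscores are normalized, since Ceph accepts both forms.

```python
import configparser

# Assumed minimum set of Keystone options for radosgw (not an official list).
REQUIRED_KEYSTONE_KEYS = (
    "rgw_keystone_url",
    "rgw_keystone_admin_token",
    "rgw_keystone_accepted_roles",
)


def load_ceph_conf(text):
    """Parse ceph.conf-style INI text, normalizing 'rgw keystone url' to 'rgw_keystone_url'."""
    # interpolation=None: values may contain '%'; strict=False: tolerate duplicates.
    parser = configparser.ConfigParser(interpolation=None, strict=False)
    parser.optionxform = lambda name: name.strip().lower().replace(" ", "_")
    parser.read_string(text)
    return parser


def missing_keystone_keys(text, section="client.radosgw.gateway"):
    """Return the required rgw_keystone_* options that are absent from section."""
    parser = load_ceph_conf(text)
    if not parser.has_section(section):
        return list(REQUIRED_KEYSTONE_KEYS)
    return [k for k in REQUIRED_KEYSTONE_KEYS if not parser.has_option(section, k)]
```

For example, `missing_keystone_keys(open("/etc/ceph/ceph.conf").read())` returns an empty list when all three options are present.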

Next, enable rgw_s3_auth_use_keystone.

@@ -27,6 +27,7 @@
rbd_cache = True

[client.radosgw.gateway]
+rgw_s3_auth_use_keystone = true
rgw_keystone_accepted_roles = _member_, Member, admin, swiftoperator
keyring = /etc/ceph/keyring.radosgw.gateway
rgw_frontends = fastcgi socket_port=9000 socket_host=127.0.0.1
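Before restarting, whether rgw_s3_auth_use_keystone actually ended up enabled in the radosgw section can be checked with configparser as well. Sketch only: configparser's boolean parsing (true/false, yes/no, on/off, 1/0) is used as an approximation of Ceph's own, the section name defaults to the one used in this article, and `s3_keystone_auth_enabled` is a hypothetical helper.

```python
import configparser


def s3_keystone_auth_enabled(text, section="client.radosgw.gateway"):
    """Return True if rgw_s3_auth_use_keystone is set to a true value in section."""
    parser = configparser.ConfigParser(interpolation=None, strict=False)
    # Treat 'rgw s3 auth use keystone' and 'rgw_s3_auth_use_keystone' alike.
    parser.optionxform = lambda name: name.strip().lower().replace(" ", "_")
    parser.read_string(text)
    # fallback=False covers both a missing section and a missing option.
    return parser.getboolean(section, "rgw_s3_auth_use_keystone", fallback=False)
```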

Restart radosgw once to apply the setting; this completes the Keystone integration.

# /etc/init.d/radosgw restart

Registering the access user

Next, register the EC2 credentials used for S3 API access in Keystone.

# openstack ec2 credentials create --project admin --user admin

+------------+----------------------------------+
| Field | Value |
+------------+----------------------------------+
| access | <access key> |
| project_id | 7a964fe86daa4148a7d4c34b42746233 |
| secret | <secret key> |
| trust_id | None |
| user_id | 7c2b6158cfe7468b9a47a538f55c56f8 |
+------------+----------------------------------+

SSL support

haproxy configuration

Add the OS user 'haproxy' to the 'ssl-cert' group.

# adduser haproxy ssl-cert

# id haproxy

uid=109(haproxy) gid=116(haproxy) groups=116(haproxy),110(ssl-cert)

Registering the SSL certificate

Next, place the SSL certificate on the server under '/etc/ssl/private/' and set its permissions.

# chmod 640 /etc/ssl/private/haproxy*

# chown root:ssl-cert /etc/ssl/private/haproxy*

# ls -la /etc/ssl/private/haproxy*

-rw-r----- 1 root haproxy 1679 Sep 13 02:17 /etc/ssl/private/haproxy.key

-rw-r----- 1 root haproxy 5168 Sep 13 00:58 /etc/ssl/private/haproxy.pem
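To confirm the resulting permissions on several nodes, a small check like the following can flag key files that are readable by others or not readable by their group. A sketch under our own assumptions: 0640 is taken as the intended mode (as in the listing above), and `key_file_problems` is a hypothetical helper.

```python
import os
import stat


def key_file_problems(path, max_mode=0o640):
    """List permission problems for a private key or combined PEM file.

    Flags any permission bit outside max_mode (for example world-readable)
    and a missing group-read bit, since this setup relies on the file's
    group for haproxy access.
    """
    mode = stat.S_IMODE(os.stat(path).st_mode)
    problems = []
    extra = mode & ~max_mode
    if extra:
        problems.append("unexpected permission bits: {0:o}".format(extra))
    if not mode & stat.S_IRGRP:
        problems.append("group cannot read the file")
    return problems
```

For example, running `key_file_problems("/etc/ssl/private/haproxy.pem")` should return an empty list for the file shown above.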

Set the SSL port to '443' and specify the SSL certificate.

In this setup, plain HTTP access is left available as before, and traffic between haproxy and radosgw remains HTTP.

@@ -1,8 +1,10 @@
listen object-storage
bind 172.16.101.2:8080
bind 192.168.76.3:8080
+ bind *:443 ssl crt /etc/ssl/private/haproxy.pem no-sslv3
http-request set-header X-Forwarded-Proto https if { ssl_fc }
+ mode http
option httplog
option httpchk HEAD /
option http-server-close

Finally, restart the service to apply the configuration.

# crm resource restart clone_p_haproxy

Verification

For verification, we wrote S3 API test scripts using Python boto and checked that buckets can be listed, created, and deleted.

Ceph S3-compatible API (HTTP)

ubuntu@work:~$ ./s3test_ceph.py
=============================================================
S3 API TEST
 Host: 192.168.76.3
 Port: 8080
 Secure: False
 Bucket: hoge
=============================================================
* Deleting Bucket..
* Bucket was deleted.
* Bucket was created.
-------------------------------------------------------------
hoge 2016-09-13T05:43:07.000Z
=============================================================

Ceph S3-compatible API (HTTPS)

ubuntu@work:~$ ./s3test_ceph2.py
=============================================================
S3 API TEST
 Host: 192.168.76.3
 Port: 443
 Secure: True
 Bucket: hoge
=============================================================
* Deleting Bucket..
* Bucket was deleted.
* Bucket was created.
-------------------------------------------------------------
hoge 2016-09-13T05:43:13.000Z
=============================================================

Swift S3-compatible API (HTTP)

$ ./s3test_swift.py
=============================================================
S3 API TEST
 Host: 192.168.75.3
 Port: 8080
 Secure: False
 Bucket: hoge
=============================================================
* Deleting Bucket..
* Bucket was deleted.
* Bucket was created.
-------------------------------------------------------------
hoge 2009-02-03T16:45:09.000Z
=============================================================

Swift S3-compatible API (HTTPS)

$ ./s3test_swift2.py
=============================================================
S3 API TEST
 Host: 192.168.75.3
 Port: 443
 Secure: True
 Bucket: hoge
=============================================================
* Deleting Bucket..
* Bucket was deleted.
* Bucket was created.
-------------------------------------------------------------
hoge 2009-02-03T16:45:09.000Z
=============================================================

[Reference] Test scripts

The test scripts are listed below.

Ceph S3-compatible API (HTTP)

s3test_ceph.py

#!/usr/bin/python
import sys

import boto
import boto.exception
import boto.s3.connection

ACCESS_KEY = '<access key>'
SECRET_KEY = '<secret key>'
HOST = '192.168.76.3'
PORT = 8080
IS_SECURE = False

# connect to the S3-compatible endpoint (radosgw behind haproxy)
conn = boto.connect_s3(
    aws_access_key_id=ACCESS_KEY,
    aws_secret_access_key=SECRET_KEY,
    port=PORT,
    host=HOST,
    is_secure=IS_SECURE,  # False for plain HTTP, True for HTTPS
    calling_format=boto.s3.connection.OrdinaryCallingFormat(),
)

if len(sys.argv) == 2:
    bucket_name = sys.argv[1]
else:
    bucket_name = 'hoge'

print("=============================================================")
print("S3 API TEST")
print(" Host:\t{0}".format(HOST))
print(" Port:\t{0}".format(PORT))
print(" Secure:\t{0}".format(IS_SECURE))
print(" Bucket:\t{0}".format(bucket_name))
print("=============================================================")

# delete the bucket if it already exists
for bucket in conn.get_all_buckets():
    if bucket.name == bucket_name:
        try:
            print("* Deleting Bucket..")
            conn.delete_bucket(bucket_name)
            print("* Bucket was deleted.")
        except boto.exception.S3ResponseError:
            # the bucket is not empty: delete its keys first
            print("* Bucket Contains Keys.")
            full_bucket = conn.get_bucket(bucket_name)
            print("* Deleting keys...")
            for key in full_bucket.list():
                key.delete()
            print("* Keys deleted. Deleting Bucket..")
            conn.delete_bucket(bucket_name)
            print("* Bucket was deleted.")

# create the bucket
conn.create_bucket(bucket_name)
print("* Bucket was created.")
print("-------------------------------------------------------------")

# list buckets
for bucket in conn.get_all_buckets():
    print("{0}\t{1}".format(bucket.name, bucket.creation_date))
print("=============================================================")

Ceph S3-compatible API (HTTPS)

s3test_ceph2.py

#!/usr/bin/python
import sys

import boto
import boto.exception
import boto.s3.connection

ACCESS_KEY = '<access key>'
SECRET_KEY = '<secret key>'
HOST = '192.168.76.3'
PORT = 443
IS_SECURE = True

# connect to the S3-compatible endpoint (radosgw behind haproxy)
conn = boto.connect_s3(
    aws_access_key_id=ACCESS_KEY,
    aws_secret_access_key=SECRET_KEY,
    port=PORT,
    host=HOST,
    is_secure=IS_SECURE,  # False for plain HTTP, True for HTTPS
    calling_format=boto.s3.connection.OrdinaryCallingFormat(),
)

if len(sys.argv) == 2:
    bucket_name = sys.argv[1]
else:
    bucket_name = 'hoge'

print("=============================================================")
print("S3 API TEST")
print(" Host:\t{0}".format(HOST))
print(" Port:\t{0}".format(PORT))
print(" Secure:\t{0}".format(IS_SECURE))
print(" Bucket:\t{0}".format(bucket_name))
print("=============================================================")

# delete the bucket if it already exists
for bucket in conn.get_all_buckets():
    if bucket.name == bucket_name:
        try:
            print("* Deleting Bucket..")
            conn.delete_bucket(bucket_name)
            print("* Bucket was deleted.")
        except boto.exception.S3ResponseError:
            # the bucket is not empty: delete its keys first
            print("* Bucket Contains Keys.")
            full_bucket = conn.get_bucket(bucket_name)
            print("* Deleting keys...")
            for key in full_bucket.list():
                key.delete()
            print("* Keys deleted. Deleting Bucket..")
            conn.delete_bucket(bucket_name)
            print("* Bucket was deleted.")

# create the bucket
conn.create_bucket(bucket_name)
print("* Bucket was created.")
print("-------------------------------------------------------------")

# list buckets
for bucket in conn.get_all_buckets():
    print("{0}\t{1}".format(bucket.name, bucket.creation_date))
print("=============================================================")

Swift S3-compatible API (HTTP)

s3test_swift.py

#!/usr/bin/python
import sys

import boto
import boto.exception
import boto.s3.connection

ACCESS_KEY = '<access key>'
SECRET_KEY = '<secret key>'
HOST = '192.168.75.3'
PORT = 8080
IS_SECURE = False

# connect to the S3-compatible endpoint (Swift proxy behind haproxy)
conn = boto.connect_s3(
    aws_access_key_id=ACCESS_KEY,
    aws_secret_access_key=SECRET_KEY,
    port=PORT,
    host=HOST,
    is_secure=IS_SECURE,  # False for plain HTTP, True for HTTPS
    calling_format=boto.s3.connection.OrdinaryCallingFormat(),
)

if len(sys.argv) == 2:
    bucket_name = sys.argv[1]
else:
    bucket_name = 'hoge'

print("=============================================================")
print("S3 API TEST")
print(" Host:\t{0}".format(HOST))
print(" Port:\t{0}".format(PORT))
print(" Secure:\t{0}".format(IS_SECURE))
print(" Bucket:\t{0}".format(bucket_name))
print("=============================================================")

# delete the bucket if it already exists
for bucket in conn.get_all_buckets():
    if bucket.name == bucket_name:
        try:
            print("* Deleting Bucket..")
            conn.delete_bucket(bucket_name)
            print("* Bucket was deleted.")
        except boto.exception.S3ResponseError:
            # the bucket is not empty: delete its keys first
            print("* Bucket Contains Keys.")
            full_bucket = conn.get_bucket(bucket_name)
            print("* Deleting keys...")
            for key in full_bucket.list():
                key.delete()
            print("* Keys deleted. Deleting Bucket..")
            conn.delete_bucket(bucket_name)
            print("* Bucket was deleted.")

# create the bucket
conn.create_bucket(bucket_name)
print("* Bucket was created.")
print("-------------------------------------------------------------")

# list buckets
for bucket in conn.get_all_buckets():
    print("{0}\t{1}".format(bucket.name, bucket.creation_date))
print("=============================================================")

Swift S3-compatible API (HTTPS)

s3test_swift2.py

#!/usr/bin/python
import sys

import boto
import boto.exception
import boto.s3.connection

ACCESS_KEY = '<access key>'
SECRET_KEY = '<secret key>'
HOST = '192.168.75.3'
PORT = 443
IS_SECURE = True

# connect to the S3-compatible endpoint (Swift proxy behind haproxy)
conn = boto.connect_s3(
    aws_access_key_id=ACCESS_KEY,
    aws_secret_access_key=SECRET_KEY,
    port=PORT,
    host=HOST,
    is_secure=IS_SECURE,  # False for plain HTTP, True for HTTPS
    calling_format=boto.s3.connection.OrdinaryCallingFormat(),
)

if len(sys.argv) == 2:
    bucket_name = sys.argv[1]
else:
    bucket_name = 'hoge'

print("=============================================================")
print("S3 API TEST")
print(" Host:\t{0}".format(HOST))
print(" Port:\t{0}".format(PORT))
print(" Secure:\t{0}".format(IS_SECURE))
print(" Bucket:\t{0}".format(bucket_name))
print("=============================================================")

# delete the bucket if it already exists
for bucket in conn.get_all_buckets():
    if bucket.name == bucket_name:
        try:
            print("* Deleting Bucket..")
            conn.delete_bucket(bucket_name)
            print("* Bucket was deleted.")
        except boto.exception.S3ResponseError:
            # the bucket is not empty: delete its keys first
            print("* Bucket Contains Keys.")
            full_bucket = conn.get_bucket(bucket_name)
            print("* Deleting keys...")
            for key in full_bucket.list():
                key.delete()
            print("* Keys deleted. Deleting Bucket..")
            conn.delete_bucket(bucket_name)
            print("* Bucket was deleted.")

# create the bucket
conn.create_bucket(bucket_name)
print("* Bucket was created.")
print("-------------------------------------------------------------")

# list buckets
for bucket in conn.get_all_buckets():
    print("{0}\t{1}".format(bucket.name, bucket.creation_date))
print("=============================================================")